- Machine Learning: Classification and Prediction on a Census Dataset with Logistic Regression and Gaussian Naive Bayes


1. Data Description

| No. | Field | Feature type | Data type |
|---|---|---|---|
| 1 | id | - | string |
| 2 | age | numeric | integer |
| 3 | workclass | categorical | string |
| 4 | fnlwgt | continuous | integer |
| 5 | education | categorical | string |
| 6 | education_num | numeric | integer |
| 7 | marital_status | categorical | string |
| 8 | occupation | categorical | string |
| 9 | relationship | categorical | string |
| 10 | race | categorical | string |
| 11 | gender | categorical | string |
| 12 | capital_gain | numeric | integer |
| 13 | capital_loss | numeric | integer |
| 14 | hours_per_week | numeric | integer |
| 15 | native_country | categorical | string |
| 16 | income_bracket | categorical | integer |

2. Data Exploration

Import modules

from plotly import __version__
import plotly.offline as offline
from plotly.offline import init_notebook_mode, iplot
init_notebook_mode(connected=True)
from plotly.graph_objs import *
import colorlover as cl
from plotly import tools
import pandas as pd
import numpy as np

# Color palette reused by the bar chart later on
colors = ['#e43620', '#f16d30', '#d99a6c', '#fed976', '#b3cb95', '#41bfb3', '#229bac',
          '#256894', '#fed936', '#f36d30', '#b3cb25', '#f14d30', '#49bfb5', '#252bac']

Load the data

data_train = pd.read_csv(r'/home/mw/income_census_train.csv')
data_test = pd.read_csv(r'/home/mw/income_census_test.csv')

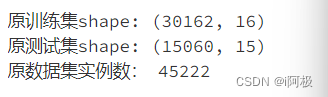

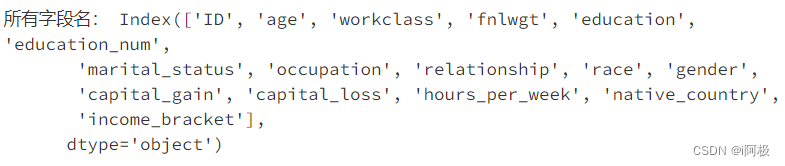

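Check the number of instances and features, and look for missing values

print('Original training set shape:', data_train.shape)
print('Original test set shape:', data_test.shape)
print('Total number of instances:', data_train.shape[0] + data_test.shape[0])
print('Missing values\nTraining set:', data_train.isnull().values.sum(), ', test set:', data_test.isnull().values.sum())
print('All column names:', data_train.columns)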

Summary statistics for the numeric features

data_train.describe()

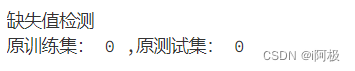

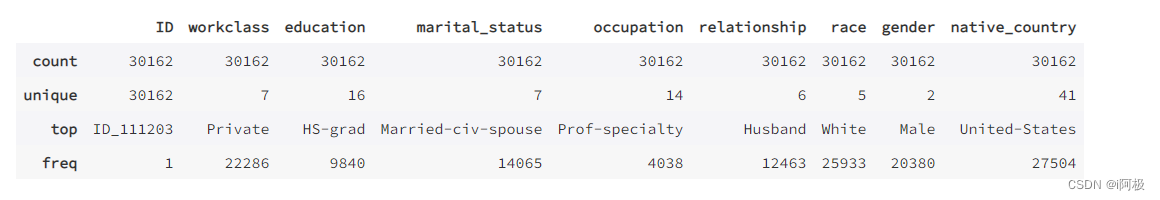

Summarize the categorical (object-typed) features

data_train.describe(include=['O'])

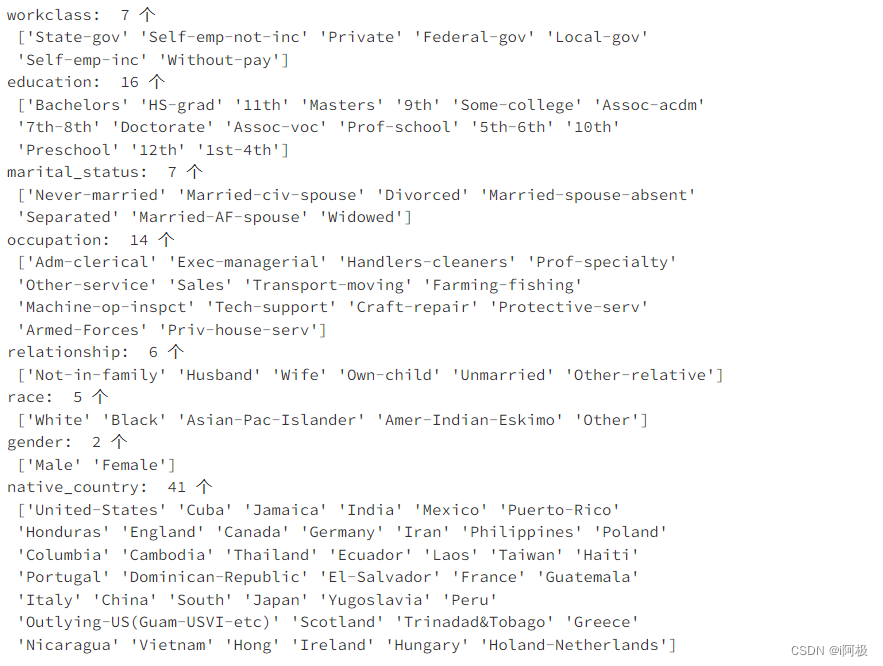

Inspect the unique values of the 8 categorical attributes

categorical_columns = ['workclass', 'education', 'marital_status', 'occupation',
                       'relationship', 'race', 'gender', 'native_country']
for col in categorical_columns:
    values = data_train[col].unique()
    print(col + ': ', len(values), 'unique values\n', values)

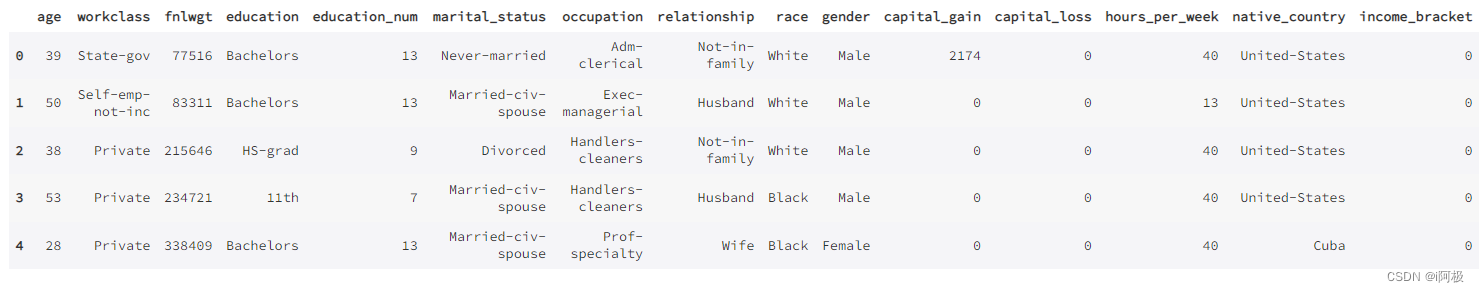

data = data_train.drop(['id'],axis = 1)

data.head()

3. Data Preprocessing

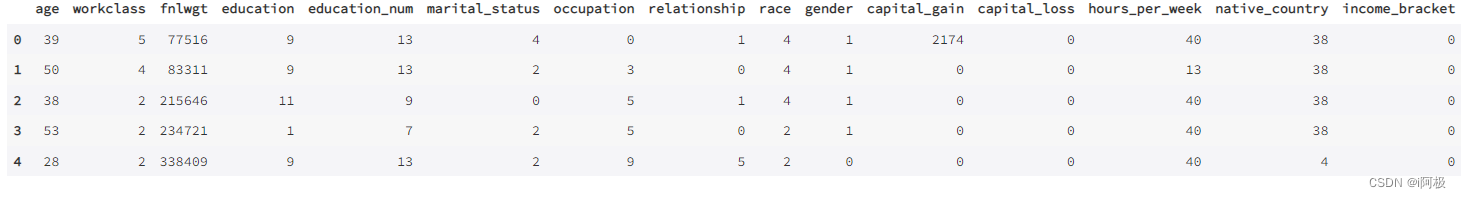

Convert the object-typed columns to integer codes

for feature in data.columns:
    if data[feature].dtype == 'object':
        # .codes gives the integer category code of each value
        data[feature] = pd.Categorical(data[feature]).codes

data.head()
data.info()
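To make the encoding step concrete, here is a minimal plain-Python sketch of what the integer codes represent. It assumes the categories are the sorted unique values, which is how `pd.Categorical` orders string categories by default. The helper name `encode_categories` and the sample values are hypothetical and not taken from the dataset.

```python
def encode_categories(values):
    """Map each value to the index of its category in sorted order."""
    categories = sorted(set(values))
    index = {cat: i for i, cat in enumerate(categories)}
    return [index[v] for v in values], categories


# Hypothetical sample of a string-valued column
codes, categories = encode_categories(["Private", "State-gov", "Private", "Local-gov"])
print(categories)
print(codes)
```

The codes carry an arbitrary alphabetical order. A decision tree can cope with that, but a linear model such as logistic regression reads the codes as magnitudes, so one-hot encoding is a common alternative worth considering.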

4. Building the Model

Select the feature data and the label data

from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Every column except the target is a feature; the target is income_bracket
x_df = data.iloc[:, data.columns != 'income_bracket']
y_df = data.iloc[:, data.columns == 'income_bracket']

x = np.array(x_df)
y = np.array(y_df)

Standardize the data

scaler = StandardScaler()
x = scaler.fit_transform(x)
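As an illustration of what standardization computes, the sketch below reproduces the z-score by hand in plain Python: each column is shifted by its mean and divided by its population standard deviation. The helper names and the toy rows are hypothetical. It also shows one possible variation on the walkthrough above, namely estimating the statistics on training rows only and reusing them for held-out rows.

```python
import math


def fit_standardizer(rows):
    """Return per-column mean and population standard deviation."""
    n = len(rows)
    n_cols = len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(n_cols)]
    stds = [
        math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n)
        for j in range(n_cols)
    ]
    # A constant column has zero spread; divide by 1 so it maps to 0.
    stds = [s if s > 0 else 1.0 for s in stds]
    return means, stds


def standardize(rows, means, stds):
    """Apply previously fitted means and standard deviations to rows."""
    return [[(v - m) / s for v, m, s in zip(r, means, stds)] for r in rows]


# Toy data: the second column is constant on purpose
train = [[20.0, 40.0], [30.0, 40.0], [40.0, 40.0]]
test = [[50.0, 40.0]]

means, stds = fit_standardizer(train)
train_z = standardize(train, means, stds)
test_z = standardize(test, means, stds)
print(train_z)
print(test_z)
```

Fitting the statistics on the training rows alone keeps information about the held-out rows out of the preprocessing step.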

5. Feature Selection

from sklearn.tree import DecisionTreeClassifier
# from sklearn.decomposition import PCA

# Fit a decision tree to the data
tree = DecisionTreeClassifier(random_state=0)
tree.fit(x, y.ravel())

# Show the relative importance score of each attribute
relval = tree.feature_importances_

trace = Bar(x=x_df.columns.tolist(), y=relval, text=[round(i, 2) for i in relval], textposition="outside", marker=dict(color=colors))
iplot(Figure(data=[trace], layout=Layout(title="Feature importance", width=800, height=400, yaxis=dict(range=[0, 0.25]))))

from sklearn.feature_selection import RFE

# Use a decision tree as the estimator for recursive feature elimination
estimator = DecisionTreeClassifier()
names = x_df.columns.tolist()

# Rank all features and keep the 10 best
selector = RFE(estimator, n_features_to_select=10)
selector.fit(x, y.ravel())
print("Ranked features:", sorted(zip(selector.ranking_, names)))

# Build a new DataFrame from the selected features
x_df_new = x_df.iloc[:, selector.get_support(indices=False)]
x_df_new.columns

x_new = scaler.fit_transform(np.array(x_df_new))  # convert to an array and standardize
x_train, x_test, y_train, y_test = train_test_split(x_new, y)  # split into training and test sets
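The split above is called without a fixed seed, so the reported accuracy can change from run to run. The sketch below illustrates the idea of a seeded shuffle split in plain Python. The helper `shuffle_split` is hypothetical and is not how `train_test_split` is implemented; the 25% test fraction is chosen to match scikit-learn's documented default.

```python
import random


def shuffle_split(n_samples, test_fraction=0.25, seed=0):
    """Return (train_indices, test_indices) from a seeded shuffle."""
    indices = list(range(n_samples))
    # A dedicated Random instance keeps the shuffle reproducible
    # without touching the global random state.
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(n_samples * test_fraction))
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = shuffle_split(8)
print(len(train_idx), len(test_idx))
```

With scikit-learn itself, passing `random_state` to `train_test_split` serves the same purpose.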

from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import GaussianNB
from sklearn import metrics
from sklearn.metrics import confusion_matrix, roc_curve, auc, recall_score, classification_report
import matplotlib.pyplot as plt
import itertools

# Plot the confusion matrix
def plot_confusion_matrix(cm, classes,
                          title='Confusion matrix',
                          cmap=plt.cm.Blues):
    """
    Plot the confusion matrix `cm` as a heat map and
    annotate every cell with its count.
    """
    plt.imshow(cm, interpolation='nearest', cmap=cmap)
    plt.title(title)
    plt.colorbar()
    tick_marks = np.arange(len(classes))
    plt.xticks(tick_marks, classes, rotation=0)
    plt.yticks(tick_marks, classes)

    # Use white text on dark cells and black text on light cells
    thresh = cm.max() / 2.
    for i, j in itertools.product(range(cm.shape[0]), range(cm.shape[1])):
        plt.text(j, i, cm[i, j],
                 horizontalalignment="center",
                 color="white" if cm[i, j] > thresh else "black")

    plt.tight_layout()
    plt.ylabel('True label')
    plt.xlabel('Predicted label')
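For readers who want to see what the plotted cells contain, here is a minimal plain-Python sketch that counts a binary confusion matrix by hand and derives accuracy, precision and recall from it. Rows are true labels and columns are predicted labels, the same layout that `confusion_matrix` returns. The helper `confusion_counts` and the toy labels are hypothetical.

```python
def confusion_counts(y_true, y_pred, labels=(0, 1)):
    """Count outcomes; rows are true labels, columns are predicted labels."""
    pos = {label: i for i, label in enumerate(labels)}
    cm = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        cm[pos[t]][pos[p]] += 1
    return cm


# Toy labels, not taken from the census data
y_true_demo = [0, 0, 0, 1, 1, 1, 1, 0]
y_pred_demo = [0, 1, 0, 1, 0, 1, 1, 0]

cm_demo = confusion_counts(y_true_demo, y_pred_demo)
(tn, fp), (fn, tp) = cm_demo

accuracy = (tp + tn) / len(y_true_demo)
precision = tp / (tp + fp)  # share of predicted positives that are correct
recall = tp / (tp + fn)     # share of actual positives that are found
print(cm_demo, accuracy, precision, recall)
```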

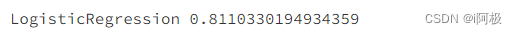

6. Logistic Regression

# Create the model
lr = LogisticRegression()
# Fit on the training set
lr_clf = lr.fit(x_train, y_train.ravel())
# Predict on the test set
y_pred = lr_clf.predict(x_test)

print('LogisticRegression accuracy: %s' % metrics.accuracy_score(y_test, y_pred))

cm = confusion_matrix(y_test, y_pred)
class_names = [0, 1]
plt.figure()
plot_confusion_matrix(cm, classes=class_names, title='Confusion matrix')
plt.show()
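As a rough illustration of how a fitted binary logistic regression turns a feature vector into a class, the sketch below computes a linear score, passes it through the sigmoid, and thresholds the probability at 0.5. The weights, bias and input are made-up numbers for illustration only and are not coefficients learned from the census data.

```python
import math


def sigmoid(z):
    """Logistic function mapping a real score to a value in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, weights, bias):
    """Probability of the positive class for one feature vector."""
    z = sum(w * v for w, v in zip(weights, x)) + bias
    return sigmoid(z)


# Hypothetical coefficients and one standardized sample
weights = [0.8, -0.5]
bias = -0.2
sample = [1.5, 0.4]

p = predict_proba(sample, weights, bias)
label = int(p >= 0.5)  # class 1 when the probability reaches 0.5
print(round(p, 4), label)
```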

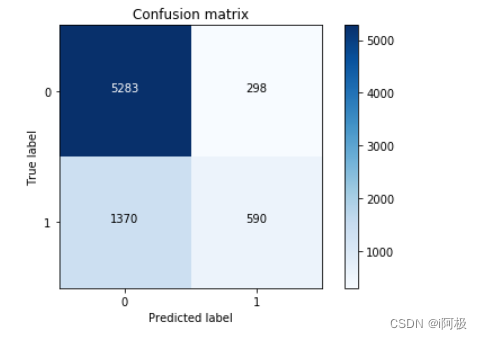

7. Gaussian Naive Bayes

gnb = GaussianNB()
gnb_clf = gnb.fit(x_train, y_train.ravel())
y_pred2 = gnb_clf.predict(x_test)

print('GaussianNB accuracy: %s' % metrics.accuracy_score(y_test, y_pred2))

cm = confusion_matrix(y_test, y_pred2)
class_names = [0, 1]
plt.figure()
plot_confusion_matrix(cm, classes=class_names, title='Confusion matrix')
plt.show()
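To show the idea behind Gaussian Naive Bayes, here is a small plain-Python sketch: for each class it estimates a prior plus a per-feature mean and variance, then picks the class with the highest log posterior under the assumption that features are independent and normally distributed. The function names and toy data are hypothetical, and the fixed `1e-9` added to each variance is a simplifying assumption rather than scikit-learn's exact smoothing rule.

```python
import math
from collections import defaultdict


def fit_gnb(X, y):
    """Estimate prior, per-feature means and variances for each class."""
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)
    model = {}
    for label, rows in by_class.items():
        n = len(rows)
        columns = list(zip(*rows))
        means = [sum(col) / n for col in columns]
        # Small constant avoids division by zero for constant features.
        variances = [
            sum((v - m) ** 2 for v in col) / n + 1e-9
            for col, m in zip(columns, means)
        ]
        model[label] = (n / len(X), means, variances)
    return model


def predict_gnb(model, x):
    """Return the class with the highest log posterior for sample x."""
    def log_posterior(prior, means, variances):
        total = math.log(prior)
        for v, m, var in zip(x, means, variances):
            total += -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        return total

    return max(model, key=lambda label: log_posterior(*model[label]))


# Toy data with two well-separated classes
X_demo = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [3.0, 0.5], [3.2, 0.7], [2.8, 0.3]]
y_demo = [0, 0, 0, 1, 1, 1]

model = fit_gnb(X_demo, y_demo)
print(predict_gnb(model, [1.1, 2.0]), predict_gnb(model, [3.1, 0.4]))
```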
