When installing the llama-cpp-python package, I ran into the following error:

```
Collecting llama-cpp-python
Using cached llama_cpp_python-0.2.28.tar.gz (9.4 MB)
Installing build dependencies ... done
Getting requirements to build wheel ... done
Installing backend dependencies ... done
Preparing metadata (pyproject.toml) ... done
Requirement already satisfied: typing-extensions>=4.5.0 in d:\software\anaconda3\lib\site-packages (from llama-cpp-python) (4.8.0)
Collecting diskcache>=5.6.1
Using cached diskcache-5.6.3-py3-none-any.whl (45 kB)
Requirement already satisfied: numpy>=1.20.0 in d:\software\anaconda3\lib\site-packages (from llama-cpp-python) (1.23.5)
Building wheels for collected packages: llama-cpp-python
Building wheel for llama-cpp-python (pyproject.toml) ... error
error: subprocess-exited-with-error

× Building wheel for llama-cpp-python (pyproject.toml) did not run successfully.
│ exit code: 1
╰─> [20 lines of output]
*** scikit-build-core 0.7.1 using CMake 3.28.1 (wheel)
*** Configuring CMake...
2024-01-15 02:55:12,546 - scikit_build_core - WARNING - Can't find a Python library, got libdir=None, ldlibrary=None, multiarch=None, masd=None
loading initial cache file c:\windows\temp\tmpyjbtivnu\build\cmakeinit.txt
-- Building for: NMake Makefiles
CMake Error at CMakeLists.txt:3 (project):
Running
'nmake' '-?'
failed with:
no such file or directory
CMake Error: CMAKE_C_COMPILER not set, after EnableLanguage
CMake Error: CMAKE_CXX_COMPILER not set, after EnableLanguage
-- Configuring incomplete, errors occurred!
*** CMake configuration failed
[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.
ERROR: Failed building wheel for llama-cpp-python
Failed to build llama-cpp-python
ERROR: Could not build wheels for llama-cpp-python, which is required to install pyproject.toml-based projects
```

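The key lines are near the end of the CMake output. CMake fell back to the `NMake Makefiles` generator and could not run `nmake`. As a result, it found no C or C++ compiler (`CMAKE_C_COMPILER` / `CMAKE_CXX_COMPILER not set`). In other words, no usable MSVC build toolchain was available. The following issue describes the same problem:

https://github.com/abetlen/llama-cpp-python/issues/54

Building Windows wheels for Python 3.10 requires Microsoft Visual Studio 2022.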
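The log fails at the step where CMake runs `nmake -?`, so you can reproduce the first part of the diagnosis outside pip. Below is a minimal sketch that uses the standard library's `shutil.which` to check whether `nmake` and the MSVC compiler `cl` are on PATH. The helper name `missing_build_tools` is only for illustration.

One caveat, which is an assumption worth keeping in mind: these tools are normally only on PATH inside a Visual Studio Developer Command Prompt. Even after Visual Studio is installed, an ordinary shell may still report them as missing. In that case CMake typically selects a Visual Studio generator, which locates the compiler on its own.

```python
import shutil
from typing import Iterable, List


def missing_build_tools(tools: Iterable[str] = ("nmake", "cl")) -> List[str]:
    """Return the names in `tools` that cannot be found on PATH.

    With the NMake Makefiles generator, CMake needs `nmake` (and a C/C++
    compiler such as MSVC's `cl`) to be runnable; this only checks PATH.
    """
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_build_tools()
    if missing:
        print("Not on PATH:", ", ".join(missing))
    else:
        print("nmake and cl are both on PATH")
```

An empty list explains why the NMake build could work. A non-empty list in a plain shell matches the original failure.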

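So I had to install Visual Studio 2022 with the C++ build tools first, and then reinstall the package.

Download the installer from the official Visual Studio website.

You must select the "Desktop development with C++" workload (10 GB+). The only other setting I changed was the installation path of the IDE (4 GB+).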

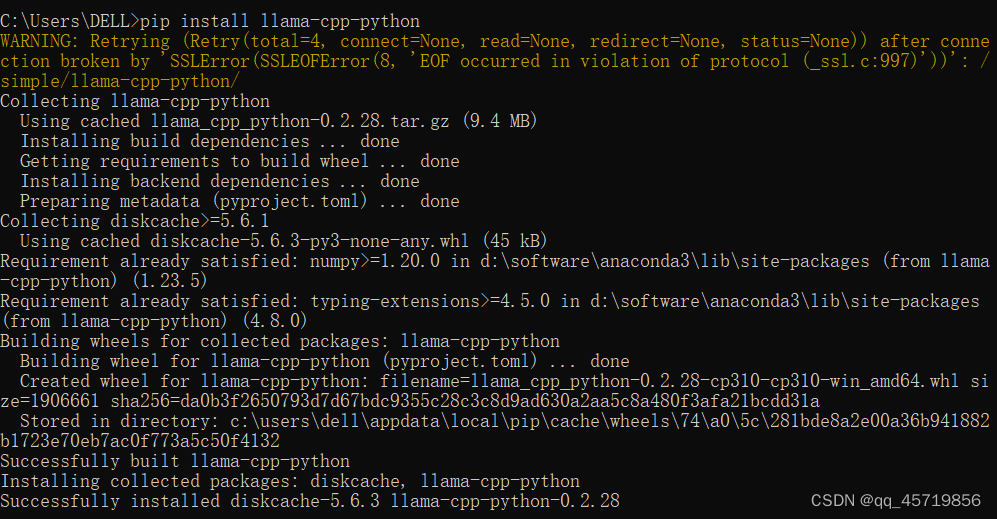
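After the installer finishes, you can confirm that the C++ workload is really present. Visual Studio ships a `vswhere.exe` tool in `%ProgramFiles(x86)%\Microsoft Visual Studio\Installer`. The sketch below assumes that default location. It asks vswhere for the newest instance (`-products *` also includes the standalone Build Tools) that has the `Microsoft.VisualStudio.Component.VC.Tools.x86.x64` component, which is the MSVC x86/x64 compiler set. The helper name `find_msvc_install` is hypothetical.

```python
import os
import subprocess
from pathlib import Path
from typing import Optional


def find_msvc_install() -> Optional[str]:
    """Return the installation path of a Visual Studio instance that has the
    MSVC x86/x64 C++ tools, or None if vswhere or the component is missing."""
    program_files_x86 = os.environ.get("ProgramFiles(x86)")
    if not program_files_x86:
        return None  # not Windows (or an unusual environment)
    # Assumed default location of vswhere, installed with the VS Installer.
    vswhere = (Path(program_files_x86) / "Microsoft Visual Studio"
               / "Installer" / "vswhere.exe")
    if not vswhere.is_file():
        return None  # the Visual Studio Installer has never been run here
    result = subprocess.run(
        [str(vswhere), "-latest", "-products", "*",
         "-requires", "Microsoft.VisualStudio.Component.VC.Tools.x86.x64",
         "-property", "installationPath"],
        capture_output=True, text=True, check=False,
    )
    path = result.stdout.strip()
    return path or None  # empty output means no matching instance


if __name__ == "__main__":
    install = find_msvc_install()
    if install is None:
        print("No Visual Studio instance with the C++ tools was found.")
    else:
        print("C++ tools found in:", install)
```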

Once Visual Studio was installed, I ran the install command again and it succeeded:

```shell
pip install llama-cpp-python
```

```
WARNING: Retrying (Retry(total=4, connect=None, read=None, redirect=None, status=None)) after connection broken by 'SSLError(SSLEOFError(8, 'EOF occurred in violation of protocol (_ssl.c:997)'))': /simple/llama-cpp-python/
Collecting llama-cpp-python
Using cached llama_cpp_python-0.2.28.tar.gz (9.4 MB)
Installing build dependencies ... done
Getting requirements to build wheel ... done
Installing backend dependencies ... done
Preparing metadata (pyproject.toml) ... done
Collecting diskcache>=5.6.1
Using cached diskcache-5.6.3-py3-none-any.whl (45 kB)
Requirement already satisfied: numpy>=1.20.0 in d:\software\anaconda3\lib\site-packages (from llama-cpp-python) (1.23.5)
Requirement already satisfied: typing-extensions>=4.5.0 in d:\software\anaconda3\lib\site-packages (from llama-cpp-python) (4.8.0)
Building wheels for collected packages: llama-cpp-python
Building wheel for llama-cpp-python (pyproject.toml) ... done
Created wheel for llama-cpp-python: filename=llama_cpp_python-0.2.28-cp310-cp310-win_amd64.whl size=1906661 sha256=da0b3f2650793d7d67bdc9355c28c3c8d9ad630a2aa5c8a480f3afa21bcdd31a
Stored in directory: c:\users\dell\appdata\local\pip\cache\wheels\74\a0\5c\281bde8a2e00a36b941882b1723e70eb7ac0f773a5c50f4132
Successfully built llama-cpp-python
Installing collected packages: diskcache, llama-cpp-python
Successfully installed diskcache-5.6.3 llama-cpp-python-0.2.28
```
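To double-check the result from Python itself, run the following in the same Anaconda environment that pip installed into. The standard library's `importlib.metadata` reports the installed distribution's version without importing the compiled extension. This is a small sketch, and `installed_version` is an illustrative helper name.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # The wheel built above is tagged cp310, i.e. for CPython 3.10.
    print("Python", sys.version.split()[0])
    found = installed_version("llama-cpp-python")
    print("llama-cpp-python:", found or "not installed")
```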
