This article explains how to use Multus to give Kubernetes pods SR-IOV network interfaces.

I. SR-IOV overview

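SR-IOV emerged around 2010, with Intel as its main driver (the specification itself is published by PCI-SIG). As container technology spread, Intel also released open-source components for using SR-IOV in containers, such as sriov-cni and sriov-device-plugin. SR-IOV has since been widely adopted in the container space.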

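In traditional virtualization, a VM's NIC is usually attached through a bridge (a Linux bridge or OVS), because that is the most convenient and simplest approach. Its biggest drawback is performance. SR-IOV (Single Root I/O Virtualization) is a hardware-based virtualization solution. It lets multiple VMs share a PCIe device efficiently while getting I/O performance comparable to the physical device, which improves both performance and scalability.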

SR-IOV works by exposing virtual channels (functions) to users. There are two kinds:

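- PF (Physical Function): the function that manages the PCIe device at the physical level. It can be regarded as a complete PCIe device: it contains the SR-IOV capability structure and can manage and configure VFs.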

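- VF (Virtual Function): the function of the PCIe device at the virtual level. It contains only I/O functionality, and VFs share the physical resources. A VF is a trimmed-down PCIe device that can configure only its own resources; a VM cannot manage the SR-IOV NIC through a VF. Every VF is derived from a PF, and some SR-IOV NIC models can create up to 256 VFs.

SR-IOV device packet dispatch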

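Logically, a physical NIC with SR-IOV enabled can be thought of as containing a special built-in switch. This switch connects all PF and VF ports and dispatches packets based on the MAC addresses and VLAN IDs of the VFs and the PF.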

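- Ingress (entering the NIC from outside): if a packet's destination MAC address and VLAN ID both match a VF, the packet is delivered to that VF; otherwise it goes to the PF. If the destination MAC address is the broadcast address, the packet is broadcast within its VLAN, and every VF with the same VLAN ID receives it.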

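- Egress (sent from the PF or a VF): if the packet's destination MAC address does not match any port (VF or PF) in the same VLAN, the packet is forwarded out of the NIC; otherwise it is forwarded internally to the matching port. If the destination MAC address is the broadcast address, the packet is broadcast both within the VLAN and out of the NIC. Note: all VFs and PFs without a VLAN ID can be treated as one LAN, and untagged packets are handled within that LAN by the same rules. In addition, a VF with a VLAN configured automatically tags the packets it sends. Whether the hardware strips the VLAN header from received packets is configurable.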
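To make the two rule sets concrete, here is a toy model in shell. It only illustrates the rules as stated above; it is not how any driver or firmware implements them. The port table format (one `port mac vlan` line per port, with VLAN 0 meaning "no VLAN set") and the function names are made up for this sketch.

```shell
#!/bin/sh
# Toy model of the NIC's embedded switch as described above (illustrative only;
# real classification happens in hardware and details vary by vendor/firmware).
# Port table: one "port mac vlan" line per PF/VF; vlan 0 means "no VLAN set".
BCAST=ff:ff:ff:ff:ff:ff

# ingress_dispatch DST_MAC VLAN TABLE: port(s) that receive a frame from the wire.
ingress_dispatch() {
    if [ "$1" = "$BCAST" ]; then
        # Broadcast: every port whose VLAN matches gets a copy.
        printf '%s\n' "$3" | awk -v v="$2" 'NF == 3 && $3 == v { print $1 }'
        return 0
    fi
    # Unicast: the port whose MAC *and* VLAN both match; otherwise the PF.
    hit=$(printf '%s\n' "$3" | awk -v m="$1" -v v="$2" \
        'NF == 3 && tolower($2) == tolower(m) && $3 == v { print $1; exit }')
    printf '%s\n' "${hit:-pf}"
}

# egress_dispatch SRC_PORT DST_MAC VLAN TABLE: where a frame sent by SRC_PORT goes.
egress_dispatch() {
    if [ "$2" = "$BCAST" ]; then
        # Broadcast: the other ports in the same VLAN, plus out of the NIC.
        printf '%s\n' "$4" | awk -v s="$1" -v v="$3" 'NF == 3 && $3 == v && $1 != s { print $1 }'
        printf 'external\n'
        return 0
    fi
    # Unicast: deliver internally if a port in the same VLAN owns the MAC, else send out.
    hit=$(printf '%s\n' "$4" | awk -v s="$1" -v m="$2" -v v="$3" \
        'NF == 3 && $1 != s && tolower($2) == tolower(m) && $3 == v { print $1; exit }')
    printf '%s\n' "${hit:-external}"
}
```

The `pf` fallback in `ingress_dispatch` and the `external` fallback in `egress_dispatch` correspond to the two "otherwise" branches of the rules above.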

II. SR-IOV devices and container networking

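Intel released the SR-IOV CNI plugin, which lets Kubernetes pods attach directly to SR-IOV virtual functions (VFs) in either of two modes:

- The first mode uses the standard SR-IOV VF driver in the container host's kernel.

- The second mode supports DPDK VNFs that run the VF driver and network protocol stack in user space.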

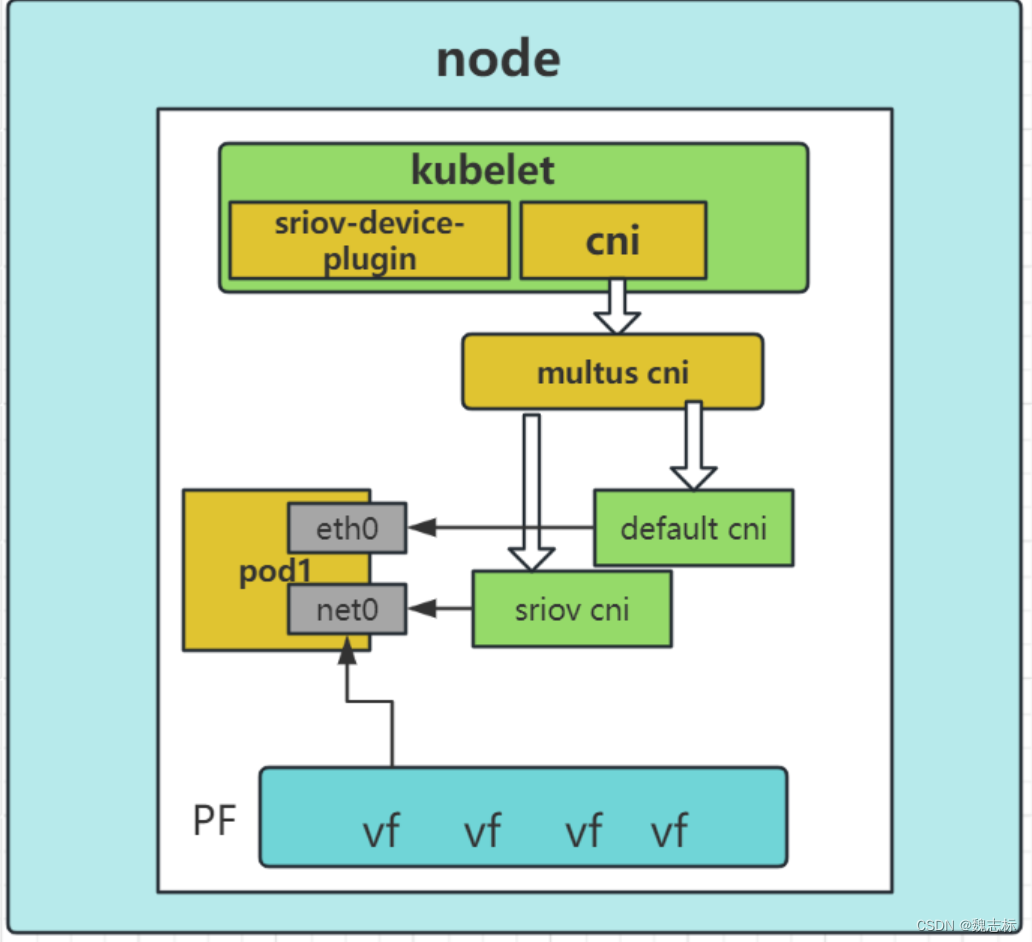

This article covers the first mode, in which SR-IOV virtual functions (VF devices) are attached directly to pods.

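The figure above shows the components used on a node: kubelet, sriov-device-plugin, sriov-cni and multus-cni.

The VFs on the node must be created in advance. sriov-device-plugin then advertises them to the Kubernetes cluster.

When a pod is created, kubelet calls multus-cni. multus-cni in turn calls the default CNI plugin and the sriov-cni plugin to build the pod's network environment.

sriov-cni moves a VF from the host into the container's network namespace and configures its IP address.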
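Under the CNI specification, "calling" a plugin means executing its binary from the plugin directory. Parameters are passed in `CNI_*` environment variables and the network configuration is passed on stdin. Multus runs the default plugin and sriov-cni as delegates in this way; for the SR-IOV delegate it also adds the allocated VF's PCI address to the configuration as `deviceID`. The sketch below shows only the invocation itself. The helper name `cni_exec` is hypothetical, and a real runtime also parses the plugin's JSON result and handles errors.

```shell
#!/bin/sh
# Sketch of a CNI plugin invocation following the CNI spec's exec protocol.
# cni_exec is a hypothetical helper name, not part of Multus or any CNI tool.
# Usage: cni_exec PLUGIN_DIR TYPE COMMAND CONTAINER_ID NETNS IFNAME < netconf.json
cni_exec() {
    plugin="$1/$2"                  # e.g. /opt/cni/bin/sriov for "type": "sriov"
    if [ ! -x "$plugin" ]; then
        echo "cni_exec: plugin $plugin not found" >&2
        return 1
    fi
    # Parameters travel as environment variables; the JSON config arrives on stdin.
    CNI_COMMAND="$3" CNI_CONTAINERID="$4" CNI_NETNS="$5" CNI_IFNAME="$6" \
        CNI_PATH="$1" "$plugin"
}
```

Running sriov-cni by hand this way would also need root privileges and a `deviceID` in the configuration. The sketch is mainly meant to show what Multus does on kubelet's behalf.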

III. Environment preparation

- Kubernetes environment

```
[root@node1 ~]# kubectl get node
NAME    STATUS   ROLES                  AGE   VERSION
node1   Ready    control-plane,master   47d   v1.23.17
node2   Ready    control-plane,master   47d   v1.23.17
node3   Ready    control-plane,master   47d   v1.23.17
```

- Hardware environment

```
[root@node1 ~]# lspci -nn | grep -i eth
23:00.0 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)
23:00.1 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)
41:00.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
41:00.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
42:00.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
42:00.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
63:00.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
63:00.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
a1:00.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
a1:00.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
[root@node1 ~]#
```

This environment uses the Mellanox Technologies MT27710 for testing.

Confirm that the NIC supports SR-IOV:

```
[root@node1 ~]# lspci -v -s 41:00.0
41:00.0 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx]
    Subsystem: Mellanox Technologies Stand-up ConnectX-4 Lx EN, 25GbE dual-port SFP28, PCIe3.0 x8, MCX4121A-ACAT
    Physical Slot: 19
    Flags: bus master, fast devsel, latency 0, IRQ 195, IOMMU group 56
    Memory at 2bf48000000 (64-bit, prefetchable) [size=32M]
    Expansion ROM at c6f00000 [disabled] [size=1M]
    Capabilities: [60] Express Endpoint, MSI 00
    Capabilities: [48] Vital Product Data
    Capabilities: [9c] MSI-X: Enable+ Count=64 Masked-
    Capabilities: [c0] Vendor Specific Information: Len=18 <?>
    Capabilities: [40] Power Management version 3
    Capabilities: [100] Advanced Error Reporting
    Capabilities: [150] Alternative Routing-ID Interpretation (ARI)
    Capabilities: [180] Single Root I/O Virtualization (SR-IOV)    # SR-IOV is supported
    Capabilities: [1c0] Secondary PCI Express
    Capabilities: [230] Access Control Services
    Kernel driver in use: mlx5_core
    Kernel modules: mlx5_core    # kernel modules that can drive this NIC
```
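Besides reading `lspci -v`, SR-IOV capability can be checked from sysfs. A PF that supports SR-IOV exposes `sriov_totalvfs` (the maximum number of VFs) and `sriov_numvfs` (the number currently enabled) under `/sys/class/net/<iface>/device/`. Below is a small helper for that check. The name `sriov_info` and the `SYSFS_ROOT` override are hypothetical additions; the override exists only to make the sketch testable.

```shell
#!/bin/sh
# Read SR-IOV capability from sysfs. SYSFS_ROOT defaults to /sys; overriding it
# is an assumption of this sketch (used for testing against a fake tree).
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

# sriov_info IFACE: print "IFACE total=N enabled=M" for an SR-IOV-capable PF,
# or return 1 if the interface exposes no sriov_totalvfs file.
sriov_info() {
    dev="$SYSFS_ROOT/class/net/$1/device"
    if [ ! -r "$dev/sriov_totalvfs" ]; then
        echo "$1: no SR-IOV capability exposed" >&2
        return 1
    fi
    printf '%s total=%s enabled=%s\n' "$1" \
        "$(cat "$dev/sriov_totalvfs")" "$(cat "$dev/sriov_numvfs")"
}
```

On this node, `sriov_info ens19f0` should report `enabled=0` before VFs are enabled and `enabled=8` after the next step.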

- Enable VFs

```
[root@node1 ~]# echo 8 > /sys/class/net/ens19f0/device/sriov_numvfs
```
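Writing to `sriov_numvfs` has two pitfalls. The value cannot exceed `sriov_totalvfs`. The kernel also rejects changing a non-zero VF count to a different non-zero value (it returns EBUSY), so the count must be reset to 0 first. Below is a guarded version of the command above. It uses the same hypothetical `SYSFS_ROOT` override and a made-up function name.

```shell
#!/bin/sh
# Guarded VF enablement (sketch). set_numvfs is a hypothetical helper name;
# SYSFS_ROOT defaults to /sys and is overridable only to allow testing.
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

# set_numvfs IFACE COUNT: enable COUNT VFs on the PF IFACE (needs root on a real host).
set_numvfs() {
    case $2 in
        ''|*[!0-9]*) echo "VF count must be a non-negative integer" >&2; return 1 ;;
    esac
    dev="$SYSFS_ROOT/class/net/$1/device"
    total=$(cat "$dev/sriov_totalvfs") || return 1
    current=$(cat "$dev/sriov_numvfs") || return 1
    if [ "$2" -gt "$total" ]; then
        echo "$1 supports at most $total VFs" >&2
        return 1
    fi
    [ "$current" -eq "$2" ] && return 0     # already at the requested count
    # Changing one non-zero count to another fails with EBUSY, so go through 0.
    if [ "$current" -ne 0 ]; then
        echo 0 > "$dev/sriov_numvfs" || return 1
    fi
    echo "$2" > "$dev/sriov_numvfs"
}
```

Like the plain `echo`, this setting does not survive a reboot. It has to be reapplied at boot by whatever mechanism the host uses, for example a systemd unit or a udev rule.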

Check the enabled VFs on the host:

```
[root@node1 ~]# ip link
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
4: ens19f0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 9000 qdisc mq state UP mode DEFAULT group default qlen 1000
    link/ether e8:eb:d3:33:be:ea brd ff:ff:ff:ff:ff:ff
    vf 0     link/ether 00:00:00:00:00:00, spoof checking off, link-state auto, trust off, query_rss off
    vf 1     link/ether 00:00:00:00:00:00, spoof checking off, link-state auto, trust off, query_rss off
    vf 2     link/ether 00:00:00:00:00:00, spoof checking off, link-state auto, trust off, query_rss off
    vf 3     link/ether 00:00:00:00:00:00, spoof checking off, link-state auto, trust off, query_rss off
    vf 4     link/ether 00:00:00:00:00:00, spoof checking off, link-state auto, trust off, query_rss off
    vf 5     link/ether 00:00:00:00:00:00, spoof checking off, link-state auto, trust off, query_rss off
    vf 6     link/ether 00:00:00:00:00:00, spoof checking off, link-state auto, trust off, query_rss off
    vf 7     link/ether 00:00:00:00:00:00, spoof checking off, link-state auto, trust off, query_rss off
```

Confirm that the VFs have been created:

```
[root@node1 ~]# lspci -nn | grep -i ether
23:00.0 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)
23:00.1 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)
41:00.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
41:00.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
41:00.2 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]
41:00.3 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]
41:00.4 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]
41:00.5 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]
41:00.6 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]
41:00.7 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]
41:01.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]
41:01.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx Virtual Function] [15b3:1016]
42:00.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
42:00.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
63:00.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
63:00.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
a1:00.0 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
a1:00.1 Ethernet controller [0200]: Mellanox Technologies MT27710 Family [ConnectX-4 Lx] [15b3:1015]
```

Use `ip a` to check that the system recognizes the VF interfaces:

```
[root@node1 ~]# ip a | grep ens19f0v
18: ens19f0v0: <BROADCAST,MULTICAST,ALLMULTI,PROMISC,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
19: ens19f0v1: <BROADCAST,MULTICAST,ALLMULTI,PROMISC,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
20: ens19f0v2: <BROADCAST,MULTICAST,ALLMULTI,PROMISC,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
21: ens19f0v3: <BROADCAST,MULTICAST,ALLMULTI,PROMISC,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
22: ens19f0v4: <BROADCAST,MULTICAST,ALLMULTI,PROMISC,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
23: ens19f0v5: <BROADCAST,MULTICAST,ALLMULTI,PROMISC,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
24: ens19f0v6: <BROADCAST,MULTICAST,ALLMULTI,PROMISC,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
25: ens19f0v7: <BROADCAST,MULTICAST,ALLMULTI,PROMISC,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
[root@node1 ~]#
```

IV. SR-IOV installation

- Install sriov-device-plugin

```
[root@node1 ~]# git clone https://github.com/k8snetworkplumbingwg/sriov-network-device-plugin.git
[root@node1 ~]# cd sriov-network-device-plugin/
[root@node1 ~]# make image    # build the image
[root@node1 ~]#
```

Alternatively, pull the prebuilt image directly:

```
[root@node1 ~]# docker pull ghcr.io/k8snetworkplumbingwg/sriov-network-device-plugin:latest-amd
```


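The PF and VF resources of an SR-IOV device must be advertised to the Kubernetes cluster before pods can use them, and that is the job of a device plugin. The device plugin is deployed as a DaemonSet, so one instance runs on every node. It registers itself with the node's kubelet through the `Register` call. Kubelet then calls the plugin's `ListAndWatch` method over gRPC to obtain all SR-IOV devices on the node. When kubelet needs to assign an SR-IOV device to a pod, it calls the plugin's `Allocate` method with the device ID to obtain that device's details.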
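Kubelet and device plugins meet in a well-known directory. Each plugin serves its gRPC API on a Unix socket under `/var/lib/kubelet/device-plugins/` and registers through kubelet's own `kubelet.sock` in the same directory. Listing that directory on a node is a quick way to confirm that the SR-IOV plugin has registered. The helper below has a hypothetical name. Each plugin chooses its own socket filename, so the helper simply lists every `*.sock` except kubelet's.

```shell
#!/bin/sh
# list_device_plugins [DIR]: print plugin socket names found in kubelet's
# device-plugin directory, skipping kubelet's own registration socket.
list_device_plugins() {
    dir=${1:-/var/lib/kubelet/device-plugins}
    for sock in "$dir"/*.sock; do
        [ -e "$sock" ] || continue          # the glob matched nothing
        name=${sock##*/}
        [ "$name" = "kubelet.sock" ] && continue
        printf '%s\n' "$name"
    done
}
```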

Edit the ConfigMap. It defines how the plugin selects the SR-IOV VFs on each node and registers them with the cluster:

```
[root@node1 ~]# vim sriov-network-device-plugin/deployments/configmap.yaml
```

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: sriovdp-config
  namespace: kube-system
data:
  config.json: |
    {
      "resourceList": [{
        "resourcePrefix": "mellanox.com",
        "resourceName": "mellanox_sriov_switchdev_mt27710_ens19f0_vf",
        "selectors": {
          "drivers": ["mlx5_core"],
          "pfNames": ["ens19f0#0-7"]
        }
      }]
    }
```

In `pfNames`, enter the device name as the system recognizes it. Devices can also be selected in other ways, such as by vendor ID (`vendors`). Keep such notes out of `config.json` itself, because JSON does not allow comments.
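In the selector `ens19f0#0-7`, the part after `#` limits the pool to VF indexes 0 through 7 of PF ens19f0. The sketch below expands such a selector and maps each VF index to its PCI address through the PF's `virtfnN` links in sysfs. This gives a quick preview of which devices the plugin should pick up. It handles only the single `start-end` form used above. The function names and the `SYSFS_ROOT` override are hypothetical.

```shell
#!/bin/sh
# SYSFS_ROOT defaults to /sys; the override exists only for testing.
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

# expand_pf_selector "PF#START-END": print "PF INDEX" for each VF index in the range.
expand_pf_selector() {
    case $1 in
        *'#'[0-9]*-[0-9]*) ;;
        *) echo "unsupported selector: $1" >&2; return 1 ;;
    esac
    pf=${1%%#*}
    range=${1#*#}
    i=${range%-*}
    end=${range#*-}
    while [ "$i" -le "$end" ]; do
        printf '%s %s\n' "$pf" "$i"
        i=$((i + 1))
    done
}

# vf_pci_addr PF INDEX: PCI address that .../device/virtfnINDEX points to.
vf_pci_addr() {
    link=$(readlink "$SYSFS_ROOT/class/net/$1/device/virtfn$2") || return 1
    printf '%s\n' "${link##*/}"
}
```

On the node in this article, `expand_pf_selector 'ens19f0#0-7' | while read -r pf idx; do vf_pci_addr "$pf" "$idx"; done` should list the VF addresses reported by `lspci` earlier.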

Deploy sriov-device-plugin:

```
[root@node1 ~]# kubectl create -f deployments/configmap.yaml
[root@node1 ~]# kubectl create -f deployments/sriovdp-daemonset.yaml
```

Check that the SR-IOV device plugin pods are running:

```
[root@node1 ~]# kubectl get po -A -o wide | grep sriov
kube-system   kube-sriov-device-plugin-amd64-d7ctb   1/1   Running   0   6d5h   172.28.30.165   node3   <none>   <none>
kube-system   kube-sriov-device-plugin-amd64-h86dl   1/1   Running   0   6d5h   172.28.30.164   node2   <none>   <none>
kube-system   kube-sriov-device-plugin-amd64-rlpwb   1/1   Running   0   6d5h   172.28.30.163   node1   <none>   <none>
[root@node1 ~]#
```

Use `kubectl describe node` to confirm that the VFs are registered on the node:

```
[root@node1 ~]# kubectl describe node node1
---------
Capacity:
  cpu: 128
  devices.kubevirt.io/kvm: 1k
  devices.kubevirt.io/tun: 1k
  devices.kubevirt.io/vhost-net: 1k
  ephemeral-storage: 256374468Ki
  hugepages-1Gi: 120Gi
  mellanox.com/mellanox_sriov_switchdev_mt27710_ens19f0_vf: 8    # registered
  memory: 527839304Ki
  pods: 110
Allocatable:
  cpu: 112
  devices.kubevirt.io/kvm: 1k
  devices.kubevirt.io/tun: 1k
  devices.kubevirt.io/vhost-net: 1k
  ephemeral-storage: 236274709318
  hugepages-1Gi: 120Gi
  mellanox.com/mellanox_sriov_switchdev_mt27710_ens19f0_vf: 8    # allocatable count
```
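The same check can be scripted instead of reading `kubectl describe node` output. kubectl's JSONPath requires the dots in the resource name to be escaped, and the helper below does that. The name `vf_allocatable` and the `KUBECTL` override are assumptions of this sketch; the override is handy for testing or for pointing at a different kubectl binary.

```shell
#!/bin/sh
# vf_allocatable NODE RESOURCE: print the node's allocatable count for RESOURCE.
# KUBECTL may name another kubectl binary or wrapper; it defaults to kubectl.
vf_allocatable() {
    # JSONPath treats "." as a path separator, so escape dots in the resource name.
    key=$(printf '%s' "$2" | sed 's/\./\\./g')
    ${KUBECTL:-kubectl} get node "$1" -o "jsonpath={.status.allocatable.$key}"
}
```

For example, `vf_allocatable node1 mellanox.com/mellanox_sriov_switchdev_mt27710_ens19f0_vf` should print `8` on the node shown above.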

- Install sriov-cni

```
[root@node1 ~]# git clone https://github.com/k8snetworkplumbingwg/sriov-cni.git
[root@node1 ~]# cd sriov-cni
[root@node1 ~]# make    # build sriov-cni
[root@node1 ~]# cp build/sriov /opt/cni/bin/    # copy to every SR-IOV node and run the chmod below there as well
[root@node1 ~]# chmod 755 /opt/cni/bin/sriov
```

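What sriov-cni does:

Once deployed, sriov-cni places an executable named `sriov` in the /opt/cni/bin directory.

When a pod is created, kubelet calls the multus-cni plugin, which in turn calls the plugins in its `delegates` array. When that array contains the SR-IOV network, Multus runs /opt/cni/bin/sriov to build the container's network environment. This involves:

- locating the device by the SR-IOV device ID that kubelet allocated;
- moving it into the container's network namespace;
- assigning the device an IP address.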
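These steps can be pictured with ordinary sysfs and iproute2 commands. The sketch below first resolves the VF's PCI address (the device ID) to its kernel interface name through `/sys/bus/pci/devices/<addr>/net/`. It then prints, rather than runs, the `ip` commands that would move the interface into a named network namespace, rename it and assign an address. This is an approximation for illustration only. sriov-cni does this work through netlink in Go, delegates address assignment to the configured IPAM plugin, and also handles settings such as MAC and VLAN. The function names and the `SYSFS_ROOT` override are hypothetical.

```shell
#!/bin/sh
# SYSFS_ROOT defaults to /sys; the override exists only for testing.
SYSFS_ROOT=${SYSFS_ROOT:-/sys}

# pci_to_netdev PCI_ADDR: kernel netdev name bound to a VF, via sysfs.
pci_to_netdev() {
    for n in "$SYSFS_ROOT/bus/pci/devices/$1/net/"*; do
        [ -e "$n" ] || return 1     # no netdev, e.g. VF bound to vfio-pci for DPDK
        printf '%s\n' "${n##*/}"
        return 0
    done
}

# vf_attach_plan PCI_ADDR NETNS IFNAME CIDR: print (do not run) the ip commands.
vf_attach_plan() {
    dev=$(pci_to_netdev "$1") || { echo "no netdev for $1" >&2; return 1; }
    echo "ip link set dev $dev netns $2"
    echo "ip -n $2 link set dev $dev name $3"
    echo "ip -n $2 addr add $4 dev $3"
    echo "ip -n $2 link set dev $3 up"
}
```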

- Install Multus

For the installation steps, refer to the multus-cni project documentation.

V. Using SR-IOV in a pod

- Create a NetworkAttachmentDefinition (net-attach-def)

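```
[root@node1 ~]# vim sriov-attach.yaml
```

```yaml
apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: sriov-attach
  annotations:
    k8s.v1.cni.cncf.io/resourceName: mellanox.com/mellanox_sriov_switchdev_mt27710_ens19f0_vf
spec:
  config: '{
      "cniVersion": "0.3.1",
      "name": "sriov-attach",
      "type": "sriov",
      "ipam": {
        "type": "calico-ipam",
        "range": "222.0.0.0/8"
      }
    }'
```

```
[root@node1 ~]# kubectl apply -f sriov-attach.yaml
networkattachmentdefinition.k8s.cni.cncf.io/sriov-attach created
[root@node1 ~]# kubectl get net-attach-def
NAME           AGE
sriov-attach   12s
```

The `k8s.v1.cni.cncf.io/resourceName` annotation ties this network to the device-plugin resource, so Multus knows which allocated VF to pass to sriov-cni. Note that `calico-ipam` does not appear to honor a `range` key. In the pod output below, net1 received 172.25.36.90/26 from Calico's pool rather than an address in 222.0.0.0/8.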

- Define the pod YAML

```
[root@node1 ~]# cat sriov-attach.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sriov
  labels:
    app: sriov-attach
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sriov-attach
  template:
    metadata:
      annotations:
        k8s.v1.cni.cncf.io/networks: sriov-attach
      labels:
        app: sriov-attach
    spec:
      containers:
      - name: sriov-attach
        image: docker.io/library/nginx:latest
        imagePullPolicy: IfNotPresent
        resources:
          requests:
            cpu: 1
            memory: 1Gi
            mellanox.com/mellanox_sriov_switchdev_mt27710_ens19f0_vf: '1'
          limits:
            cpu: 1
            memory: 1Gi
            mellanox.com/mellanox_sriov_switchdev_mt27710_ens19f0_vf: '1'
[root@node1 ~]#
```
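The `k8s.v1.cni.cncf.io/networks` annotation above names a single network. Multus also accepts a comma-separated list. Each entry may carry a namespace prefix (`namespace/name`) and an interface-name suffix (`name@ifname`). Entries without an explicit interface name get `net1`, `net2` and so on in order, which is why the VF shows up as `net1`. Below is a small parser for that short form; it does not handle the JSON form. The function name is hypothetical. The sketch also assumes that entries with an explicit name still consume a position in the `netN` numbering.

```shell
#!/bin/sh
# parse_networks ANNOTATION [POD_NAMESPACE]: print "namespace name ifname" per entry.
parse_networks() {
    podns=${2:-default}
    idx=0
    # Entries are comma-separated; split them onto lines and strip spaces.
    printf '%s\n' "$1" | tr ',' '\n' | while IFS= read -r entry; do
        entry=$(printf '%s' "$entry" | tr -d ' ')
        [ -n "$entry" ] || continue
        idx=$((idx + 1))
        ifname="net$idx"                    # default name for the idx-th extra network
        case $entry in *@*) ifname=${entry#*@}; entry=${entry%@*} ;; esac
        ns=$podns
        case $entry in */*) ns=${entry%%/*}; entry=${entry#*/} ;; esac
        printf '%s %s %s\n' "$ns" "$entry" "$ifname"
    done
}
```

For the Deployment above, `parse_networks sriov-attach` prints `default sriov-attach net1`.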

Start the pod for testing:

```
[root@node1 ~]# kubectl apply -f sriov-attach.yaml
deployment.apps/sriov created
[root@node1 ~]# kubectl get po -o wide
NAME                     READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES
sriov-65c8f754f9-jlcd5   1/1     Running   0          6s    172.25.36.87   node1   <none>           <none>
```

- Inspect the pod

Check 1: use `kubectl describe pod` to view the resource allocation:

```
[root@node1 wzb]# kubectl describe po sriov-65c8f754f9-jlcd5
Name:         sriov-65c8f754f9-jlcd5
Namespace:    default
Priority:     0
Node:         node1/172.28.30.163
Start Time:   Wed, 28 Feb 2024 20:56:24 +0800
Labels:       app=sriov-attach
              pod-template-hash=65c8f754f9
Annotations:  cni.projectcalico.org/containerID: 21ec82394a00c893e5304577b59984441bd3adac82929b5f9b5538f988245bf5
              cni.projectcalico.org/podIP: 172.25.36.87/32
              cni.projectcalico.org/podIPs: 172.25.36.87/32
              k8s.v1.cni.cncf.io/network-status:
                [{
                    "name": "k8s-pod-network",
                    "ips": [
                        "172.25.36.87"
                    ],
                    "default": true,
                    "dns": {}
                },{
                    "name": "default/sriov-attach",
                    "interface": "net1",
                    "ips": [
                        "172.25.36.90"
                    ],
                    "mac": "f6:c2:e5:d1:7b:fa",
                    "dns": {},
                    "device-info": {
                        "type": "pci",
                        "version": "1.1.0",
                        "pci": {
                            "pci-address": "0000:41:00.6"
                        }
                    }
                }]
              k8s.v1.cni.cncf.io/networks: sriov-attach
Status:       Running
IP:           172.25.36.87
IPs:
  IP:           172.25.36.87
Controlled By:  ReplicaSet/sriov-65c8f754f9
Containers:
  nginx:
    Container ID:   containerd://6d5246c3e36a125ba60bad6af63f8bffe4710d78c2e14e6afb0d466c3f0f5d6e
    Image:          docker.io/library/nginx:latest
    Image ID:       sha256:12766a6745eea133de9fdcd03ff720fa971fdaf21113d4bc72b417c123b15619
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Wed, 28 Feb 2024 20:56:28 +0800
    Ready:          True
    Restart Count:  0
    Limits:
      cpu:  1
      intel.com/intel_sriov_switchdev_mt27710_ens19f0_vf:  1
      memory:  1Gi
    Requests:
      cpu:  1
      intel.com/intel_sriov_switchdev_mt27710_ens19f0_vf:  1    # the SR-IOV resource has been allocated to the pod
      memory:  1Gi
    Environment:  <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-pnl9d (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  kube-api-access-pnl9d:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   Guaranteed
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type    Reason          Age   From               Message
  ----    ------          ----  ----               -------
  Normal  Scheduled       105s  default-scheduler  Successfully assigned default/sriov-65c8f754f9-jlcd5 to node1
  Normal  AddedInterface  103s  multus             Add eth0 [172.25.36.87/32] from k8s-pod-network
  Normal  AddedInterface  102s  multus             Add net1 [172.25.36.90/26] from default/sriov-attach    # the SR-IOV interface was added successfully
  Normal  Pulled          102s  kubelet            Container image "docker.io/library/nginx:latest" already present on machine
  Normal  Created         102s  kubelet            Created container nginx
  Normal  Started         102s  kubelet            Started container nginx
[root@node1 wzb]#
```
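The `network-status` annotation records which VF was given to the pod (`pci-address` 0000:41:00.6 here). This is useful for mapping `net1` back to a VF on the host. The sketch below extracts every `pci-address` from that JSON with `sed`; jq would be more robust if it is installed. The function name is hypothetical.

```shell
#!/bin/sh
# network_status_pci: read a Multus network-status JSON document on stdin and
# print each "pci-address" value, one per line.
network_status_pci() {
    # Break the JSON at commas and braces so that several devices are all found,
    # whether the annotation is compact or pretty-printed.
    tr ',{}' '\n\n\n' | sed -n 's/.*"pci-address": *"\([^"]*\)".*/\1/p'
}
```

For example: `kubectl get pod sriov-65c8f754f9-jlcd5 -o jsonpath='{.metadata.annotations.k8s\.v1\.cni\.cncf\.io/network-status}' | network_status_pci`.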


Check 2: inside the pod's network namespace, the net1 interface is present and has been assigned an address:

```
[root@node1 ~]# crictl ps | grep sriov-attach
6d5246c3e36a1   12766a6745eea   4 minutes ago   Running   nginx   0   21ec82394a00c
[root@node1 ~]# crictl inspect 6d5246c3e36a1 | grep -i pid
    "pid": 2775224,
    "pid": 1
    "type": "pid"
```


```
[root@node1 ~]# nsenter -t 2775224 -n bash
[root@node1 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
4: eth0@if30729: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc noqueue state UP group default
    link/ether ae:f9:85:03:13:2f brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.25.36.87/32 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fe80::acf9:85ff:fe03:132f/64 scope link
       valid_lft forever preferred_lft forever
21: net1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
    link/ether f6:c2:e5:d1:7b:fa brd ff:ff:ff:ff:ff:ff
    inet 172.25.36.90/26 brd 172.25.36.127 scope global net1
       valid_lft forever preferred_lft forever
    inet6 fe80::f4c2:e5ff:fed1:7bfa/64 scope link
       valid_lft forever preferred_lft forever
[root@node1 ~]#
```
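The manual steps above (find the container, read its PID from `crictl inspect`, enter its network namespace) can be wrapped in a helper. The sketch takes the first numeric `pid` field from the `crictl inspect` JSON, which matches the value used in the walkthrough. The function names are hypothetical. The helper runs `ip addr show` through `nsenter` instead of opening an interactive shell.

```shell
#!/bin/sh
# container_pid: read `crictl inspect` JSON on stdin, print the first numeric "pid".
container_pid() {
    sed -n 's/.*"pid": *\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# pod_ip_addr CONTAINER_ID: list interfaces inside the container's network
# namespace (run as root on the node hosting the container).
pod_ip_addr() {
    pid=$(crictl inspect "$1" | container_pid)
    [ -n "$pid" ] || { echo "no pid found for container $1" >&2; return 1; }
    nsenter -t "$pid" -n ip addr show
}
```

Run as root, `pod_ip_addr 6d5246c3e36a1` should print the same interface list as above, including `net1`.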

Summary

The above is based on my personal experience; I hope it serves as a useful reference.
