
1. Introduction to the rbd-provisioner driver

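rbd-provisioner and csi-provisioner are both client-side drivers that let a StorageClass consume block storage from a Ceph cluster. The CSI driver is relatively complex to deploy and its images are hard to pull, whereas rbd-provisioner is very simple to deploy and works more or less out of the box.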

Either driver will do, but the RBD route comes with one major pitfall, described below.

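Everything is configured correctly and the Ceph command-line tools are installed on every node. Even so, when the StorageClass tries to provision a PV for a PVC, it still fails with the following error: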

Events:
  Type     Reason              Age                 From                         Message
  ----     ------              ----                ----                         -------
  Warning  ProvisioningFailed  50s (x15 over 18m)  persistentvolume-controller  Failed to provision volume with StorageClass "rbd-storageclass": failed to create rbd image: executable file not found in $PATH, command output:

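The error says that the command used to create the RBD image cannot be found, even though every node has the Ceph binaries installed. The reason is that Kubernetes creates RBD images from the kube-controller-manager component, which runs the rbd client to do so. In a cluster deployed with kubeadm, all control-plane components run as pods. The Ceph commands are installed on the nodes, but kube-controller-manager runs in a container that does not contain them, so the call fails.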

The fix is to deploy a separate, external rbd-provisioner. Its container image ships the rbd command-line tools, which solves the problem.

rbd-provisioner project: https://github.com/kubernetes-retired/external-storage/tree/master/ceph/rbd/deploy/rbac

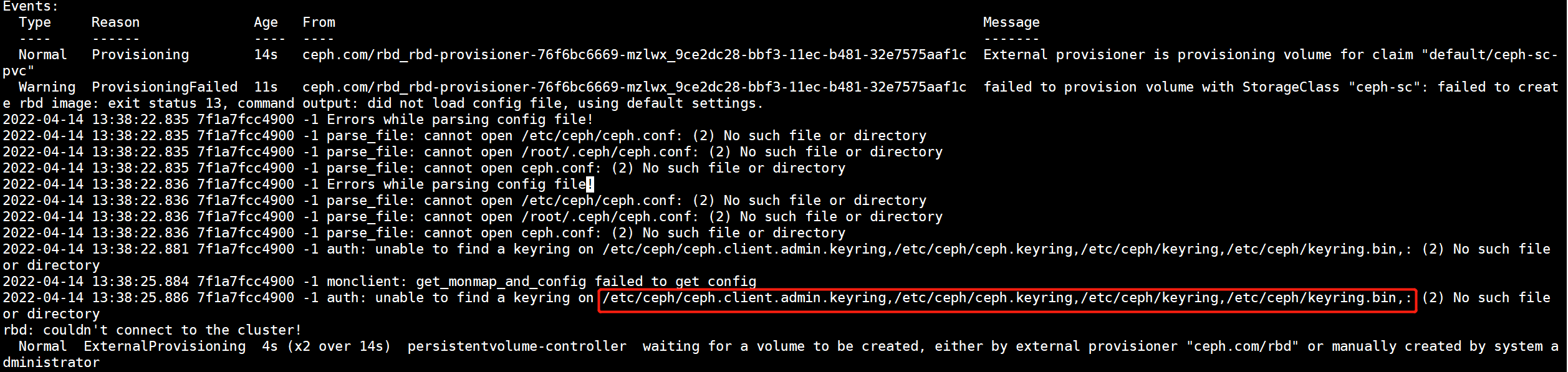

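One more thing to watch for: when using the external rbd-provisioner, you must copy the Ceph cluster's /etc/ceph/ceph.client.admin.keyring file to every node. Otherwise provisioning fails as well, as shown in the figure.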

2. Deploying the external rbd-provisioner driver in the k8s cluster

2.1. Copy the Ceph cluster's keyring and configuration file to every k8s node

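The external rbd-provisioner container needs the Ceph keyring and configuration file to connect to the cluster. First copy these files to every k8s node. Then, in the driver's manifest, mount them into the container with a hostPath volume.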

[root@ceph-node-1 ~]# scp /etc/ceph/ceph.client.admin.keyring root@192.168.20.10:/etc/ceph/

[root@ceph-node-1 ~]# scp /etc/ceph/ceph.client.admin.keyring root@192.168.20.11:/etc/ceph/

[root@ceph-node-1 ~]# scp /etc/ceph/ceph.client.admin.keyring root@192.168.20.12:/etc/ceph/

[root@ceph-node-1 ~]# scp /etc/ceph/ceph.conf root@192.168.20.10:/etc/ceph/

[root@ceph-node-1 ~]# scp /etc/ceph/ceph.conf root@192.168.20.11:/etc/ceph/

[root@ceph-node-1 ~]# scp /etc/ceph/ceph.conf root@192.168.20.12:/etc/ceph/
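The six scp commands above can also be written as a loop. This is a sketch, not part of the original steps: distribute_ceph_files and its DRY_RUN switch are hypothetical. It assumes it runs on ceph-node-1 with root SSH access to the k8s nodes.

```shell
#!/bin/sh
# Hypothetical helper: copy the Ceph admin keyring and ceph.conf from this
# Ceph node to every k8s node passed as an argument.
# Assumes root SSH access to the nodes. With DRY_RUN=1 it only prints the
# scp commands it would run.
distribute_ceph_files() {
    for node in "$@"; do
        for f in /etc/ceph/ceph.client.admin.keyring /etc/ceph/ceph.conf; do
            if [ "${DRY_RUN:-0}" = "1" ]; then
                echo "scp $f root@$node:/etc/ceph/"
            else
                scp "$f" "root@$node:/etc/ceph/" || return 1
            fi
        done
    done
}
```

For example, first run DRY_RUN=1 distribute_ceph_files 192.168.20.10 192.168.20.11 192.168.20.12 to review the commands. Then run it again without DRY_RUN to actually copy the files.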

2.2. Get the manifests for the external rbd-provisioner driver

The manifests for the external rbd-provisioner are hosted on GitHub at https://github.com/kubernetes-retired/external-storage/tree/master/ceph/rbd/deploy/rbac. They consist mainly of RBAC manifests plus a Deployment manifest for the driver.

The complete manifest is as follows:

[root@k8s-master rbd-provisioner]# vim rbd-provisioner.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["kube-dns", "coredns"]
    verbs: ["list", "get"]
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["endpoints"]   # added: permissions on endpoints
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["secrets"]     # added: permissions on secrets
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rbd-provisioner
subjects:
  - kind: ServiceAccount
    name: rbd-provisioner
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: rbd-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rbd-provisioner
  namespace: kube-system
rules:
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["get"]
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "list", "watch", "create", "update", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: rbd-provisioner
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rbd-provisioner
subjects:
- kind: ServiceAccount
  name: rbd-provisioner
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rbd-provisioner
  namespace: kube-system
  labels:
    app: rbd-provisioner
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rbd-provisioner
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: rbd-provisioner
    spec:
      containers:
      - name: rbd-provisioner
        image: "quay.io/external_storage/rbd-provisioner:latest"
        volumeMounts:        # mount the Ceph files at /etc/ceph inside the container
        - name: ceph-conf
          mountPath: /etc/ceph
        env:
        - name: PROVISIONER_NAME
          value: ceph.com/rbd
      serviceAccount: rbd-provisioner
      volumes:               # hostPath volume exposing the node's Ceph files to the container
      - name: ceph-conf
        hostPath:
          path: /etc/ceph
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rbd-provisioner
  namespace: kube-system

2.3. Deploy the rbd-provisioner driver in the cluster

1) Create all of the rbd-provisioner resources

[root@k8s-master rbd-provisioner]# kubectl apply -f rbd-provisioner.yaml

serviceaccount/rbd-provisioner created

clusterrole.rbac.authorization.k8s.io/rbd-provisioner created

clusterrolebinding.rbac.authorization.k8s.io/rbd-provisioner created

role.rbac.authorization.k8s.io/rbd-provisioner created

rolebinding.rbac.authorization.k8s.io/rbd-provisioner created

deployment.apps/rbd-provisioner created

2) Check the status of the resources

Make sure the rbd-provisioner pod is Running. Otherwise nothing after this point will work.

[root@k8s-master rbd-provisioner]# kubectl get pod -n kube-system | grep rbd

rbd-provisioner-596454b778-8p648   1/1   Running   0   88m
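To check the status from a script rather than by eye, you can parse the rows printed by kubectl get pod. The sketch below assumes the default column order (NAME, READY, STATUS, RESTARTS, AGE) shown above. all_pods_running is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pod` rows on stdin and succeed only
# if at least one pod is listed and every listed pod has all containers
# ready (READY n/n) and STATUS Running. A header row, if present, is skipped.
all_pods_running() {
    awk '
        $1 == "NAME" { next }
        NF < 3       { next }
        {
            seen = 1
            split($2, ready, "/")
            if (ready[1] != ready[2] || $3 != "Running") bad = 1
        }
        END { if (seen && !bad) exit 0; exit 1 }
    '
}
```

Usage: kubectl get pod -n kube-system | grep rbd | all_pods_running && echo ok. As an alternative that goes through the API instead of parsing text, kubectl wait --for=condition=Ready pod -l app=rbd-provisioner -n kube-system --timeout=120s waits for the pod carrying the app=rbd-provisioner label from the Deployment.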

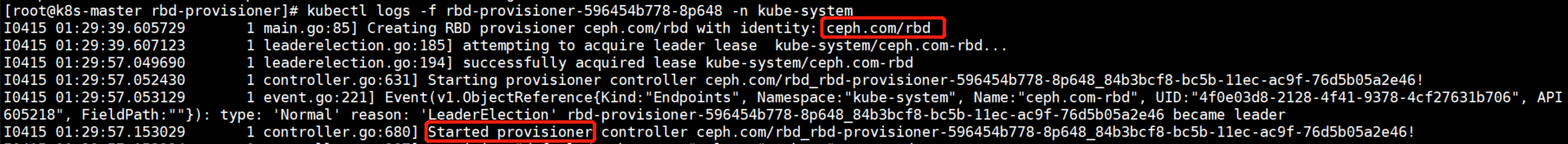

Once rbd-provisioner has started, its log shows the driver name and startup messages, as in the screenshot.

2.4. Check inside the rbd-provisioner container that the Ceph configuration is mounted

The container must be able to read the Ceph configuration files. Otherwise it cannot connect to the cluster to create RBD images.

[root@k8s-master rbd-provisioner]# kubectl exec -it -n kube-system rbd-provisioner-596454b778-8p648 -- bash
[root@rbd-provisioner-596454b778-8p648 /]# ll /etc/ceph/
total 12
-rw-r--r-- 1 root root  151 Apr 14 14:08 ceph.client.admin.keyring
-rw-r--r-- 1 root root  623 Apr 14 14:17 ceph.conf
-rwxr-xr-x 1 root root 2910 Oct 30  2018 rbdmap
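The same check can be scripted. This sketch is not part of the original procedure. check_ceph_dir is a hypothetical function that reports whether ceph.conf and ceph.client.admin.keyring exist and are readable in a directory (default /etc/ceph). You can paste it into the shell opened with kubectl exec, or run it on each node to check the hostPath source. Note that for root the readability test always passes, so it mainly catches missing files.

```shell
#!/bin/sh
# Hypothetical helper: report whether the Ceph client files the provisioner
# needs are present and readable in a directory (default: /etc/ceph).
# Prints one line per problem; returns 0 only if both files are usable.
check_ceph_dir() {
    dir=${1:-/etc/ceph}
    rc=0
    for f in ceph.conf ceph.client.admin.keyring; do
        if [ ! -e "$dir/$f" ]; then
            echo "missing: $dir/$f"
            rc=1
        elif [ ! -r "$dir/$f" ]; then
            echo "unreadable: $dir/$f"
            rc=1
        fi
    done
    return "$rc"
}
```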

2.5. Check the version of the Ceph tools in the rbd-provisioner container

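This step is critical. If the Ceph tools in the rbd-provisioner container are a different version from the Ceph cluster on the hosts, the container cannot connect to the cluster to create RBD images either.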

1) Check the Ceph version on the host

[root@ceph-node-1 ~]# ceph -v

ceph version 14.2.22 (ca74598065096e6fcbd8433c8779a2be0c889351) nautilus (stable)

2) Check the Ceph version in the rbd-provisioner container

[root@k8s-master rbd-provisioner]# kubectl exec -it -n kube-system rbd-provisioner-596454b778-8p648 -- bash

[root@rbd-provisioner-596454b778-8p648 /]# ceph -v

ceph version v13.2.1 (564bdc4ae87418a232fc901524470e1a0f76d641) nautilus (stable)

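The Ceph version in the rbd-provisioner container (13.2.1, a Mimic release) does not match the cluster's version (14.2.22, Nautilus). This also causes problems and prevents the container from connecting to the cluster, so the Ceph tools in the container need to be upgraded.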
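Below is a sketch of this comparison, assuming the ceph -v output format shown above. ceph_major and same_ceph_major are hypothetical helpers. Comparing only the major release number is a simplification of the "versions must match" rule above.

```shell
#!/bin/sh
# Hypothetical helper: read `ceph -v` output on stdin and print the major
# release, e.g. "ceph version 14.2.22 (...) nautilus (stable)" -> 14.
# The optional "v" also accepts the "v13.2.1" form shown above.
ceph_major() {
    sed -n 's/^ceph version v\{0,1\}\([0-9][0-9]*\)\..*/\1/p'
}

# Succeed only when both `ceph -v` strings report the same major release.
same_ceph_major() {
    host=$(printf '%s\n' "$1" | ceph_major)
    ctr=$(printf '%s\n' "$2" | ceph_major)
    [ -n "$host" ] && [ "$host" = "$ctr" ]
}
```

To use it, capture one string with ceph -v on ceph-node-1 and the other with kubectl exec -n kube-system rbd-provisioner-596454b778-8p648 -- ceph -v on the master. Then pass both to same_ceph_major. A non-zero exit status means the container's tools need upgrading.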

3) Upgrade the Ceph tools in the rbd-provisioner container

1. Open a shell in the rbd-provisioner container

[root@k8s-master rbd-provisioner]# kubectl exec -it -n kube-system rbd-provisioner-596454b778-8p648 -- bash

2. Set up a Ceph yum repository

[root@rbd-provisioner-596454b778-8p648 /]# vim /etc/yum.repos.d/ceph.repo

[noarch]
name=noarch
baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/
enabled=1
gpgcheck=0

[x86_64]
name=x86_64
baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64/
enabled=1
gpgcheck=0

3. Clear and rebuild the yum cache

[root@rbd-provisioner-596454b778-8p648 /]# yum clean all

[root@rbd-provisioner-596454b778-8p648 /]# yum makecache

4. Upgrade the Ceph packages

[root@rbd-provisioner-596454b778-8p648 /]# yum -y update

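At this point the rbd-provisioner driver is fully deployed in the cluster. Note that packages upgraded inside a running container are not persisted. If the pod is recreated, the container starts again from the original image and the upgrade has to be repeated.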

3. Create a pool and an auth user in the Ceph cluster

1) Create the pool

[root@ceph-node-1 ~]# ceph osd pool create kubernetes_data 16 16

pool 'kubernetes_data' created

2) Create the auth user

[root@ceph-node-1 ~]# ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=kubernetes_data'

[client.kubernetes]

key = aqblrvribbqzjraad3lacyaxrlotvtio6e+10a==

4. Create a StorageClass in the k8s cluster

With the rbd-provisioner driver and the Ceph pool ready, the next step is a StorageClass that uses Ceph RBD block storage as its backing storage.

4.1. Store the Ceph users' keys in Secrets

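When connecting to the Ceph cluster, the StorageClass uses two Ceph users, though using the same user for both also works. The admin user connects to the cluster, and the kubernetes user operates on the pool. The admin user's Secret is kept in the kube-system namespace. The kubernetes user's Secret would by default be kept in the same namespace as the PVC. It can also be kept in kube-system, because the StorageClass can specify the namespace of each Secret it references.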

1) Base64-encode the users' keys

The keys must be base64-encoded (encoded, not encrypted); otherwise provisioning also fails.

1. Encode the admin user's key

[root@ceph-node-1 ~]# ceph auth get-key client.admin | base64

qvfcsvdvaglfbwfgt0jbqtzkcjzpdfvlsglmvlzpzvlgvnbsb2c9pq==

2. Encode the kubernetes user's key

[root@ceph-node-1 ~]# ceph auth get-key client.kubernetes | base64

qvfcbfjwumliynf6sljbquqzbgfjwwf4umxvvfzuaw82zssxmee9pq==
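Base64 encodes every byte, so a stray newline matters. Piping ceph auth get-key straight into base64, as above, encodes the key as printed, and the output ends in ==. If the key is copied by hand and piped through echo, echo appends a newline that becomes part of the encoded value. That is why such an encoding ends in o= instead of ==. The sketch below encodes a pasted key with printf, which adds no newline. encode_ceph_key and decode_ceph_key are hypothetical helpers.

```shell
#!/bin/sh
# Hypothetical helper: base64-encode a Ceph key for a Secret's data.key.
# printf '%s' writes the key without a trailing newline (echo would add one),
# and tr removes the newline that base64 itself prints.
encode_ceph_key() {
    printf '%s' "$1" | base64 | tr -d '\n'
}

# Hypothetical helper: decode a data.key value back to the raw key, e.g. to
# compare it with what `ceph auth get-key client.admin` prints.
decode_ceph_key() {
    printf '%s' "$1" | base64 -d
}
```

decode_ceph_key is handy for checking that a Secret's data.key really decodes to the key reported by ceph auth get-key.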

2) Write the Secret manifest

Put both Secrets in the kube-system namespace and point the StorageClass at that namespace. This way you don't have to keep creating Secrets in other namespaces.

[root@k8s-master rbd-provisioner-yaml]# vim rbd-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: ceph-admin-secret
  namespace: kube-system
type: "kubernetes.io/rbd"
data:
  key: qvfcsvdvaglfbwfgt0jbqtzkcjzpdfvlsglmvlzpzvlgvnbsb2c9pqo=
---
apiVersion: v1
kind: Secret
metadata:
  name: ceph-kubernetes-secret
  namespace: kube-system
type: "kubernetes.io/rbd"
data:
  key: qvfcbfjwumliynf6sljbquqzbgfjwwf4umxvvfzuaw82zssxmee9pqo=
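The two Secrets differ only in name and key, so the manifest can also be generated. The sketch below assumes the key passed in is already base64-encoded, as in step 1). render_rbd_secret is a hypothetical helper that prints the same structure as rbd-secret.yaml.

```shell
#!/bin/sh
# Hypothetical helper: print a kubernetes.io/rbd Secret manifest.
#   $1 = Secret name, $2 = namespace, $3 = key that is ALREADY base64-encoded
render_rbd_secret() {
    cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $1
  namespace: $2
type: "kubernetes.io/rbd"
data:
  key: $3
EOF
}
```

To build the whole file, separate the documents with ---, for example: { render_rbd_secret ceph-admin-secret kube-system "<encoded admin key>"; echo ---; render_rbd_secret ceph-kubernetes-secret kube-system "<encoded kubernetes key>"; } > rbd-secret.yaml. Alternatively, kubectl create secret generic ceph-admin-secret --type=kubernetes.io/rbd --from-literal=key=<raw key> -n kube-system creates an equivalent Secret directly and does the base64 encoding itself.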

3) Create the Secrets

[root@k8s-master rbd-provisioner-yaml]# kubectl apply -f rbd-secret.yaml

secret/ceph-admin-secret created

secret/ceph-kubernetes-secret created

4.2. Create the StorageClass

Create a StorageClass that uses the Ceph cluster's RBD as its backing storage.

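Pay particular attention to the provisioner field. It must be set to the name of the external rbd-provisioner driver, which is the PROVISIONER_NAME value in its Deployment (ceph.com/rbd). Otherwise provisioning will not work.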

1) Write the manifest

[root@k8s-master rbd-provisioner-yaml]# vim rbd-storageclass.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rbd-storageclass
  annotations:
    storageclass.beta.kubernetes.io/is-default-class: "true"
provisioner: ceph.com/rbd                  # name of the external rbd-provisioner driver
reclaimPolicy: Retain
parameters:
  monitors: 192.168.20.20:6789,192.168.20.21:6789,192.168.20.22:6789   # Ceph monitor addresses
  adminId: admin                           # user for connecting to the cluster (admin)
  adminSecretName: ceph-admin-secret       # Secret holding the admin user's key
  adminSecretNamespace: kube-system        # namespace of that Secret
  pool: kubernetes_data                    # name of the pool
  userId: kubernetes                       # user for accessing the pool (kubernetes)
  userSecretName: ceph-kubernetes-secret   # Secret holding the kubernetes user's key
  userSecretNamespace: kube-system         # namespace of that Secret
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"
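A quick way to catch a provisioner name mismatch before applying anything is to compare the two manifests. The rough sketch below uses awk line matching rather than a real YAML parser, which only holds for simple files like the ones in this article. The function names are hypothetical. It also assumes the Deployment sets the name through a PROVISIONER_NAME env entry with its value on the next line.

```shell
#!/bin/sh
# Hypothetical helpers. Plain line matching, not a YAML parser: good enough
# for simple manifests like the ones in this article.

# Print the value of the "provisioner:" field of a StorageClass file.
sc_provisioner() {
    awk '$1 == "provisioner:" { gsub(/"/, "", $2); print $2; exit }' "$1"
}

# Print the value on the line after "name: PROVISIONER_NAME" in a
# Deployment file (matched case-insensitively).
deploy_provisioner() {
    awk 'tolower($0) ~ /name: *provisioner_name/ { getline; gsub(/"/, "", $2); print $2; exit }' "$1"
}

# Succeed only if both files name the same, non-empty provisioner.
provisioner_names_match() {
    sc=$(sc_provisioner "$1")
    dep=$(deploy_provisioner "$2")
    [ -n "$sc" ] && [ "$sc" = "$dep" ]
}
```

Usage: provisioner_names_match <path to rbd-storageclass.yaml> <path to rbd-provisioner.yaml>. For a StorageClass that already exists, kubectl get sc rbd-storageclass -o jsonpath='{.provisioner}' prints the provisioner name straight from the cluster.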

2) Create it and check its status

[root@k8s-master rbd-provisioner-yaml]# kubectl apply -f rbd-storageclass.yaml
storageclass.storage.k8s.io/rbd-storageclass created
[root@k8s-master rbd-provisioner-yaml]# kubectl get sc
NAME                         PROVISIONER    RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
rbd-storageclass (default)   ceph.com/rbd   Retain          Immediate           false                  6m59s

5. Create a PVC and let the StorageClass provision a PV for it automatically

5.1. Create a PVC

1) Write the manifest

[root@k8s-master rbd-provisioner-yaml]# vim rbd-sc-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-sc-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: rbd-storageclass   # have the StorageClass created above provision the PV

2) Create the PVC and check its status

[root@k8s-master rbd-provisioner-yaml]# kubectl apply -f rbd-sc-pvc.yaml
persistentvolumeclaim/rbd-sc-pvc created
[root@k8s-master rbd-provisioner-yaml]# kubectl get pvc
NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS       AGE
rbd-sc-pvc   Bound    pvc-d5adca45-8593-4925-89f7-2347aab92e51   1Gi        RWO            rbd-storageclass   32m

The PVC is already Bound, which means a PV was provisioned for it automatically.

[root@k8s-master rbd-provisioner-yaml]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS       REASON   AGE
pvc-d5adca45-8593-4925-89f7-2347aab92e51   1Gi        RWO            Retain           Bound    default/rbd-sc-pvc   rbd-storageclass            32m

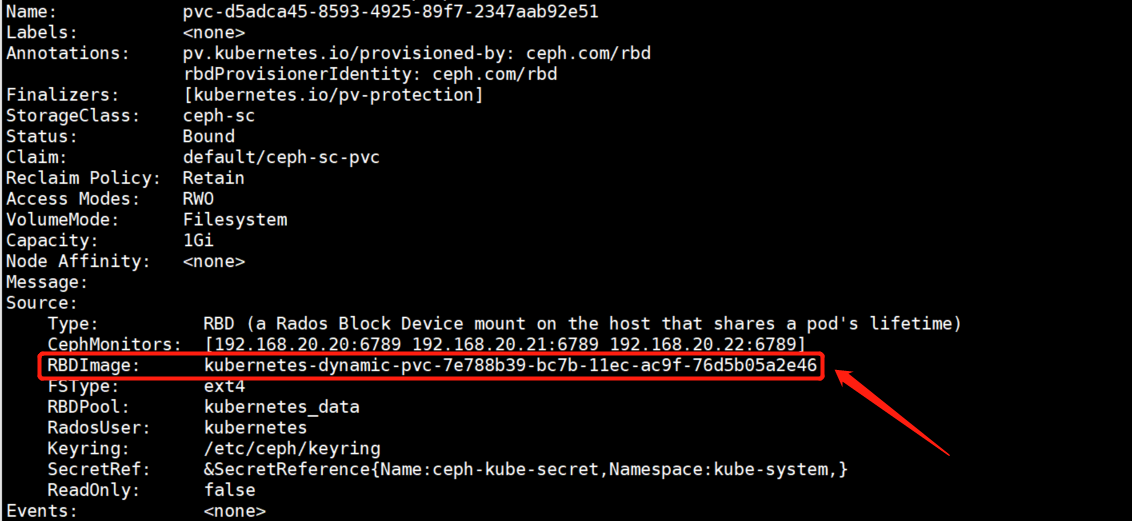

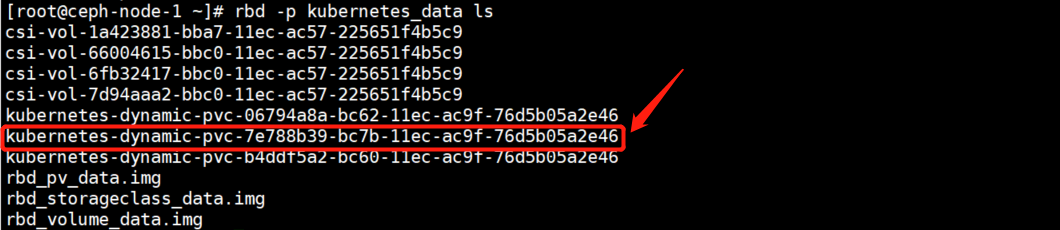

5.2. How the provisioned PV maps to an RBD image in the Ceph pool

The PV's details show which RBD image in the Ceph pool backs it.

Listing the images in the pool shows names that match those in the PVs one-to-one.
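The one-to-one check can be scripted by comparing two name lists. This sketch assumes the PVs are the rbd-type volumes created by this provisioner, so each image name is in .spec.rbd.image. compare_images is a hypothetical helper that takes the two lists as arguments.

```shell
#!/bin/sh
# Hypothetical helper: compare two newline-separated lists of rbd image names.
#   $1 = images referenced by PVs, $2 = images present in the Ceph pool.
# Prints "pv-only: <name>" / "pool-only: <name>" for every mismatch and
# returns 0 only if both lists contain exactly the same names.
compare_images() {
    tmp=$(mktemp -d)
    printf '%s\n' "$1" | sed '/^$/d' | sort -u > "$tmp/pv"
    printf '%s\n' "$2" | sed '/^$/d' | sort -u > "$tmp/pool"
    comm -23 "$tmp/pv" "$tmp/pool" | sed 's/^/pv-only: /'
    comm -13 "$tmp/pv" "$tmp/pool" | sed 's/^/pool-only: /'
    if cmp -s "$tmp/pv" "$tmp/pool"; then rc=0; else rc=1; fi
    rm -rf "$tmp"
    return "$rc"
}
```

Collect the first list on the master with kubectl get pv -o jsonpath='{range .items[*]}{.spec.rbd.image}{"\n"}{end}'. Collect the second on a Ceph node with rbd ls -p kubernetes_data. Any images in the pool that Kubernetes did not create will be reported as pool-only.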

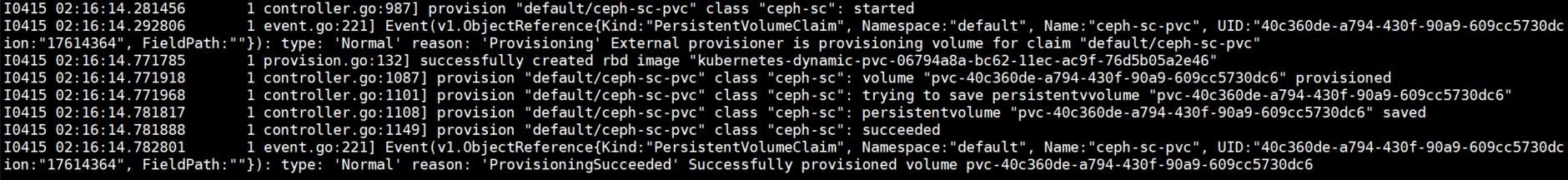

5.3. What the rbd-provisioner container logs show

The rbd-provisioner container logs also show the StorageClass provisioning a PV for the PVC, including the creation of the RBD image.

6. Create a Pod that mounts the PVC

With the PVC ready, create a Pod that mounts it to check that data can be stored on it.

1) Write the manifest

[root@k8s-master rbd-provisioner-yaml]# vim rbd-sc-pvc-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: rbd-sc-pvc-pod
spec:
  containers:
  - image: nginx:1.15
    name: nginx
    ports:
    - name: web
      containerPort: 80
      protocol: TCP
    volumeMounts:
    - name: data
      mountPath: /var/www/html
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: rbd-sc-pvc

2) Create the Pod and check its status

[root@k8s-master rbd-provisioner-yaml]# kubectl apply -f rbd-sc-pvc-pod.yaml
pod/rbd-sc-pvc-pod created
[root@k8s-master rbd-provisioner-yaml]# kubectl get pod
NAME             READY   STATUS    RESTARTS   AGE
rbd-sc-pvc-pod   1/1     Running   0          2m41s

3) Once the Pod is running, exec into it to verify that data is persisted

[root@k8s-master rbd-provisioner-yaml]# kubectl exec -it rbd-sc-pvc-pod -- bash
root@rbd-sc-pvc-pod:/# df -hT /var/www/html
Filesystem     Type  Size  Used Avail Use% Mounted on
/dev/rbd1      ext4  976M  2.6M  958M   1% /var/www/html
root@rbd-sc-pvc-pod:/# cd /var/www/html
root@rbd-sc-pvc-pod:/var/www/html# touch file{1..10}.txt
root@rbd-sc-pvc-pod:/var/www/html# ls
file1.txt  file10.txt  file2.txt  file3.txt  file4.txt  file5.txt  file6.txt  file7.txt  file8.txt  file9.txt  lost+found

7. Use a StatefulSet with the StorageClass to give each Pod its own storage

Create a StatefulSet that uses the StorageClass to give each Pod its own storage. Once it is created, look at the RBD images in the Ceph pool.

7.1. Create a StatefulSet

1) Write the manifest

Declare ReadWriteOnce (read-write by a single node) as the access mode of the PVCs created through the StorageClass.

[root@k8s-master rbd-provisioner-yaml]# vim rbd-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx"
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.15
        volumeMounts:
        - name: web-data
          mountPath: /var/www/html
  volumeClaimTemplates:
  - metadata:
      name: web-data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "rbd-storageclass"   # use the StorageClass
      resources:
        requests:
          storage: 1Gi

2) Create it and check its status

[root@k8s-master rbd-provisioner-yaml]# kubectl apply -f rbd-statefulset.yaml
statefulset.apps/nginx created
[root@k8s-master rbd-provisioner-yaml]# kubectl get pod,statefulset
NAME                 READY   STATUS    RESTARTS   AGE
pod/rbd-sc-pvc-pod   1/1     Running   0          8m29s
pod/nginx-0          1/1     Running   0          2m40s
pod/nginx-1          1/1     Running   0          2m21s
pod/nginx-2          1/1     Running   0          2m1s

NAME                     READY   AGE
statefulset.apps/nginx   3/3     2m40s

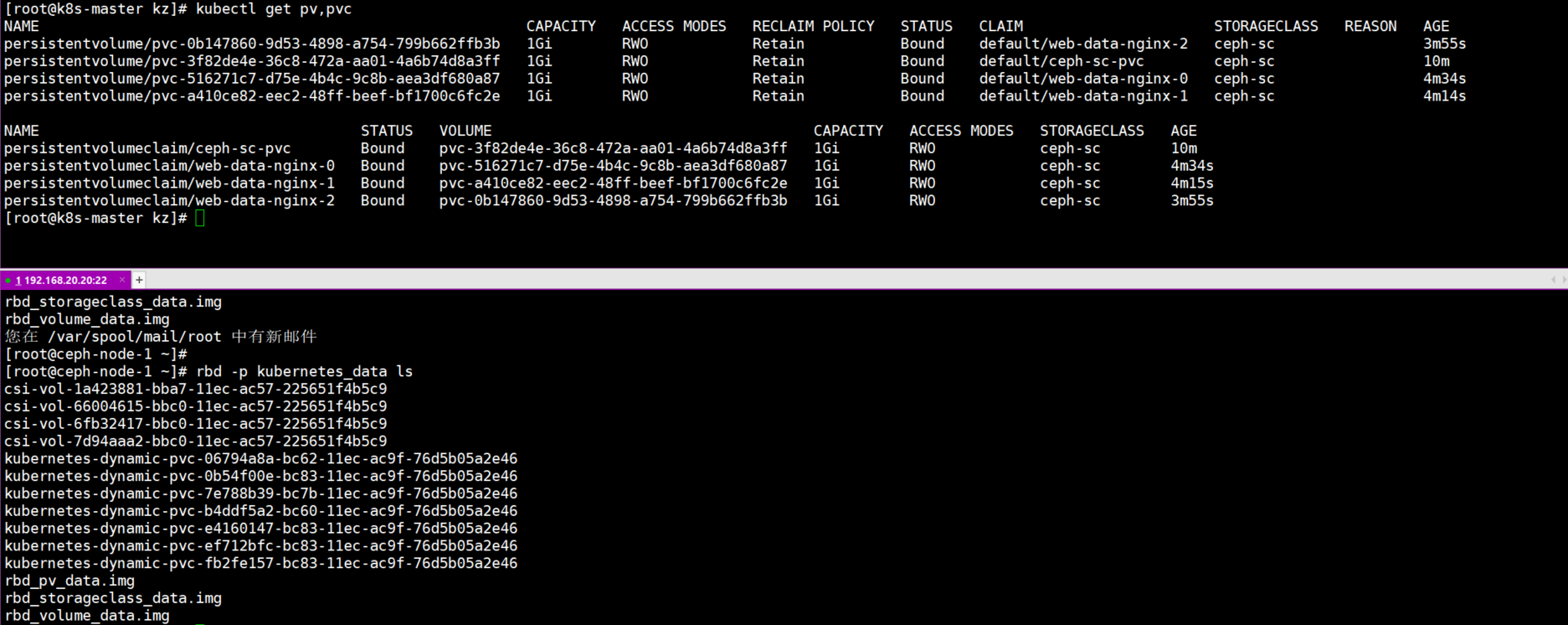

7.2. Check the PVs, PVCs and RBD images

The PVs, PVCs and RBD images correspond one-to-one.
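StatefulSet PVC names are predictable. Each claim is named <volumeClaimTemplate name>-<StatefulSet name>-<ordinal>, so this StatefulSet should produce web-data-nginx-0 through web-data-nginx-2. The small sketch below prints the expected names. statefulset_pvc_names is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: print the PVC names a StatefulSet creates from one
# volumeClaimTemplate: <template>-<statefulset>-<ordinal>, ordinal 0..replicas-1.
#   $1 = template name, $2 = StatefulSet name, $3 = replicas
statefulset_pvc_names() {
    i=0
    while [ "$i" -lt "$3" ]; do
        echo "$1-$2-$i"
        i=$((i + 1))
    done
}
```

For example: for pvc in $(statefulset_pvc_names web-data nginx 3); do kubectl get pvc "$pvc"; done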

8. Troubleshooting the external rbd-provisioner

8.1. Error 1: executable file not found

The error reads:

Events:
  Type     Reason              Age  From                         Message
  ----     ------              ---- ----                         -------
  Warning  ProvisioningFailed  4s   persistentvolume-controller  Failed to provision volume with StorageClass "rbd-storageclass": failed to create rbd image: executable file not found in $PATH, command output:

Solution: deploy the external rbd-provisioner driver.

If the error persists after deploying the external rbd-provisioner, check whether the StorageClass's provisioner field is set to the rbd-provisioner driver's name.

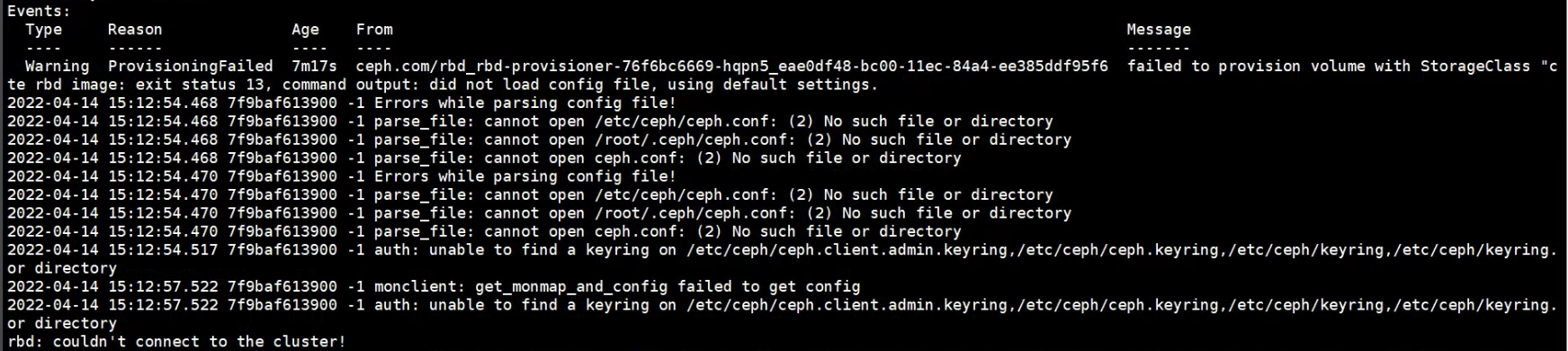

8.2. Error 2: the PVC events report that the Ceph configuration file cannot be found and the cluster cannot be reached

The error reads:

Events:
  Type     Reason              Age    From                                                                                 Message
  ----     ------              ----   ----                                                                                 -------
  Warning  ProvisioningFailed  7m17s  ceph.com/rbd_rbd-provisioner-76f6bc6669-hqpn5_eae0df48-bc00-11ec-84a4-ee385ddf95f6  Failed to provision volume with StorageClass "cete rbd image: exit status 13, command output: did not load config file, using default settings.
2022-04-14 15:12:54.468 7f9baf613900 -1 errors while parsing config file!
2022-04-14 15:12:54.468 7f9baf613900 -1 parse_file: cannot open /etc/ceph/ceph.conf: (2) No such file or directory
2022-04-14 15:12:54.468 7f9baf613900 -1 parse_file: cannot open /root/.ceph/ceph.conf: (2) No such file or directory
2022-04-14 15:12:54.468 7f9baf613900 -1 parse_file: cannot open ceph.conf: (2) No such file or directory
2022-04-14 15:12:54.470 7f9baf613900 -1 errors while parsing config file!
2022-04-14 15:12:54.470 7f9baf613900 -1 parse_file: cannot open /etc/ceph/ceph.conf: (2) No such file or directory
2022-04-14 15:12:54.470 7f9baf613900 -1 parse_file: cannot open /root/.ceph/ceph.conf: (2) No such file or directory
2022-04-14 15:12:54.470 7f9baf613900 -1 parse_file: cannot open ceph.conf: (2) No such file or directory
2022-04-14 15:12:54.517 7f9baf613900 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bor directory
2022-04-14 15:12:57.522 7f9baf613900 -1 monclient: get_monmap_and_config failed to get config
2022-04-14 15:12:57.522 7f9baf613900 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bor directory
rbd: couldn't connect to the cluster!

Solution: copy the Ceph cluster's ceph.client.admin.keyring and ceph.conf files to every k8s node.

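The files may already be on every node and the problem still remains. In that case it is probably not the nodes that need these files but the rbd-provisioner container. Copying the files into the pod with kubectl cp fixes the error. However, pods get deleted and recreated, and copying the files by hand every time is not practical.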

The final solution is to add a hostPath volume to the rbd-provisioner Deployment manifest that mounts the node's /etc/ceph directory into the pod:

spec:
  containers:
  - name: rbd-provisioner
    image: "quay.io/external_storage/rbd-provisioner:latest"
    volumeMounts:
    - name: ceph-conf
      mountPath: /etc/ceph
    env:
    - name: PROVISIONER_NAME
      value: ceph.com/rbd
  serviceAccount: rbd-provisioner
  volumes:
  - name: ceph-conf
    hostPath:
      path: /etc/ceph
  tolerations:
  - key: "node-role.kubernetes.io/master"
    operator: "Exists"
    effect: "NoSchedule"

8.3. Error 3: rbd-provisioner cannot read the Secret

The error reads:

Events:
  Type     Reason                Age               From                                                                                 Message
  ----     ------                ----              ----                                                                                 -------
  Normal   Provisioning          8s (x2 over 23s)  ceph.com/rbd_rbd-provisioner-76f6bc6669-mz9wb_84f6f514-bc00-11ec-894b-fe2157f7b168  External provisioner is provisioning volume for claim "default/rbd-sc-pvc"
  Warning  ProvisioningFailed    8s (x2 over 23s)  ceph.com/rbd_rbd-provisioner-76f6bc6669-mz9wb_84f6f514-bc00-11ec-894b-fe2157f7b168  Failed to provision volume with StorageClass "rbd-storageclass": failed to get admin secret from ["default"/"rbd-secret"]: secrets "rbd-secret" is forbidden: User "system:serviceaccount:kube-system:rbd-provisioner" cannot get resource "secrets" in API group "" in the namespace "default"
  Normal   ExternalProvisioning  1s (x4 over 23s)  persistentvolume-controller                                                          waiting for a volume to be created, either by external provisioner "ceph.com/rbd" or manually created by system administrator

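This is an RBAC problem. rbd-provisioner runs in the kube-system namespace while the Secret is in the default namespace, and its service account is not allowed to read Secrets there.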

Solution: add the following rules to the rbd-provisioner ClusterRole manifest.

  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
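After changing the ClusterRole, you can check the permission directly with kubectl auth can-i, impersonating the provisioner's service account. Below is a minimal wrapper. sa_can is a hypothetical name, and it assumes a kubeconfig that is allowed to impersonate, such as the admin one on the master.

```shell
#!/bin/sh
# Hypothetical wrapper around `kubectl auth can-i` that impersonates a
# service account. Prints yes/no; exit status 0 means the action is allowed.
#   $1 = SA namespace, $2 = SA name, $3 = verb, $4 = resource, $5 = target namespace
sa_can() {
    kubectl auth can-i "$3" "$4" -n "$5" \
        --as="system:serviceaccount:$1:$2"
}
```

sa_can kube-system rbd-provisioner get secrets default should print yes once the rules above are applied. Before the change it prints no, matching the forbidden error above.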

8.4. Error 4: rbd-provisioner fails to get the cluster configuration

The error reads:

Events:
  Type     Reason                Age               From                                                                                 Message
  ----     ------                ----              ----                                                                                 -------
  Normal   Provisioning          17s               ceph.com/rbd_rbd-provisioner-596454b778-vtvp2_cfe5a372-bc89-11ec-8245-dec0ad1cd55d  External provisioner is provisioning volume for claim "default/rbd-sc-pvc"
  Warning  ProvisioningFailed    14s               ceph.com/rbd_rbd-provisioner-596454b778-vtvp2_cfe5a372-bc89-11ec-8245-dec0ad1cd55d  Failed to provision volume with StorageClass "rbd-storageclass": failed to create rbd image: exit status 13, command output: 2022-04-15 07:28:08.232 7f5c3ef0c900 -1 monclient: get_monmap_and_config failed to get config
rbd: couldn't connect to the cluster!
  Normal   ExternalProvisioning  3s (x2 over 17s)  persistentvolume-controller                                                          waiting for a volume to be created, either by external provisioner "ceph.com/rbd" or manually created by system administrator

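The provisioner fails to get the cluster configuration even though all the configuration files are in place, which in theory should not happen. When you hit this, first check the file permissions: running chmod +r ceph.client.admin.keyring makes the keyring readable.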

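If the problem persists, the most likely cause is that the Ceph tools in the rbd-provisioner container are a different version from the Ceph cluster. In that case, upgrading the Ceph tools in the container fixes it.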

1) Check the Ceph version on the host

[root@ceph-node-1 ~]# ceph -v

ceph version 14.2.22 (ca74598065096e6fcbd8433c8779a2be0c889351) nautilus (stable)

2) Check the Ceph version in the rbd-provisioner container

[root@k8s-master rbd-provisioner]# kubectl exec -it -n kube-system rbd-provisioner-596454b778-8p648 -- bash

[root@rbd-provisioner-596454b778-8p648 /]# ceph -v

ceph version v13.2.1 (564bdc4ae87418a232fc901524470e1a0f76d641) nautilus (stable)

3) Upgrade the Ceph tools in the rbd-provisioner container

1. Open a shell in the rbd-provisioner container

[root@k8s-master rbd-provisioner]# kubectl exec -it -n kube-system rbd-provisioner-596454b778-8p648 -- bash

2. Set up a Ceph yum repository

[root@rbd-provisioner-596454b778-8p648 /]# vim /etc/yum.repos.d/ceph.repo

[noarch]
name=noarch
baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/
enabled=1
gpgcheck=0

[x86_64]
name=x86_64
baseurl=https://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64/
enabled=1
gpgcheck=0

3. Clear and rebuild the yum cache

[root@rbd-provisioner-596454b778-8p648 /]# yum clean all

[root@rbd-provisioner-596454b778-8p648 /]# yum makecache

4. Upgrade the Ceph packages

[root@rbd-provisioner-596454b778-8p648 /]# yum -y update
