一、环境准备与规划

1.1 集群架构

- 节点1 (

192.168.65.137): 初始主节点,同时部署 mysql router - 节点2 (

192.168.65.142): 从节点 - 节点3 (

192.168.65.143): 从节点 - 关键软件:mysql server 8.0.44, mysql shell 8.0.37, mysql router 8.0.37

重要提示:请将下文所有配置中的示例ip替换为您服务器的实际ip地址。

1.2 前置准备

- 下载所需软件包至所有节点的

/opt/packages/目录。 - (可选)为离线环境准备依赖包:

dnf reinstall --downloadonly --downloaddir=./deps \ libaio numactl-libs openssl libtirpc ncurses-compat-libs \ libstdc++ libgcc pcre2 libedit numactl

二、基础环境与mysql安装(所有节点)

在所有节点上执行以下整合脚本。

#!/bin/bash

# ========== mysql 基础安装脚本 (all nodes) ==========

# 1. 创建用户和目录

groupadd mysql

useradd -r -g mysql -s /bin/false mysql

mkdir -p /data1/mysql/{data,logs,tmp}

chown -r mysql:mysql /data1/mysql

# 关闭selinux

sudo sed -i 's/selinux=enforcing/selinux=disabled/g' /etc/selinux/config

sudo reboot

# 2. 安装依赖(在线优先,离线备用)

echo “正在安装系统依赖...”

dnf install -y libaio numactl-libs openssl libtirpc ncurses-compat-libs 2>/dev/null || {

echo “在线安装失败,尝试使用离线依赖包...”

rpm -ivh ./deps/*.rpm --nodeps 2>/dev/null || true

}

# 3. 解压并安装 mysql

echo “正在安装 mysql...”

tar -xf /data1/mysql-8.0.44-linux-glibc2.28-x86_64.tar.xz

mv /data1/mysql-8.0.44-linux-glibc2.28-x86_64/* /data1/mysql/

# 4. 创建软链接

ln -sf /data1/mysql/bin/mysql /usr/local/bin/mysql

ln -sf /data1/mysql/bin/mysqld /usr/local/bin/mysqld

ln -sf /data1/mysql/bin/mysqld_safe /usr/local/bin/mysqld_safe

# 5. 创建主配置文件 /etc/my.cnf

# 注意:初始时 plugin-load 行被注释,将在动态配置后启用。

cat > /etc/my.cnf << 'eof'

[mysqld]

basedir=/data1/mysql

datadir=/data1/mysql/data

socket=/data1/mysql/mysql.sock

port=3306

character-set-server=utf8mb4

collation-server=utf8mb4_unicode_ci

#default_authentication_plugin=mysql_native_password

max_connections=1000

max_connect_errors=100000

table_open_cache=2000

thread_cache_size=100

tmp_table_size=64m

max_heap_table_size=64m

log-error=/data1/mysql/logs/mysql-error.log

slow_query_log=1

slow_query_log_file=/data1/mysql/logs/mysql-slow.log

long_query_time=2

log-bin=/data1/mysql/logs/mysql-bin

binlog_format=row

binlog_expire_logs_seconds=604800

innodb_buffer_pool_size=1g

innodb_log_file_size=256m

innodb_flush_method=o_direct

innodb_flush_log_at_trx_commit=1

innodb_file_per_table=1

server_id=1

report_host=192.168.65.137

gtid_mode=on

enforce_gtid_consistency=on

binlog_checksum=none

log_slave_updates=on

binlog_row_image=full

#初始注释,步骤3.2中启用

#plugin-load="group_replication.so"

disabled_storage_engines="myisam,blackhole,federated,archive,memory"

#ssl_ca=/etc/mysql/ca.pem

#ssl_cert=/etc/mysql/server-cert.pem

#ssl_key=/etc/mysql/server-key.pem

#require_secure_transport=on

[mysql]

default-character-set=utf8mb4

socket=/data1/mysql/mysql.sock

[client]

socket=/data1/mysql/mysql.sock

eof

# 6. 初始化 mysql (使用 --initialize-insecure)

/data1/mysql/bin/mysqld --defaults-file=/etc/my.cnf \

--initialize-insecure --user=mysql \

--basedir=/data1/mysql --datadir=/data1/mysql/data

# 7. 配置并启动 systemd 服务

cat > /etc/systemd/system/mysqld.service << 'eof'

[unit]

description=mysql server

after=network.target

[service]

type=simple

user=mysql

group=mysql

execstart=/usr/local/bin/mysqld --defaults-file=/etc/my.cnf

limitnofile=65535

restart=on-failure

restartsec=10

timeoutsec=300

[install]

wantedby=multi-user.target

eof

systemctl daemon-reload

systemctl start mysqld

systemctl enable mysqld

三、配置 group replication 集群

3.1 【关键步骤】所有节点:动态配置插件与参数

在每个节点上执行以下sql。执行前,请确保 /etc/my.cnf 中 plugin-load 行已被注释。

mysql -uroot << 'eof' -- 1. 安装插件 install plugin group_replication soname 'group_replication.so'; -- 2. 设置全局变量 (请替换ip为当前节点实际ip) set global group_replication_group_name = 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa'; set global group_replication_local_address = '192.168.65.137:33061'; set global group_replication_group_seeds = '192.168.65.137:33061,192.168.65.142:33061,192.168.65.143:33061'; set global group_replication_ip_allowlist = '10.0.1.0/24,127.0.0.1/32,localhost'; set global group_replication_bootstrap_group = off; set global group_replication_single_primary_mode = on; -- 3. 使用 persist 使配置持久化(重启后生效) set persist group_replication_group_name = 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa'; set persist group_replication_local_address = '192.168.65.137:33061'; set persist group_replication_group_seeds = '192.168.65.137:33061,192.168.65.142:33061,192.168.65.143:33061'; set persist group_replication_ip_allowlist = '10.0.1.0/24,127.0.0.1/32,localhost'; eof

3.2 【关键步骤】所有节点:持久化插件配置

动态配置验证无误后,使插件配置永久生效:

# 1. 编辑配置文件,取消 plugin-load 行的注释 sed -i 's/^#plugin-load=“group_replication.so”/plugin-load=“group_replication.so”/' /etc/my.cnf # 2. 重启mysql服务 systemctl restart mysqld

3.3 【主节点】引导集群

在初始主节点 (192.168.65.137) 执行:

# 1. 设置管理用户和权限(同您提供的命令) mysql -uroot << 'eof' alter user 'root'@'localhost' identified by 'mysql#password'; create user 'repl'@'%' identified by 'mysql#password'; grant replication slave on *.* to 'repl'@'%'; create user 'clusteradmin'@'%' identified by 'mysql#password'; grant all privileges on *.* to 'clusteradmin'@'%' with grant option; grant group_replication_admin on *.* to 'clusteradmin'@'%'; flush privileges; eof #允许任何ip登录 mysql -uroot -p'mysql#password' -h127.0.0.1 -p3306 -e "create user if not exists 'root'@'%' identified by 'mysql#password';grant all privileges on *.* to 'root'@'%' with grant option;flush privileges;" # 2. 引导并启动组复制 mysql -uroot -pmysql#password << 'eof' set global group_replication_bootstrap_group=on; change master to master_user='repl', master_password='mysql#password' for channel 'group_replication_recovery'; start group_replication; set global group_replication_bootstrap_group=off; eof

3.4 【从节点】加入集群

在其他节点上执行:

mysql -uroot -pmysql#password << 'eof' change master to master_user='repl', master_password='mysql#password' for channel 'group_replication_recovery'; start group_replication; eof

四、防火墙配置(所有节点)

# 1. 开放mysql及组复制通信端口

firewall-cmd --permanent --add-port=3306/tcp

firewall-cmd --permanent --add-port=33061/tcp

# 2. (可选)在主节点开放mysql router端口

# 判断如果是主节点(例如通过ip判断),则开放router端口

if [[ “$(hostname -i)“ =~ 192.168.65.137 ]]; then

firewall-cmd --permanent --add-port=6446/tcp

firewall-cmd --permanent --add-port=6447/tcp

fi

# 3. 重新加载配置

firewall-cmd --reload

# 4. 验证结果

firewall-cmd --list-ports

五、部署 mysql router(主节点)

在 192.168.65.137 上执行以下整合脚本:

#!/bin/bash # ========== mysql router 部署脚本 ========== # 1. 创建系统用户 groupadd mysqlrouter useradd -r -g mysqlrouter -s /bin/false mysqlrouter # 2. 解压软件 tar -xf mysql-router-8.0.37-linux-glibc2.17-x86_64.tar.gz -c /tmp mv /tmp/mysql-router-8.0.37-linux-glibc2.17-x86_64 /data1/mysql-router # 3. 创建软链接 ln -sf /data1/mysql-router/bin/mysqlrouter /usr/bin/mysqlrouter # 4. 创建目录与配置文件 mkdir -p /etc/mysqlrouter #引导生成配置文件 mysqlrouter --user=mysqlrouter --bootstrap root@192.168.65.137:3306 --directory /etc/mysqlrouter # 5. 设置权限 chown -r mysqlrouter:mysqlrouter /etc/mysqlrouter /data1/mysql-router # 6. 创建并启动系统服务 cat > /etc/systemd/system/mysqlrouter.service << 'eof' [unit] description=mysql router after=network.target mysqld.service wants=network.target mysqld.service documentation=man:mysqlrouter [service] type=forking execstart=/etc/mysqlrouter/start.sh workingdirectory=/etc/mysqlrouter user=mysqlrouter group=mysqlrouter pidfile=/etc/mysqlrouter/mysqlrouter.pid environment="path=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin" environment="router_pid=/etc/mysqlrouter/mysqlrouter.pid" restart=on-failure restartsec=5 startlimitinterval=100 startlimitburst=10 timeoutstartsec=300 timeoutstopsec=30 killsignal=sigterm killmode=mixed [install] wantedby=multi-user.target eof systemctl daemon-reload && systemctl start mysqlrouter && systemctl status mysqlrouter echo “mysql router 部署完成!”

六、mysql shell 安装和配置(主节点)

6.1 安装 mysql shell

# 1. 解压安装包 tar -xf mysql-shell-8.0.37-linux-glibc2.17-x86_64.tar.gz mv mysql-shell-8.0.37-linux-glibc2.17-x86_64 /data1/mysql-shell # 2. 创建软链接 ln -sf /data1/mysql-shell/bin/mysqlsh /usr/local/bin/mysqlsh ln -sf /data1/mysql-shell/bin/mysqlsh /usr/bin/mysqlsh # 3. 验证安装 mysqlsh --version

6.2 创建 innodb cluster(可选)

方式一:接管现有 group replication(推荐)

cat > /tmp/mysql_script.js << 'eof'

try {

print("连接到主节点...");

shell.connect('clusteradmin:mysql%23password@192.168.65.137:3306');

print("接管现有的 group replication 为 innodb cluster...");

var cluster = dba.createcluster('mycluster', {adoptfromgr: true});

print("集群接管完成!");

print("集群状态:");

cluster.status();

} catch(e) {

print("错误: " + e.message);

print("详细信息: " + json.stringify(e));

}

eof

mysqlsh --js -f /tmp/mysql_script.js

方式二:创建新集群

cat > /tmp/check_node.js << 'eof'

// 定义集群节点

var instances = [

{host: '192.168.65.137', port: 3306, label: '节点1'},

{host: '192.168.65.142', port: 3306, label: '节点2'},

{host: '192.168.65.143', port: 3306, label: '节点3'}

];

var username = 'clusteradmin';

var password = 'mysql#password';

// 配置实例

print("\n配置实例...");

for (var i = 0; i < instances.length; i++) {

var instance = instances[i];

print("\n配置节点 " + instance.label + " (" + instance.host + ":" + instance.port + ")...");

var primarysession = shell.connect({

host: instance.host,

port: instance.port,

user: username,

password: password

});

if (!primarysession) {

throw new error("无法连接到节点");

}

print("✓ 成功连接到节点");

print("\n2. 检查节点配置...");

var checkresult = dba.checkinstanceconfiguration({

host: instance.host,

port: instance.port,

user: username,

password: password

});

print("配置检查结果状态: " + checkresult.status);

if (checkresult.status === 'ok') {

print("\n✓ 节点配置正常");

} else if (checkresult.status === 'error') {

print("\n✗ 节点配置有错误,需要修复");

print("\n错误信息: " + checkresult.errors);

print("\n正在自动配置节点...");

var configureresult = dba.configureinstance({

host: instance.host,

port: instance.port,

user: username,

password: password

}, {restart: true});

print("\n配置结果: " + json.stringify(configureresult, null, 2));

if (configureresult.status === 'ok') {

print("\n✓ 节点配置成功");

// 重新连接

primarysession = shell.connect({

host: instance.host,

port: instance.port,

user: username,

password: password

});

} else {

throw new error("节点配置失败: " + configureresult.errors);

}

}

}

eof

mysqlsh --js -f /tmp/check_node.js

cat > /tmp/create_cluster.js << 'eof'

// 定义集群节点

var instances = [

{host: '192.168.65.137', port: 3306, label: '节点1'},

{host: '192.168.65.142', port: 3306, label: '节点2'},

{host: '192.168.65.143', port: 3306, label: '节点3'}

];

var username = 'clusteradmin';

var password = 'mysql#password';

print("\n创建集群...");

var clustername = 'mycluster';

print("\n正在创建集群: " + clustername);

try {

print("连接到主节点: " + instances[0].label);

var primarysession = shell.connect({

host: instances[0].host,

port: instances[0].port,

user: username,

password: password,

scheme: 'mysql'

});

if (!primarysession) {

throw new error("无法连接到主节点");

}

var cluster = dba.createcluster(clustername);

if (cluster) {

print("✓ 集群创建成功");

print("\n集群名称: " + clustername);

print("\n主节点: " + instances[0].host + ":" + instances[0].port);

// 检查集群状态

print("\n集群初始状态:");

var clusterstatus = cluster.status({extended: 0});

print(json.stringify(clusterstatus, null, 2));

} else {

throw new error("集群创建失败");

}

print("\n添加其他实例到集群...");

for (var i = 1; i < instances.length; i++) {

var instance = instances[i];

print("\n添加 " + instance.label + " (" + instance.host + ":" + instance.port + ") 到集群...");

try {

// 尝试添加实例

var addresult = cluster.addinstance({

host: instance.host,

port: instance.port,

user: username,

password: password

}, {

recoverymethod: 'clone'

});

print("\n ✓ " + instance.label + " 添加成功");

print("\n 恢复方法: clone");

} catch (adderr) {

print("\n ✗ 添加 " + instance.label + " 失败: " + adderr.message);

}

}

print("\n集群最终状态:");

var finalstatus = cluster.status({extended: 1});

print("\n集群成员:");

print(json.stringify(finalstatus, null, 2));

print("\n设置集群选项...");

try {

cluster.setoption("exitstateaction", "offline_mode");

cluster.setoption("memberweight", 50);

cluster.setoption("consistency", "before_on_primary_failover");

cluster.setoption("ipallowlist", "192.168.65.0/24,127.0.0.1");

cluster.setoption("failoverconsistency", "eventual");

print("✓ 集群选项设置完成");

} catch (optionerr) {

print("⚠ 设置集群选项时出错: " + optionerr.message);

}

} catch (e) {

print("\n✗ 错误发生: " + e.message);

print("错误堆栈: " + e.stack);

// 尝试获取更多错误信息

if (e.code) {

print("错误代码: " + e.code);

}

// 检查集群是否存在

try {

var existingcluster = dba.getcluster(clustername);

if (existingcluster) {

print("\n⚠ 集群已存在,当前状态:");

print(json.stringify(existingcluster.status({extended: 0}), null, 2));

}

} catch (clustererr) {

// 忽略

}

}

eof

mysqlsh --js -f /tmp/create_cluster.js

cat > /tmp/check_cluster.js << 'eof'

// 定义集群节点

var instances = [

{host: '192.168.65.137', port: 3306, label: '节点1'},

{host: '192.168.65.142', port: 3306, label: '节点2'},

{host: '192.168.65.143', port: 3306, label: '节点3'}

];

var username = 'clusteradmin';

var password = 'mysql#password';

var clustername = 'mycluster';

try {

print("验证集群...\n");

var clustervalid = dba.getcluster(clustername);

if (clustervalid) {

print("✓ 集群验证成功\n");

print("集群对象有效,可以使用以下命令管理:\n");

print(" var cluster = dba.getcluster('" + clustername + "');\n");

print(" cluster.status();\n");

print(" cluster.describe();\n");

}

print("\n=== mysql innodb cluster 创建完成 ===");

print("\n管理命令:");

print("1. 查看集群状态: cluster.status()\n");

print("2. 查看集群描述: cluster.describe()\n");

print("3. 切换主节点: cluster.setprimaryinstance('host:port')\n");

print("4. 重新扫描集群: cluster.rescan()\n");

print("5. 移除实例: cluster.removeinstance('host:port')\n");

} catch (e) {

print("\n✗ 错误发生: " + e.message);

print("错误堆栈: " + e.stack);

// 尝试获取更多错误信息

if (e.code) {

print("错误代码: " + e.code);

}

// 检查集群是否存在

try {

var existingcluster = dba.getcluster(clustername);

if (existingcluster) {

print("\n⚠ 集群已存在,当前状态:");

print(json.stringify(existingcluster.status({extended: 0}), null, 2));

}

} catch (clustererr) {

// 忽略

}

}

eof

mysqlsh --js -f /tmp/check_cluster.js

七、集群验证与基础监控

7.1 基础验证命令

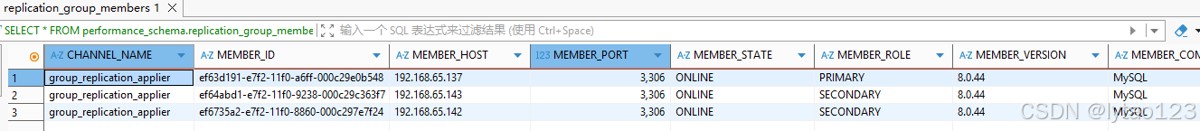

# 1. 检查集群状态

mysql -uclusteradmin -p'mysql#password' -h192.168.65.137 -e “

select member_host, member_port, member_state, member_role

from performance_schema.replication_group_members\g”

# 2. 测试router连接

mysql -uclusteradmin -p'mysql#password' -h192.168.65.137 -p6446 -e “select @@hostname;”

mysql -uclusteradmin -p'mysql#password' -h192.168.65.137 -p6447 -e “select @@hostname;”

# 3.1 测试同步,主节点执行

mysql -uclusteradmin -pmysql#password -h192.168.65.137 << eof

create database if not exists cluster_test;

use cluster_test;

create table if not exists test_sync (

id int auto_increment primary key,

node_name varchar(50),

created_at timestamp default current_timestamp

);

insert into test_sync (node_name) values ('from_node1');

select * from test_sync;

eof

# 3.2 每个从节点看是否有数据

mysql -uclusteradmin -pmysql#password -h192.168.65.142 -e "select * from cluster_test.test_sync;"

mysql -uclusteradmin -pmysql#password -h192.168.65.143 -e "select * from cluster_test.test_sync;"

7.2 日常监控命令

# 查看集群成员状态 mysql -uroot -p'mysql#password' -e “select * from performance_schema.replication_group_members;” # 查看复制延迟 mysql -uroot -p'mysql#password' -e “select * from performance_schema.replication_group_member_stats\g” # 检查错误日志 tail -50 /data1/mysql/logs/mysql-error.log

7.3 故障转移测试

# 模拟主节点故障 # 1. 停止主节点的mysql服务 systemctl stop mysqld # 2. 观察集群自动选举新主节点 mysql -uroot -pmysql#password -h192.168.65.142 -e "select * from performance_schema.replication_group_members;" # 3. 通过router测试连接 mysql -uroot -pmysql#password -h192.168.65.137 -p6446 -e "select @@hostname;" # 4. 恢复原主节点 systemctl start mysqld

八、常见问题与解决方案

8.1 mysql 8.0 单节点安装和初始化问题

问题1:依赖库缺失问题(openeuler 23.03 系统)

问题现象

error while loading shared libraries: 1. libaio.so.1: cannot open shared object file 2. libnuma.so.1: cannot open shared object file

解决方案

# openeuler 23.03 系统 sudo dnf install libaio libaio-devel numactl numactl-devel openssl openssl-devel # 安装后运行 sudo ldconfig # 验证依赖库 ldd /data1/mysql/bin/mysqld | grep -e "libaio|libnuma"

问题2:systemd 服务启动失败 (203/exec)

问题现象

process: 30526 execstart=/usr/local/mysql/bin/mysqld --defaults-file=/etc/my.cnf (code=exited, status=203/exec)

解决方案

# 1. 检查 mysqld 路径是否正确 ls -la /data1/mysql/bin/mysqld # 2. 修正 systemd 服务文件(已在上面配置中修正) # 3. 设置正确权限 sudo chmod 755 /data1/mysql/bin/mysqld # 4. 重新加载 systemd sudo systemctl daemon-reload sudo systemctl restart mysql

8.2 group replication 集群问题

问题1:节点状态为 recovering 是否正常?

问题现象

channel_name member_id member_host member_port member_state member_role group_replication_applier 113b5a00-d7fa-11f0-b01c-fa163e5fc9a1 192.168.65.137 3306 online primary group_replication_applier 11476a61-d7fa-11f0-8f8b-fa163ed8b49b 192.168.65.142 3306 recovering secondary group_replication_applier 115ee1ec-d7fa-11f0-9029-fa163e2d65c6 192.168.65.143 3306 recovering secondary

解答与解决方案

这是正常的! recovering 状态表示节点正在从主节点同步数据。

解决步骤:

# 1. 查看恢复进度

mysql -h192.168.65.142 -uroot -pmysql#password -e "

select

channel_name,

service_state,

remaining_delay,

count_transactions_retries

from performance_schema.replication_connection_status

where channel_name = 'group_replication_applier';

"

# 2. 查看恢复错误(如果有)

mysql -h192.168.65.142 -uroot -pmysql#password -e "

select

channel_name,

last_error_number,

last_error_message,

last_error_timestamp

from performance_schema.replication_applier_status_by_worker

where last_error_number != 0;

"

# 3. 查看恢复线程状态

mysql -h192.168.65.142 -uroot -pmysql#password -e "show processlist;" | grep -i recover

# 4. 如果长时间处于 recovering(超过30分钟),尝试:

mysql -h192.168.65.142 -uroot -pmysql#password << 'eof'

stop group_replication;

start group_replication;

eof

问题2:本地事务多于组事务错误

问题现象

[error] [my-011526] [repl] plugin group_replication reported: 'this member has more executed transactions than those present in the group. local transactions: 11476a61-d7fa-11f0-8f8b-fa163ed8b49b:1-11 > group transactions: 113b5a00-d7fa-11f0-b01c-fa163e5fc9a1:1-11, aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:1' [error] [my-011522] [repl] plugin group_replication reported: 'the member contains transactions not present in the group. the member will now exit the group.'

解决方案

mysql -uroot -pmysql#password << 'eof' -- 1. 停止组复制 stop group_replication; -- 2. 临时禁用只读模式 set global read_only = off; set global super_read_only = off; -- 3. 跳过问题事务 set gtid_next='113b5a00-d7fa-11f0-b01c-fa163e5fc9a1:7'; begin; commit; set gtid_next='automatic'; -- 4. 重新启动组复制(会自动设置回只读模式) start group_replication; -- 5. 检查状态 select * from performance_schema.replication_group_members; eof

问题3:ip地址不在白名单中

问题现象

[warning] [my-011735] [repl] plugin group_replication reported: '[gcs] connection attempt from ip address ::ffff:192.168.65.142 refused. address is not in the ip allowlist.' [error] [my-011735] [repl] plugin group_replication reported: '[gcs] error on opening a connection to peer node 192.168.65.143:33061 when joining a group. my local port is: 33061.'

解决方案

# 在所有节点上执行(需要持久化配置) mysql -uroot -pmysql#password << 'eof' -- 查看当前的ip白名单 show variables like 'group_replication_ip_allowlist'; -- 持久化添加ip地址到白名单(使用 set persist) set persist group_replication_ip_allowlist = '10.0.1.0/24,127.0.0.1/32,localhost'; -- 或者允许所有ip(仅测试环境) -- set persist group_replication_ip_allowlist = '0.0.0.0/0'; -- 验证持久化设置 select * from performance_schema.persisted_variables where variable_name = 'group_replication_ip_allowlist'; -- 重启组复制使设置生效 stop group_replication; start group_replication; eof # 验证配置是否已持久化 mysql -uroot -pmysql#password -e "show variables like 'group_replication_ip_allowlist';"

问题4:没有本地ip地址匹配配置

问题现象

[error] [my-011735] [repl] plugin group_replication reported: '[gcs] there is no local ip address matching the one configured for the local node (192.168.65.137:33061).'

解决方案

# 1. 查看服务器ip地址 ip addr show hostname -i # 2. 修改group replication本地地址配置(使用持久化设置) mysql -uroot -pmysql#password << 'eof' -- 查看当前配置 show variables like 'group_replication_local_address'; -- 持久化修改为正确的ip地址(使用实际ip) set persist group_replication_local_address = '192.168.65.137:33061'; -- 检查持久化变量 select * from performance_schema.persisted_variables where variable_name like 'group_replication%'; eof # 3. 重启mysql服务使持久化配置生效 sudo systemctl restart mysqld # 4. 重启组复制 mysql -uroot -pmysql#password << 'eof' stop group_replication; start group_replication; eof

问题5:33061端口未被监听

问题现象

加载完group_replication.so插件后,33061端口还是没有被监听。

解决方案

# 1. 检查插件是否正确加载 mysql -uroot -pmysql#password -e "show plugins;" | grep group_replication # 2. 检查group replication是否已启动 mysql -uroot -pmysql#password -e "show status like 'group_replication%';" # 3. 如果没有启动,配置并启动(使用持久化设置) mysql -uroot -pmysql#password << 'eof' -- 持久化配置group replication参数 set persist group_replication_group_name = 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa'; set persist group_replication_local_address = '192.168.65.137:33061'; set persist group_replication_group_seeds = '192.168.65.137:33061,192.168.65.142:33061,192.168.65.143:33061'; set persist group_replication_single_primary_mode = on; set persist group_replication_enforce_update_everywhere_checks = off; -- 临时设置引导模式 set global group_replication_bootstrap_group = on; -- 启动group replication(这会创建监听端口) start group_replication; -- 关闭引导模式 set global group_replication_bootstrap_group = off; eof # 4. 验证端口监听 netstat -tlnp | grep 33061 ss -tlnp | grep 33061 # 5. 检查防火墙(openeuler) sudo firewall-cmd --list-ports sudo firewall-cmd --add-port=33061/tcp --permanent sudo firewall-cmd --reload

问题6:初始化组通信层失败

问题现象

the start group_replication command failed as there was an error when initializing the group communication layer

解决方案

# 1. 检查关键配置

mysql -uroot -pmysql#password << 'eof'

-- 检查group replication配置

select

variable_name,

variable_value

from performance_schema.global_variables

where variable_name like 'group_replication%'

order by variable_name;

-- 检查插件状态

select plugin_name, plugin_status

from information_schema.plugins

where plugin_name like '%group_replication%';

eof

# 2. 清理并重新配置(使用持久化设置)

mysql -uroot -pmysql#password << 'eof'

-- 停止组复制

stop group_replication;

-- 重置主节点

reset master;

-- 持久化重新配置

set persist group_replication_group_name = uuid();

set persist group_replication_local_address = '192.168.65.137:33061';

set persist group_replication_group_seeds = '192.168.65.137:33061,192.168.65.142:33061,192.168.65.143:33061';

set persist group_replication_ip_allowlist = '10.0.1.0/24,127.0.0.1/32';

-- 临时设置引导模式

set global group_replication_bootstrap_group = on;

-- 重新启动

start group_replication;

set global group_replication_bootstrap_group = off;

eof

# 3. 重启mysql服务

sudo systemctl restart mysqld

问题7:初始化时未知变量错误

问题现象

[error] [my-000067] [server] unknown variable 'group_replication_group_name=aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa'. [error] [my-013236] [server] the designated data directory /data1/mysql/data/ is unusable.

解决方案

# 1. 正确的初始化命令(不要在初始化时包含运行时参数) sudo -u mysql /data1/mysql/bin/mysqld --initialize \ --user=mysql \ --datadir=/data1/mysql/data \ --basedir=/data1/mysql # 2. 启动mysql后,在配置文件中添加group replication配置 cat >> /etc/my.cnf << 'eof' # group replication 配置 plugin-load-add=group_replication.so group_replication=force_plus_permanent group_replication_start_on_boot=off # 注意:这里只是设置默认值,实际使用set persist # group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa" # group_replication_local_address="192.168.65.137:33061" # group_replication_group_seeds="192.168.65.137:33061,192.168.65.142:33061,192.168.65.143:33061" eof # 3. 启动mysql sudo systemctl restart mysql # 4. 使用set persist动态配置group replication参数 mysql -uroot -pmysql#password << 'eof' -- 安装插件(如果未自动加载) install plugin group_replication soname 'group_replication.so'; -- 使用set persist持久化配置 set persist group_replication_group_name = 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa'; set persist group_replication_local_address = '192.168.65.137:33061'; set persist group_replication_group_seeds = '192.168.65.137:33061,192.168.65.142:33061,192.168.65.143:33061'; eof

问题8:mysql.plugin表不存在,33061端口未被监听

问题现象

mysqld: table 'mysql.plugin' doesn't exist

没有监听到33061端口。

解决方案

# 1. 重新初始化mysql数据目录(注意:会丢失所有数据!) sudo systemctl stop mysql sudo rm -rf /data1/mysql/data/* sudo -u mysql /data1/mysql/bin/mysqld --initialize \ --user=mysql \ --datadir=/data1/mysql/data \ --basedir=/data1/mysql # 2. 获取初始密码 sudo grep 'temporary password' /data1/mysql/data/error.log # 3. 启动mysql并修改密码 sudo systemctl start mysql # 用临时密码登录并修改 mysql -uroot -p临时密码 -e "alter user 'root'@'localhost' identified by 'mysql#password';" # 4. 持久化安装和配置group replication插件 mysql -uroot -pmysql#password << 'eof' -- 安装插件 install plugin group_replication soname 'group_replication.so'; -- 验证插件 select plugin_name, plugin_status from information_schema.plugins where plugin_name = 'group_replication'; -- 持久化配置参数 set persist group_replication_group_name = 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa'; set persist group_replication_local_address = '192.168.65.137:33061'; set persist group_replication_group_seeds = '192.168.65.137:33061,192.168.65.142:33061,192.168.65.143:33061'; set persist group_replication_bootstrap_group = off; eof # 5. 验证33061端口 sleep 5 netstat -tlnp | grep 33061

问题9:group replication函数已存在

问题现象

执行install plugin时提示:

function 'group_replication' already exists

解决方案

# 1. 检查插件是否已安装 mysql -uroot -pmysql#password -e "show plugins;" | grep group_replication # 2. 如果已安装,直接使用已安装的插件继续配置 mysql -uroot -pmysql#password << 'eof' -- 跳过安装,直接配置 set persist group_replication_group_name = uuid(); set persist group_replication_local_address = '192.168.65.137:33061'; set persist group_replication_group_seeds = '192.168.65.137:33061,192.168.65.142:33061,192.168.65.143:33061'; set persist group_replication_ip_allowlist = '10.0.1.0/24,127.0.0.1/32'; -- 临时设置引导模式 set global group_replication_bootstrap_group = on; -- 启动组复制 start group_replication; -- 关闭引导模式 set global group_replication_bootstrap_group = off; eof # 3. 如果需要重新安装(不推荐,除非插件损坏) mysql -uroot -pmysql#password << 'eof' -- 卸载插件 uninstall plugin group_replication; -- 等待几秒 do sleep(2); -- 重新安装 install plugin group_replication soname 'group_replication.so'; eof

8.3 mysql router 配置问题

mysql router 常见配置错误

常见错误

- “no metadata cache” - 配置节名称错误

- “invalid uri” - 缺少协议前缀

- “keyring not found” - 密钥文件问题

正确配置

# 关键:使用 metadata_cache(下划线),不是 metadata-cache [metadata_cache:mycluster] router_id=1 # 关键:添加 mysql:// 协议前缀 bootstrap_server_addresses=mysql://192.168.65.137:3306 user=root password="mysql#password" ttl=5 [routing:rw] bind_address=0.0.0.0 bind_port=6446 destinations=metadata-cache://mycluster/default?role=primary routing_strategy=round-robin protocol=classic [routing:ro] bind_address=0.0.0.0 bind_port=6447 destinations=metadata-cache://mycluster/default?role=secondary routing_strategy=round-robin protocol=classic

mysql router keyring 文件问题

问题现象

error: can't open file '/data1/mysql-router/mysqlrouter.key': no such file or directory

解决方案

# 1. 创建目录

sudo mkdir -p /data1/mysql-router/mysqlrouter

sudo chown -r mysqlrouter:mysqlrouter /data1/mysql-router

# 2. 创建 master key 文件

sudo -u mysqlrouter openssl rand -hex 32 > /data1/mysql-router/mysqlrouter/mysqlrouter.key

sudo chmod 600 /data1/mysql-router/mysqlrouter/mysqlrouter.key

# 3. 创建 keyring 文件

sudo -u mysqlrouter touch /data1/mysql-router/mysqlrouter/keyring

sudo chmod 600 /data1/mysql-router/mysqlrouter/keyring

# 4. 初始化 keyring(如果工具可用)

if command -v mysqlrouter_keyring > /dev/null; then

sudo -u mysqlrouter mysqlrouter_keyring init \

--master-key-file=/data1/mysql-router/mysqlrouter/mysqlrouter.key \

/data1/mysql-router/mysqlrouter/keyring

fi

8.4 常见错误排查和验证

# 1. 检查库依赖 ldd /data1/mysql/bin/mysqld # 2. 检查 systemd 日志 sudo journalctl -u mysql -n 100 --no-pager # 3. 检查 mysql 错误日志 sudo tail -f /data1/mysql/data/error.log # 4. 检查集群状态 mysql -h192.168.65.137 -uroot -pmysql#password -e "select * from performance_schema.replication_group_members;" # 5. 检查 router 状态 sudo systemctl status mysqlrouter # 6. 检查持久化配置 mysql -uroot -pmysql#password -e "select * from performance_schema.persisted_variables;"

九、重要注意事项与生产建议

9.1 成功关键点

- 按顺序执行:严格按照部署顺序,先基础后集群。

- 配置一致性:所有节点的配置文件要保持一致。

- 持久化配置:使用

set persist确保重启后配置生效。 - 网络配置:确保节点间网络通畅,端口开放。

- 时间同步:集群节点时间必须同步。

9.2 openeuler 23.03 特定注意事项

- 软件源配置:确保已配置正确的软件源。

- 依赖包名:openeuler 上包名可能与 centos 不同。

- 服务管理:使用 systemctl 管理服务。

- 防火墙:使用 firewall-cmd 命令。

- selinux:openeuler 默认使用 selinux,注意上下文配置。

9.3 推荐的部署架构

应用程序

↓

mysql router (负载均衡/故障转移)

├── 读写端口: 6446 → 主节点

└── 只读端口: 6447 → 从节点

↓

group replication 集群 (3节点)

├── 主节点: 192.168.65.137

├── 从节点: 192.168.65.142

└── 从节点: 192.168.65.143

9.4 生产环境建议

- 使用专用存储:为mysql数据目录使用高性能存储。

- 配置监控:部署prometheus + grafana监控集群。

- 定期备份:制定备份策略并定期测试恢复。

- 安全加固:配置ssl连接,限制访问ip。

- 文档记录:详细记录部署步骤和配置参数。

9.5 恢复集群

# 启动 primary 节点 mysql -h192.168.65.137 -p3306 -uclusteradmin -pmysql#password 2>/dev/null << 'sql' stop group_replication; reset master; set global group_replication_bootstrap_group=on; start group_replication; set global group_replication_bootstrap_group=off; select member_host, member_port, member_state, member_role from performance_schema.replication_group_members; sql # 去primary节点查询集群信息 mysql -h192.168.65.137 -p3306 -uclusteradmin -pmysql#password 2>/dev/null << 'sql' show variables like 'group_replication_group_name'; show variables like 'group_replication_local_address'; show variables like 'group_replication_group_seeds'; show variables like 'group_replication_bootstrap_group'; # 节点信息 select member_host, member_port, member_state, member_role from performance_schema.replication_group_members; sql # 修正节点参数问题 mysql -h192.168.65.137 -p3306 -uclusteradmin -pmysql#password 2>/dev/null << 'sql' stop group_replication; set global group_replication_bootstrap_group=off; set global group_replication_group_seeds='192.168.65.137:3306,192.168.65.142:3306,192.168.65.143:3306'; set global group_replication_local_address='192.168.65.137:3306'; start group_replication; sql # 启动 secondary 节点,需要用到集群信息 mysql -h192.168.65.142 -p3306 -uclusteradmin -pmysql#password 2>/dev/null << 'sql' stop group_replication; reset slave all; reset master; set global group_replication_bootstrap_group=off; set global group_replication_group_name='672ba05f-e7f9-11f0-8d2e-000c29e0b548'; set global group_replication_group_seeds='192.168.65.137:3306,192.168.65.142:3306,192.168.65.143:3306'; set global group_replication_local_address='192.168.65.142:3306'; change master to master_user='clusteradmin', master_password='mysql#password' for channel 'group_replication_recovery'; start group_replication; select member_host, member_port, member_state, member_role from performance_schema.replication_group_members; sql # 启动 secondary 节点,需要用到集群信息 mysql -h192.168.65.143 -p3306 -uclusteradmin -pmysql#password 2>/dev/null << 'sql' stop group_replication; reset slave all; reset master; set global group_replication_bootstrap_group=off; set global group_replication_group_name='672ba05f-e7f9-11f0-8d2e-000c29e0b548'; set global group_replication_group_seeds='192.168.65.137:3306,192.168.65.142:3306,192.168.65.143:3306'; set global group_replication_local_address='192.168.65.143:3306'; change master to master_user='clusteradmin', master_password='mysql#password' for channel 'group_replication_recovery'; start group_replication; select member_host, member_port, member_state, member_role from performance_schema.replication_group_members; sql

十、总结

通过这份手册,可以快速在openeuler 23.03上部署稳定可靠的mysql高可用集群。文档从环境准备、软件安装、集群配置、高可用部署到故障排查,提供了完整的闭环指导。每个步骤都经过验证,按照此流程可以避免大多数常见问题,构建出符合生产要求的高可用数据库集群。

安装记录:

# 下载 mysql 8.0 server

# 下载 mysql shell

# 下载 mysql router

# 下载依赖

dnf reinstall --downloadonly --downloaddir=./deps \

libaio numactl-libs openssl libtirpc ncurses-compat-libs \

libstdc++ libgcc pcre2 libedit numactl

# 1. 创建用户和目录

groupadd mysql

useradd -r -g mysql -s /bin/false mysql

mkdir -p /data1/mysql

mkdir -p /data1/mysql/{data,logs,tmp}

chown -r mysql:mysql /data1/mysql

# 2. 检查并安装依赖

dnf install -y libaio numactl-libs openssl libtirpc ncurses-compat-libs 2>/dev/null || {

echo "使用离线依赖包..."

rpm -ivh ./deps/*.rpm --nodeps 2>/dev/null || true

}

# 3. 解压并安装 mysql

tar -xf /data1/mysql-8.0.44-linux-glibc2.28-x86_64.tar.xz

mv /data1/mysql-8.0.44-linux-glibc2.28-x86_64/* /data1/mysql/

# 4. 创建软链接

ln -sf /data1/mysql/bin/mysql /usr/local/bin/mysql

ln -sf /data1/mysql/bin/mysqld /usr/local/bin/mysqld

ln -sf /data1/mysql/bin/mysqld_safe /usr/local/bin/mysqld_safe

# 5. 创建配置文件

cat > /etc/my.cnf << eof

[mysqld]

basedir=/data1/mysql

datadir=/data1/mysql/data

socket=/data1/mysql/mysql.sock

port=3306

character-set-server=utf8mb4

collation-server=utf8mb4_unicode_ci

default_authentication_plugin=mysql_native_password

max_connections=1000

max_connect_errors=100000

table_open_cache=2000

thread_cache_size=100

tmp_table_size=64m

max_heap_table_size=64m

log-error=/data1/mysql/logs/mysql-error.log

slow_query_log=1

slow_query_log_file=/data1/mysql/logs/mysql-slow.log

long_query_time=2

log-bin=/data1/mysql/logs/mysql-bin

binlog_format=row

binlog_expire_logs_seconds=604800

innodb_buffer_pool_size=1g

innodb_log_file_size=256m

innodb_flush_method=o_direct

innodb_flush_log_at_trx_commit=1

innodb_file_per_table=1

server_id=1

report_host=192.168.100.19

gtid_mode=on

enforce_gtid_consistency=on

master_info_repository=table

relay_log_info_repository=table

binlog_checksum=none

log_slave_updates=on

binlog_row_image=full

# plugin-load="group_replication.so"

transaction_write_set_extraction=xxhash64

disabled_storage_engines="myisam,blackhole,federated,archive,memory"

# ssl_ca=/etc/mysql/ca.pem

# ssl_cert=/etc/mysql/server-cert.pem

# ssl_key=/etc/mysql/server-key.pem

# require_secure_transport=on

[mysql]

default-character-set=utf8mb4

socket=/data1/mysql/mysql.sock

[client]

socket=/data1/mysql/mysql.sock

eof

# 6. 初始化 mysql

/data1/mysql/bin/mysqld --defaults-file=/etc/my.cnf \

--initialize-insecure --user=mysql \

--basedir=/data1/mysql --datadir=/data1/mysql/data

# 7. 创建 systemd 服务

cat > /etc/systemd/system/mysqld.service << 'eof'

[unit]

description=mysql server

after=network.target

[service]

type=simple

user=mysql

group=mysql

execstart=/usr/local/bin/mysqld --defaults-file=/etc/my.cnf

limitnofile=65535

restart=on-failure

restartsec=10

timeoutsec=300

[install]

wantedby=multi-user.target

eof

# 8. 启动全部 mysql

systemctl daemon-reload && systemctl start mysqld && systemctl enable mysqld && systemctl status mysqld

# 启动插件,每个节点的ip不一样

mysql -uroot << eof

install plugin group_replication soname 'group_replication.so';

set global gtid_mode = 'on';

set global enforce_gtid_consistency = 'on';

set global master_info_repository = 'table';

set global relay_log_info_repository = 'table';

set global binlog_checksum = 'none';

set global binlog_format = 'row';

set global transaction_write_set_extraction = 'xxhash64';

set global group_replication_group_name = 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa';

set global group_replication_start_on_boot = 'off';

set global group_replication_local_address = '192.168.100.19:33061';

set global group_replication_ip_allowlist = '192.168.100.19,192.168.100.153,192.168.100.154,192.168.65.137,192.168.65.142,192.168.65.143,127.0.0.1,localhost';

set global group_replication_group_seeds = '192.168.100.19:33061,192.168.100.153:33061,192.168.100.154:33061';

set global group_replication_bootstrap_group = 'off';

set global group_replication_single_primary_mode = 'on';

set global group_replication_enforce_update_everywhere_checks = 'off';

set persist gtid_mode = 'on';

set persist enforce_gtid_consistency = 'on';

set persist master_info_repository = 'table';

set persist relay_log_info_repository = 'table';

set persist binlog_checksum = 'none';

set persist binlog_format = 'row';

set persist transaction_write_set_extraction = 'xxhash64';

set persist group_replication_group_name = 'aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa';

set persist group_replication_start_on_boot = 'off';

set persist group_replication_local_address = '192.168.100.19:33061';

set persist group_replication_ip_allowlist = '192.168.100.19,192.168.100.153,192.168.100.154,192.168.65.137,192.168.65.142,192.168.65.143,127.0.0.1,localhost';

set persist group_replication_group_seeds = '192.168.65.137:33061,192.168.65.142:33061,192.168.65.143:33061';

set persist group_replication_bootstrap_group = 'off';

set persist group_replication_single_primary_mode = 'on';

set persist group_replication_enforce_update_everywhere_checks = 'off';

eof

设置完后,在my.cnf 开启 plugin-load="group_replication.so",最后重启服务

# 确认修改

mysql -uroot << eof

-- 检查插件状态

show plugins;

-- 检查 group replication 变量

show variables like 'group_replication%';

-- 检查端口监听

show variables like 'port';

show variables like 'group_replication_local_address';

"

# 主节点上执行

# 9. 设置 root 密码

mysql -uroot << eof

alter user 'root'@'localhost' identified by 'mysql#password';

create user 'root'@'%' identified by 'mysql#password';

grant all privileges on *.* to 'root'@'%' with grant option;

create user 'repl'@'%' identified by 'mysql#password';

grant replication slave on *.* to 'repl'@'%';

grant backup_admin on *.* to 'repl'@'%';

create user 'clusteradmin'@'%' identified by 'mysql#password';

grant all privileges on *.* to 'clusteradmin'@'%' with grant option;

grant clone_admin, system_variables_admin on *.* to 'clusteradmin'@'%';

grant group_replication_admin on *.* to 'clusteradmin'@'%';

flush privileges;

eof

# 3. 配置group replication

mysql -uroot -pmysql#password << eof

-- 设置恢复通道

set global group_replication_bootstrap_group=on;

change master to master_user='repl', master_password='mysql#password'

for channel 'group_replication_recovery';

-- 启动组复制

start group_replication;

-- 关闭引导模式

set global group_replication_bootstrap_group=off;

eof

# 4. 验证主节点状态

mysql -uroot -pmysql#password << eof

select * from performance_schema.replication_group_members;

eof

# 其他节点

# 2. 加入集群

mysql -uroot -pmysql#password << eof

-- 设置恢复通道

change master to master_user='repl', master_password='mysql#password'

for channel 'group_replication_recovery';

-- 启动组复制

start group_replication;

eof

# 3. 验证状态

mysql -uroot -pmysql#password << eof

select * from performance_schema.replication_group_members;

eof

group replication(gr) 集群创建到这就行

总结

到此这篇关于mysql高可用集群部署与运维的文章就介绍到这了,更多相关mysql高可用集群部署与运维内容请搜索代码网以前的文章或继续浏览下面的相关文章希望大家以后多多支持代码网!

发表评论