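Overview

This article introduces what regular expressions are and the basics of using them. Regular expressions are a powerful tool: once you have mastered them, they can greatly speed up text processing.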

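1 The Concept of Regular Expressions

A regular expression (regex) is a pattern used to match combinations of characters in a string. In programming, regular expressions are used to search, replace, and extract parts of strings.

1.1 Basic Regular Expression Syntax

1) Ordinary characters

Most characters (letters, digits, Chinese characters, and so on) simply match themselves. For example, the regular expression hello matches "hello" in a string. Matching is case-sensitive by default.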

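2) Metacharacters

Metacharacters are characters with a special meaning inside a regular expression. They include:

.: matches any single character except a newline (with the re.DOTALL flag it matches newlines too).

^: matches the start of the string (with re.MULTILINE, also the start of each line).

$: matches the end of the string (with re.MULTILINE, also the end of each line).

*: matches the preceding subexpression zero or more times.

+: matches the preceding subexpression one or more times.

?: matches the preceding subexpression zero times or once.

{n}: matches the preceding subexpression exactly n times.

{n,}: matches the preceding subexpression at least n times.

{n,m}: matches the preceding subexpression at least n and at most m times.

[]: character set; matches any one of the characters it contains ([^...] matches any character not in the set).

|: alternation; matches either the expression on its left or the one on its right.

(): grouping; combines several characters into one unit and captures the matched text so it can be referred to later.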
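The following is a minimal sketch that exercises several of the metacharacters listed above. The sample strings and variable names are made up for illustration.

```python
import re

# '.' matches any single character except a newline, so 'c\nt' is skipped
dot_hits = re.findall(r'c.t', 'cat cot c\nt')

# '^' and '$' anchor the pattern to the start and end of the string
anchored = bool(re.search(r'^\d+$', '2024'))           # the whole string is digits
not_anchored = bool(re.search(r'^\d+$', 'year 2024'))  # fails: text before the digits

# '[]' matches one character from the set; '|' picks one of two alternatives
vowels = re.findall(r'[aeiou]', 'regex')
pets = re.findall(r'cat|dog', 'cat, dog, bird')

# '()' groups a sub-pattern so the quantifier applies to the whole unit
repeated = re.fullmatch(r'(ab)+', 'ababab') is not None

print(dot_hits, anchored, not_anchored, vowels, pets, repeated)
```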

3) Escaping

To match a metacharacter literally, escape it with a backslash \. For example, to match the character . itself, use \..
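The sketch below shows why escaping matters, using a made-up price string. It also uses re.escape(), a standard re function that escapes every special character in a literal string. This is handy when the literal text comes from user input.

```python
import re

price_text = "total: 3.50 or 3x50"

# Unescaped '.' matches any character, so "3x50" is found as well
loose = re.findall(r'3.50', price_text)

# Escaped '\.' only matches a literal dot
strict = re.findall(r'3\.50', price_text)

# re.escape() turns arbitrary text into a pattern that matches it literally
literal = "1+1=2?"
escaped_pattern = re.escape(literal)
found = re.search(escaped_pattern, "is 1+1=2? yes") is not None

print(loose, strict, escaped_pattern, found)
```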

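4) Predefined character classes

\d: matches any digit; equivalent to [0-9].

\D: matches any non-digit; equivalent to [^0-9].

\w: matches a letter, digit, or underscore; equivalent to [a-zA-Z0-9_].

\W: matches any character that is not a letter, digit, or underscore; equivalent to [^a-zA-Z0-9_].

\s: matches any whitespace character, including spaces, tabs, newlines, and so on.

\S: matches any non-whitespace character.

These equivalences hold exactly for ASCII text. In Python 3, str patterns are Unicode-aware by default, so \d, \w, and \s also match non-ASCII digits, letters, and whitespace unless the re.ASCII flag is set.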
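Here is a short sketch of the Unicode behavior. It uses an assumed sample string containing an accented letter, two Chinese characters, and the Arabic-Indic digit three (U+0663). The re.ASCII flag restricts the classes to their ASCII meanings.

```python
import re

# "café 中文" followed by the Arabic-Indic digit three
sample = "caf\u00e9 中文 \u0663"

# Default (Unicode) behavior for str patterns
unicode_words = re.findall(r'\w+', sample)
unicode_digits = re.findall(r'\d', sample)

# re.ASCII: \w means [a-zA-Z0-9_] and \d means [0-9]
ascii_words = re.findall(r'\w+', sample, re.ASCII)
ascii_digits = re.findall(r'\d', sample, re.ASCII)

print(unicode_words, unicode_digits)
print(ascii_words, ascii_digits)
```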

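1.2 Using Regular Expressions in Python

Python provides regular-expression support through the re module. The most commonly used functions are:

1) re.match()

Tries to match the pattern only at the beginning of the string. Returns a match object on success, otherwise None.

2) re.search()

Scans the whole string and returns a match object for the first location where the pattern matches, or None if there is no match.

3) re.findall()

Finds every non-overlapping substring that the pattern matches and returns them as a list. If the pattern contains capturing groups, the list holds the group contents instead (as tuples when there are several groups).

4) re.finditer()

Like re.findall(), but returns an iterator that yields a match object for each match.

5) re.sub()

Replaces the matches in a string and returns the resulting string.

6) re.split()

Splits the string at every match of the pattern and returns the pieces as a list.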
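The sketch below shows two behaviors worth knowing: how re.match() differs from re.search(), and how re.findall() changes its result when the pattern has groups. The order text is an illustrative assumption.

```python
import re

text = "order 42 shipped, order 7 pending"

# re.match() only tries position 0, so it fails when the digits come later
m = re.match(r'\d+', text)
# re.search() scans forward and returns the first match anywhere
s = re.search(r'\d+', text)

# findall(): whole matches without groups, group text with one group,
# tuples with several groups
no_group = re.findall(r'order \d+', text)
one_group = re.findall(r'order (\d+)', text)
two_groups = re.findall(r'order (\d+) (\w+)', text)

print(m, s.group(), no_group, one_group, two_groups)
```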

2 Applying Regular Expressions

2.1 Basic Syntax Examples

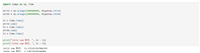

Source code

import re

# Basic matching examples
text = "hello, my email is example@email.com and phone is 123-456-7890"

# Find e-mail addresses
email_pattern = r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b'
emails = re.findall(email_pattern, text)
print("emails found:", emails)

# Find phone numbers in the form 123-456-7890
phone_pattern = r'\d{3}-\d{3}-\d{4}'
phones = re.findall(phone_pattern, text)
print("phones found:", phones)

Output:

emails found: ['example@email.com']
phones found: ['123-456-7890']
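Patterns like these have one pitfall worth checking. Inside square brackets, | is an ordinary character, not alternation. A class written as [A-Z|a-z] (a form sometimes seen for top-level domains) therefore also accepts a literal |. Here is a quick check with made-up strings:

```python
import re

with_bar = r'[A-Z|a-z]{2,}'    # '|' is a literal member of this set
without_bar = r'[A-Za-z]{2,}'  # letters only

bar_ok = re.fullmatch(with_bar, 'c|m') is not None         # True: '|' is accepted
letters_ok = re.fullmatch(without_bar, 'c|m') is not None  # False

print(bar_ok, letters_ok)
```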

2.2 Metacharacters in Detail

1) Character classes

Source code

import re

def demonstrate_character_classes():
    """Demonstrate character classes."""
    text = "abc123 XYZ!@#"
    patterns = {
        r'\d': 'digit',                        # [0-9]
        r'\D': 'non-digit',                    # [^0-9]
        r'\w': 'word character',               # [a-zA-Z0-9_]
        r'\W': 'non-word character',           # [^a-zA-Z0-9_]
        r'\s': 'whitespace',                   # [ \t\n\r\f\v]
        r'\S': 'non-whitespace',               # [^ \t\n\r\f\v]
        r'[a-z]': 'lowercase letter',          # custom character class
        r'[^0-9]': 'non-digit (negated set)',  # negated character class
    }
    for pattern, description in patterns.items():
        matches = re.findall(pattern, text)
        print(f"{description} ({pattern}): {matches}")

demonstrate_character_classes()

Output:

digit (\d): ['1', '2', '3']
non-digit (\D): ['a', 'b', 'c', ' ', 'X', 'Y', 'Z', '!', '@', '#']
word character (\w): ['a', 'b', 'c', '1', '2', '3', 'X', 'Y', 'Z']
non-word character (\W): [' ', '!', '@', '#']
whitespace (\s): [' ']
non-whitespace (\S): ['a', 'b', 'c', '1', '2', '3', 'X', 'Y', 'Z', '!', '@', '#']
lowercase letter ([a-z]): ['a', 'b', 'c']
non-digit (negated set) ([^0-9]): ['a', 'b', 'c', ' ', 'X', 'Y', 'Z', '!', '@', '#']

2) Quantifiers

Source code

import re

def demonstrate_quantifiers():
    """Demonstrate quantifiers."""
    text = "a aa aaa aaaa b bb bbb"
    patterns = {
        r'a?': "zero or one 'a'",
        r'a+': "one or more 'a'",
        r'a*': "zero or more 'a'",
        r'a{2}': "exactly two 'a'",
        r'a{2,}': "two or more 'a'",
        r'a{2,4}': "two to four 'a'",
    }
    for pattern, description in patterns.items():
        # a? and a* can also match the empty string, hence the '' entries
        matches = re.findall(pattern, text)
        print(f"{description} ({pattern}): {matches}")

demonstrate_quantifiers()

Output:

zero or one 'a' (a?): ['a', '', 'a', 'a', '', 'a', 'a', 'a', '', 'a', 'a', 'a', 'a', '', '', '', '', '', '', '', '', '', '']
one or more 'a' (a+): ['a', 'aa', 'aaa', 'aaaa']
zero or more 'a' (a*): ['a', '', 'aa', '', 'aaa', '', 'aaaa', '', '', '', '', '', '', '', '', '', '']
exactly two 'a' (a{2}): ['aa', 'aa', 'aa', 'aa']
two or more 'a' (a{2,}): ['aa', 'aaa', 'aaaa']
two to four 'a' (a{2,4}): ['aa', 'aaa', 'aaaa']
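The empty strings in the a? and a* results come from zero-length matches. The minimal sketch below uses an assumed short string and prints where each match of a* starts and ends. An empty match appears at every position where no 'a' follows, including the end of the string.

```python
import re

matches = list(re.finditer(r'a*', "a b"))
for m in matches:
    # span() is (start, end); start == end means a zero-length match
    print(repr(m.group()), m.span())

spans = [m.span() for m in matches]
```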

3) Anchors and boundaries

import re

def demonstrate_anchors():
    """Demonstrate anchors and word boundaries."""
    lines = [
        "start of line",
        "middle of text",
        "end of line",
    ]
    # Start of line: a word beginning with 's' at the very start
    start_pattern = r'^s\w+'
    # End of line: a word ending in "line" at the very end
    # (\w+line$ never matches here, because "of line" has a space before "line")
    end_pattern = r'\w*line$'
    # Word boundary: "of" as a whole word
    word_boundary = r'\bof\b'
    for line in lines:
        start_match = re.search(start_pattern, line)
        end_match = re.search(end_pattern, line)
        word_match = re.search(word_boundary, line)
        print(f"line: '{line}'")
        print(f"  start match: {start_match.group() if start_match else 'None'}")
        print(f"  end match: {end_match.group() if end_match else 'None'}")
        print(f"  word boundary: {word_match.group() if word_match else 'None'}")
        print()

demonstrate_anchors()

Output:

line: 'start of line'
  start match: start
  end match: line
  word boundary: of

line: 'middle of text'
  start match: None
  end match: None
  word boundary: of

line: 'end of line'
  start match: None
  end match: line
  word boundary: of
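The loop above applies ^ and $ to one line at a time. When the lines live in a single string, the re.MULTILINE flag makes ^ and $ work per line. Here is a short sketch that assumes the same three lines joined with newlines:

```python
import re

text = "start of line\nmiddle of text\nend of line"

# Without re.MULTILINE, ^ only matches at the start of the whole string
whole = re.findall(r'^\w+', text)
# With re.MULTILINE, ^ also matches after each newline and $ before it
per_line_first = re.findall(r'^\w+', text, re.MULTILINE)
per_line_last = re.findall(r'\w+$', text, re.MULTILINE)

print(whole, per_line_first, per_line_last)
```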

2.3 Grouping and Capturing

1) Group types

Source code

import re

def demonstrate_groups():
    """Demonstrate groups."""
    text = "john doe, jane smith, bob johnson"

    # Capturing groups: findall() returns one tuple per match
    capture_pattern = r'(\w+)\s(\w+)'
    capture_matches = re.findall(capture_pattern, text)
    print("capture groups:", capture_matches)

    # Non-capturing group (?:...): only the remaining capturing group is returned
    non_capture_pattern = r'(?:\w+)\s(\w+)'
    non_capture_matches = re.findall(non_capture_pattern, text)
    print("non-capture groups (only last names):", non_capture_matches)

    # Named groups (?P<name>...): read groups by name from the match object
    named_pattern = r'(?P<first>\w+)\s(?P<last>\w+)'
    named_matches = re.finditer(named_pattern, text)
    print("named groups:")
    for match in named_matches:
        print(f"  full: {match.group()}")
        print(f"  first: {match.group('first')}, last: {match.group('last')}")

demonstrate_groups()

Output:

capture groups: [('john', 'doe'), ('jane', 'smith'), ('bob', 'johnson')]
non-capture groups (only last names): ['doe', 'smith', 'johnson']
named groups:
  full: john doe
  first: john, last: doe
  full: jane smith
  first: jane, last: smith
  full: bob johnson
  first: bob, last: johnson
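Named groups can also be collected into dictionaries with the match object's groupdict() method. Here is a minimal sketch on an assumed shorter name list:

```python
import re

text = "john doe, jane smith"
pattern = re.compile(r'(?P<first>\w+)\s(?P<last>\w+)')

# groupdict() returns every named group of one match as a dict
people = [m.groupdict() for m in pattern.finditer(text)]

# Named groups are still numbered, so group(1) and group('first') agree
m = pattern.search(text)
same = m.group(1) == m.group('first')

print(people, same)
```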

2) Backreferences

Source code

import re

def demonstrate_backreferences():
    """Demonstrate backreferences."""
    text = "hello hello world world test test"

    # Find repeated words: \1 must match the same text that group 1 captured
    duplicate_pattern = r'\b(\w+)\s+\1\b'
    duplicates = re.findall(duplicate_pattern, text)
    print("duplicate words:", duplicates)

    # Backreferences in the pattern (\1) and in the replacement string (\1, \2)
    html_text = "<b>bold</b> and <i>italic</i>"
    replacement_pattern = r'<(\w+)>(.*?)</\1>'
    replaced = re.sub(replacement_pattern, r'[\1]: \2', html_text)
    print("after replacement:", replaced)

demonstrate_backreferences()

Output:

duplicate words: ['hello', 'world', 'test']
after replacement: [b]: bold and [i]: italic
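Python also supports backreferences to named groups: (?P=name) inside the pattern and \g<name> in the replacement string. The \g<2> form avoids ambiguity when a group number is followed by a digit. Here is a sketch with illustrative strings:

```python
import re

# (?P=word) must repeat the text captured by the named group 'word'
dup = re.compile(r'\b(?P<word>\w+)\s+(?P=word)\b')
cleaned = dup.sub(r'\g<word>', "this is is a test test")

# '\20' would be read as group 20; \g<2>0 means group 2 followed by a literal '0'
swapped = re.sub(r'(\d+)-(\d+)', r'\g<2>0-\g<1>', "3-4")

print(cleaned, swapped)
```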

2.4 Advanced Features

1) Lookahead and lookbehind

Source code

import re

def demonstrate_lookaround():
    """Demonstrate lookahead and lookbehind."""
    text = "apple $10 orange $20 banana $30"

    # Positive lookahead: digits immediately followed by '$'.
    # In this text '$' always comes before the number, so nothing matches.
    lookahead_pattern = r'\d+(?=\$)'
    lookahead_matches = re.findall(lookahead_pattern, text)
    print("positive lookahead (numbers before $):", lookahead_matches)

    # Negative lookahead: digits not followed by '$'
    negative_lookahead_pattern = r'\d+(?!\$)'
    negative_matches = re.findall(negative_lookahead_pattern, text)
    print("negative lookahead:", negative_matches)

    # Positive lookbehind: digits preceded by '$'
    lookbehind_pattern = r'(?<=\$)\d+'
    lookbehind_matches = re.findall(lookbehind_pattern, text)
    print("positive lookbehind (numbers after $):", lookbehind_matches)

    # Negative lookbehind: digits not preceded by '$'.
    # The '1' in "10" is rejected, but the '0' after it is preceded by '1', so it
    # matches; (?<![$\d])\d+ would also reject the tail of a number.
    negative_lookbehind_pattern = r'(?<!\$)\d+'
    negative_lookbehind_matches = re.findall(negative_lookbehind_pattern, text)
    print("negative lookbehind:", negative_lookbehind_matches)

demonstrate_lookaround()

Output:

positive lookahead (numbers before $): []
negative lookahead: ['10', '20', '30']
positive lookbehind (numbers after $): ['10', '20', '30']
negative lookbehind: ['0', '0', '0']
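Python's re module only accepts look-behind assertions of fixed width. The sketch below uses an assumed price string that allows spaces after the dollar sign. It shows the error and a common workaround: match the prefix normally and capture only the number.

```python
import re

# A variable-width look-behind such as (?<=\$\s*) is rejected at compile time
try:
    re.compile(r'(?<=\$\s*)\d+')
    lookbehind_error = None
except re.error as exc:
    lookbehind_error = str(exc)

# Workaround: consume "$" plus optional spaces, capture just the digits
prices = re.findall(r'\$\s*(\d+)', "a $10, b $ 20")

print(lookbehind_error)
print(prices)
```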

2) Conditional matching

import re

def demonstrate_conditional_matching():
    """Demonstrate conditional matching."""
    text = """
<div>content</div>
<span>other content</span>
<div class="special">special content</div>
"""
    # Conditional match: if the tag has class="special", match that variant as well.
    # Strictly speaking this is an optional non-capturing group, not Python's
    # (?(id)yes|no) conditional syntax. Cases like this are fairly involved, and in
    # practice they may be easier to handle in several steps.
    pattern = r'<(\w+)(?:\s+class="special")?>(.*?)</\1>'
    matches = re.findall(pattern, text)
    print("conditional matches:")
    for tag, content in matches:
        print(f"  tag: {tag}, content: '{content.strip()}'")

demonstrate_conditional_matching()

Output:

conditional matches:
  tag: div, content: 'content'
  tag: span, content: 'other content'
  tag: div, content: 'special content'
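Python's re module does have a true conditional construct, (?(id)yes|no). It continues with the yes branch only if the given group took part in the match; the no branch is optional. The minimal sketch below is similar to the example in the Python re documentation. It requires a closing > only when an opening < is present, and the candidate strings are illustrative.

```python
import re

# Group 1 is an optional '<'; (?(1)>) demands '>' only if group 1 matched
pattern = re.compile(r'(<)?\w+@\w+(?:\.\w+)+(?(1)>)')

candidates = ["<user@host.com>", "user@host.com", "<user@host.com", "user@host.com>"]
results = {c: pattern.fullmatch(c) is not None for c in candidates}

for candidate, ok in results.items():
    print(f"{candidate!r}: {ok}")
```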

3 The Python re Module

3.1 The Main Functions in Action

Test code:

import re

def demonstrate_re_functions():
    """Demonstrate the main functions of the re module."""
    text = "the quick brown fox jumps over the lazy dog. the dog was lazy."

    # 1. re.search(): find the first match anywhere in the string
    first_match = re.search(r'\bfox\b', text)
    print(f"re.search(): {first_match.group() if first_match else 'not found'}")

    # 2. re.match(): match only at the start of the string (the ^ is redundant but harmless)
    start_match = re.match(r'^the', text)
    print(f"re.match(): {start_match.group() if start_match else 'not found'}")

    # 3. re.findall(): all matches
    all_matches = re.findall(r'\b\w{3}\b', text)  # all three-letter words
    print(f"re.findall() 3-letter words: {all_matches}")

    # 4. re.finditer(): an iterator of match objects
    print("re.finditer():")
    for match in re.finditer(r'\b\w{4}\b', text):  # all four-letter words
        print(f"  found '{match.group()}' at position {match.start()}-{match.end()}")

    # 5. re.sub(): replace
    replaced = re.sub(r'\bdog\b', 'cat', text)
    print(f"re.sub() result: {replaced}")

    # 6. re.split(): split on runs of whitespace
    split_result = re.split(r'\s+', text)
    print(f"re.split() first 5 words: {split_result[:5]}")

demonstrate_re_functions()

Output:

re.search(): fox
re.match(): the
re.findall() 3-letter words: ['the', 'fox', 'the', 'dog', 'the', 'dog', 'was']
re.finditer():
  found 'over' at position 26-30
  found 'lazy' at position 35-39
  found 'lazy' at position 57-61
re.sub() result: the quick brown fox jumps over the lazy cat. the cat was lazy.
re.split() first 5 words: ['the', 'quick', 'brown', 'fox', 'jumps']
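re.sub() and re.split() accept a few more options than shown above:

- The replacement can be a function that receives each match object.
- count limits the number of replacements.
- maxsplit limits the number of splits.

Here is a short sketch on an assumed sentence:

```python
import re

text = "the dog was lazy. the dog slept."

# A function replacement receives each match object and returns the new text
upper_dogs = re.sub(r'\bdog\b', lambda m: m.group().upper(), text)

# count=1 replaces only the first occurrence
first_only = re.sub(r'\bdog\b', 'cat', text, count=1)

# maxsplit=2 stops after two splits; the rest stays in the last item
head = re.split(r'\s+', text, maxsplit=2)

print(upper_dogs)
print(first_only)
print(head)
```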

3.2 Compiling Regular Expressions

Test code:

import re

def demonstrate_compiled_regex():
    """Demonstrate compiled regular expressions."""
    # Compile the pattern once; this mainly pays off when it is reused many times
    # (re also caches recently used patterns internally, so the gain is often modest).
    # re.VERBOSE ignores whitespace in the pattern and allows # comments.
    email_pattern = re.compile(r'''
        \b
        [A-Za-z0-9._%+-]+   # user name
        @                   # @ sign
        [A-Za-z0-9.-]+      # domain
        \.[A-Za-z]{2,}      # top-level domain
        \b
    ''', re.VERBOSE)

    text = """
contact us at:
john.doe@company.com,
jane_smith123@sub.domain.co.uk,
invalid-email@com
"""
    # Use the compiled pattern
    valid_emails = email_pattern.findall(text)
    print("valid emails:", valid_emails)

    # Combine several flags when compiling
    multi_flag_pattern = re.compile(r'^hello', re.IGNORECASE | re.MULTILINE)
    multi_text = "Hello world\nhello there\nHELLO everyone"
    multi_matches = multi_flag_pattern.findall(multi_text)
    print("multi-flag matches:", multi_matches)

demonstrate_compiled_regex()

Output:

valid emails: ['john.doe@company.com', 'jane_smith123@sub.domain.co.uk']
multi-flag matches: ['Hello', 'hello', 'HELLO']
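Flags can also be written inline at the start of the pattern. For example, (?im) stands for IGNORECASE plus MULTILINE. A compiled pattern also exposes its source text and flags through the pattern and flags attributes. Here is a sketch that assumes a mixed-case sample text:

```python
import re

multi_text = "Hello world\nhello there\nHELLO everyone"

# (?im) at the start of the pattern is equivalent to re.IGNORECASE | re.MULTILINE
inline = re.compile(r'(?im)^hello')
explicit = re.compile(r'^hello', re.IGNORECASE | re.MULTILINE)

same_matches = inline.findall(multi_text) == explicit.findall(multi_text)

# A compiled pattern keeps its source string and its flags
print(explicit.pattern, bool(explicit.flags & re.IGNORECASE), same_matches)
print(inline.findall(multi_text))
```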

3.3 A Collection of Common Patterns

Source code

import re

class commonregexpatterns:
    """Common regular-expression patterns."""
    # E-mail address validation
    email = r'^[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}$'
    # Mobile phone number (mainland China)
    phone_cn = r'^1[3-9]\d{9}$'
    # URL (scheme and host part only)
    url = r'^https?://(?:[-\w.]|(?:%[\da-fA-F]{2}))+'
    # IP addresses: shape checks only; 999.999.999.999 also passes ip_v4, and
    # ip_v6 accepts only the full eight-group form without :: compression
    ip_v4 = r'^(?:[0-9]{1,3}\.){3}[0-9]{1,3}$'
    ip_v6 = r'^(?:[0-9a-fA-F]{1,4}:){7}[0-9a-fA-F]{1,4}$'
    # Resident ID card number (mainland China, 18 characters; the last may be X)
    id_card = r'^[1-9]\d{5}(18|19|20)\d{2}((0[1-9])|(1[0-2]))(([0-2][1-9])|10|20|30|31)\d{3}[0-9Xx]$'
    # Date (yyyy-mm-dd); checks the shape, not the calendar
    date = r'^\d{4}-(0[1-9]|1[0-2])-(0[1-9]|[12][0-9]|3[01])$'
    # Time (hh:mm:ss)
    time = r'^([01]?[0-9]|2[0-3]):[0-5][0-9]:[0-5][0-9]$'
    # Chinese characters (CJK Unified Ideographs U+4E00 to U+9FA5)
    chinese_char = r'^[\u4e00-\u9fa5]+$'
    # Number (integer or decimal)
    number = r'^-?\d+(?:\.\d+)?$'

def validate_with_patterns():
    """Validate sample inputs with the common patterns."""
    test_cases = {
        'email': ['test@example.com', 'invalid-email', 'user@domain.co.uk'],
        'phone': ['13812345678', '12345678901', '19876543210'],
        'date': ['2023-12-25', '2023-13-01', '1999-02-29'],
    }
    patterns = {
        'email': commonregexpatterns.email,
        'phone': commonregexpatterns.phone_cn,
        'date': commonregexpatterns.date,
    }
    for data_type, cases in test_cases.items():
        pattern = patterns[data_type]
        print(f"\nvalidating {data_type}:")
        for case in cases:
            is_valid = bool(re.match(pattern, case))
            print(f"  '{case}': {'✓ valid' if is_valid else '✗ invalid'}")

validate_with_patterns()

Output:

validating email:
  'test@example.com': ✓ valid
  'invalid-email': ✗ invalid
  'user@domain.co.uk': ✓ valid

validating phone:
  '13812345678': ✓ valid
  '12345678901': ✗ invalid
  '19876543210': ✓ valid

validating date:
  '2023-12-25': ✓ valid
  '2023-13-01': ✗ invalid
  '1999-02-29': ✓ valid
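The date pattern checks only the shape of the string. That is why '1999-02-29' is reported as valid even though 1999 is not a leap year. One possible approach uses the regex for the format and datetime.strptime() for the calendar rules. It is sketched here with a hypothetical helper, is_real_date():

```python
import re
from datetime import datetime

DATE_SHAPE = re.compile(r'^\d{4}-(0[1-9]|1[0-2])-(0[1-9]|[12][0-9]|3[01])$')

def is_real_date(value):
    """Hypothetical helper: regex for the format, datetime for the calendar."""
    if not DATE_SHAPE.match(value):
        return False
    try:
        # Raises ValueError for impossible dates such as Feb 29 in a non-leap year
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True

for value in ["2023-12-25", "2023-13-01", "1999-02-29", "2024-02-29"]:
    print(value, is_real_date(value))
```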

3.4 Performance Tips

Source code

import re
import time

def demonstrate_performance():
    """Demonstrate performance tips."""
    # Test text
    large_text = "test " * 10000 + "target" + " test" * 10000

    # Approach 1: module-level re function (looks the pattern up in re's cache on every call)
    start_time = time.perf_counter()
    for _ in range(100):
        re.search(r'target', large_text)
    direct_time = time.perf_counter() - start_time

    # Approach 2: pre-compiled pattern
    compiled_pattern = re.compile(r'target')
    start_time = time.perf_counter()
    for _ in range(100):
        compiled_pattern.search(large_text)
    compiled_time = time.perf_counter() - start_time

    print(f"direct search time: {direct_time:.4f}s")
    print(f"compiled search time: {compiled_time:.4f}s")
    print(f"performance improvement: {direct_time / compiled_time:.2f}x")

    # Avoiding catastrophic backtracking
    print("\navoiding catastrophic backtracking:")
    # Bad pattern: nested quantifiers give the engine exponentially many ways
    # to split the run of a's before it can report failure
    bad_pattern = r'(a+)+b'
    # Good pattern: matches the same strings with a single quantifier
    good_pattern = r'a+b'
    test_string = "aaaaaaaaaaaaaaaaaaaaaaaa!"

    # Python's re has no timeout: this call runs until backtracking finishes,
    # and each extra 'a' roughly doubles the time
    start_time = time.perf_counter()
    re.match(bad_pattern, test_string)
    bad_time = time.perf_counter() - start_time
    print(f"bad pattern time: {bad_time:.4f}s")

    start_time = time.perf_counter()
    re.match(good_pattern, test_string)
    good_time = time.perf_counter() - start_time
    print(f"good pattern time: {good_time:.4f}s")

demonstrate_performance()

Output (timings from the original run; they vary by machine and Python version):

direct search time: 0.0091s
compiled search time: 0.0060s
performance improvement: 1.53x

avoiding catastrophic backtracking:
bad pattern time: 0.8640s
good pattern time: 0.0000s
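The re module caches recently compiled patterns. Calling re.search() repeatedly with the same pattern string therefore does not recompile it each time. The measured gap largely reflects per-call overhead such as that cache lookup. For steadier numbers, the timeit module can repeat the calls with a high-resolution clock. Here is a minimal sketch on the same test text:

```python
import re
import timeit

large_text = "test " * 10000 + "target" + " test" * 10000
compiled = re.compile(r'target')

# timeit.timeit() calls the function `number` times and returns total seconds
direct = timeit.timeit(lambda: re.search(r'target', large_text), number=100)
precompiled = timeit.timeit(lambda: compiled.search(large_text), number=100)

print(f"re.search():       {direct:.4f}s")
print(f"compiled.search(): {precompiled:.4f}s")
```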

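4 Practical Applications

4.1 String Parsing Demo

Source code

import re

def regex_best_practices():
    """Regular-expression best practices."""
    # 1. Use raw strings
    print("1. Use raw strings:")
    escaped_string = "\\section"  # ordinary string literal: the backslash must be doubled
    raw_string = r"\section"      # raw string: the same value without doubling
    print(f"  escaped: {escaped_string}")
    print(f"  raw: {raw_string}")
    print(f"  equal: {escaped_string == raw_string}")

    # 2. Compile patterns that are reused
    print("\n2. Compile patterns that are reused:")
    # Less good: passing the pattern string to re.search() inside a loop
    # Better: compile it once with re.compile() and call the pattern's methods

    # 3. Use non-greedy matching
    print("\n3. Use non-greedy matching:")
    html_text = "<div>content</div><div>more</div>"
    greedy_pattern = r'<div>.*</div>'       # greedy: runs to the last </div>
    non_greedy_pattern = r'<div>.*?</div>'  # non-greedy: stops at the first </div>
    greedy_match = re.search(greedy_pattern, html_text)
    non_greedy_matches = re.findall(non_greedy_pattern, html_text)
    print(f"  greedy: {greedy_match.group() if greedy_match else 'None'}")
    print(f"  non-greedy: {non_greedy_matches}")

    # 4. Prefer concise character classes (and classes over alternation such as 0|1|2|...)
    print("\n4. Use character classes:")
    bad_pattern = r'[0123456789]'  # verbose
    good_pattern = r'[0-9]'        # concise
    better_pattern = r'\d'         # shortest (also matches non-ASCII digits in str patterns)
    test_text = "abc123"
    print(f"  bad pattern matches: {re.findall(bad_pattern, test_text)}")
    print(f"  good pattern matches: {re.findall(good_pattern, test_text)}")
    print(f"  better pattern matches: {re.findall(better_pattern, test_text)}")

regex_best_practices()

Output:

1. Use raw strings:
  escaped: \section
  raw: \section
  equal: True

2. Compile patterns that are reused:

3. Use non-greedy matching:
  greedy: <div>content</div><div>more</div>
  non-greedy: ['<div>content</div>', '<div>more</div>']

4. Use character classes:
  bad pattern matches: ['1', '2', '3']
  good pattern matches: ['1', '2', '3']
  better pattern matches: ['1', '2', '3']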
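Raw strings matter most when the regex itself contains backslashes. The sketch below uses an assumed Windows-style path and shows two cases:

- Matching one literal backslash takes four backslashes in an ordinary string literal but only two in a raw string.
- Without r, the sequence \b becomes a backspace character instead of a word boundary.

```python
import re

path = r"C:\temp\new"  # contains two backslashes

# The regex engine needs \\ to match one backslash
normal = re.findall("\\\\", path)  # ordinary literal: four backslashes
raw = re.findall(r"\\", path)      # raw literal: two backslashes

# In an ordinary literal, "\b" is the backspace character \x08
word_boundary_ok = re.findall(r"\bnew\b", "a new day")
backspace_pattern = re.findall("\bnew\b", "a new day")

print(len(normal), len(raw), word_boundary_ok, backspace_pattern)
```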

4.2 Log Analysis

Source code

import re
from collections import Counter

def log_analysis_example():
    """Log analysis example."""
    log_data = """
2023-12-01 10:30:15 info user john_doe logged in from 192.168.1.100
2023-12-01 10:35:22 error database connection failed
2023-12-01 10:40:05 warning high memory usage detected (85%)
2023-12-01 10:45:30 info user jane_smith accessed /api/data
2023-12-01 10:50:17 error file not found: /var/www/image.jpg
"""
    # Parse each log entry into timestamp, level, and message
    log_pattern = r'(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) (\w+) (.*)'
    print("log analysis:")
    print("-" * 50)
    for match in re.finditer(log_pattern, log_data):
        timestamp, level, message = match.groups()
        # Color the level with ANSI escape codes (visible only in terminals that support them)
        if level == 'error':
            level_display = f"\033[91m{level}\033[0m"  # red
        elif level == 'warning':
            level_display = f"\033[93m{level}\033[0m"  # yellow
        else:
            level_display = f"\033[92m{level}\033[0m"  # green
        print(f"{timestamp} {level_display} {message}")

    # Count the entries per log level
    level_pattern = r'\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2} (\w+)'
    levels = re.findall(level_pattern, log_data)
    level_counts = Counter(levels)
    print("\nlog level statistics:")
    for level, count in level_counts.items():
        print(f"  {level}: {count}")

log_analysis_example()

Output (colors not shown):

log analysis:
--------------------------------------------------
2023-12-01 10:30:15 info user john_doe logged in from 192.168.1.100
2023-12-01 10:35:22 error database connection failed
2023-12-01 10:40:05 warning high memory usage detected (85%)
2023-12-01 10:45:30 info user jane_smith accessed /api/data
2023-12-01 10:50:17 error file not found: /var/www/image.jpg

log level statistics:
  info: 2
  error: 2
  warning: 1
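Named groups combined with re.VERBOSE make a log pattern easier to read, and they turn each entry into a dictionary. Here is a sketch on two of the sample lines. The group names date, time, level, and message are hypothetical choices.

```python
import re

log_data = """
2023-12-01 10:30:15 info user john_doe logged in from 192.168.1.100
2023-12-01 10:35:22 error database connection failed
"""

# re.VERBOSE ignores the layout whitespace, so the separators are written as \s
log_entry = re.compile(r'''
    (?P<date>\d{4}-\d{2}-\d{2})\s
    (?P<time>\d{2}:\d{2}:\d{2})\s
    (?P<level>\w+)\s
    (?P<message>.*)
''', re.VERBOSE)

entries = [m.groupdict() for m in log_entry.finditer(log_data)]
errors = [e["message"] for e in entries if e["level"] == "error"]

for entry in entries:
    print(entry)
print("errors:", errors)
```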

4.3 Data Extraction and Cleaning

Source code

import re

def data_cleaning_example():
    """Data cleaning example."""
    dirty_data = """
names: John Doe, Jane Smith, Bob Johnson
emails: john@test.com, jane@example.org, invalid-email
phones: 123-456-7890, 555.123.4567, (999) 888-7777, invalid-phone
dates: 2023/12/01, 01-12-2023, 2023.12.01, invalid-date
"""
    # Extraction rules. The patterns are unanchored: an anchored ^...$ validation
    # pattern would find nothing inside a longer multi-line text.
    cleaning_rules = {
        'emails': r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b',
        # optional parentheses around the area code, then '-', '.' or a space
        'phones': r'\(?\b\d{3}\)?[-.\s]?\d{3}[-.]\d{4}\b',
        # yyyy-mm-dd order only, so 01-12-2023 is not picked up
        'dates': r'\b\d{4}[-/.]\d{2}[-/.]\d{2}\b',
        'names': r'\b[A-Z][a-z]+ [A-Z][a-z]+\b',
    }
    print("data cleaning results:")
    print("-" * 40)
    for data_type, pattern in cleaning_rules.items():
        matches = re.findall(pattern, dirty_data)
        print(f"{data_type.capitalize()}: {matches}")

data_cleaning_example()

Output:

data cleaning results:
----------------------------------------
Emails: ['john@test.com', 'jane@example.org']
Phones: ['123-456-7890', '555.123.4567', '(999) 888-7777']
Dates: ['2023/12/01', '2023.12.01']
Names: ['John Doe', 'Jane Smith', 'Bob Johnson']
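After extraction, the phone numbers still come in different formats. One possible normalization step is sketched below with a hypothetical helper, normalize_phone(), that assumes 10-digit numbers. It strips every non-digit with \D and then reformats the digits with a substitution.

```python
import re

raw_phones = ["123-456-7890", "555.123.4567", "(999) 888-7777", "invalid-phone"]

def normalize_phone(value):
    """Hypothetical helper: keep digits only, format 10 digits as XXX-XXX-XXXX."""
    digits = re.sub(r'\D', '', value)
    if len(digits) != 10:
        return None
    return re.sub(r'(\d{3})(\d{3})(\d{4})', r'\1-\2-\3', digits)

normalized = [normalize_phone(p) for p in raw_phones]
print(normalized)
```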

Summary

This concludes the article on the concepts of regular expressions and their practical use in Python. For more articles on regular expressions and Python, search the earlier articles on 代码网.
