I. Playing an RTSP stream

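If the stream is served over RTSP, with an address such as rtsp://127.0.0.1:5115/session.mpg, the uniapp <video> component can play it directly once the app is compiled for Android, but playback usually lags by 2-3 seconds.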

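II. Playing a WebRTC stream

If the stream is served over WebRTC, with an address such as webrtc://127.0.0.1:1988/live/livestream, we have to negotiate SDP, connect to the streaming server, and set up the audio/video channel ourselves before the stream can be played. Latency is usually around 500 ms.

Wrapping it in a webrtcvideo component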

<template>

<video id="rtc_media_player" width="100%" height="100%" autoplay playsinline></video>

</template>

<!-- This code uses a JS library and browser APIs (document, RTCPeerConnection), so it has to run in uniapp's renderjs -->

<script module="webrtcvideo" lang="renderjs">
import $ from "./jquery-1.10.2.min.js";
import {prepareurl} from "./utils.js";

export default {
  data() {
    return {
      // RTCPeerConnection object
      peerconnection: null,
      // Address of the WebRTC stream to play
      playurl: 'webrtc://127.0.0.1:1988/live/livestream'
    }
  },
  methods: {
    createpeerconnection() {
      const that = this
      // Create the WebRTC connection
      that.peerconnection = new RTCPeerConnection(null);
      // Add one receive-only transceiver each for audio and video
      that.peerconnection.addTransceiver("audio", { direction: "recvonly" });
      that.peerconnection.addTransceiver("video", { direction: "recvonly" });
      // When media arrives from the server, attach it to the player
      that.peerconnection.ontrack = (event) => {
        const remotevideo = document.getElementById("rtc_media_player");
        if (remotevideo.srcObject !== event.streams[0]) {
          remotevideo.srcObject = event.streams[0];
        }
      };
    },
    async makecall() {
      const url = this.playurl
      this.createpeerconnection()
      // Build the server request address, e.g. http://192.168.0.1:1988/rtc/v1/play/
      const conf = prepareurl(url);
      // Generate the offer SDP
      const offer = await this.peerconnection.createOffer();
      await this.peerconnection.setLocalDescription(offer);
      var session = await new Promise(function (resolve, reject) {
        $.ajax({
          type: "POST",
          url: conf.apiurl,
          data: offer.sdp,
          contentType: "text/plain",
          dataType: "json",
          crossDomain: true,
        })
        .done(function (data) {
          // The server returns the answer SDP; a non-zero code signals an error
          if (data.code) {
            reject(data);
            return;
          }
          resolve(data);
        })
        .fail(function (reason) {
          reject(reason);
        });
      });
      // Set the remote description; once SDP negotiation passes, the channel is established
      await this.peerconnection.setRemoteDescription(
        new RTCSessionDescription({ type: "answer", sdp: session.sdp })
      );
      session.simulator = conf.schema + '//' + conf.urlobject.server + ':' + conf.port + '/rtc/v1/nack/'
      return session;
    }
  },
  mounted() {
    // makecall() is async, so failures surface as a rejected promise
    // and have to be handled with .catch() rather than try/catch
    this.makecall().then((res) => {
      // WebRTC channel established
    }).catch((error) => {
      // WebRTC channel could not be established
      console.log(error)
    })
  }
}
</script>
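jQuery is pulled in above only to send a single POST request. As a rough sketch, assuming the WebView provides the standard `fetch` API, the same request could be written without it. `postOffer` and its `fetchImpl` parameter are hypothetical names introduced for illustration; the request body, content type, and `code` check mirror the `$.ajax` call above.

```javascript
// Hypothetical replacement for the $.ajax call in makecall().
// fetchImpl defaults to the global fetch and exists only so the
// function can be exercised with a stub.
function postOffer(apiurl, sdp, fetchImpl = fetch) {
  return fetchImpl(apiurl, {
    method: "POST",
    headers: { "Content-Type": "text/plain" },
    body: sdp,
  })
    .then((response) => {
      // Treat HTTP-level failures as errors before parsing the body
      if (!response.ok) {
        throw new Error("signaling request failed: HTTP " + response.status);
      }
      return response.json();
    })
    .then((data) => {
      // Same convention as above: a non-zero code means the server refused
      if (data.code) {
        throw new Error("server rejected the offer, code " + data.code);
      }
      return data;
    });
}
```

Inside `makecall`, `const session = await postOffer(conf.apiurl, offer.sdp);` would then take the place of the `new Promise(...)` wrapper.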

utils.js

const defaultpath = "/rtc/v1/play/";

export const prepareurl = webrtcurl => {
  var urlobject = parseurl(webrtcurl);
  // The signaling API is requested over plain HTTP here
  var schema = "http:";
  var port = urlobject.port || 1985;
  if (schema === "https:") {
    port = urlobject.port || 443;
  }
  // @see https://github.com/rtcdn/rtcdn-draft
  var api = urlobject.user_query.play || defaultpath;
  if (api.lastIndexOf("/") !== api.length - 1) {
    api += "/";
  }
  var apiurl = schema + "//" + urlobject.server + ":" + port + api;
  // Forward every user query parameter except the ones that select the API
  for (var key in urlobject.user_query) {
    if (key !== "api" && key !== "play") {
      apiurl += "&" + key + "=" + urlobject.user_query[key];
    }
  }
  // Replace /rtc/v1/play/&k=v with /rtc/v1/play/?k=v
  apiurl = apiurl.replace(api + "&", api + "?");
  var streamurl = urlobject.url;
  return {
    apiurl: apiurl,
    streamurl: streamurl,
    schema: schema,
    urlobject: urlobject,
    port: port,
    // Short random hex id for this request
    tid: Number(parseInt(new Date().getTime() * Math.random() * 100))
      .toString(16)
      .substr(0, 7)
  };
};

export const parseurl = url => {
  // @see: http://stackoverflow.com/questions/10469575/how-to-use-location-object-to-parse-url-without-redirecting-the-page-in-javascri
  var a = document.createElement("a");
  a.href = url
    .replace("rtmp://", "http://")
    .replace("webrtc://", "http://")
    .replace("rtc://", "http://");
  var vhost = a.hostname;
  var app = a.pathname.substr(1, a.pathname.lastIndexOf("/") - 1);
  var stream = a.pathname.substr(a.pathname.lastIndexOf("/") + 1);
  // Parse the vhost in the params of app, which SRS supports.
  app = app.replace("...vhost...", "?vhost=");
  if (app.indexOf("?") >= 0) {
    var params = app.substr(app.indexOf("?"));
    app = app.substr(0, app.indexOf("?"));
    if (params.indexOf("vhost=") > 0) {
      vhost = params.substr(params.indexOf("vhost=") + "vhost=".length);
      if (vhost.indexOf("&") > 0) {
        vhost = vhost.substr(0, vhost.indexOf("&"));
      }
    }
  }
  // When vhost equals the server and the server is an IP address,
  // the vhost is __defaultVhost__
  if (a.hostname === vhost) {
    var re = /^(\d+)\.(\d+)\.(\d+)\.(\d+)$/;
    if (re.test(a.hostname)) {
      vhost = "__defaultVhost__";
    }
  }
  // Parse the schema
  var schema = "rtmp";
  if (url.indexOf("://") > 0) {
    schema = url.substr(0, url.indexOf("://"));
  }
  var port = a.port;
  if (!port) {
    if (schema === "http") {
      port = 80;
    } else if (schema === "https") {
      port = 443;
    } else if (schema === "rtmp") {
      port = 1935;
    }
  }
  var ret = {
    url: url,
    schema: schema,
    server: a.hostname,
    port: port,
    vhost: vhost,
    app: app,
    stream: stream
  };
  fill_query(a.search, ret);
  // For the WebRTC API, use 443 if the page is HTTPS or the schema specifies it.
  if (!ret.port) {
    if (schema === "webrtc" || schema === "rtc") {
      if (ret.user_query.schema === "https") {
        ret.port = 443;
      } else if (window.location.href.indexOf("https://") === 0) {
        ret.port = 443;
      } else {
        // For WebRTC, SRS uses 1985 as the default API port.
        ret.port = 1985;
      }
    }
  }
  return ret;
};

export const fill_query = (query_string, obj) => {
  // Pure user query object.
  obj.user_query = {};
  if (query_string.length === 0) {
    return;
  }
  // Split again for AngularJS.
  if (query_string.indexOf("?") >= 0) {
    query_string = query_string.split("?")[1];
  }
  var queries = query_string.split("&");
  for (var i = 0; i < queries.length; i++) {
    var elem = queries[i];
    var query = elem.split("=");
    obj[query[0]] = query[1];
    obj.user_query[query[0]] = query[1];
  }
  // Alias domain for vhost.
  if (obj.domain) {
    obj.vhost = obj.domain;
  }
};
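`parseurl` relies on `document.createElement("a")`, so it only works where a DOM is available. As a rough alternative sketch, the standard `URL` and `URLSearchParams` classes can derive the same API address. `buildPlayApi` is a hypothetical name, not part of the original utils.js; the default path, the default port 1985, and the skipped `api`/`play` parameters are taken from `prepareurl` above. The vhost handling is left out.

```javascript
// Hypothetical helper (not in the original utils.js): derive the HTTP
// signaling endpoint from a webrtc:// play address without touching the DOM.
const DEFAULT_API_PATH = "/rtc/v1/play/";
const DEFAULT_API_PORT = 1985; // same default as prepareurl

function buildPlayApi(webrtcUrl) {
  // Swap the custom scheme for http:// first, as parseurl does
  const parsed = new URL(webrtcUrl.replace(/^(webrtc|rtc):\/\//, "http://"));
  const port = parsed.port || DEFAULT_API_PORT;

  // The "play" query parameter may override the API path
  let api = parsed.searchParams.get("play") || DEFAULT_API_PATH;
  if (!api.endsWith("/")) {
    api += "/";
  }

  // Forward every other query parameter except "api" and "play"
  const forwarded = new URLSearchParams();
  for (const [key, value] of parsed.searchParams) {
    if (key !== "api" && key !== "play") {
      forwarded.append(key, value);
    }
  }
  const query = forwarded.toString();

  return {
    apiurl: "http://" + parsed.hostname + ":" + port + api + (query ? "?" + query : ""),
    streamurl: webrtcUrl,
  };
}

// Example with the address used in this article
console.log(buildPlayApi("webrtc://127.0.0.1:1988/live/livestream").apiurl);
```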

Using it in a page

<template>
  <videowebrtc />
</template>

<script setup>
import videowebrtc from "@/components/videowebrtc";
</script>

Things to note:

1. One of the key markers in SDP negotiation is the media description, m=xxx <type> <code>. Example lines:

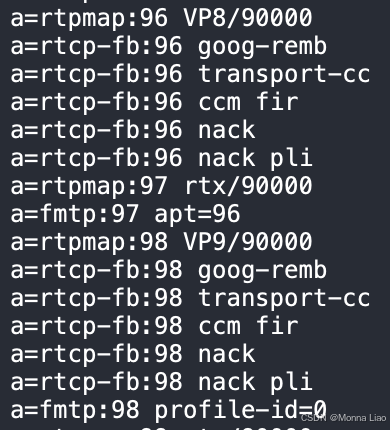

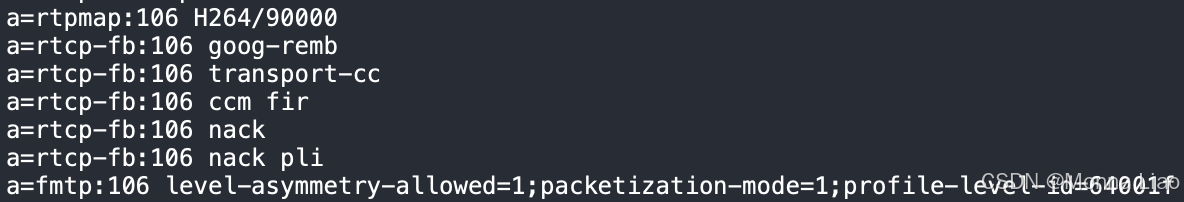

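A complete media description runs from one m=xxx <type> <code> line up to the next m=xxx <type> <code> line. Taking video as an example, the media description lists the video codecs the current device is able to play, commonly VP8, VP9, H264, and so on.

The codes listed after m=video include every code that appears after a=rtpmap in that section, and the string that follows each a=rtpmap code names the video format. The mapping between code and format is not fixed, however: device A might use a=rtpmap:106 H264/90000 for H264, while device B uses a=rtpmap:100 H264/90000.

So, to work out which video formats a device can play, look at the string that follows the code on each a=rtpmap line, not at the code itself.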
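To make this concrete, here is a minimal sketch that reads the a=rtpmap lines of one media section and maps each code to its format name. `listCodecs` and `supportsCodec` are hypothetical helper names, and the two SDP fragments are made up for illustration and trimmed to the relevant lines.

```javascript
// Collect the codecs advertised in one media section of an SDP.
// Returns a Map of code (payload type, as a string) -> format name.
function listCodecs(sdp, kind) {
  const codecs = new Map();
  let inSection = false;
  for (const line of sdp.split(/\r?\n/)) {
    if (line.startsWith("m=")) {
      // A media description runs from its m= line to the next m= line
      inSection = line.startsWith("m=" + kind + " ");
    } else if (inSection && line.startsWith("a=rtpmap:")) {
      // a=rtpmap:<code> <format name>/<clock rate>
      const match = /^a=rtpmap:(\d+) ([^/]+)\//.exec(line);
      if (match) {
        codecs.set(match[1], match[2]);
      }
    }
  }
  return codecs;
}

// Check support by format name, never by code
function supportsCodec(sdp, kind, name) {
  return [...listCodecs(sdp, kind).values()].some(
    (codec) => codec.toLowerCase() === name.toLowerCase()
  );
}

// Made-up fragments: the same format sits behind different codes
const deviceA = [
  "m=video 9 UDP/TLS/RTP/SAVPF 96 106",
  "a=rtpmap:96 VP8/90000",
  "a=rtpmap:106 H264/90000",
].join("\r\n");
const deviceB = [
  "m=video 9 UDP/TLS/RTP/SAVPF 100",
  "a=rtpmap:100 H264/90000",
].join("\r\n");

console.log(listCodecs(deviceA, "video").get("106")); // H264
console.log(listCodecs(deviceB, "video").get("100")); // H264
```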

Some of the criteria for a successful negotiation:

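- The number of m=xxx lines in the offer SDP must equal the number in the answer SDP;

- The m=xxx lines must appear in the same order in the offer SDP and the answer SDP: for example, both put m=audio first and m=video second, or both the other way round;

- Every <code> after m=audio in the answer SDP must also appear among the <code> values after m=audio in the offer SDP.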
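When an answer is rejected, these criteria can be checked by hand before digging deeper. The sketch below is only a debugging aid under stated assumptions: `mediaLines` and `checkAnswer` are hypothetical names, and the code check is applied to every m= line rather than to m=audio alone.

```javascript
// Extract the m= lines of an SDP, in order, as { kind, payloads } objects.
function mediaLines(sdp) {
  return sdp
    .split(/\r?\n/)
    .filter((line) => line.startsWith("m="))
    .map((line) => {
      // m=<kind> <port> <proto> <code> <code> ...
      const [kind, , , ...payloads] = line.slice(2).trim().split(/\s+/);
      return { kind, payloads };
    });
}

// Return a list of problems; an empty list means the three criteria hold.
function checkAnswer(offerSdp, answerSdp) {
  const offer = mediaLines(offerSdp);
  const answer = mediaLines(answerSdp);
  const problems = [];
  if (offer.length !== answer.length) {
    problems.push("m= count differs: offer " + offer.length + ", answer " + answer.length);
    return problems;
  }
  offer.forEach((offered, index) => {
    const answered = answer[index];
    if (offered.kind !== answered.kind) {
      problems.push("m= line " + index + ": offer has " + offered.kind + ", answer has " + answered.kind);
      return;
    }
    // Every code in the answer must have been offered
    const unknown = answered.payloads.filter((code) => !offered.payloads.includes(code));
    if (unknown.length > 0) {
      problems.push("m=" + answered.kind + ": codes not in the offer: " + unknown.join(" "));
    }
  });
  return problems;
}
```

Calling `checkAnswer(offer.sdp, session.sdp)` just before `setRemoteDescription` and logging the result is one way to use it.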

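The m=xxx lines of the offer SDP are created by addTransceiver: a first argument of audio produces m=audio, and a first argument of video produces m=video. The order of the calls determines the order of the m=xxx lines:

that.peerconnection.addTransceiver("audio", { direction: "recvonly" });
that.peerconnection.addTransceiver("video", { direction: "recvonly" });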

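- The SDP contains an a=mid:xxx attribute. In a browser its value may be audio or video, while on Android devices it is 0 or 1; the server must take care to match whatever the offer SDP uses.

- About the audio/video transceivers: the API used above is addTransceiver, but some Android devices report that this API does not exist. In that case we can switch to getUserMedia + addTrack: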

data() {
  return {
    ......
    localstream: null,
    ......
  }
},
methods: {
  createpeerconnection() {
    const that = this
    // Create the WebRTC connection
    that.peerconnection = new RTCPeerConnection(null);
    // Add the local tracks; each one produces an m= line in the offer
    that.localstream.getTracks().forEach((track) => {
      that.peerconnection.addTrack(track, that.localstream);
    });
    // When media arrives from the server, attach it to the player
    that.peerconnection.ontrack = (event) => {
      ......
    };
  },
  async makecall() {
    const that = this
    // Must run before createpeerconnection(), which reads localstream
    that.localstream = await navigator.mediaDevices.getUserMedia({
      video: true,
      audio: true,
    });
    const url = this.playurl
    ......
    ......
  }
}

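Note that navigator.mediaDevices.getUserMedia captures the media stream from the device's camera and microphone, so the device must have a camera and a microphone and the corresponding permissions must be granted; otherwise the call fails.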
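Because getUserMedia needs camera and microphone access just to receive a stream, another option that may be worth trying on such devices is the legacy `offerToReceiveAudio` / `offerToReceiveVideo` options of `createOffer`. The sketch below is untested on the devices mentioned above and rests on the assumption that a WebView without addTransceiver still honours those legacy options. `createRecvOnlyOffer` is a hypothetical name.

```javascript
// Hypothetical helper: ask for a receive-only offer using whichever API
// the WebView provides. `pc` is an RTCPeerConnection.
// Returns the promise produced by pc.createOffer().
function createRecvOnlyOffer(pc) {
  if (typeof pc.addTransceiver === "function") {
    // Current API: one receive-only transceiver per media kind,
    // audio first so that m=audio precedes m=video
    pc.addTransceiver("audio", { direction: "recvonly" });
    pc.addTransceiver("video", { direction: "recvonly" });
    return pc.createOffer();
  }
  // Legacy options (assumption: still supported where addTransceiver is missing)
  return pc.createOffer({
    offerToReceiveAudio: true,
    offerToReceiveVideo: true,
  });
}
```

With this approach no local stream is needed, so the camera and microphone permissions do not come into play. The order of the m= lines in the resulting offer should still be checked against what the server expects.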

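III. Real-time audio/video communication

In this kind of P2P scenario, a WebSocket connection to a server is usually used to exchange the signaling messages, and the page plays the local stream and the remote stream at the same time.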

<template>

<div>local video</div>

<video id="localvideo" autoplay playsinline></video>

<div>remote video</div>

<video id="remotevideo" autoplay playsinline></video>

</template>

<script module="webrtcvideo" lang="renderjs">
export default {
  data() {
    return {
      signalingserverurl: "ws://127.0.0.1:8085",
      iceserversurl: 'stun:stun.l.google.com:19302',
      localstream: null,
      peerconnection: null,
      // WebSocket connection to the signaling server
      ws: null
    }
  },
  methods: {
    async startlocalstream() {
      try {
        this.localstream = await navigator.mediaDevices.getUserMedia({
          video: true,
          audio: true,
        });
        document.getElementById("localvideo").srcObject = this.localstream;
      } catch (err) {
        console.error("error accessing media devices.", err);
      }
    },
    createpeerconnection() {
      const configuration = { iceServers: [{
        urls: this.iceserversurl
      }]};
      this.peerconnection = new RTCPeerConnection(configuration);
      // Send the local tracks to the other peer
      this.localstream.getTracks().forEach((track) => {
        this.peerconnection.addTrack(track, this.localstream);
      });
      // Forward each local ICE candidate through the signaling server
      this.peerconnection.onicecandidate = (event) => {
        if (event.candidate) {
          this.ws.send(
            JSON.stringify({
              type: "candidate",
              candidate: event.candidate,
            })
          );
        }
      };
      // Attach the remote stream to the second player
      this.peerconnection.ontrack = (event) => {
        const remotevideo = document.getElementById("remotevideo");
        if (remotevideo.srcObject !== event.streams[0]) {
          remotevideo.srcObject = event.streams[0];
        }
      };
    },
    async makecall() {
      this.createpeerconnection();
      const offer = await this.peerconnection.createOffer();
      await this.peerconnection.setLocalDescription(offer);
      this.ws.send(JSON.stringify(offer));
    }
  },
  mounted() {
    this.ws = new WebSocket(this.signalingserverurl);
    this.ws.onopen = async () => {
      console.log("connected to the signaling server");
      // An offer needs both the open socket and the local tracks
      await this.startlocalstream();
      if (this.localstream) {
        await this.makecall();
      }
    };
    this.ws.onmessage = async (message) => {
      const data = JSON.parse(message.data);
      if (data.type === "offer") {
        if (!this.peerconnection) this.createpeerconnection();
        await this.peerconnection.setRemoteDescription(
          new RTCSessionDescription(data)
        );
        const answer = await this.peerconnection.createAnswer();
        await this.peerconnection.setLocalDescription(answer);
        this.ws.send(JSON.stringify(this.peerconnection.localDescription));
      } else if (data.type === "answer") {
        if (!this.peerconnection) this.createpeerconnection();
        await this.peerconnection.setRemoteDescription(
          new RTCSessionDescription(data)
        );
      } else if (data.type === "candidate") {
        if (this.peerconnection) {
          try {
            await this.peerconnection.addIceCandidate(
              new RTCIceCandidate(data.candidate)
            );
          } catch (e) {
            console.error("error adding received ice candidate", e);
          }
        }
      }
    }
  }
}
</script>

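Compared with playing a WebRTC protocol stream, the P2P case uses WebSocket instead of ajax to send and receive the SDP and adds playback of the local stream; the rest of the code is the same as for playing the protocol stream.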
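One detail of the message handler above deserves care: a candidate that arrives before peerconnection exists is silently dropped, and addIceCandidate rejects while no remote description is set. A small buffer is one possible way to handle both cases. The sketch below is an illustration only, and `CandidateQueue` is a hypothetical name.

```javascript
// Hypothetical helper: hold remote ICE candidates until the peer connection
// has a remote description, then apply them in arrival order.
class CandidateQueue {
  constructor() {
    this.pending = [];
  }

  // Call from the "candidate" branch of ws.onmessage.
  // pc may be null if the peer connection has not been created yet.
  add(pc, candidate) {
    if (pc && pc.remoteDescription) {
      return pc.addIceCandidate(candidate);
    }
    this.pending.push(candidate);
    return Promise.resolve();
  }

  // Call right after setRemoteDescription() has resolved.
  flush(pc) {
    const queued = this.pending.splice(0);
    return Promise.all(queued.map((candidate) => pc.addIceCandidate(candidate)));
  }
}
```

In the component, the queue would be created once, `add(this.peerconnection, data.candidate)` would replace the direct addIceCandidate call, and `flush(this.peerconnection)` would follow each setRemoteDescription.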
