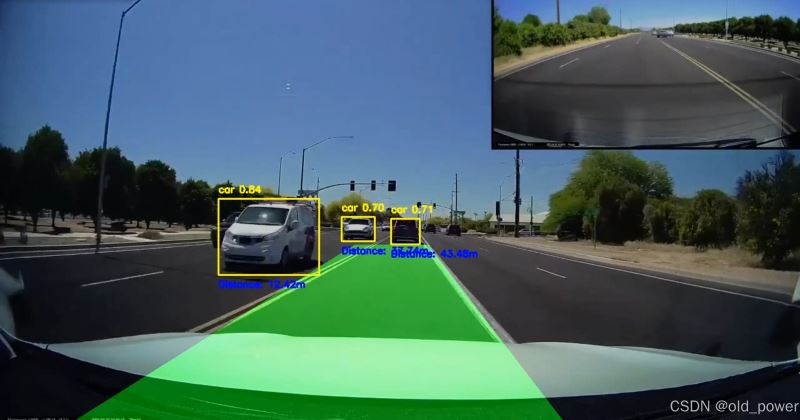

使用yolov8(you only look once)和opencv实现车道线和车辆检测,目标是创建一个可以检测道路上的车道并识别车辆的系统,并估计它们与摄像头的距离。该项目结合了计算机视觉技术和深度学习物体检测。

1、系统主要功能

- 车道检测:使用边缘检测和霍夫线变换检测道路车道。

- 汽车检测:使用 yolov8 模型识别汽车并在汽车周围绘制边界框。

- 距离估计:使用边界框大小计算检测到的汽车与摄像头的距离。

2、环境要求

- opencv:用于图像处理和车道检测。

- ultralytics yolov8:用于车辆检测。

- numpy:用于数组操作。

pip install opencv-python-headless numpy ultralytics

opencv-python 和 opencv-python-headless 区别是 opencv 的 python 包,主要区别在于是否包含 gui 相关的功能。

opencv-python

- 包含 gui 功能:支持窗口显示、鼠标事件等图形界面操作。

- 依赖:需要 gui 库(如 gtk、qt)支持。

- 适用场景:适用于需要显示图像或与用户交互的环境,如桌面应用。

opencv-python-headless

- 不包含 gui 功能:去除了窗口显示和用户交互功能。

- 依赖:无需 gui 库,适合无图形界面的环境。

- 适用场景:适用于服务器或无图形界面的环境,如远程服务器、docker 容器。

3、代码

import cv2

import numpy as np

import math

import time

from ultralytics import yolo # yolov8 module

# function to mask out the region of interest

def region_of_interest(img, vertices):

mask = np.zeros_like(img)

match_mask_color = 255

cv2.fillpoly(mask, vertices, match_mask_color)

masked_image = cv2.bitwise_and(img, mask)

return masked_image

# function to draw the filled polygon between the lane lines

def draw_lane_lines(img, left_line, right_line, color=[0, 255, 0], thickness=10):

line_img = np.zeros_like(img)

poly_pts = np.array([[

(left_line[0], left_line[1]),

(left_line[2], left_line[3]),

(right_line[2], right_line[3]),

(right_line[0], right_line[1])

]], dtype=np.int32)

# fill the polygon between the lines

cv2.fillpoly(line_img, poly_pts, color)

# overlay the polygon onto the original image

img = cv2.addweighted(img, 0.8, line_img, 0.5, 0.0)

return img

# the lane detection pipeline

def pipeline(image):

height = image.shape[0]

width = image.shape[1]

region_of_interest_vertices = [

(0, height),

(width / 2, height / 2),

(width, height),

]

# convert to grayscale and apply canny edge detection

gray_image = cv2.cvtcolor(image, cv2.color_rgb2gray)

cannyed_image = cv2.canny(gray_image, 100, 200)

# mask out the region of interest

cropped_image = region_of_interest(

cannyed_image,

np.array([region_of_interest_vertices], np.int32)

)

# perform hough line transformation to detect lines

lines = cv2.houghlinesp(

cropped_image,

rho=6,

theta=np.pi / 60,

threshold=160,

lines=np.array([]),

minlinelength=40,

maxlinegap=25

)

# separating left and right lines based on slope

left_line_x = []

left_line_y = []

right_line_x = []

right_line_y = []

if lines is none:

return image

for line in lines:

for x1, y1, x2, y2 in line:

slope = (y2 - y1) / (x2 - x1) if (x2 - x1) != 0 else 0

if math.fabs(slope) < 0.5: # ignore nearly horizontal lines

continue

if slope <= 0: # left lane

left_line_x.extend([x1, x2])

left_line_y.extend([y1, y2])

else: # right lane

right_line_x.extend([x1, x2])

right_line_y.extend([y1, y2])

# fit a linear polynomial to the left and right lines

min_y = int(image.shape[0] * (3 / 5)) # slightly below the middle of the image

max_y = image.shape[0] # bottom of the image

if left_line_x and left_line_y:

poly_left = np.poly1d(np.polyfit(left_line_y, left_line_x, deg=1))

left_x_start = int(poly_left(max_y))

left_x_end = int(poly_left(min_y))

else:

left_x_start, left_x_end = 0, 0 # defaults if no lines detected

if right_line_x and right_line_y:

poly_right = np.poly1d(np.polyfit(right_line_y, right_line_x, deg=1))

right_x_start = int(poly_right(max_y))

right_x_end = int(poly_right(min_y))

else:

right_x_start, right_x_end = 0, 0 # defaults if no lines detected

# create the filled polygon between the left and right lane lines

lane_image = draw_lane_lines(

image,

[left_x_start, max_y, left_x_end, min_y],

[right_x_start, max_y, right_x_end, min_y]

)

return lane_image

# function to estimate distance based on bounding box size

def estimate_distance(bbox_width, bbox_height):

# for simplicity, assume the distance is inversely proportional to the box size

# this is a basic estimation, you may use camera calibration for more accuracy

focal_length = 1000 # example focal length, modify based on camera setup

known_width = 2.0 # approximate width of the car (in meters)

distance = (known_width * focal_length) / bbox_width # basic distance estimation

return distance

# main function to read and process video with yolov8

def process_video():

# load the yolov8 model

model = yolo('weights/yolov8n.pt')

# 或者加载官方模型

# model = yolo("yolov8n.pt") # load an official model

# open the video file

cap = cv2.videocapture('video/video.mp4')

# check if video opened successfully

if not cap.isopened():

print("error: unable to open video file.")

return

# set the desired frame rate

target_fps = 30

frame_time = 1.0 / target_fps # time per frame to maintain 30fps

# resize to 720p (1280x720)

cap.set(cv2.cap_prop_frame_width, 1280)

cap.set(cv2.cap_prop_frame_height, 720)

# loop through each frame

while cap.isopened():

ret, frame = cap.read()

if not ret:

break

# resize frame to 720p

resized_frame = cv2.resize(frame, (1280, 720))

# run the lane detection pipeline

lane_frame = pipeline(resized_frame)

# run yolov8 to detect cars in the current frame

results = model(resized_frame)

# process the detections from yolov8

for result in results:

boxes = result.boxes

for box in boxes:

x1, y1, x2, y2 = map(int, box.xyxy[0]) # bounding box coordinates

conf = box.conf[0] # confidence score

cls = int(box.cls[0]) # class id

# only draw bounding boxes for cars with confidence >= 0.5

if model.names[cls] == 'car' and conf >= 0.5:

label = f'{model.names[cls]} {conf:.2f}'

# draw the bounding box

cv2.rectangle(lane_frame, (x1, y1), (x2, y2), (0, 255, 255), 2)

cv2.puttext(lane_frame, label, (x1, y1 - 10),

cv2.font_hershey_simplex, 0.5, (0, 255, 255), 2)

# estimate the distance of the car

bbox_width = x2 - x1

bbox_height = y2 - y1

distance = estimate_distance(bbox_width, bbox_height)

# display the estimated distance

distance_label = f'distance: {distance:.2f}m'

cv2.puttext(lane_frame, distance_label, (x1, y2 + 20),

cv2.font_hershey_simplex, 0.5, (255, 0, 0), 2)

# display the resulting frame with both lane detection and car detection

cv2.imshow('lane and car detection', lane_frame)

# limit the frame rate to 30fps

time.sleep(frame_time)

# break the loop when 'q' is pressed

if cv2.waitkey(1) & 0xff == ord('q'):

break

# release video capture and close windows

cap.release()

cv2.destroyallwindows()

# run the video processing function

process_video()4、工作原理

4.1 车道线检测 pipeline

车道线检测包括一下几个步骤:

step 1: 屏蔽感兴趣区域(roi)

只处理图像的下半部分(车道线通常是可见的)。

def region_of_interest(img, vertices):

mask = np.zeros_like(img)

match_mask_color = 255

cv2.fillpoly(mask, vertices, match_mask_color)

masked_image = cv2.bitwise_and(img, mask)

return masked_imagestep 2: 使用canny进行边缘检测

将图像转换为灰度,并应用canny边缘检测来突出显示边缘。

gray_image = cv2.cvtcolor(image, cv2.color_rgb2gray) cannyed_image = cv2.canny(gray_image, 100, 200)

step 3: 霍夫线变换

霍夫线变换用于检测当前车道的线段。

lines = cv2.houghlinesp(

cropped_image,

rho=6,

theta=np.pi / 60,

threshold=160,

lines=np.array([]),

minlinelength=40,

maxlinegap=25

)4.2 使用yolov8进行车辆检测

step 1: 加载yolov8模型

我们使用预训练的yolov8模型来检测每一帧中的汽车(或者使用官方提供的模型)。

from ultralytics import yolo

model = yolo('weights/yolov8n.pt')

# model = yolo('yolov8n.pt') #官方提供的模型step 2: 绘制边界框

对于每一辆检测到的汽车,绘制边界框,并显示类名(汽车)和置信度分数。

for box in boxes:

x1, y1, x2, y2 = map(int, box.xyxy[0])

conf = box.conf[0]

if model.names[cls] == 'car' and conf >= 0.5:

label = f'{model.names[cls]} {conf:.2f}'

cv2.rectangle(lane_frame, (x1, y1), (x2, y2), (0, 255, 255), 2)

cv2.puttext(lane_frame, label, (x1, y1 - 10), cv2.font_hershey_simplex, 0.5, (0, 255, 255), 2)step 3:. 距离估计

根据边界框的大小估计到每辆检测到的汽车的距离。

def estimate_distance(bbox_width, bbox_height):

focal_length = 1000 # example focal length

known_width = 2.0 # approximate width of a car (in meters)

distance = (known_width * focal_length) / bbox_width

return distancestep 4:. 视频处理 pipeline

将车道检测、车辆检测和距离估计结合到一个实时视频处理pipeline中。

while cap.isopened():

ret, frame = cap.read()

if not ret:

break

lane_frame = pipeline(resized_frame)

results = model(resized_frame)

for result in results:

# draw bounding boxes and estimate distance

cv2.imshow('lane and car detection', lane_frame)

if cv2.waitkey(1) & 0xff == ord('q'):

break5、结果

项目源码地址: https://github.com/cityisbetter/lane_detection

到此这篇关于python基于yolov8和opencv实现车道线和车辆检测的文章就介绍到这了,更多相关python yolov8和opencv车道线检测内容请搜索代码网以前的文章或继续浏览下面的相关文章希望大家以后多多支持代码网!

发表评论