第一步:准备数据

25种鸟类数据:self.class_indict = ["非洲冠鹤", "灰顶火雀", "信天翁", "亚历山大鹦鹉", "褐胸反嘴鹬", "美洲麻鳽", "美洲骨顶", "美洲金翅雀", "美洲隼", "美洲鹨", "美洲红尾鸟", "美洲蛇鸟", "安式蜂鸟", "蚁鸟", "阿拉里皮娇鹟", "朱鹮", "白头鵰", "巴厘岛八哥", "摩金莺", "蕉林莺", "带斑阔嘴鸟", "斑尾塍鹬", "仓鸮", "燕子", "横斑蓬头䴕"]

,总共有3800张图片,每个文件夹单独放一种数据

第二步:搭建模型

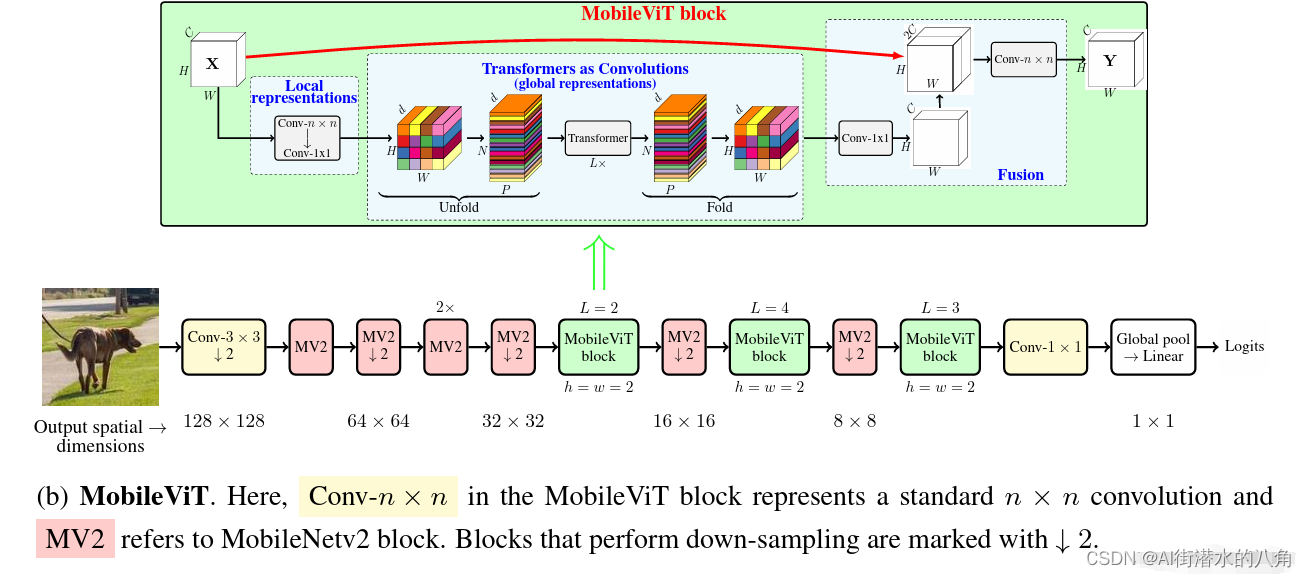

本文选择一个mobilevit网络,其原理介绍如下:

mobilevit是一种基于vit(vision transformer)架构的轻量级视觉模型,旨在适用于移动设备和嵌入式系统。vit是一种非常成功的深度学习模型,用于图像分类和其他计算机视觉任务,但通常需要大量的计算资源和参数。mobilevit的目标是在保持高性能的同时,减少模型的大小和计算需求,以便在移动设备上运行,据作者介绍,这是第一次基于轻量级cnn网络性能的轻量级vit工作,性能sota。性能优于mobilenetv3、crossvit等网络。

vision transformer结构

下图是mobilevit论文中绘制的standard visual transformer。首先将输入的图片划分成n个patch,然后通过线性变化将每个patch映射到一维向量中(token),接着加上位置偏置信息(可学习参数),再通过一系列transformer block,最后通过一个全连接层得到最终预测输出。

mobilevit结构

上面展示是标准视觉vit模型,下面来看下本次介绍的重点:mobile-vit网路结构,如下图所示:

第三步:训练代码

1)损失函数为:交叉熵损失函数

2)训练代码:

import os

import argparse

import torch

import torch.optim as optim

from torch.utils.tensorboard import summarywriter

from torchvision import transforms

from my_dataset import mydataset

from model import mobile_vit_xx_small as create_model

from utils import read_split_data, train_one_epoch, evaluate

def main(args):

device = torch.device(args.device if torch.cuda.is_available() else "cpu")

if os.path.exists("./weights") is false:

os.makedirs("./weights")

tb_writer = summarywriter()

train_images_path, train_images_label, val_images_path, val_images_label = read_split_data(args.data_path)

img_size = 224

data_transform = {

"train": transforms.compose([transforms.randomresizedcrop(img_size),

transforms.randomhorizontalflip(),

transforms.totensor(),

transforms.normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),

"val": transforms.compose([transforms.resize(int(img_size * 1.143)),

transforms.centercrop(img_size),

transforms.totensor(),

transforms.normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

# 实例化训练数据集

train_dataset = mydataset(images_path=train_images_path,

images_class=train_images_label,

transform=data_transform["train"])

# 实例化验证数据集

val_dataset = mydataset(images_path=val_images_path,

images_class=val_images_label,

transform=data_transform["val"])

batch_size = args.batch_size

nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8]) # number of workers

print('using {} dataloader workers every process'.format(nw))

train_loader = torch.utils.data.dataloader(train_dataset,

batch_size=batch_size,

shuffle=true,

pin_memory=true,

num_workers=nw,

collate_fn=train_dataset.collate_fn)

val_loader = torch.utils.data.dataloader(val_dataset,

batch_size=batch_size,

shuffle=false,

pin_memory=true,

num_workers=nw,

collate_fn=val_dataset.collate_fn)

model = create_model(num_classes=args.num_classes).to(device)

if args.weights != "":

assert os.path.exists(args.weights), "weights file: '{}' not exist.".format(args.weights)

weights_dict = torch.load(args.weights, map_location=device)

weights_dict = weights_dict["model"] if "model" in weights_dict else weights_dict

# 删除有关分类类别的权重

for k in list(weights_dict.keys()):

if "classifier" in k:

del weights_dict[k]

print(model.load_state_dict(weights_dict, strict=false))

if args.freeze_layers:

for name, para in model.named_parameters():

# 除head外,其他权重全部冻结

if "classifier" not in name:

para.requires_grad_(false)

else:

print("training {}".format(name))

pg = [p for p in model.parameters() if p.requires_grad]

optimizer = optim.adamw(pg, lr=args.lr, weight_decay=1e-2)

best_acc = 0.

for epoch in range(args.epochs):

# train

train_loss, train_acc = train_one_epoch(model=model,

optimizer=optimizer,

data_loader=train_loader,

device=device,

epoch=epoch)

# validate

val_loss, val_acc = evaluate(model=model,

data_loader=val_loader,

device=device,

epoch=epoch)

tags = ["train_loss", "train_acc", "val_loss", "val_acc", "learning_rate"]

tb_writer.add_scalar(tags[0], train_loss, epoch)

tb_writer.add_scalar(tags[1], train_acc, epoch)

tb_writer.add_scalar(tags[2], val_loss, epoch)

tb_writer.add_scalar(tags[3], val_acc, epoch)

tb_writer.add_scalar(tags[4], optimizer.param_groups[0]["lr"], epoch)

if val_acc > best_acc:

best_acc = val_acc

torch.save(model.state_dict(), "./weights/best_model.pth")

torch.save(model.state_dict(), "./weights/latest_model.pth")

if __name__ == '__main__':

parser = argparse.argumentparser()

parser.add_argument('--num_classes', type=int, default=25)

parser.add_argument('--epochs', type=int, default=100)

parser.add_argument('--batch-size', type=int, default=4)

parser.add_argument('--lr', type=float, default=0.0002)

# 数据集所在根目录

# https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz

parser.add_argument('--data-path', type=str,

default=r"g:\demo\data\bird_dataset\birds\train")

# 预训练权重路径,如果不想载入就设置为空字符

parser.add_argument('--weights', type=str, default='./mobilevit_xxs.pt',

help='initial weights path')

# 是否冻结权重

parser.add_argument('--freeze-layers', type=bool, default=false)

parser.add_argument('--device', default='cuda:0', help='device id (i.e. 0 or 0,1 or cpu)')

opt = parser.parse_args()

main(opt)

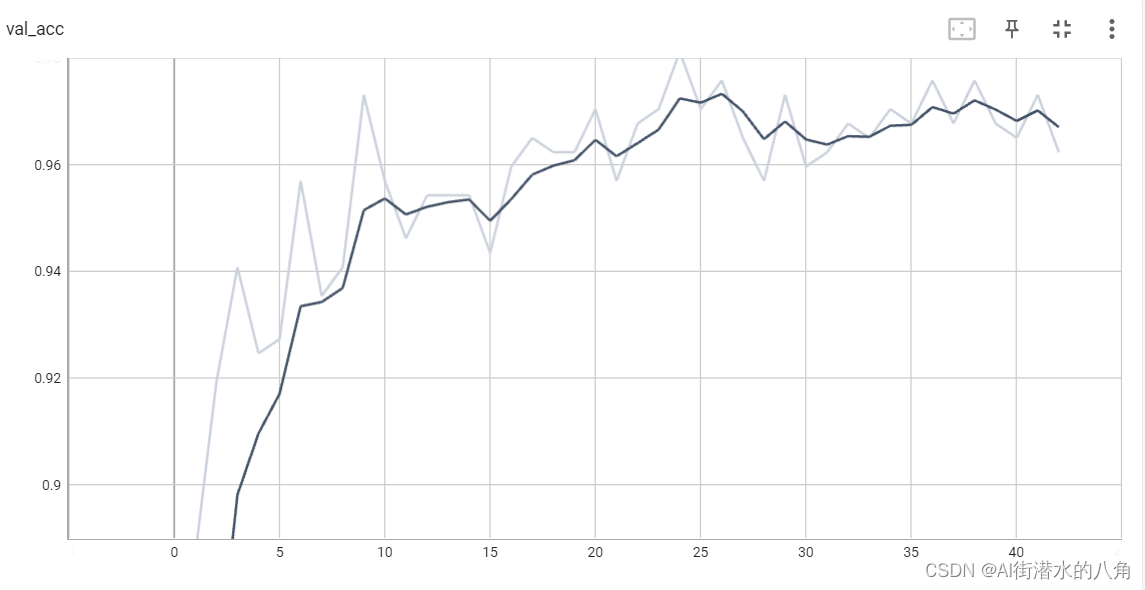

第四步:统计正确率

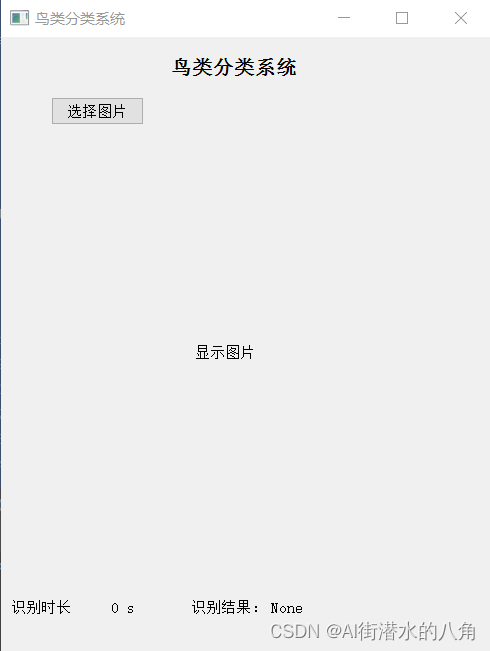

第五步:搭建gui界面

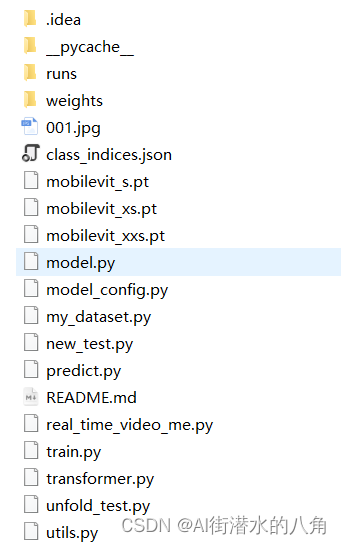

第六步:整个工程的内容

有训练代码和训练好的模型以及训练过程,提供数据,提供gui界面代码

代码的下载路径(新窗口打开链接):基于pytorch框架的深度学习mobilevit神经网络鸟类识别分类系统源码

有问题可以私信或者留言,有问必答

](/images/newimg/nimg6.png)

发表评论