The Question

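When designing table schemas, our design guidelines include this rule: for variable-length columns, use the shortest declared length that still meets the requirements. The rule rests on two considerations: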

Storage space

Performance

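It is often claimed online that varchar(50) and varchar(500) take up the same storage space. Is that really true? And on the performance side, does an overly long declared length actually hurt query performance?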

In this article I investigate and verify both questions:

Verifying the difference in storage space

1. Prepare two tables

```sql
create table `category_info_varchar_50` (
  `id` bigint(20) not null auto_increment comment 'primary key',
  `name` varchar(50) not null comment 'category name',
  `is_show` tinyint(4) not null default '0' comment 'shown: 0 disabled, 1 enabled',
  `sort` int(11) not null default '0' comment 'sort order',
  `deleted` tinyint(1) default '0' comment 'deleted flag',
  `create_time` datetime not null comment 'created at',
  `update_time` datetime not null comment 'updated at',
  primary key (`id`) using btree,
  key `idx_name` (`name`) using btree comment 'name index'
) engine=innodb default charset=utf8mb4 comment='category';

create table `category_info_varchar_500` (
  `id` bigint(20) not null auto_increment comment 'primary key',
  `name` varchar(500) not null comment 'category name',
  `is_show` tinyint(4) not null default '0' comment 'shown: 0 disabled, 1 enabled',
  `sort` int(11) not null default '0' comment 'sort order',
  `deleted` tinyint(1) default '0' comment 'deleted flag',
  `create_time` datetime not null comment 'created at',
  `update_time` datetime not null comment 'updated at',
  primary key (`id`) using btree,
  key `idx_name` (`name`) using btree comment 'name index'
) engine=innodb default charset=utf8mb4 comment='category';
```

2. Prepare the data

Insert the same data into both tables. To make any difference stand out, insert 1,000,000 rows.
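The stored procedure below builds multi-row INSERT statements in batches of 500 rows, using `least(total, start_idx + batch_size - 1)` to find the end of each batch. Its batching arithmetic can be sketched in Python as follows. This is only an illustration: `batch_bounds` and `values_clause` are hypothetical names, and the sketch builds strings without talking to MySQL.

```python
from typing import Iterator, Tuple


def batch_bounds(total: int, batch_size: int = 500) -> Iterator[Tuple[int, int]]:
    """Yield (start_idx, end_idx) pairs, mirroring least(total, start_idx + batch_size - 1)."""
    start = 1
    while start <= total:
        end = min(total, start + batch_size - 1)
        yield start, end
        start = end + 1


def values_clause(start: int, end: int) -> str:
    """Build the multi-row VALUES list the procedure concatenates for one batch."""
    rows = [f"('name{i}', 0, 0, 0, now(), now())" for i in range(start, end + 1)]
    # join() avoids the trailing comma that the procedure strips with left()
    return ",".join(rows)


bounds = list(batch_bounds(1_000_000))
print(len(bounds), bounds[0], bounds[-1])
print(values_clause(1, 2))
```

For 1,000,000 rows this yields 2,000 batches per table, so the procedure executes 4,000 INSERT statements in total.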

```sql
delimiter $$

create procedure batchinsertdata(in total int)
begin
    declare start_idx int default 1;
    declare end_idx int;
    declare batch_size int default 500;
    declare insert_values text;

    set end_idx = least(total, start_idx + batch_size - 1);

    while start_idx <= total do
        -- build one multi-row VALUES list of up to batch_size rows
        set insert_values = '';
        while start_idx <= end_idx do
            set insert_values = concat(insert_values, concat('(\'name', start_idx, '\', 0, 0, 0, now(), now()),'));
            set start_idx = start_idx + 1;
        end while;

        set insert_values = left(insert_values, length(insert_values) - 1); -- remove the trailing comma

        -- insert the identical batch into both tables
        set @sql = concat('insert into category_info_varchar_50 (name, is_show, sort, deleted, create_time, update_time) values ', insert_values, ';');
        prepare stmt from @sql;
        execute stmt;
        deallocate prepare stmt;

        set @sql = concat('insert into category_info_varchar_500 (name, is_show, sort, deleted, create_time, update_time) values ', insert_values, ';');
        prepare stmt from @sql;
        execute stmt;
        deallocate prepare stmt;

        -- advance to the next batch
        set end_idx = least(total, start_idx + batch_size - 1);
    end while;
end$$

delimiter ;

call batchinsertdata(1000000);
```

3. Verify the storage space

Query for the first table:

```sql
select
    table_schema as "database",
    table_name as "table",
    table_rows as "rows",
    truncate(data_length / 1024 / 1024, 2) as "data size (MB)",
    truncate(index_length / 1024 / 1024, 2) as "index size (MB)"
from
    information_schema.tables
where
    table_schema = 'test_mysql_field'
    and table_name = 'category_info_varchar_50'
order by
    data_length desc,
    index_length desc;
```

Query result:

Query for the second table:

```sql
select
    table_schema as "database",
    table_name as "table",
    table_rows as "rows",
    truncate(data_length / 1024 / 1024, 2) as "data size (MB)",
    truncate(index_length / 1024 / 1024, 2) as "index size (MB)"
from
    information_schema.tables
where
    table_schema = 'test_mysql_field'
    and table_name = 'category_info_varchar_500'
order by
    data_length desc,
    index_length desc;
```

Query result:

4. Conclusion

For this data set the two tables do occupy exactly the same space; there is no difference.
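One way to see why the sizes match: in InnoDB's COMPACT row format (which DYNAMIC builds on), each non-NULL variable-length column gets a 1- or 2-byte length entry in the record header. Two bytes are needed only when the column's maximum byte length exceeds 255 and the actual value is longer than 127 bytes, or when part of the value is stored off-page. The Python sketch below models only that rule. The function names are hypothetical, off-page storage is not modeled, and it assumes utf8mb4's maximum of 4 bytes per character.

```python
def length_header_bytes(declared_chars: int, value: str, max_bytes_per_char: int = 4) -> int:
    """Bytes InnoDB COMPACT/DYNAMIC uses to record the length of one
    in-page, non-NULL VARCHAR value (off-page storage is not modeled)."""
    max_len = declared_chars * max_bytes_per_char  # utf8mb4: up to 4 bytes per character
    actual_len = len(value.encode("utf-8"))
    # 2 bytes only if the column may exceed 255 bytes AND this value exceeds 127 bytes
    if max_len > 255 and actual_len > 127:
        return 2
    return 1


def row_varchar_bytes(declared_chars: int, value: str) -> int:
    """Length-header bytes plus the value's own bytes."""
    return length_header_bytes(declared_chars, value) + len(value.encode("utf-8"))


for n in (50, 500):
    print(n, row_varchar_bytes(n, "name1000000"))
```

Values such as `name1000000` are only 11 bytes, so both varchar(50) and varchar(500) spend 1 header byte plus 11 data bytes on them. Only values longer than 127 bytes would make the varchar(500) column pay an extra header byte.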

Verifying the difference in performance

1. Covering-index queries

```sql
select name from category_info_varchar_50 where name = 'name100000';  -- took 0.012 s
select name from category_info_varchar_500 where name = 'name100000'; -- took 0.012 s
select name from category_info_varchar_50 order by name;              -- took 0.370 s
select name from category_info_varchar_500 order by name;             -- took 0.379 s
```

When the query is satisfied by a covering index, the performance difference is negligible.

2. Index lookups

```sql
select * from category_info_varchar_50 where name = 'name100000';
-- took 0.012 s

select * from category_info_varchar_500 where name = 'name100000';
-- took 0.012 s
```

```sql
select * from category_info_varchar_50 where name in ('name100','name1000','name100000','name10000','name1100000',
'name200','name2000','name200000','name20000','name2200000','name300','name3000','name300000','name30000','name3300000',
'name400','name4000','name400000','name40000','name4400000','name500','name5000','name500000','name50000','name5500000',
'name600','name6000','name600000','name60000','name6600000','name700','name7000','name700000','name70000','name7700000','name800',
'name8000','name800000','name80000','name6600000','name900','name9000','name900000','name90000','name9900000');
-- took 0.011 s - 0.014 s
-- with order by name added: 0.012 s - 0.015 s
```

```sql
select * from category_info_varchar_500 where name in ('name100','name1000','name100000','name10000','name1100000',
'name200','name2000','name200000','name20000','name2200000','name300','name3000','name300000','name30000','name3300000',
'name400','name4000','name400000','name40000','name4400000','name500','name5000','name500000','name50000','name5500000',
'name600','name6000','name600000','name60000','name6600000','name700','name7000','name700000','name70000','name7700000','name800',
'name8000','name800000','name80000','name6600000','name900','name9000','name900000','name90000','name9900000');
-- took 0.012 s - 0.014 s
-- with order by name added: 0.014 s - 0.017 s
```

Index range query performance is essentially the same; once order by is added, a small difference starts to appear.
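The IN list has 45 entries, but not all of them can match. The rows are named name1 through name1000000, so values such as 'name1100000' do not exist, and 'name6600000' appears twice. The quick Python check below assumes the tables contain only the rows created by `batchinsertdata(1000000)`. It counts how many rows the query can actually return, which helps explain why adding order by costs so little here: only a few dozen rows need sorting. `matching_names` is a hypothetical helper.

```python
in_list = [
    "name100", "name1000", "name100000", "name10000", "name1100000",
    "name200", "name2000", "name200000", "name20000", "name2200000",
    "name300", "name3000", "name300000", "name30000", "name3300000",
    "name400", "name4000", "name400000", "name40000", "name4400000",
    "name500", "name5000", "name500000", "name50000", "name5500000",
    "name600", "name6000", "name600000", "name60000", "name6600000",
    "name700", "name7000", "name700000", "name70000", "name7700000", "name800",
    "name8000", "name800000", "name80000", "name6600000",
    "name900", "name9000", "name900000", "name90000", "name9900000",
]
TOTAL_ROWS = 1_000_000  # rows inserted by batchinsertdata(1000000)


def matching_names(names: list, total: int) -> set:
    """Distinct names that can exist, given rows named name1..name{total}."""
    return {n for n in names if 1 <= int(n[len("name"):]) <= total}


print(len(in_list), len(set(in_list)), len(matching_names(in_list, TOTAL_ROWS)))
```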

3. Full-table queries and sorting

Full table, no sorting

Full table, with sorting

```sql
select * from category_info_varchar_50 order by name;  -- took 1.498 s
select * from category_info_varchar_500 order by name; -- took 4.875 s
```

Conclusion:

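For a full table scan without sorting, the two tables perform the same. With sorting, the gap is huge: the varchar(500) query took 4.875 s versus 1.498 s, more than three times as long.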
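Putting the timings reported above side by side makes the contrast concrete. The Python snippet below simply divides each varchar(500) time by the matching varchar(50) time. The IN-list range queries are left out because only time ranges were reported for them, and `slowdown` is a hypothetical helper name.

```python
# (varchar(50) seconds, varchar(500) seconds), as reported in the tests above
timings = {
    "covering index, equality": (0.012, 0.012),
    "covering index, order by name": (0.370, 0.379),
    "select *, equality": (0.012, 0.012),
    "full table, order by name": (1.498, 4.875),
}


def slowdown(t50: float, t500: float) -> float:
    """How many times longer the varchar(500) query took than the varchar(50) one."""
    return t500 / t50


for label, (t50, t500) in timings.items():
    print(f"{label}: {slowdown(t50, t500):.2f}x")
```

Only the full-table sort stands out, at roughly 3.25x. The covering-index sort is within about 2%.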

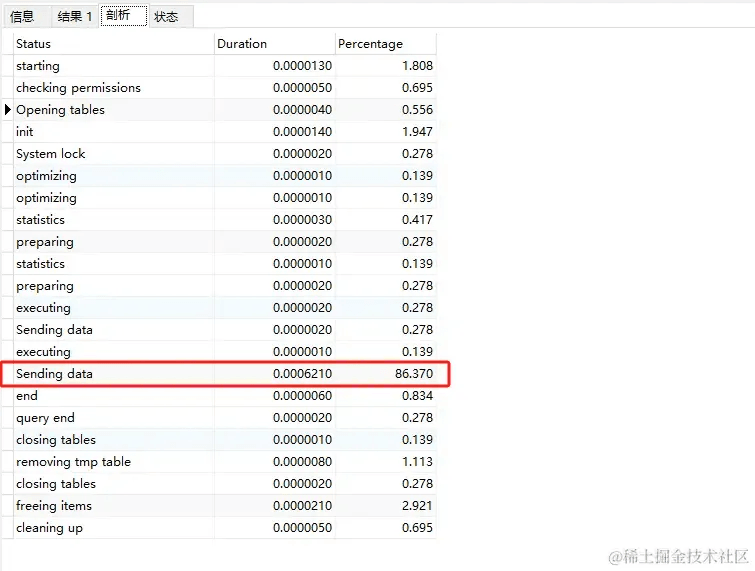

Analyzing the cause

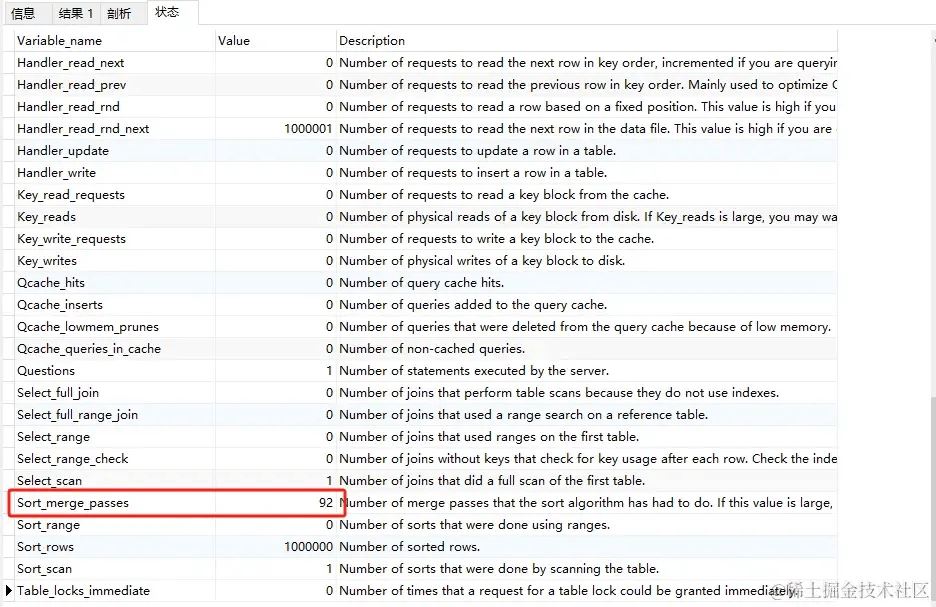

Profiling the full-table query on varchar(50)

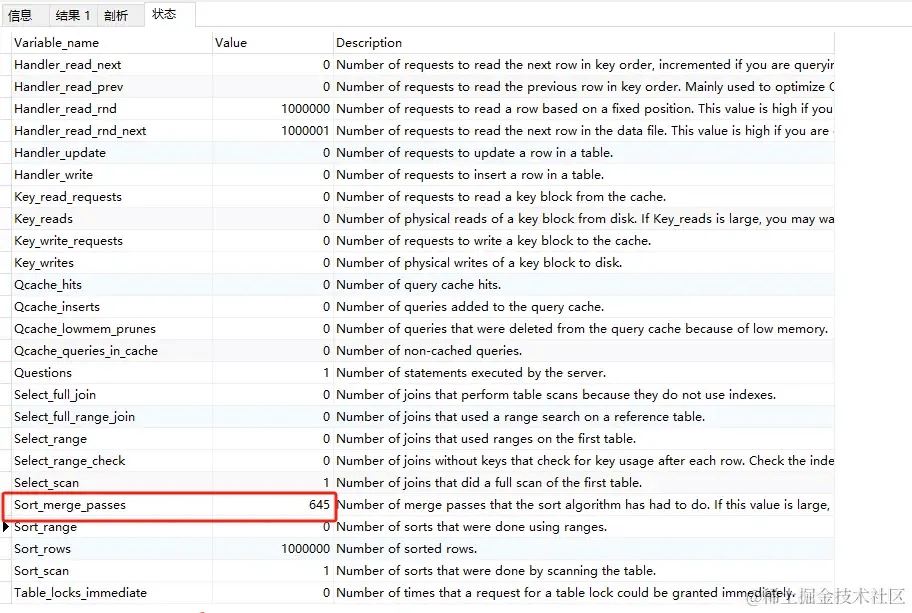

I found that 86% of the time was spent on data transfer. Next, look at the status section, focusing on created_tmp_files and sort_merge_passes:

created_tmp_files: 3

sort_merge_passes: 95

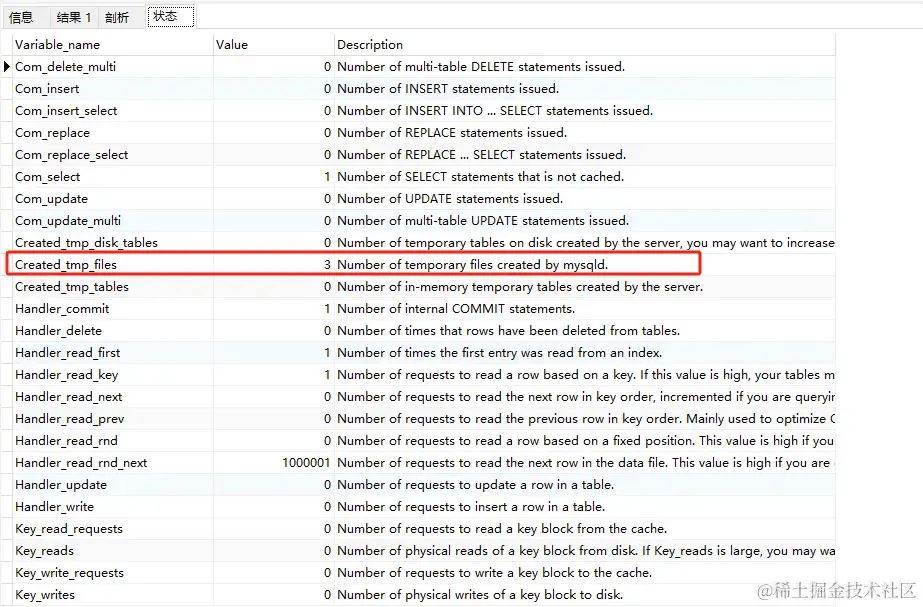

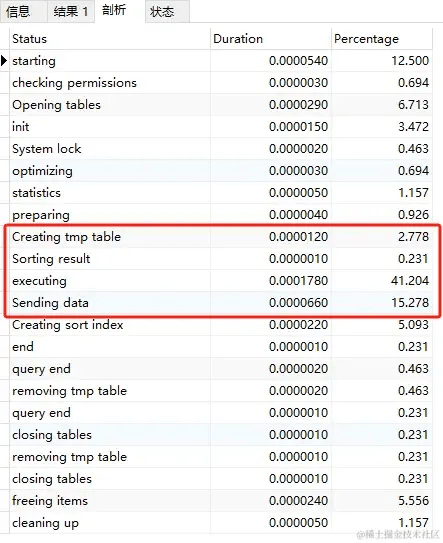

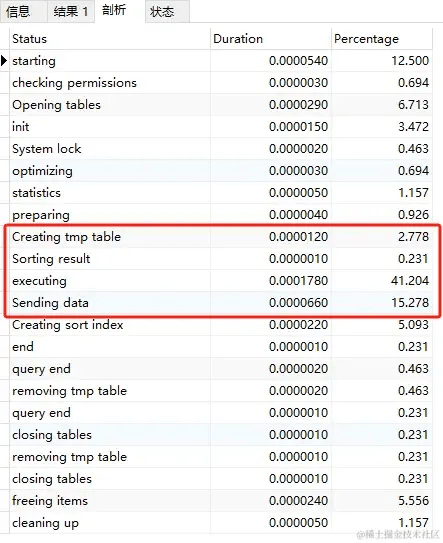

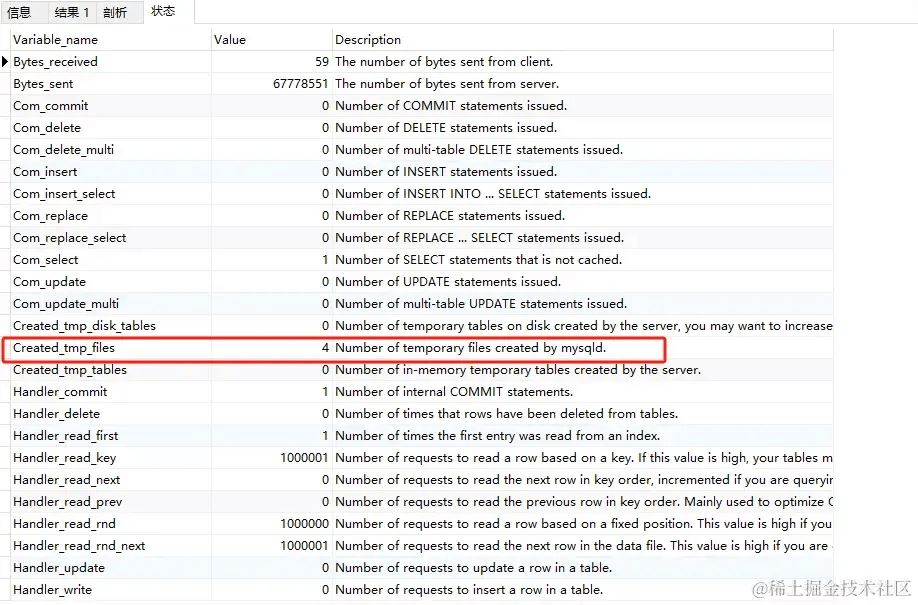

Profiling the full-table query on varchar(500)

An additional temporary-table sort step appears.

created_tmp_files: 4

sort_merge_passes: 645

The MySQL documentation describes sort_merge_passes as follows:

"Number of merge passes that the sort algorithm has had to do. If this value is large, you may want to increase the value of the sort_buffer_size."

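In effect, sort_merge_passes counts the merge passes MySQL performs during its merge sort. A large value means the data to be sorted is much larger than the sort buffer. We can reduce the number of merge passes either by increasing sort_buffer_size or by making each entry written into the sort buffer smaller.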
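The relationship between buffer size, entry size, and merge work can be illustrated with a textbook external merge sort. This Python model is a simplification, not MySQL's filesort. Each initial run holds as many fixed-size records as fit in the buffer, and runs are then merged `fan_in` at a time, level by level. The fan-in of 7 is an assumption for illustration, and the counts are not expected to reproduce the 95 and 645 reported above. `external_sort_stats` is a hypothetical name.

```python
import math
from typing import Tuple


def external_sort_stats(n_records: int, record_bytes: int, buffer_bytes: int,
                        fan_in: int = 7) -> Tuple[int, int, int]:
    """Simplified external merge sort: returns (initial_runs, merge_operations, merge_levels)."""
    per_run = buffer_bytes // record_bytes
    if per_run == 0:
        raise ValueError("a single record does not fit in the buffer")
    runs = math.ceil(n_records / per_run)
    initial_runs, merges, levels = runs, 0, 0
    while runs > 1:
        full_groups, leftover = divmod(runs, fan_in)
        # each full group is one merge; a lone leftover run is carried over unmerged
        merges += full_groups + (1 if leftover >= 2 else 0)
        runs = full_groups + (1 if leftover else 0)
        levels += 1
    return initial_runs, merges, levels


print(external_sort_stats(1000, 10, 100))   # small records
print(external_sort_stats(1000, 100, 100))  # records 10x larger, same buffer
```

With the same buffer, making each record ten times larger turns 100 initial runs into 1,000 and the number of merges grows from 18 to 168. Shrinking the entries or enlarging the buffer reverses the effect, which matches the two remedies described above.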

Final conclusion

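It is now easy to see what happens. When we sort on this column, MySQL estimates the memory it needs from the column's declared length. If the declared variable length is excessively large, the estimate exceeds sort_buffer_size, so MySQL falls back to sorting with temporary files on disk, and query performance ultimately suffers.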
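To make the memory-estimate argument concrete, here is a back-of-the-envelope Python model. It assumes, as the conclusion does, that each sort key is sized from the column's declared maximum byte length (4 bytes per character for utf8mb4), capped at max_sort_length. It uses assumed default values of 262144 bytes for sort_buffer_size and 1024 for max_sort_length, which you can check on your own server with `show variables`. Row pointers, other selected columns, and collation weight encoding are ignored, so the absolute numbers are only indicative.

```python
import math

SORT_BUFFER_SIZE = 262_144  # assumed default sort_buffer_size, in bytes
MAX_SORT_LENGTH = 1_024     # assumed default max_sort_length, in bytes
UTF8MB4_MAX_BYTES = 4       # utf8mb4 uses up to 4 bytes per character


def estimated_key_bytes(declared_chars: int) -> int:
    """Sort-key size if it is reserved from the declared length (simplified model)."""
    return min(declared_chars * UTF8MB4_MAX_BYTES, MAX_SORT_LENGTH)


def runs_needed(rows: int, declared_chars: int) -> int:
    """How many buffer-sized sorted runs the rows would be split into."""
    keys_per_buffer = SORT_BUFFER_SIZE // estimated_key_bytes(declared_chars)
    return math.ceil(rows / keys_per_buffer)


for n in (50, 500):
    print(n, estimated_key_bytes(n), runs_needed(1_000_000, n))
```

Under these assumptions a varchar(50) key reserves 200 bytes and a varchar(500) key 1024 bytes, so 1,000,000 rows split into 764 versus 3,907 runs, even though the stored values are identical.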
