[UE5/UE4] Detailed tutorial: integrating the iFlytek (科大讯飞) voice wake-up SDK with always-on listening from startup (fixing error code 10102)

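Prerequisites

Windows environment

**UE version: UE 4.27 or earlier**

You need some C++ background. Otherwise, follow my code step by step and write yours against it.

The Offline Voice Recognition plugin is used for audio capture only (as a recording plugin).

This builds on https://github.com/zhangmei126/xunfei

The speech recognition part follows the CSDN article "ue4如何接入科大讯飞的语音识别" (How to integrate iFlytek speech recognition into UE4).

On top of that I added voice wake-up. Voice wake-up and the recognition described in that article are two separate modules.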

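Using the iFlytek plugin requires calling MSPLogin first, i.e. you have to log in (register) before anything else.

The plugin's SpeechInit() method already does this for us. If you write the login call yourself, the voice wake-up part of this article does not depend on the engine version.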
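If you do write the login yourself, each MSPLogin call (declared in the SDK's msp_cmn.h) should be balanced by MSPLogout once you are done. That is what the "release the login when the game ends" step in the Blueprint part does.

Below is a minimal sketch of that pairing as an RAII guard. ScopedLogin is a hypothetical name of mine. The two callables are plain std::function objects so the sketch builds without the SDK. In a real build they would wrap something like MSPLogin(NULL, NULL, "appid = <your appid>, work_dir = .") and MSPLogout().

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Runs a login callable on construction and the matching logout callable on
// destruction, but only if the login returned 0 (MSP_SUCCESS in the SDK).
// Hypothetical helper: the callables stand in for MSPLogin / MSPLogout.
class ScopedLogin {
public:
    ScopedLogin(std::function<int()> loginFn, std::function<int()> logoutFn)
        : logout_(std::move(logoutFn)), result_(loginFn()) {}

    ~ScopedLogin() {
        if (result_ == 0 && logout_) {
            logout_();  // logout runs exactly once, and never after a failed login
        }
    }

    // Copying would cause a double logout, so the guard is non-copyable.
    ScopedLogin(const ScopedLogin&) = delete;
    ScopedLogin& operator=(const ScopedLogin&) = delete;

    bool ok() const { return result_ == 0; }
    int result() const { return result_; }

private:
    std::function<int()> logout_;  // declared first, so it is initialized first
    int result_;                   // return code of the login callable
};
```

Keep the guard alive for as long as the wake-up session lives, for example as a member of a long-lived object. That keeps login and logout strictly paired. This is my own design choice, not how the zhangmei126/xunfei plugin does it.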

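Voice wake-up environment setup

First integrate the iFlytek SDK and the Offline Voice Recognition plugin as described in "ue4如何接入科大讯飞的语音识别". Then make sure both plugins are enabled under Plugins.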

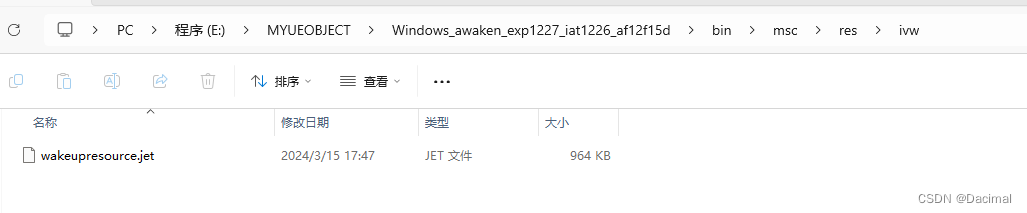

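Make sure the SDK you downloaded is the Windows version and includes the SDK packages shown below.

Make sure the downloaded SDK has been imported correctly and that your AppID is configured correctly.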

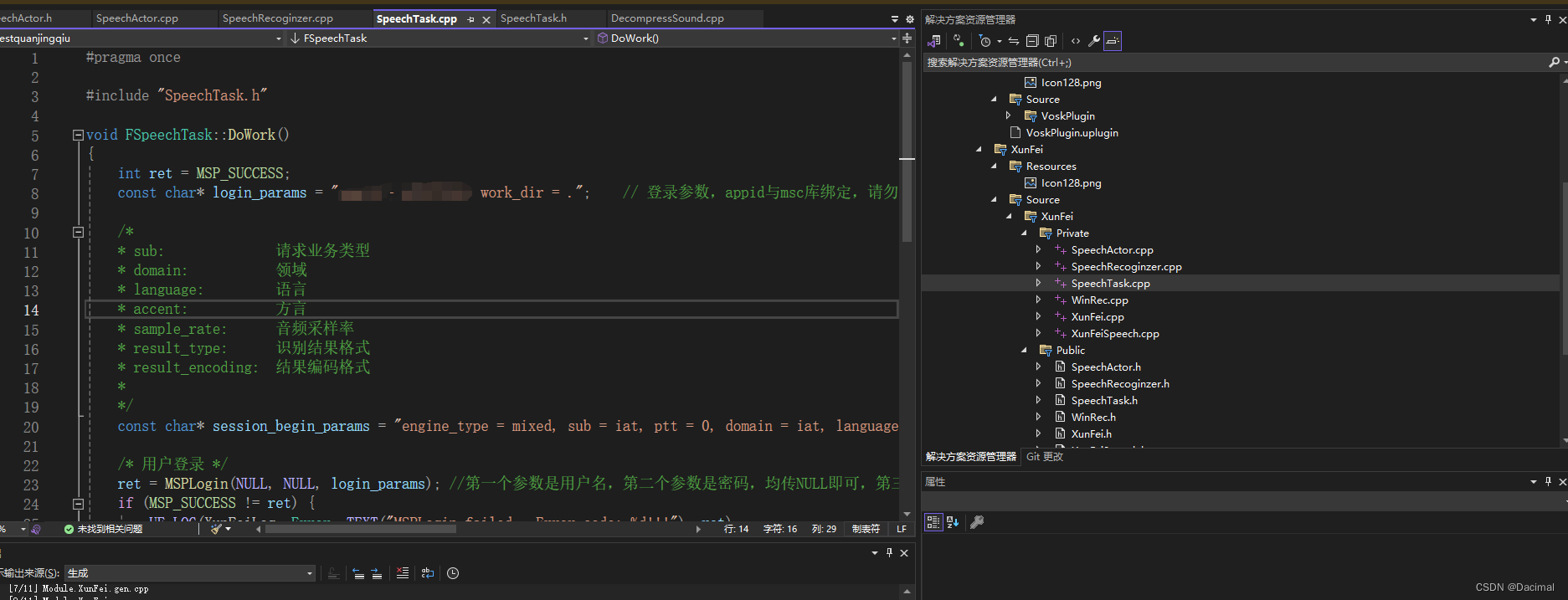

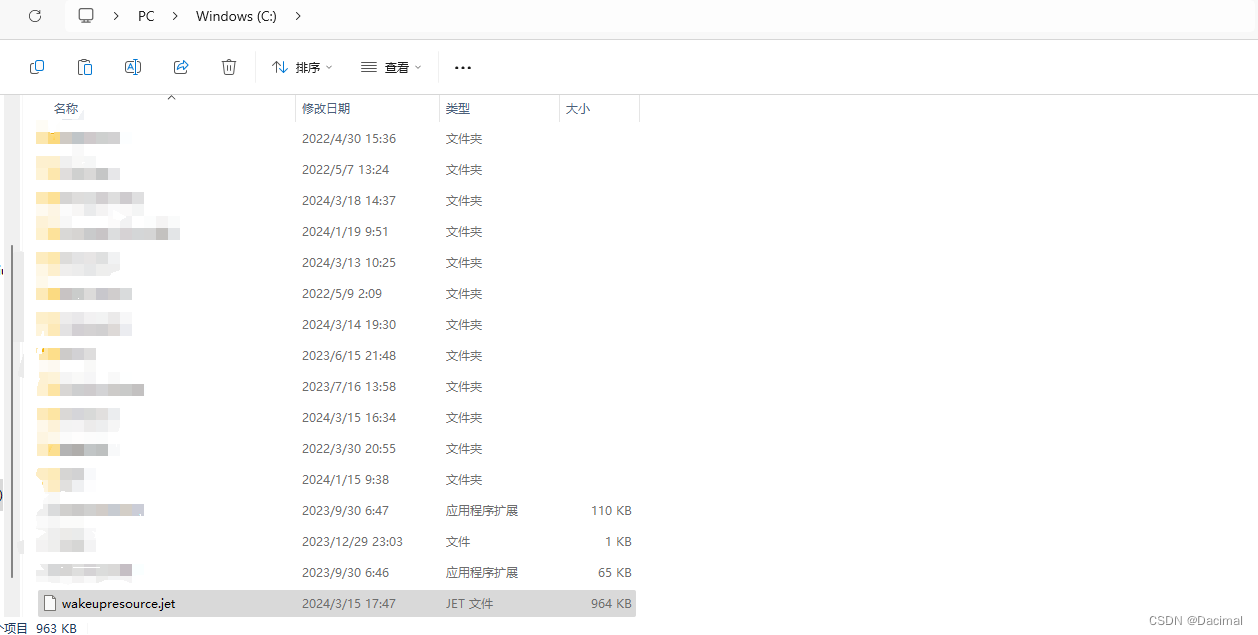

Make sure you copied windows_awaken_exp1227_iat1226_af12f15d\bin\msc\res\ivw\wakeupresource.jet from the downloaded SDK to the root of your C: drive.


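If you came to this article because of error code 10102:

This is an iFlytek pitfall. 10102 is MSP_ERROR_FILE_NOT_FOUND: the SDK could not open wakeupresource.jet. The fix is that the path to wakeupresource.jet must be an absolute path. This is most likely because a relative path is resolved against the process's working directory, which under UE is typically the engine's Binaries folder rather than your project.

In C++ source the backslash must also be escaped as "\\", i.e.:

```cpp
const char* params = "ivw_threshold=0:5, ivw_res_path =fo|c:\\wakeupresource.jet";
```

That's all it takes to fix 10102: simple, but a nasty trap.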
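The "\\" escaping is only needed for a backslash written inside a C++ string literal. Another option is to build the params string at runtime from a path, and check the file before calling QIVWSessionBegin. A missing file then shows up as a clear log line instead of 10102.

Below is a small standard-C++ sketch of that idea. The helper names are hypothetical, and the params format is copied from the string above.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <system_error>

// True only for an absolute path that points to an existing regular file.
// Relative paths are rejected on purpose: they are resolved against the
// process working directory, the likely cause of 10102 under UE.
bool IsUsableResourcePath(const std::filesystem::path& resPath) {
    std::error_code ec;  // non-throwing overload: any error means "not usable"
    return resPath.is_absolute() && std::filesystem::is_regular_file(resPath, ec);
}

// Builds the QIVWSessionBegin params in the same format as the literal above.
// A path assembled at runtime needs no extra escaping; "\\" is only how a
// single backslash is written inside a C++ string literal.
std::string BuildIvwParams(const std::string& absoluteResPath,
                           const std::string& threshold = "0:5") {
    assert(!absoluteResPath.empty());
    return "ivw_threshold=" + threshold + ", ivw_res_path =fo|" + absoluteResPath;
}
```

Inside UE, the absolute path could instead come from something like FPaths::ConvertRelativePathToFull applied to a file inside your project. That would avoid copying the .jet file to C:\, but I have not tested that variant with the SDK.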

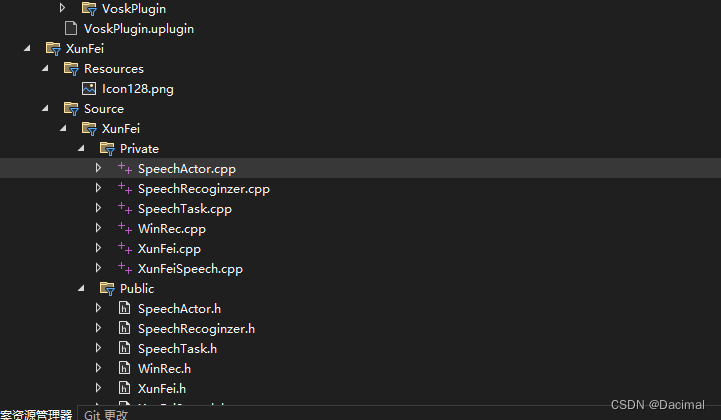

Open Visual Studio

- Open the iFlytek plugin folder

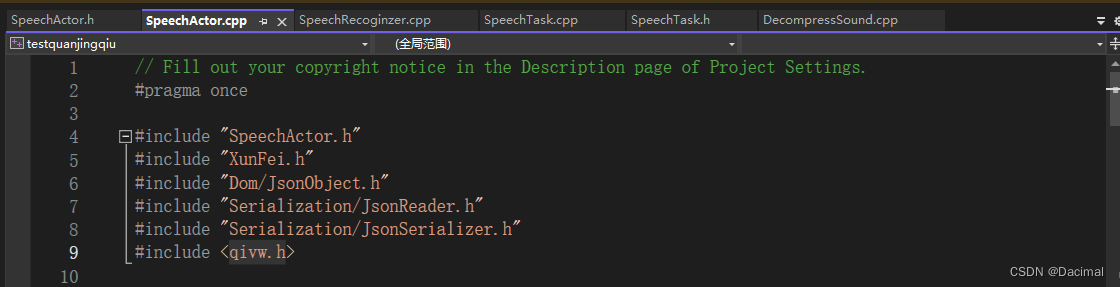

- Include qivw.h in SpeechActor.cpp

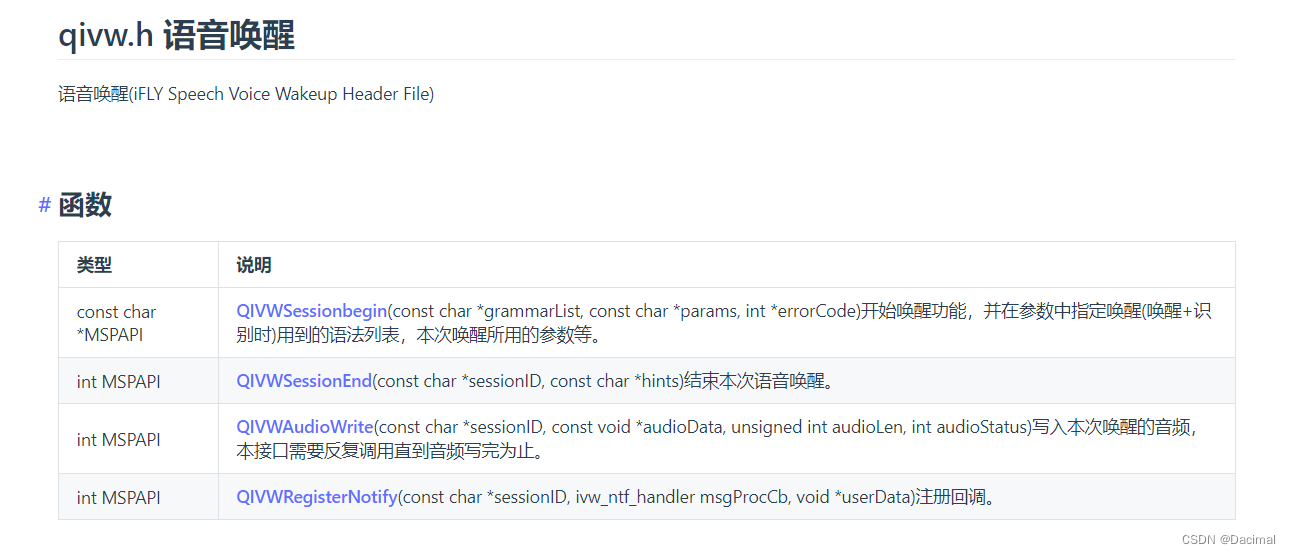

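qivw.h provides the voice wake-up API. The functions used below are QIVWSessionBegin, QIVWRegisterNotify, QIVWAudioWrite and QIVWSessionEnd.

- In SpeechActor.h: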

```cpp
#pragma once

#include "CoreMinimal.h"
#include "GameFramework/Actor.h"
#include "SpeechTask.h"
#include "SpeechActor.generated.h"

// Blueprint-bindable event, broadcast when the wake-up word is detected
DECLARE_DYNAMIC_MULTICAST_DELEGATE(FWakeupBufferDelegate);

UCLASS()
class XUNFEI_API ASpeechActor : public AActor
{
	GENERATED_BODY()

private:
	FString result;

public:
	// Sets default values for this actor's properties
	ASpeechActor();

protected:
	// Called when the game starts or when spawned
	virtual void BeginPlay() override;

public:
	// Called every frame
	virtual void Tick(float DeltaTime) override;

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechInit", Keywords = "Speech Recognition Initialization"))
	void SpeechInit();

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechOpen", Keywords = "Speech Recognition Open"))
	void SpeechOpen();

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechStop", Keywords = "Speech Recognition Stop"))
	void SpeechStop();

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechQuit", Keywords = "Speech Recognition Quit"))
	void SpeechQuit();

	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "SpeechResult", Keywords = "Speech Recognition GetResult"))
	FString SpeechResult();

	// Starts a wake-up session and returns its session ID (empty on failure)
	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "WakeupStart", Keywords = "Voice Wakeup Start"))
	FString WakeupStart();

	// Ends the wake-up session identified by SessionID
	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "WakeupEnd", Keywords = "Voice Wakeup End"))
	bool WakeupEnd(FString SessionID);

	// Feeds recorded PCM audio into the wake-up session
	UFUNCTION(BlueprintCallable, Category = "XunFei", meta = (DisplayName = "WakeupBuffer", Keywords = "Voice Wakeup Buffer"))
	bool WakeupBuffer(TArray<uint8> MyArray, FString SessionID);

	// Callback event: broadcast when the wake-up word is detected
	UPROPERTY(BlueprintAssignable)
	FWakeupBufferDelegate OnWakeupBuffer;
};
```

- In SpeechActor.cpp:

```cpp
// Fill out your copyright notice in the Description page of Project Settings.

#include "SpeechActor.h"
#include "XunFei.h"
#include "Async/Async.h"
#include "HAL/PlatformProcess.h"
#include "Dom/JsonObject.h"
#include "Serialization/JsonReader.h"
#include "Serialization/JsonSerializer.h"
#include <qivw.h>

// Sets default values
ASpeechActor::ASpeechActor() :
	result{}
{
	// This actor does not need Tick(), so ticking is switched off to save performance.
	PrimaryActorTick.bCanEverTick = false;
}

// Called when the game starts or when spawned
void ASpeechActor::BeginPlay()
{
	Super::BeginPlay();
}

// Called every frame (never, since bCanEverTick is false)
void ASpeechActor::Tick(float DeltaTime)
{
	Super::Tick(DeltaTime);
}

void ASpeechActor::SpeechInit()
{
	// Starts the plugin's background task (this is where the iFlytek login happens);
	// FAutoDeleteAsyncTask deletes itself when the task finishes.
	FAutoDeleteAsyncTask<FSpeechTask>* SpeechTask = new FAutoDeleteAsyncTask<FSpeechTask>();
	if (SpeechTask)
	{
		SpeechTask->StartBackgroundTask();
	}
	else
	{
		UE_LOG(XunFeiLog, Error, TEXT("XunFei task object could not be created!"));
		return;
	}
	UE_LOG(XunFeiLog, Log, TEXT("XunFei task started!"));
}

void ASpeechActor::SpeechOpen()
{
	xunfeispeech->SetStart();
}

void ASpeechActor::SpeechStop()
{
	xunfeispeech->SetStop();
}

void ASpeechActor::SpeechQuit()
{
	xunfeispeech->SetQuit();
	// Give the worker thread about 300 ms to shut down
	FPlatformProcess::Sleep(0.3f);
}

FString ASpeechActor::SpeechResult()
{
	result = FString(UTF8_TO_TCHAR(xunfeispeech->get_result()));

	// Strip the empty final segment ("ls":true with an empty word) that appears at the end of the result
	const FString EmptyTail(TEXT("{\"sn\":2,\"ls\":true,\"bg\":0,\"ed\":0,\"ws\":[{\"bg\":0,\"cw\":[{\"sc\":0.00,\"w\":\"\"}]}]}"));
	if (result.Find(EmptyTail) != INDEX_NONE)
	{
		result.RemoveFromEnd(EmptyTail);
	}

	// Concatenate ws[i].cw[0].w of every word into plain text
	TSharedPtr<FJsonObject> JsonObject;
	TSharedRef<TJsonReader<TCHAR>> Reader = TJsonReaderFactory<TCHAR>::Create(result);
	if (FJsonSerializer::Deserialize(Reader, JsonObject) && JsonObject.IsValid())
	{
		result.Reset();
		for (const TSharedPtr<FJsonValue>& Word : JsonObject->GetArrayField(TEXT("ws")))
		{
			const TArray<TSharedPtr<FJsonValue>>& Candidates = Word->AsObject()->GetArrayField(TEXT("cw"));
			if (Candidates.Num() > 0)
			{
				result.Append(Candidates[0]->AsObject()->GetStringField(TEXT("w")));
			}
		}
	}

	UE_LOG(XunFeiLog, Log, TEXT("%s"), *result);
	return result;
}

// Static callback registered with QIVWRegisterNotify; the event is fired from here.
// userData carries the ASpeechActor that started the session.
static int cb_ivw_msg_proc(const char* sessionID, int msg, int param1, int param2, const void* info, void* userData)
{
	if (MSP_IVW_MSG_ERROR == msg) // wake-up error; param1 carries the error code
	{
		UE_LOG(LogTemp, Warning, TEXT("Wake-up error, error code is: %d"), param1);
		return 0;
	}
	if (MSP_IVW_MSG_WAKEUP == msg) // wake-up word detected
	{
		UE_LOG(LogTemp, Warning, TEXT("Wake-up word detected"));
		if (ASpeechActor* Self = static_cast<ASpeechActor*>(userData))
		{
			// The SDK may call this from its own thread: broadcast on the game thread,
			// and only if the actor still exists by then.
			TWeakObjectPtr<ASpeechActor> WeakSelf(Self);
			AsyncTask(ENamedThreads::GameThread, [WeakSelf]()
			{
				if (WeakSelf.IsValid())
				{
					WeakSelf->OnWakeupBuffer.Broadcast();
				}
			});
			return 1;
		}
	}
	return 0;
}

FString ASpeechActor::WakeupStart()
{
	// The resource path must be absolute; note the escaped backslash in the C++ literal
	const char* params = "ivw_threshold=0:5, ivw_res_path =fo|c:\\wakeupresource.jet";

	int ret = MSP_SUCCESS;
	const char* sessionID = QIVWSessionBegin(NULL, params, &ret);
	if (MSP_SUCCESS != ret || sessionID == NULL)
	{
		// 10102 (MSP_ERROR_FILE_NOT_FOUND) means the .jet resource could not be opened
		UE_LOG(LogTemp, Warning, TEXT("QIVWSessionBegin failed, error code is: %d"), ret);
		return FString();
	}

	// Pass `this` as userData so the callback can reach OnWakeupBuffer
	const int err_code = QIVWRegisterNotify(sessionID, cb_ivw_msg_proc, this);
	if (MSP_SUCCESS != err_code)
	{
		UE_LOG(LogTemp, Warning, TEXT("QIVWRegisterNotify failed, error code is: %d"), err_code);
		QIVWSessionEnd(sessionID, "register notify failed");
		return FString();
	}

	UE_LOG(LogTemp, Log, TEXT("QIVWSessionBegin is working"));
	return FString(sessionID);
}

bool ASpeechActor::WakeupEnd(FString SessionID)
{
	const int ret = QIVWSessionEnd(TCHAR_TO_ANSI(*SessionID), "normal end");
	if (MSP_SUCCESS != ret)
	{
		UE_LOG(LogTemp, Warning, TEXT("QIVWSessionEnd failed, error code is: %d"), ret);
		return false;
	}
	UE_LOG(LogTemp, Log, TEXT("QIVWSessionEnd: session ended"));
	return true;
}

bool ASpeechActor::WakeupBuffer(TArray<uint8> BitArray, FString SessionID)
{
	if (BitArray.Num() == 0)
	{
		return false;
	}

	// Continuous listening: every chunk continues the stream.
	// MSP_AUDIO_SAMPLE_CONTINUE is 2; MSP_AUDIO_SAMPLE_LAST (4) would end the audio.
	const int audio_status = MSP_AUDIO_SAMPLE_CONTINUE;
	const int ret = QIVWAudioWrite(TCHAR_TO_ANSI(*SessionID), BitArray.GetData(),
		static_cast<unsigned int>(BitArray.Num()), audio_status);
	if (MSP_SUCCESS != ret)
	{
		UE_LOG(LogTemp, Warning, TEXT("QIVWAudioWrite failed, error code is: %d"), ret);
		return false;
	}
	return true;
}
```
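A note on the audio_status argument of QIVWAudioWrite. The SDK's msp_types.h defines MSP_AUDIO_SAMPLE_FIRST = 0x01, MSP_AUDIO_SAMPLE_CONTINUE = 0x02 and MSP_AUDIO_SAMPLE_LAST = 0x04. So the status WakeupBuffer passes (2) means "continue", which suits an endless, always-listening stream; LAST would tell the engine the audio is over.

The article's code sends CONTINUE for every chunk, which evidently works for continuous listening. The sketch below only shows where FIRST and LAST would fit for a finite recording. StatusForChunk is a hypothetical helper, and treating a one-chunk buffer as FIRST is my assumption.

```cpp
#include <cassert>
#include <cstddef>

// Audio status flags with the values of MSP_AUDIO_SAMPLE_* in msp_types.h.
enum AudioSampleStatus : int {
    kAudioSampleFirst = 0x01,     // MSP_AUDIO_SAMPLE_FIRST
    kAudioSampleContinue = 0x02,  // MSP_AUDIO_SAMPLE_CONTINUE
    kAudioSampleLast = 0x04,      // MSP_AUDIO_SAMPLE_LAST
};

// Status to pass to QIVWAudioWrite for chunk `index`.
// totalChunks == 0 means an open-ended stream (always listening): after the
// first chunk everything is CONTINUE and LAST is never sent.
int StatusForChunk(std::size_t index, std::size_t totalChunks) {
    assert(totalChunks == 0 || index < totalChunks);
    if (index == 0) {
        return kAudioSampleFirst;  // assumption: FIRST wins even for a one-chunk buffer
    }
    if (totalChunks != 0 && index + 1 == totalChunks) {
        return kAudioSampleLast;   // end of a finite recording
    }
    return kAudioSampleContinue;
}
```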

That completes the preparation.

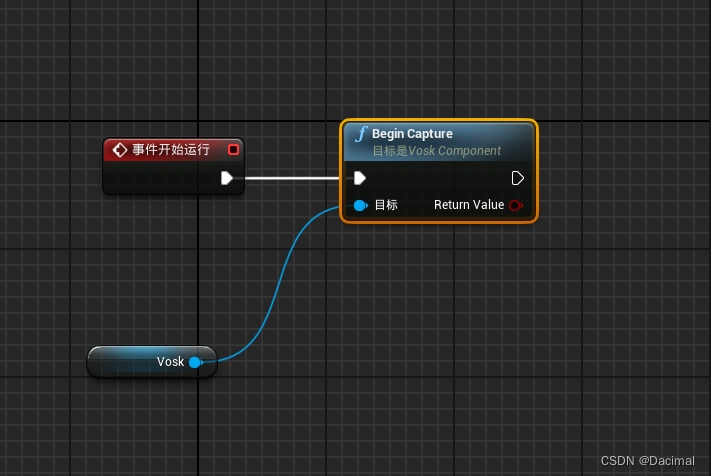

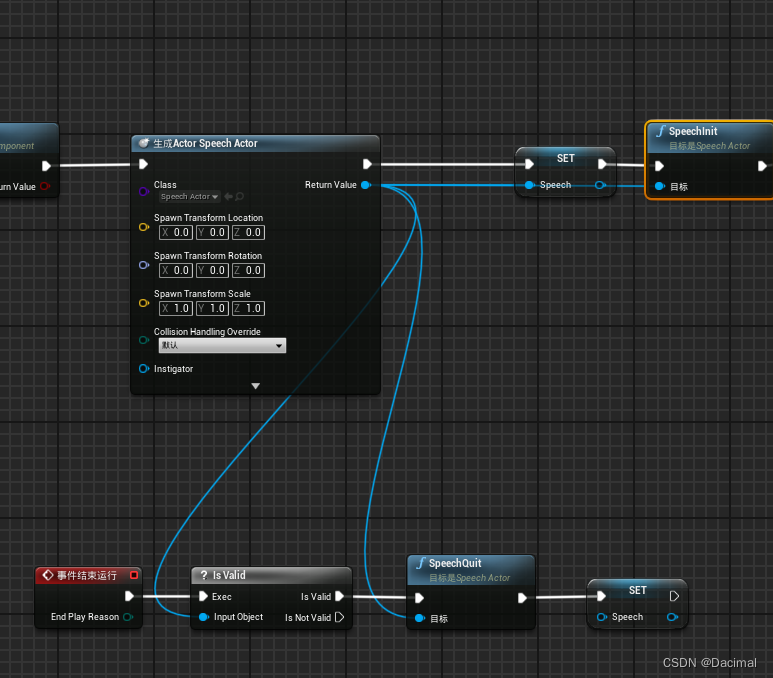

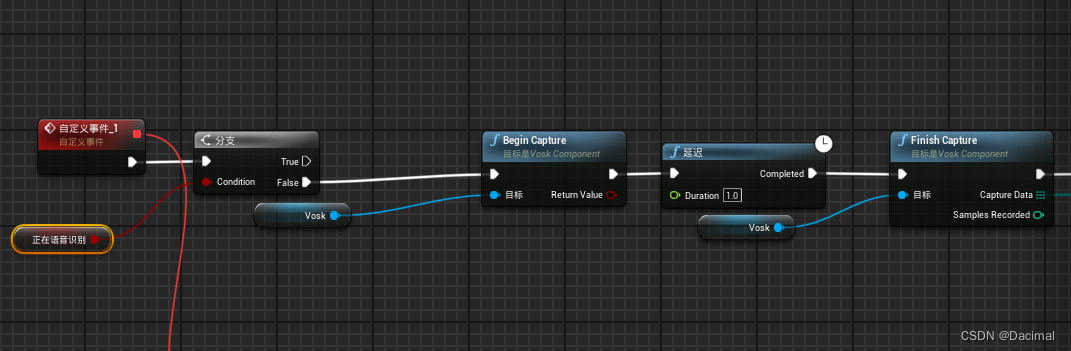

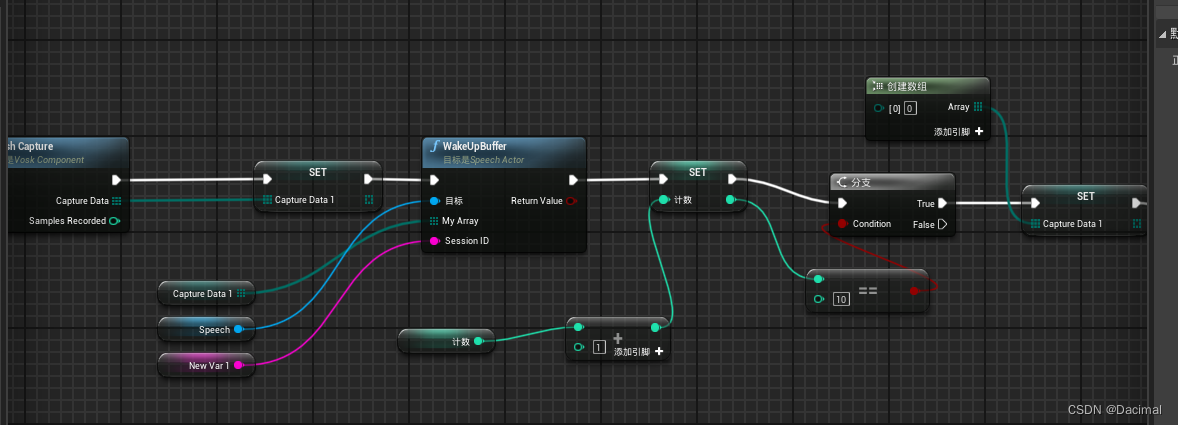
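This is how cb_ivw_msg_proc reaches the actor. QIVWRegisterNotify takes a plain C function pointer plus a void* userData, and the SDK hands that pointer back on every notification. Passing this and casting it back is the usual way to get from a C callback to a C++ object.

The standalone sketch below reproduces that round trip with a fake engine in place of the SDK. FakeWakeEngine and Listener are hypothetical. Only the handler signature and the two message values (MSP_IVW_MSG_WAKEUP = 1, MSP_IVW_MSG_ERROR = 2) are taken from the SDK headers.

```cpp
#include <cassert>
#include <string>

// Same shape as the SDK's ivw_ntf_handler typedef in qivw.h.
typedef int (*NotifyHandler)(const char* sessionID, int msg, int param1,
                             int param2, const void* info, void* userData);

constexpr int kMsgWakeup = 1;  // MSP_IVW_MSG_WAKEUP
constexpr int kMsgError = 2;   // MSP_IVW_MSG_ERROR

// Hypothetical stand-in for the SDK: stores the handler and userData, fires later.
struct FakeWakeEngine {
    NotifyHandler handler = nullptr;
    void* userData = nullptr;

    int Register(NotifyHandler h, void* data) {
        handler = h;
        userData = data;
        return 0;
    }

    void Fire(int msg, int param1, const char* info) {
        if (handler) handler("session-1", msg, param1, 0, info, userData);
    }
};

// Plays the role of ASpeechActor: owns the state the callback must reach.
class Listener {
public:
    int wakeups = 0;
    int lastError = 0;
    std::string lastInfo;

    // Static member function, so it converts to a plain C function pointer;
    // `this` is recovered from userData.
    static int OnNotify(const char*, int msg, int param1, int, const void* info,
                        void* userData) {
        auto* self = static_cast<Listener*>(userData);
        if (self == nullptr) return 0;
        if (msg == kMsgWakeup) {
            ++self->wakeups;
            self->lastInfo = info ? static_cast<const char*>(info) : "";
        } else if (msg == kMsgError) {
            self->lastError = param1;  // error code arrives in param1
        }
        return 0;
    }
};
```

In UE, if the SDK calls the handler from its own thread, the handler should not touch Blueprint state directly. Hopping to the game thread first, for example with AsyncTask(ENamedThreads::GameThread, ...), is the safer pattern. A TWeakObjectPtr guards against the actor being destroyed in between.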

Open the Blueprint

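- With the Offline Voice Recognition plugin enabled, add the Vosk plugin.

- In this article's use case the actor has to keep listening from the moment it initializes. So recording starts as soon as the game begins, because voice wake-up can only listen to recorded audio.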

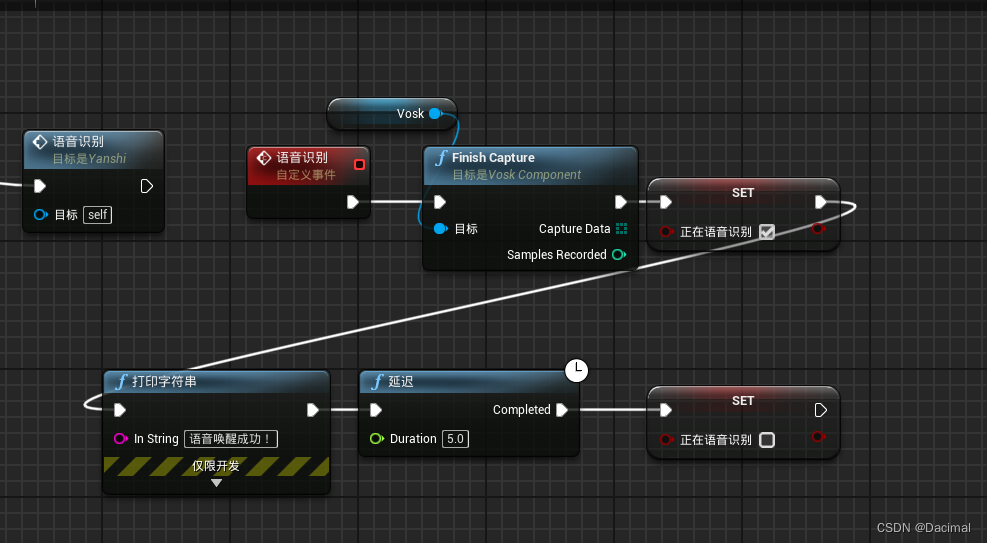

- Next, register (log in to) iFlytek, and release that login when the game ends.

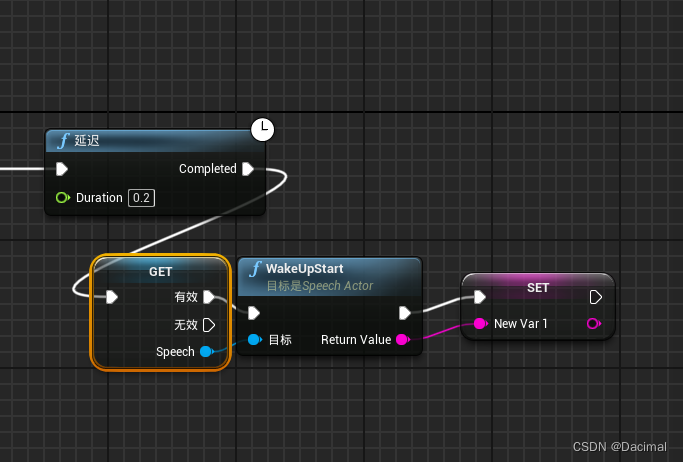

- Two seconds after the iFlytek login, register our voice wake-up (WakeupStart).

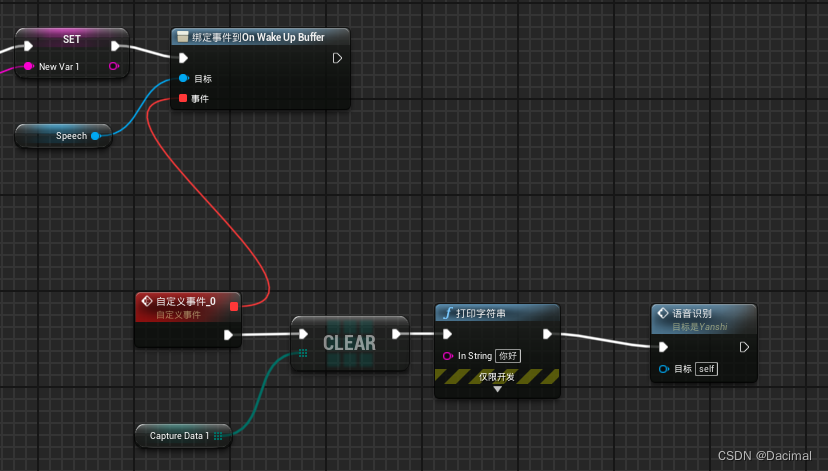

- Bind a wake-up event (to OnWakeupBuffer).

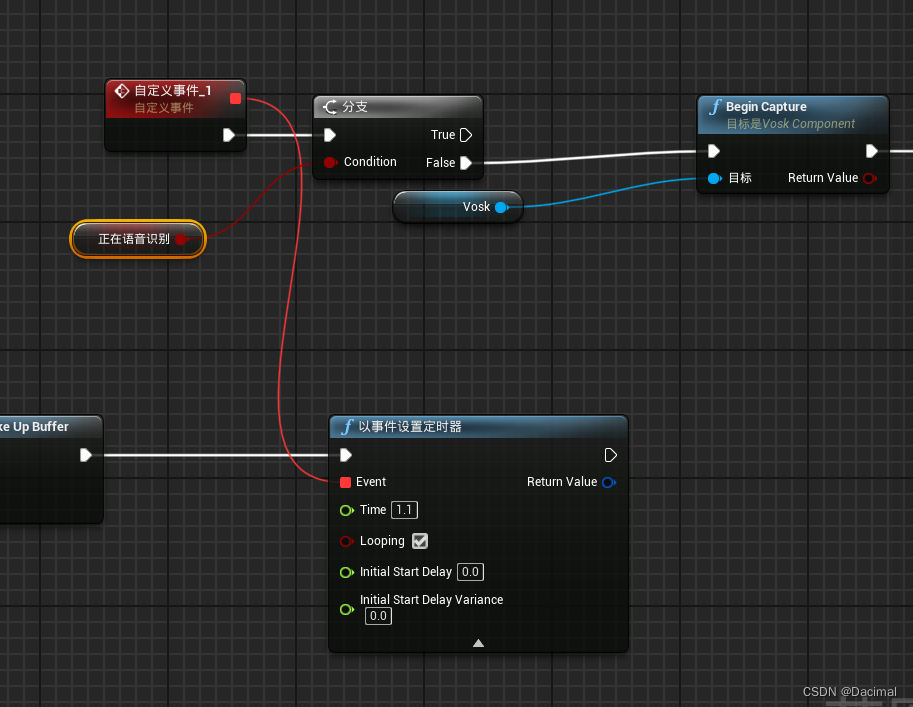

- Then set up a 1.1-second timer.

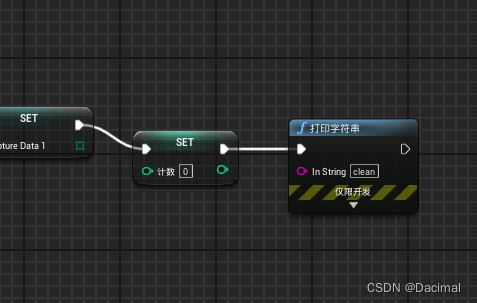

- Inside the timer (the "is recognizing speech" flag defaults to false).

- The custom voice wake-up event (listening resumes five seconds after a wake-up).
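As I read the Blueprint screenshots, the loop works like this. Every 1.1 s the timer feeds the recorded audio to WakeupBuffer, unless a wake-up happened recently. The wake-up event pauses feeding for 5 s.

Below is a standard-C++ sketch of that gate, plus the buffer size of one 1.1 s window. WakeGate and PcmBytesForMs are hypothetical names. The 16 kHz, 16-bit mono PCM format is an assumption: as far as I know it is what the iFlytek wake-up samples use, but check it against your recording plugin's settings.

```cpp
#include <cassert>
#include <cstdint>

// PCM bytes in a window of `ms` milliseconds.
// Defaults assume 16 kHz, 16-bit (2 bytes per sample), mono audio.
constexpr std::int64_t PcmBytesForMs(std::int64_t ms, std::int64_t sampleRate = 16000,
                                     std::int64_t bytesPerSample = 2,
                                     std::int64_t channels = 1) {
    return ms * sampleRate * bytesPerSample * channels / 1000;
}

// Decides, on each timer tick, whether audio should be fed to the wake-up
// session. After a wake-up, feeding pauses for `cooldownSec` seconds
// (5 s in the article) and then resumes automatically.
class WakeGate {
public:
    explicit WakeGate(double cooldownSec) : cooldown_(cooldownSec) {
        assert(cooldownSec >= 0.0);
    }

    // Timer tick: true if this tick should call WakeupBuffer.
    bool ShouldFeed(double nowSec) const {
        return !woken_ || nowSec - wokenAtSec_ >= cooldown_;
    }

    // Wake-up event handler: start the cooldown.
    void OnWakeup(double nowSec) {
        woken_ = true;
        wokenAtSec_ = nowSec;
    }

private:
    double cooldown_;
    bool woken_ = false;  // plays the role of the "is recognizing" flag (default false)
    double wokenAtSec_ = 0.0;
};
```

At that format, one 1.1 s window is 1100 ms × 16,000 samples/s × 2 bytes ÷ 1000 = 35,200 bytes.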

The Blueprint file has been attached to this post.

Author: dacimal

Custom development inquiries: 19815779273 (no small talk)
