
1. Introduction

https://pypi.org/project/speechrecognition/

https://github.com/uberi/speech_recognition

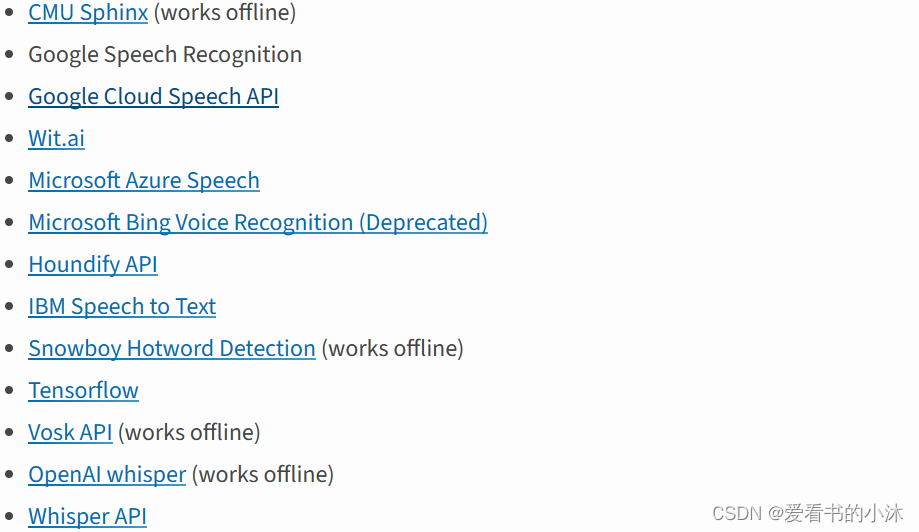

SpeechRecognition supports the following speech recognition engines/APIs:

recognize_bing(): Microsoft Bing Speech

recognize_google(): Google Web Speech API

recognize_google_cloud(): Google Cloud Speech - requires installation of the google-cloud-speech package

recognize_houndify(): Houndify by SoundHound

recognize_ibm(): IBM Speech to Text

recognize_sphinx(): CMU Sphinx - requires installing PocketSphinx

recognize_wit(): Wit.ai

Of these, only recognize_sphinx() works offline, using the CMU Sphinx engine; the other six require an internet connection. SpeechRecognition also ships with a default API key for the Google Web Speech API, so recognize_google() can be used right away. The other APIs require authentication with an API key or a username/password combination.
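The distinction above can be summarized in a small lookup table. The sketch below is illustrative only: the ENGINES table and usable_engines() are hypothetical helpers that encode what this section states about the seven methods, and nothing is queried from the library itself.

```python
# Properties of the seven recognizer methods as described in this section.
# This table is written by hand; it is not read from SpeechRecognition.
ENGINES = {
    "recognize_bing": {"offline": False, "needs_credentials": True},
    "recognize_google": {"offline": False, "needs_credentials": False},  # default key bundled
    "recognize_google_cloud": {"offline": False, "needs_credentials": True},
    "recognize_houndify": {"offline": False, "needs_credentials": True},
    "recognize_ibm": {"offline": False, "needs_credentials": True},
    "recognize_sphinx": {"offline": True, "needs_credentials": False},
    "recognize_wit": {"offline": False, "needs_credentials": True},
}


def usable_engines(has_network, has_credentials):
    """Return the method names that can run under the given conditions."""
    result = []
    for name, info in ENGINES.items():
        if not info["offline"] and not has_network:
            continue  # online engine, but no connection
        if info["needs_credentials"] and not has_credentials:
            continue  # engine needs a key or login that we do not have
        result.append(name)
    return result


print(usable_engines(has_network=False, has_credentials=False))
print(usable_engines(has_network=True, has_credentials=False))
```

Without a network only recognize_sphinx remains; with a network but no credentials, recognize_google becomes available as well.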

2. Installation and Testing

- Python 3.8+ (required)
- PyAudio 0.2.11+ (required only if you need to use microphone input, Microphone)
- PocketSphinx (required only if you need to use the Sphinx recognizer, recognizer_instance.recognize_sphinx)
- Google API Client Library for Python (required only if you need to use the Google Cloud Speech API, recognizer_instance.recognize_google_cloud)
- FLAC encoder (required only if the system is not x86-based Windows/Linux/OS X)
- Vosk (required only if you need to use Vosk API speech recognition, recognizer_instance.recognize_vosk)
- Whisper (required only if you need to use Whisper, recognizer_instance.recognize_whisper)
- OpenAI (required only if you need to use Whisper API speech recognition, recognizer_instance.recognize_whisper_api)

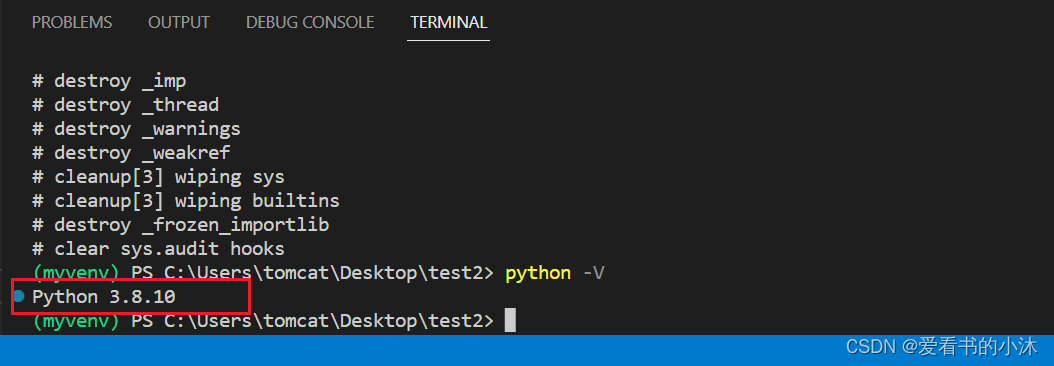
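A quick way to see which of the requirements above are already present is to probe for their modules. This is a minimal sketch: the import names in OPTIONAL_MODULES are assumptions (the usual module name of each package), and installed_packages() is a hypothetical helper.

```python
import importlib.util

# Package label -> module name to probe. The module names are assumptions.
OPTIONAL_MODULES = {
    "SpeechRecognition": "speech_recognition",
    "PyAudio": "pyaudio",
    "PocketSphinx": "pocketsphinx",
    "Vosk": "vosk",
    "Whisper": "whisper",
    "OpenAI": "openai",
}


def installed_packages(modules=OPTIONAL_MODULES):
    """Map each label to True if its module can be located, without importing it."""
    return {
        label: importlib.util.find_spec(module) is not None
        for label, module in modules.items()
    }


for label, found in installed_packages().items():
    print(f"{label:18} {'installed' if found else 'missing'}")
```

The FLAC encoder is a command-line tool rather than a Python module, so it is not covered by this check.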

2.1 Installing Python

https://www.python.org/downloads/

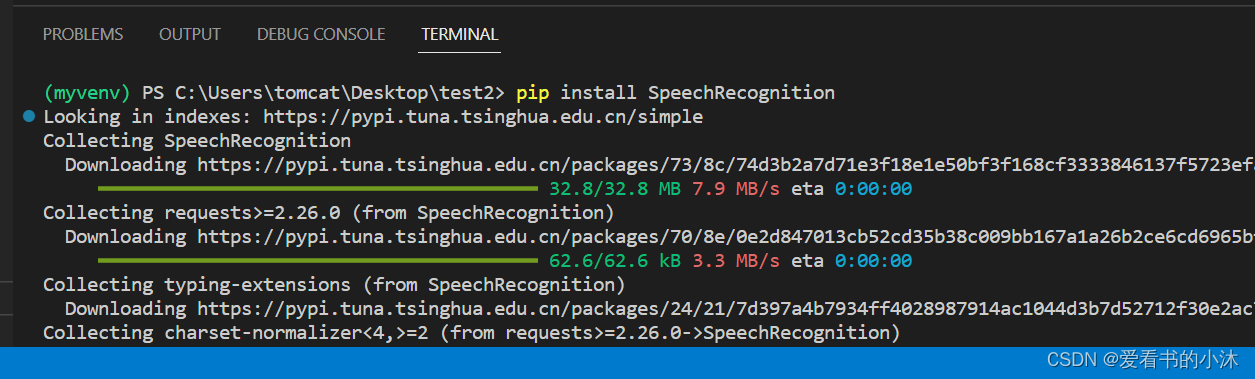

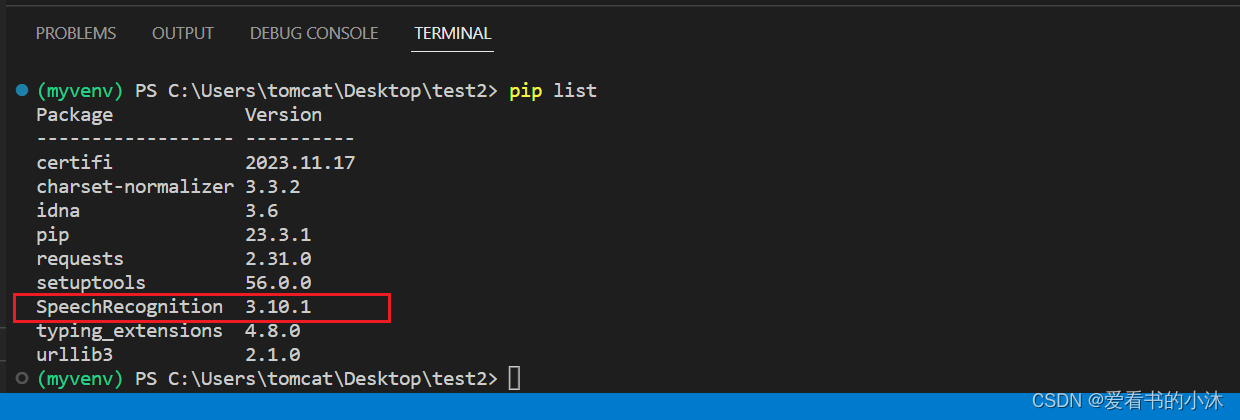

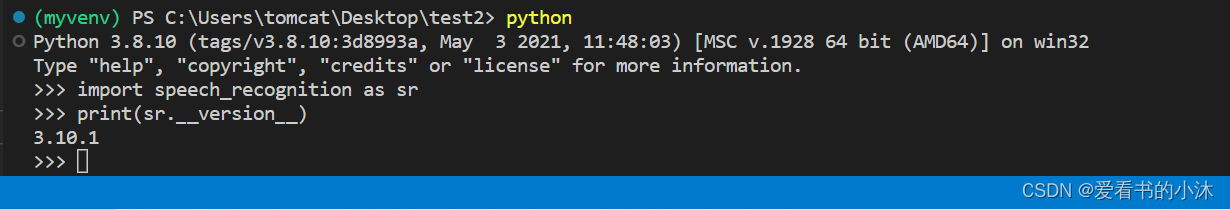

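2.2 Installing SpeechRecognition

Install the SpeechRecognition library (the commented lines show how to upgrade pip and how to install a package from a specific mirror):

#python -m pip install --upgrade pip
#pip install PACKAGE_NAME -i https://pypi.tuna.tsinghua.edu.cn/simple/
#pip install PACKAGE_NAME -i http://pypi.douban.com/simple/ --trusted-host pypi.douban.com
#pip install PACKAGE_NAME -i https://pypi.org/simple
pip install SpeechRecognition

Verify the installation by printing the version:

import speech_recognition as sr
print(sr.__version__)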

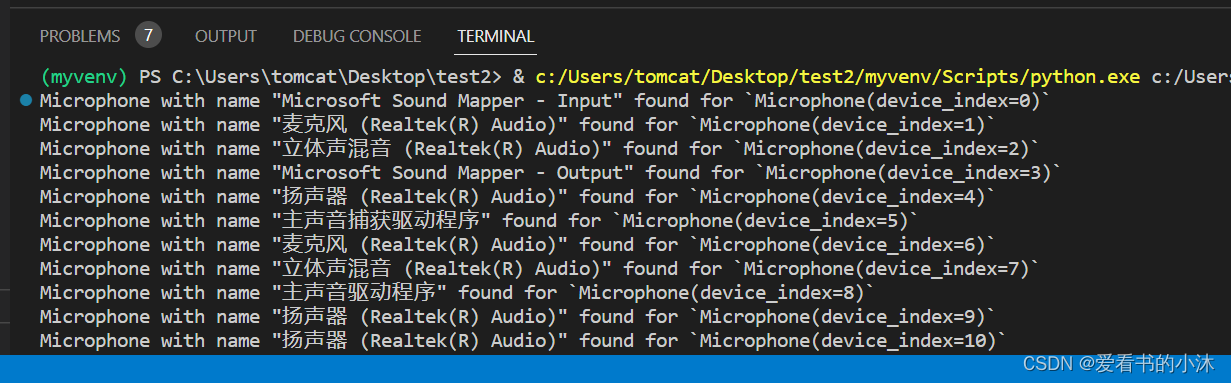

To list the hardware-specific device index of each microphone:

import speech_recognition as sr

for index, name in enumerate(sr.Microphone.list_microphone_names()):
    print("Microphone with name \"{1}\" found for `Microphone(device_index={0})`".format(index, name))

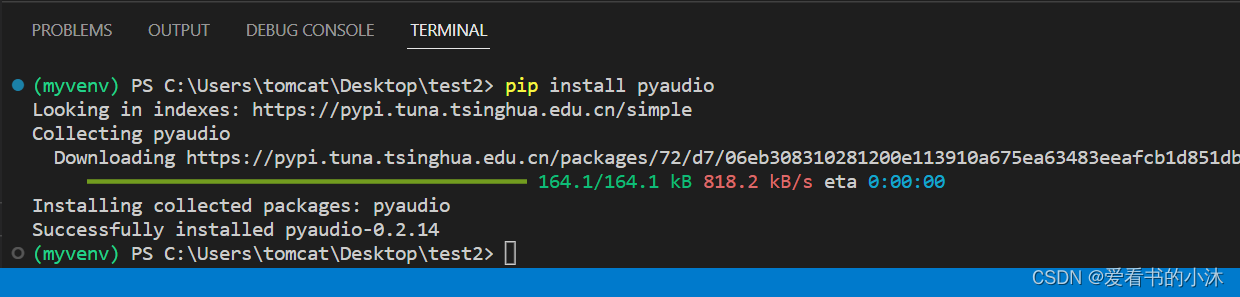
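Once the names are listed, the index can be looked up by name instead of being hard-coded. A minimal sketch, assuming a case-insensitive substring match is good enough; find_device_index() and open_microphone() are hypothetical helpers, and open_microphone() needs SpeechRecognition and PyAudio installed.

```python
def find_device_index(names, keyword):
    """Return the index of the first device whose name contains keyword, else None."""
    keyword = keyword.lower()
    for index, name in enumerate(names):
        if name and keyword in name.lower():  # skip entries without a name
            return index
    return None


def open_microphone(keyword):
    """Build a Microphone for the first matching device (default device if none match)."""
    import speech_recognition as sr  # imported lazily: requires PyAudio as well

    index = find_device_index(sr.Microphone.list_microphone_names(), keyword)
    return sr.Microphone(device_index=index)


# Example with a made-up device list:
print(find_device_index(["HDMI Output", "USB Microphone"], "usb"))
```

Passing device_index=None makes Microphone fall back to the system default input device.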

2.3 Installing PyAudio

pip install pyaudio

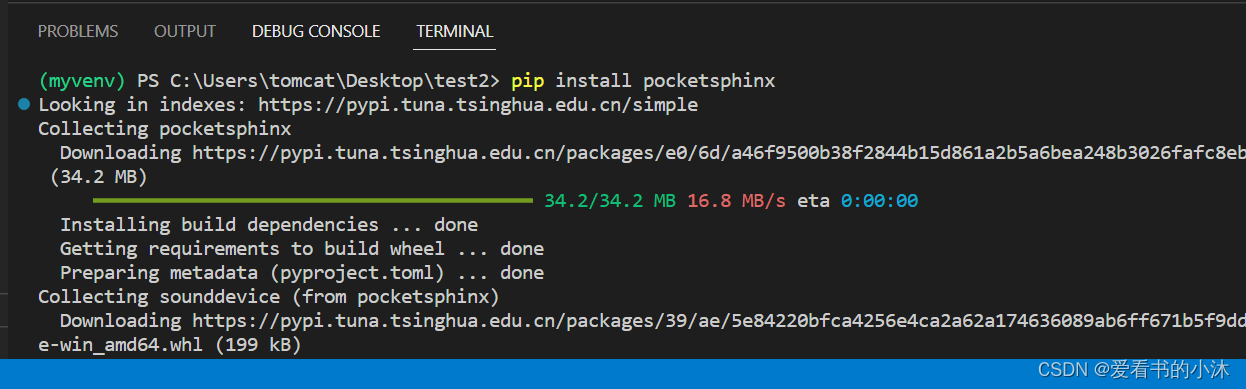

2.4 Installing PocketSphinx (offline)

pip install pocketsphinx

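Alternatively, download a prebuilt binary package from https://www.lfd.uci.edu/~gohlke/pythonlibs/#pocketsphinx and install it.

This section uses the recognize_sphinx() recognizer, which works offline but requires the pocketsphinx library.

Chinese recognition needs two more things.

1. An audio file (SpeechRecognition is strict about the file format).

SpeechRecognition supports the following audio file types:

WAV: must be in PCM/LPCM format

AIFF

AIFF-C

FLAC: must be native FLAC format; OGG-FLAC is not supported

2. A Chinese acoustic model, language model, and dictionary file.

PocketSphinx needs the Chinese language model and acoustic model installed. They can be downloaded here: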

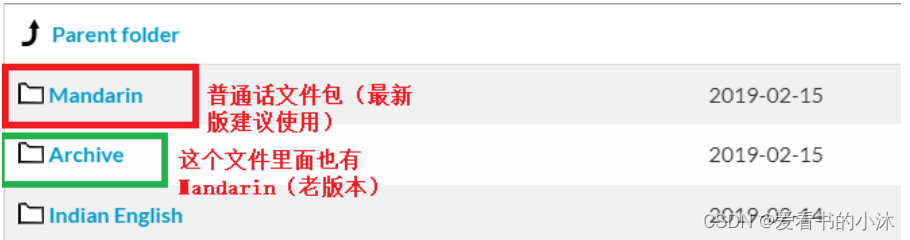

https://sourceforge.net/projects/cmusphinx/files/acoustic%20and%20language%20models/mandarin/

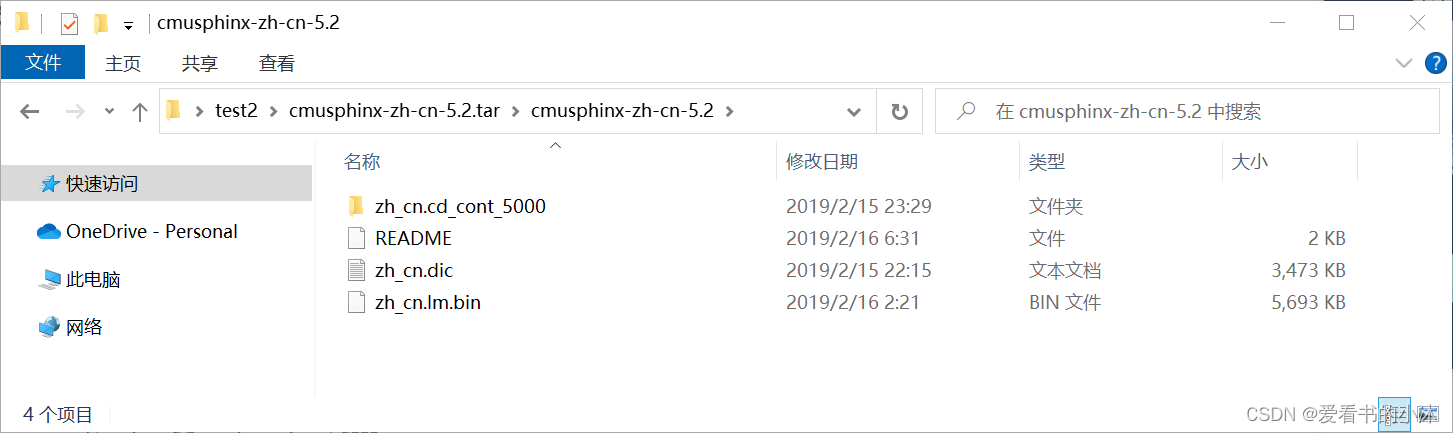

Download cmusphinx-zh-cn-5.2.tar.gz and extract it:

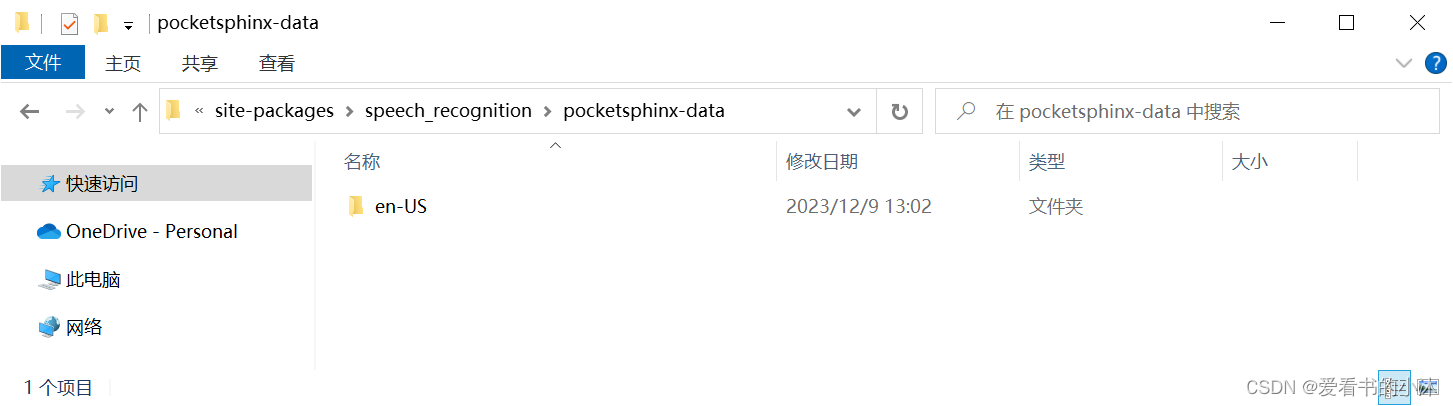

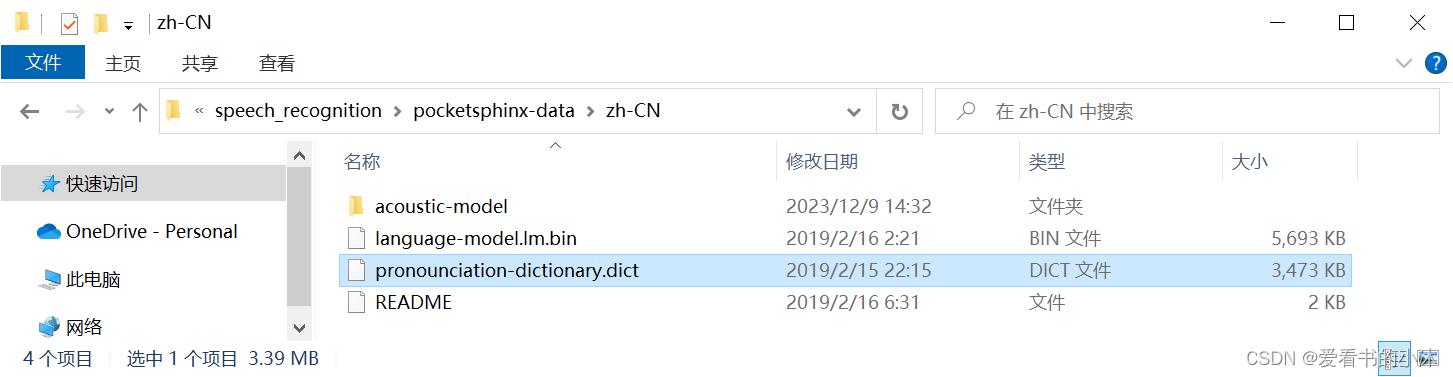

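In the Python installation directory, locate Lib\site-packages\speech_recognition:

Open the pocketsphinx-data folder and create a new folder named zh-CN (the folder name must match the language argument passed to recognize_sphinx()):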

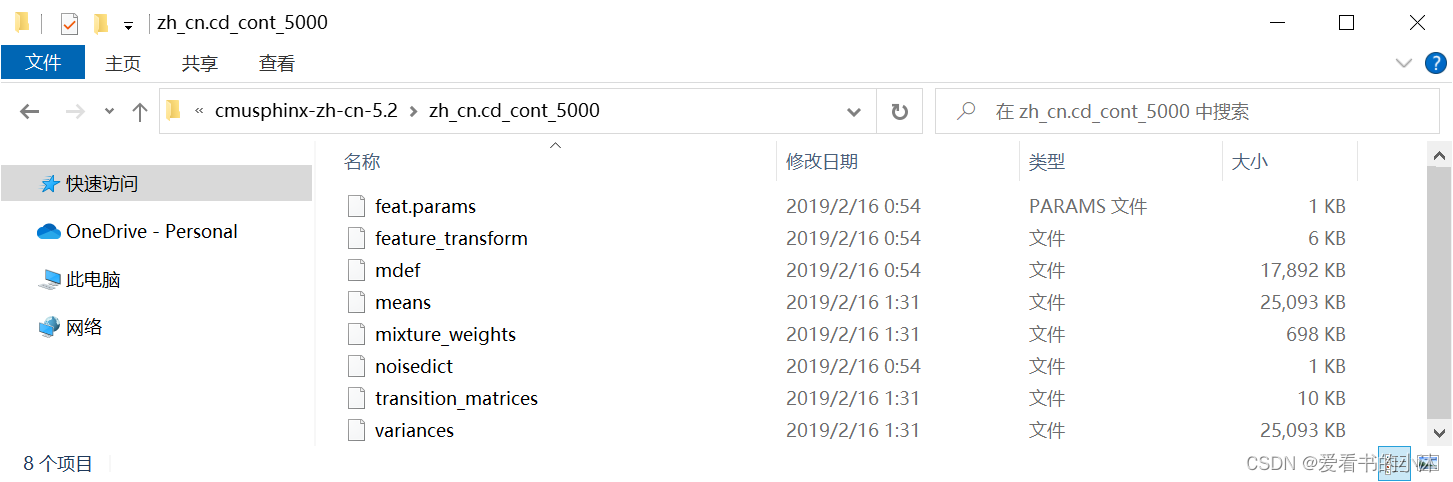

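Copy the extracted files into this folder and rename them: the zh_cn.cd_cont_5000 folder becomes acoustic-model, zh_cn.lm.bin becomes language-model.lm.bin, and zh_cn.dic becomes pronounciation-dictionary.dict. Note the spelling "pronounciation": this is the file name the library looks for.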

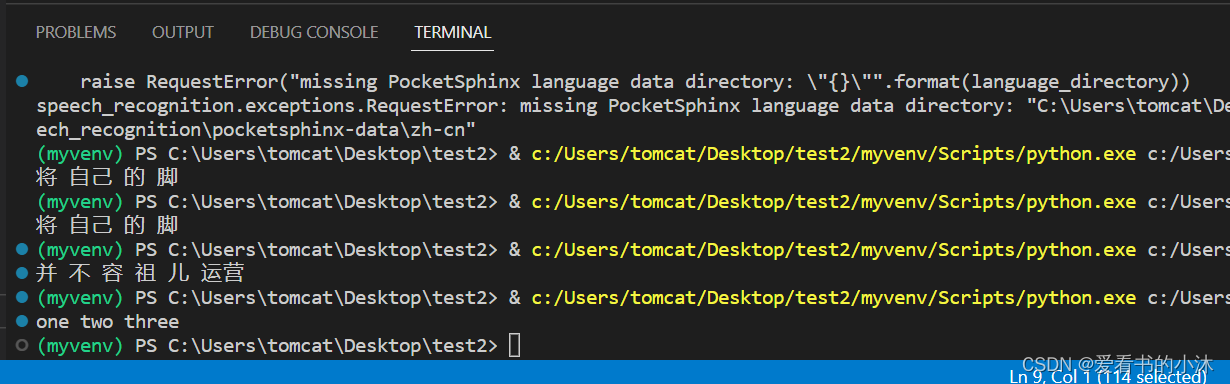
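The copy-and-rename step can also be scripted. This is a minimal sketch under stated assumptions: install_zh_model() is a hypothetical helper, the source names are the ones from the extracted archive mentioned above, and the caller supplies both the extracted folder and the pocketsphinx-data folder.

```python
import shutil
from pathlib import Path

# Extracted name -> name expected inside the language folder (as described above).
RENAMES = {
    "zh_cn.cd_cont_5000": "acoustic-model",
    "zh_cn.lm.bin": "language-model.lm.bin",
    "zh_cn.dic": "pronounciation-dictionary.dict",
}


def install_zh_model(extracted_dir, data_dir, language="zh-CN"):
    """Copy the extracted model files into data_dir/language under their new names."""
    target = Path(data_dir) / language
    target.mkdir(parents=True, exist_ok=True)
    for src_name, dst_name in RENAMES.items():
        src = Path(extracted_dir) / src_name
        dst = target / dst_name
        if src.is_dir():
            shutil.copytree(src, dst, dirs_exist_ok=True)  # acoustic model folder
        else:
            shutil.copy2(src, dst)  # language model and dictionary files
    return target
```

For a typical installation, data_dir would be the pocketsphinx-data folder inside the speech_recognition package, for example Path(speech_recognition.__file__).parent / "pocketsphinx-data". The language value must be the same string later passed to recognize_sphinx().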

Write a test script:

import speech_recognition as sr

r = sr.Recognizer()  # create the recognizer
test = sr.AudioFile("chinese.flac")  # open the audio file

with test as source:
    # r.adjust_for_ambient_noise(source)
    audio = r.record(source)  # use record() to read the data from the file

print(type(audio))

# c = r.recognize_sphinx(audio, language='zh-CN')  # recognize with the Chinese model
c = r.recognize_sphinx(audio, language='en-US')  # recognize with the English model
print(c)

Transcribing an audio file with error handling:

import speech_recognition as sr

# obtain path to "english.wav" in the same folder as this script
from os import path
audio_file = path.join(path.dirname(path.realpath(__file__)), "english.wav")
# audio_file = path.join(path.dirname(path.realpath(__file__)), "french.aiff")
# audio_file = path.join(path.dirname(path.realpath(__file__)), "chinese.flac")

# use the audio file as the audio source
r = sr.Recognizer()
with sr.AudioFile(audio_file) as source:
    audio = r.record(source)  # read the entire audio file

# recognize speech using Sphinx
try:
    print("Sphinx thinks you said " + r.recognize_sphinx(audio))
except sr.UnknownValueError:
    print("Sphinx could not understand audio")
except sr.RequestError as e:
    print("Sphinx error; {0}".format(e))
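Because AudioFile only accepts PCM WAV, AIFF/AIFF-C, and native FLAC, it can help to check a WAV file before handing it to the recognizer. The sketch below uses the standard-library wave module as a stand-in check; is_pcm_wav() is a hypothetical helper, and the library's own validation may differ in detail.

```python
import wave


def is_pcm_wav(path):
    """Return True if the standard wave module can parse the file as PCM WAV."""
    try:
        with wave.open(str(path), "rb"):
            return True
    except (wave.Error, EOFError):
        # wave.Error: not a RIFF/WAVE file or an unsupported (non-PCM) encoding
        # EOFError: file is empty or truncated
        return False
```

A file that fails this check, such as an MP3 renamed to .wav, would need to be converted to PCM WAV or FLAC with an external tool first.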

Recognizing Chinese from the microphone:

import speech_recognition as sr

recognizer = sr.Recognizer()

with sr.Microphone() as source:
    # recognizer.adjust_for_ambient_noise(source)
    audio = recognizer.listen(source)

c = recognizer.recognize_sphinx(audio, language='zh-CN')  # recognize with the Chinese model
# c = recognizer.recognize_sphinx(audio, language='en-US')  # recognize with the English model
print(c)

Recognizing from the microphone with error handling:

import speech_recognition as sr

# obtain audio from the microphone
r = sr.Recognizer()
with sr.Microphone() as source:
    print("Say something!")
    audio = r.listen(source)

# recognize speech using Sphinx
try:
    print("Sphinx thinks you said " + r.recognize_sphinx(audio))
except sr.UnknownValueError:
    print("Sphinx could not understand audio")
except sr.RequestError as e:
    print("Sphinx error; {0}".format(e))

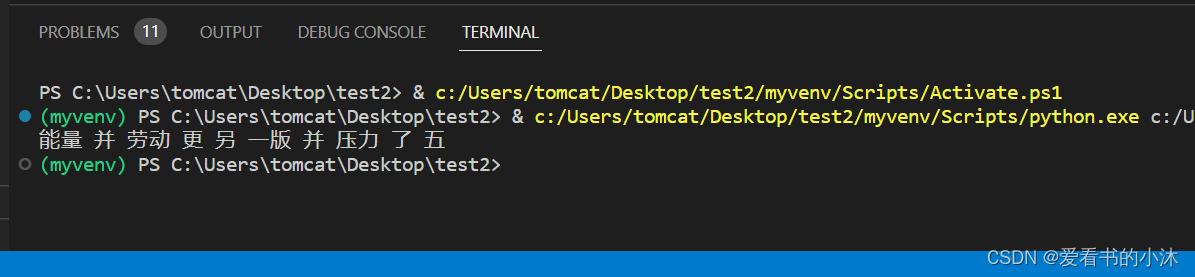

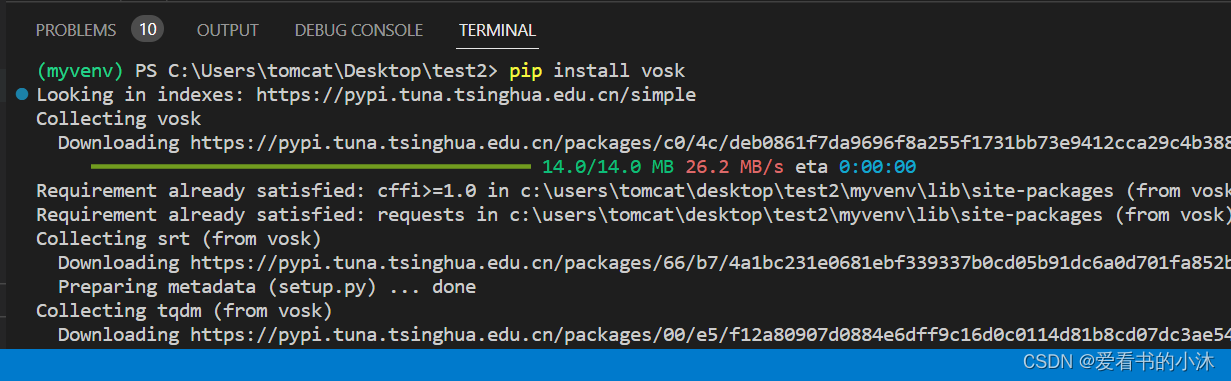

2.5 Installing Vosk (offline)

python3 -m pip install vosk

You must also install a Vosk model:

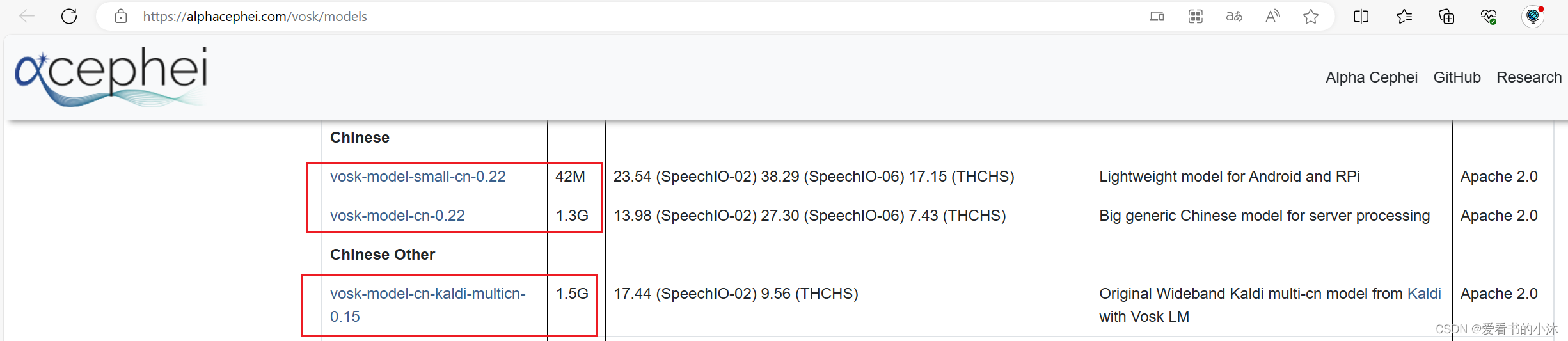

The models available for download are listed at the link below. Place them in your project's models folder, for example "your-project-folder/models/your-vosk-model".

https://alphacephei.com/vosk/models

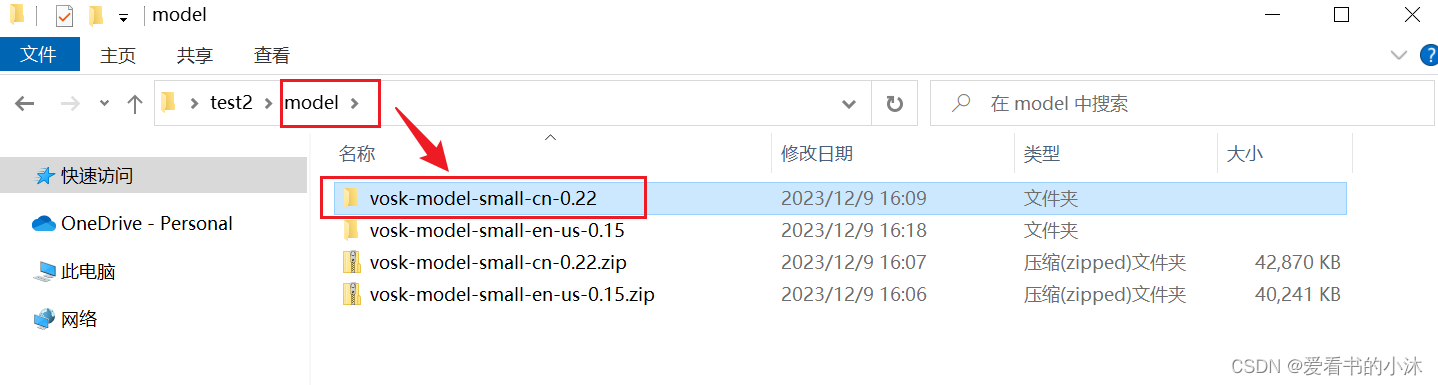

In the folder that contains the test script, create a subfolder named model and extract the downloaded model into it, as shown:

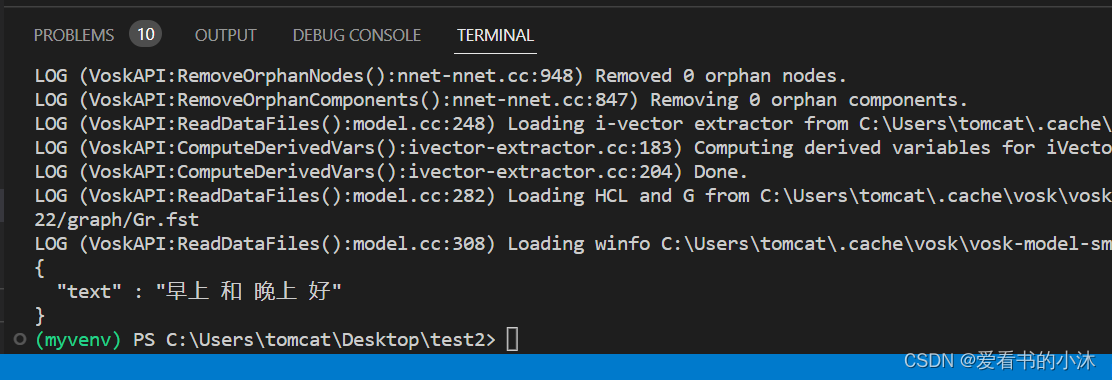

Write the script:

import speech_recognition as sr
from vosk import Model

r = sr.Recognizer()

with sr.Microphone() as source:
    audio = r.listen(source, timeout=3, phrase_time_limit=3)

# attach the Chinese model to the recognizer before calling recognize_vosk()
r.vosk_model = Model(model_name="vosk-model-small-cn-0.22")
text = r.recognize_vosk(audio, language='zh-cn')
print(text)

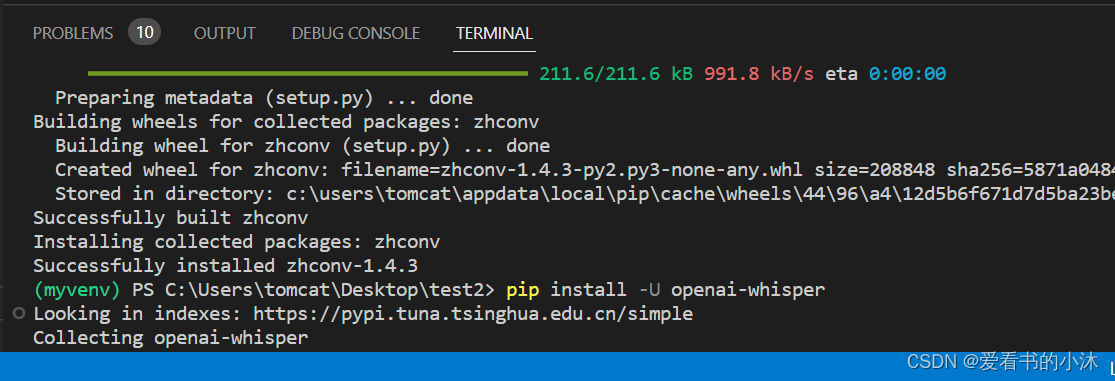

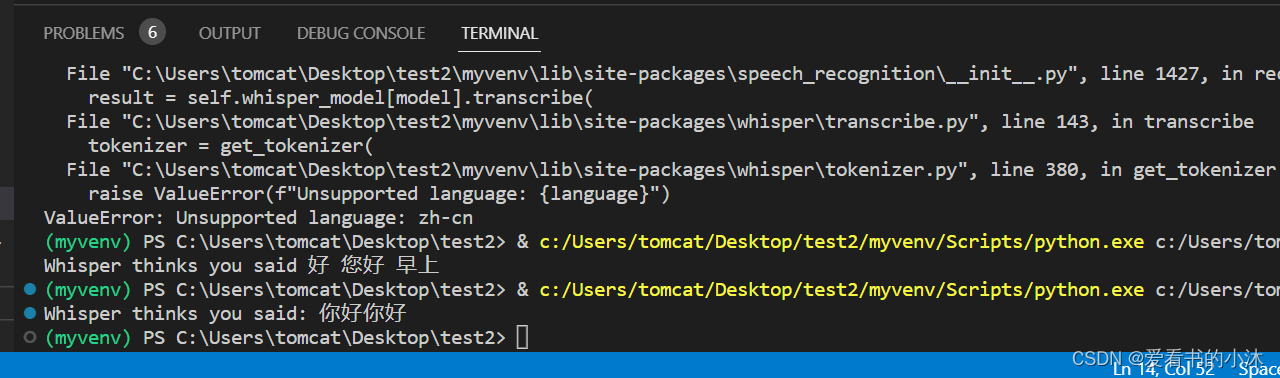
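The value printed by the script is a JSON string rather than plain text, which is also why the FastAPI example in section 3.2 passes it through json.loads. A minimal sketch for pulling out the recognized text, assuming the result is an object with a "text" field; vosk_text() is a hypothetical helper, and the exact return format may vary between library versions.

```python
import json


def vosk_text(raw_result):
    """Extract the "text" field from a Vosk JSON result string."""
    try:
        return json.loads(raw_result).get("text", "")
    except (ValueError, AttributeError):
        # Not valid JSON, or not a JSON object: return the input unchanged.
        return raw_result


print(vosk_text('{"text": "hello world"}'))
```

In the script above this would be used as print(vosk_text(text)).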

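2.6 Installing Whisper (offline)

The OpenAI model is published on PyPI as openai-whisper; the PyPI package named whisper is an unrelated project and should not be installed for this purpose.

pip install zhconv
pip install -U openai-whisper
pip3 install wheel
pip install soundfile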

Write the script:

import speech_recognition as sr

r = sr.Recognizer()

with sr.Microphone() as source:
    audio = r.listen(source, timeout=3, phrase_time_limit=5)

# recognize speech using Whisper
try:
    print("Whisper thinks you said: " + r.recognize_whisper(audio, language="chinese"))
except sr.UnknownValueError:
    print("Whisper could not understand audio")
except sr.RequestError as e:
    print("Could not request results from Whisper; {0}".format(e))

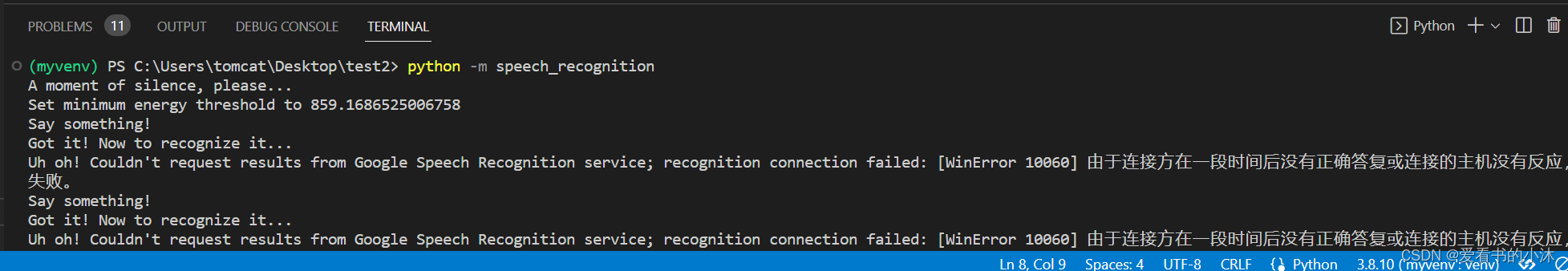
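The zhconv package is installed above but not used in the script. One plausible use, and this is an assumption rather than something the script shows, is to normalize Whisper's Chinese output to Simplified Chinese, since the model may return Traditional characters. to_simplified() is a hypothetical helper that falls back to the original text when zhconv is missing.

```python
def to_simplified(text):
    """Convert Chinese text to Simplified Chinese when zhconv is available."""
    try:
        import zhconv  # optional dependency: pip install zhconv
    except ImportError:
        return text  # zhconv not installed: leave the text as it is
    return zhconv.convert(text, "zh-cn")


print(to_simplified("hello"))
```

It would wrap the recognizer call, for example to_simplified(r.recognize_whisper(audio, language="chinese")).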

3 Testing

3.1 Command line

python -m speech_recognition

3.2 FastAPI

import json
import os

import speech_recognition
import uvicorn
from fastapi import FastAPI, HTTPException
from pydantic import BaseModel

# Not referenced below; importing them makes startup fail early
# if one of the recognizer dependencies is missing.
import soundfile
import torch
import vosk
import whisper


class ResponseModel(BaseModel):
    path: str  # path to a .wav file on the machine running the server


app = FastAPI()


def get_path(req: ResponseModel):
    """Validate the path in the request and return it."""
    path = req.path
    if path == "":
        raise HTTPException(status_code=400, detail="No path provided")
    if not path.endswith(".wav"):
        raise HTTPException(status_code=400, detail="Invalid file type")
    if not os.path.exists(path):
        raise HTTPException(status_code=404, detail="File does not exist")
    return path


@app.get("/")
def root():
    return {"message": "speech-recognition api"}


@app.post("/recognize-google")
def recognize_google(req: ResponseModel):
    path = get_path(req)
    r = speech_recognition.Recognizer()
    with speech_recognition.AudioFile(path) as source:
        audio = r.record(source)
    return r.recognize_google(audio, language='ja-JP', show_all=True)


@app.post("/recognize-vosk")
def recognize_vosk(req: ResponseModel):
    path = get_path(req)
    r = speech_recognition.Recognizer()
    with speech_recognition.AudioFile(path) as source:
        audio = r.record(source)
    return json.loads(r.recognize_vosk(audio, language='ja'))


@app.post("/recognize-whisper")
def recognize_whisper(req: ResponseModel):
    path = get_path(req)
    r = speech_recognition.Recognizer()
    with speech_recognition.AudioFile(path) as source:
        audio = r.record(source)
    result = r.recognize_whisper(audio, language='ja')
    try:
        return json.loads(result)
    except ValueError:
        # the result is plain text rather than JSON
        return {"text": result}


if __name__ == "__main__":
    host = os.environ.get('HOST', '0.0.0.0')
    port = int(os.environ.get('PORT', 8080))
    # "main:app" assumes this file is saved as main.py
    uvicorn.run("main:app", host=host, port=port)
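To call the service, a client posts a JSON body with a single path field. The sketch below uses only the standard library; build_request() and recognize() are hypothetical helpers, and the base URL assumes the server runs locally on the default port 8080 from the script above.

```python
import json
import urllib.request


def build_request(base_url, engine, wav_path):
    """Build a POST request for /recognize-<engine> with {"path": wav_path}."""
    body = json.dumps({"path": wav_path}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/recognize-{engine}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def recognize(base_url, engine, wav_path):
    """Send the request and decode the JSON response (needs a running server)."""
    with urllib.request.urlopen(build_request(base_url, engine, wav_path)) as resp:
        return json.loads(resp.read().decode("utf-8"))


req = build_request("http://localhost:8080", "vosk", "/data/sample.wav")
print(req.get_method(), req.full_url)
```

Note that the path is resolved on the server, so the WAV file must exist on the machine running the API, not on the client.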

3.3 Google

import speech_recognition as sr
import webbrowser as wb

import speak  # text-to-speech helper module used by this script; not part of SpeechRecognition

chrome_path = 'C:/Program Files (x86)/Google/Chrome/Application/chrome.exe %s'

r = sr.Recognizer()

with sr.Microphone() as source:
    print('Say something!')
    audio = r.listen(source)
    print('Done!')

try:
    text = r.recognize_google(audio)
    print('Google thinks you said:\n' + text)
    lang = 'en'
    speak.tts(text, lang)  # read the recognized text aloud
    f_text = 'https://www.google.co.in/search?q=' + text
    wb.get(chrome_path).open(f_text)  # open the search in Chrome
except Exception as e:
    print(e)
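The script builds the search URL by plain string concatenation, so spaces and characters such as & in the recognized text end up unescaped. A minimal sketch of an encoded variant using the standard library; build_search_url() is a hypothetical helper.

```python
from urllib.parse import urlencode


def build_search_url(text, base="https://www.google.co.in/search"):
    """Return a search URL with the query text percent-encoded."""
    return f"{base}?{urlencode({'q': text})}"


print(build_search_url("speech recognition"))
```

The result can be passed to wb.get(chrome_path).open() in place of f_text.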

3.4 recognize_sphinx

import logging

import speech_recognition as sr


def audio_sphinx(filename):
    logging.info('Recognizing the audio file...')
    # use the audio file as the audio source
    r = sr.Recognizer()
    with sr.AudioFile(filename) as source:
        audio = r.record(source)  # read the entire audio file
    # recognize speech using Sphinx
    try:
        print("Sphinx thinks you said: " + r.recognize_sphinx(audio, language='zh-CN'))
    except sr.UnknownValueError:
        print("Sphinx could not understand audio")
    except sr.RequestError as e:
        print("Sphinx error; {0}".format(e))


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    wav_num = 0
    while True:
        r = sr.Recognizer()
        # open the microphone
        mic = sr.Microphone()
        logging.info('Recording...')
        with mic as source:
            # calibrate for ambient noise
            r.adjust_for_ambient_noise(source)
            audio = r.listen(source)
        with open(f"00{wav_num}.wav", "wb") as f:
            # save the audio captured from the microphone as a WAV file
            f.write(audio.get_wav_data(convert_rate=16000))
        logging.info('Recording finished, recognizing...')
        audio_sphinx(f"00{wav_num}.wav")
        wav_num += 1
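The file name pattern f"00{wav_num}.wav" produces 000.wav through 009.wav and then 0010.wav, so the names stop sorting in recording order after ten files. A small sketch of fixed-width numbering; wav_name() is a hypothetical helper.

```python
def wav_name(index, width=3):
    """Return a zero-padded WAV file name such as 007.wav."""
    return f"{index:0{width}d}.wav"


print([wav_name(i) for i in (0, 9, 10)])
```

Numbers wider than the chosen width are kept as they are, so no recordings are overwritten.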

3.5 Recording speech to an audio file

- Method 1:

import speech_recognition as sr


# use SpeechRecognition to record audio
def my_record(rate=16000):
    r = sr.Recognizer()
    with sr.Microphone(sample_rate=rate) as source:
        print("Please say something")
        audio = r.listen(source)
    # the voices folder must already exist
    with open("voices/myvoices.wav", "wb") as f:
        f.write(audio.get_wav_data())
    print("Recording finished!")


my_record()

- Method 2:

import time
import wave

from pyaudio import PyAudio, paInt16

framerate = 16000  # sample rate (Hz)
num_samples = 2000  # frames per read
channels = 1  # number of channels
sampwidth = 2  # sample width: 2 bytes
filepath = 'voices/myvoices.wav'


def save_wave_file(filepath, data):
    wf = wave.open(filepath, 'wb')
    wf.setnchannels(channels)
    wf.setsampwidth(sampwidth)
    wf.setframerate(framerate)
    wf.writeframes(b''.join(data))
    wf.close()


# record audio
def my_record():
    pa = PyAudio()
    # open a new audio stream
    stream = pa.open(format=paInt16, channels=channels,
                     rate=framerate, input=True, frames_per_buffer=num_samples)
    my_buf = []  # holds the recorded chunks
    t = time.time()
    print('Recording...')
    while time.time() < t + 10:  # recording duration (seconds)
        # read in a loop, 2000 frames per read
        string_audio_data = stream.read(num_samples)
        my_buf.append(string_audio_data)
    print('Recording finished.')
    save_wave_file(filepath, my_buf)
    stream.close()
    pa.terminate()


my_record()
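Method 2 stops on wall-clock time, but each read delivers exactly 2000 frames, which is 0.125 s at 16 kHz, so a 10-second recording corresponds to about 80 reads. The sketch below works through that arithmetic and checks a saved file's duration with the standard library; reads_needed() and wav_duration_seconds() are hypothetical helpers.

```python
import math
import wave


def reads_needed(seconds, framerate=16000, frames_per_read=2000):
    """Number of fixed-size reads needed to cover the given duration."""
    return math.ceil(seconds * framerate / frames_per_read)


def wav_duration_seconds(path):
    """Duration of a WAV file: frame count divided by sample rate."""
    with wave.open(str(path), "rb") as wav:
        return wav.getnframes() / wav.getframerate()


print(reads_needed(10))
```

Calling wav_duration_seconds('voices/myvoices.wav') after a recording is a quick way to confirm that the file has the expected length.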


