使用java远程提交flink任务到yarn集群

背景

由于业务需要,使用命令行的方式提交flink任务比较麻烦,要么将后端任务部署到大数据集群,要么弄一个提交机,感觉都不是很离线。经过一些调研,发现可以实现远程的任务发布。接下来就记录一下实现过程。这里用flink on yarn 的application模式实现

环境准备

- 大数据集群,只要有hadoop就行

- 后端服务器,linux mac都行,windows不行

正式开始

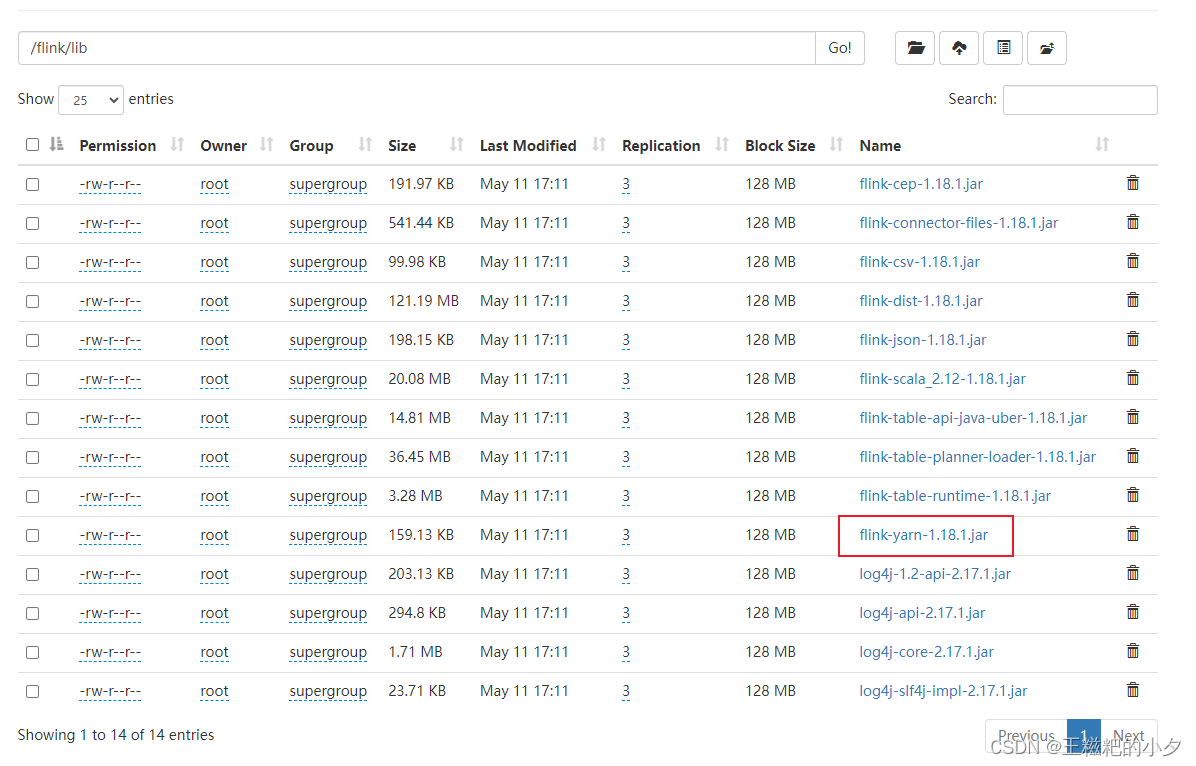

1. 上传flink jar包到hdfs

去flink官网下载你需要的版本,我这里用的是flink-1.18.1,把flink lib目录下的jar包传到hdfs中。

其中flink-yarn-1.18.1.jar需要大家自己去maven仓库下载。

2. 编写一段flink代码

随便写一段flink代码就行,我们目的是测试

package com.azt;

import org.apache.flink.streaming.api.datastream.datastreamsource;

import org.apache.flink.streaming.api.environment.streamexecutionenvironment;

import org.apache.flink.streaming.api.functions.source.sourcefunction;

import java.util.random;

import java.util.concurrent.timeunit;

public class wordcount {

public static void main(string[] args) throws exception {

streamexecutionenvironment env = streamexecutionenvironment.getexecutionenvironment();

datastreamsource<string> source = env.addsource(new sourcefunction<string>() {

@override

public void run(sourcecontext<string> ctx) throws exception {

string[] words = {"spark", "flink", "hadoop", "hdfs", "yarn"};

random random = new random();

while (true) {

ctx.collect(words[random.nextint(words.length)]);

timeunit.seconds.sleep(1);

}

}

@override

public void cancel() {

}

});

source.print();

env.execute();

}

}

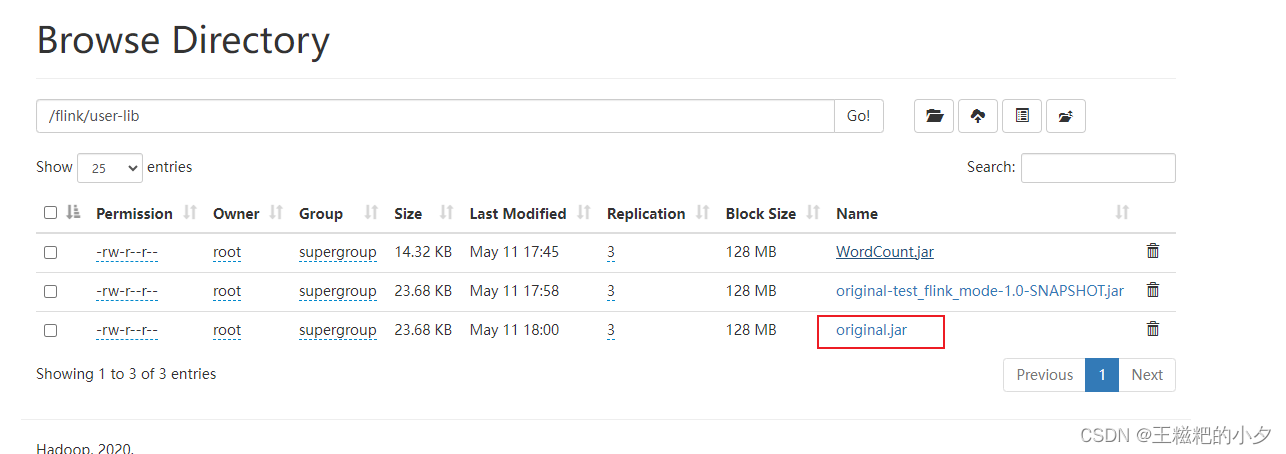

3. 打包第二步的代码,上传到hdfs

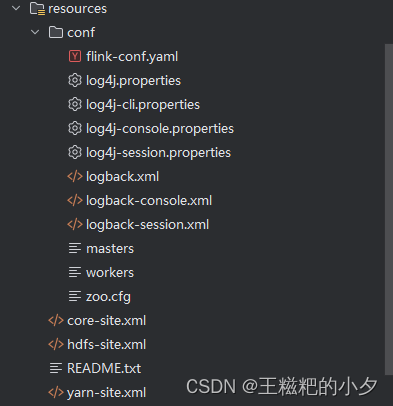

4. 拷贝配置文件

- 拷贝flink conf下的所有文件到java项目的resource中

- 拷贝hadoop配置文件到到java项目的resource中

具体看截图

5. 编写java远程提交任务的程序

这一步有个注意的地方就是,如果你跟我一样是windows电脑,那么本地用idea提交会报错;如果你是mac或者linux,那么可以直接在idea中提交任务。

package com.test;

import org.apache.flink.client.deployment.clusterdeploymentexception;

import org.apache.flink.client.deployment.clusterspecification;

import org.apache.flink.client.deployment.application.applicationconfiguration;

import org.apache.flink.client.program.clusterclient;

import org.apache.flink.client.program.clusterclientprovider;

import org.apache.flink.configuration.*;

import org.apache.flink.runtime.client.jobstatusmessage;

import org.apache.flink.yarn.yarnclientyarnclusterinformationretriever;

import org.apache.flink.yarn.yarnclusterdescriptor;

import org.apache.flink.yarn.yarnclusterinformationretriever;

import org.apache.flink.yarn.configuration.yarnconfigoptions;

import org.apache.flink.yarn.configuration.yarndeploymenttarget;

import org.apache.flink.yarn.configuration.yarnlogconfigutil;

import org.apache.hadoop.fs.path;

import org.apache.hadoop.yarn.api.records.applicationid;

import org.apache.hadoop.yarn.client.api.yarnclient;

import org.apache.hadoop.yarn.conf.yarnconfiguration;

import java.util.arraylist;

import java.util.collection;

import java.util.collections;

import java.util.list;

import java.util.concurrent.completablefuture;

import static org.apache.flink.configuration.memorysize.memoryunit.mega_bytes;

/**

* @date :2021/5/12 7:16 下午

*/

public class main {

public static void main(string[] args) throws exception {

///home/root/flink/lib/lib

system.setproperty("hadoop_user_name","root");

// string configurationdirectory = "c:\\project\\test_flink_mode\\src\\main\\resources\\conf";

string configurationdirectory = "/export/server/flink-1.18.1/conf";

org.apache.hadoop.conf.configuration conf = new org.apache.hadoop.conf.configuration();

conf.set("fs.hdfs.impl","org.apache.hadoop.hdfs.distributedfilesystem");

conf.set("fs.file.impl", org.apache.hadoop.fs.localfilesystem.class.getname());

string flinklibs = "hdfs://node1.itcast.cn/flink/lib";

string userjarpath = "hdfs://node1.itcast.cn/flink/user-lib/original.jar";

string flinkdistjar = "hdfs://node1.itcast.cn/flink/lib/flink-yarn-1.18.1.jar";

yarnclient yarnclient = yarnclient.createyarnclient();

yarnconfiguration yarnconfiguration = new yarnconfiguration();

yarnclient.init(yarnconfiguration);

yarnclient.start();

yarnclusterinformationretriever clusterinformationretriever = yarnclientyarnclusterinformationretriever

.create(yarnclient);

//获取flink的配置

configuration flinkconfiguration = globalconfiguration.loadconfiguration(

configurationdirectory);

flinkconfiguration.set(checkpointingoptions.incremental_checkpoints, true);

flinkconfiguration.set(

pipelineoptions.jars,

collections.singletonlist(

userjarpath));

yarnlogconfigutil.setlogconfigfileinconfig(flinkconfiguration,configurationdirectory);

path remotelib = new path(flinklibs);

flinkconfiguration.set(

yarnconfigoptions.provided_lib_dirs,

collections.singletonlist(remotelib.tostring()));

flinkconfiguration.set(

yarnconfigoptions.flink_dist_jar,

flinkdistjar);

//设置为application模式

flinkconfiguration.set(

deploymentoptions.target,

yarndeploymenttarget.application.getname());

//yarn application name

flinkconfiguration.set(yarnconfigoptions.application_name, "jobname");

//设置配置,可以设置很多

flinkconfiguration.set(jobmanageroptions.total_process_memory, memorysize.parse("1024",mega_bytes));

flinkconfiguration.set(taskmanageroptions.total_process_memory, memorysize.parse("1024",mega_bytes));

flinkconfiguration.set(taskmanageroptions.num_task_slots, 4);

flinkconfiguration.setinteger("parallelism.default", 4);

clusterspecification clusterspecification = new clusterspecification.clusterspecificationbuilder()

.createclusterspecification();

// 设置用户jar的参数和主类

applicationconfiguration appconfig = new applicationconfiguration(args,"com.azt.wordcount");

yarnclusterdescriptor yarnclusterdescriptor = new yarnclusterdescriptor(

flinkconfiguration,

yarnconfiguration,

yarnclient,

clusterinformationretriever,

true);

clusterclientprovider<applicationid> clusterclientprovider = null;

try {

clusterclientprovider = yarnclusterdescriptor.deployapplicationcluster(

clusterspecification,

appconfig);

} catch (clusterdeploymentexception e){

e.printstacktrace();

}

clusterclient<applicationid> clusterclient = clusterclientprovider.getclusterclient();

system.out.println(clusterclient.getwebinterfaceurl());

applicationid applicationid = clusterclient.getclusterid();

system.out.println(applicationid);

collection<jobstatusmessage> jobstatusmessages = clusterclient.listjobs().get();

int counts = 30;

while (jobstatusmessages.size() == 0 && counts > 0) {

thread.sleep(1000);

counts--;

jobstatusmessages = clusterclient.listjobs().get();

if (jobstatusmessages.size() > 0) {

break;

}

}

if (jobstatusmessages.size() > 0) {

list<string> jids = new arraylist<>();

for (jobstatusmessage jobstatusmessage : jobstatusmessages) {

jids.add(jobstatusmessage.getjobid().tohexstring());

}

system.out.println(string.join(",",jids));

}

}

}

由于我这里是windows电脑,所以我打包放到服务器上去运行

执行命令 :

不出以外的话,会打印如下日志

log4j:warn no appenders could be found for logger (org.apache.hadoop.util.shell).

log4j:warn please initialize the log4j system properly.

log4j:warn see http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

http://node2:33811

application_1715418089838_0017

6d4d6ed5277a62fc9a3a274c4f34a468

复制打印的url连接,就可以打开flink的webui了,在yarn的前端页面中也可以看到flink任务。

发表评论