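I. Environment Setup and Core Libraries

1. Installing the required libraries

pip install librosa pydub ffmpeg-python soundfile noisereduce SpeechRecognition

Later sections also import scipy, matplotlib, spleeter, moviepy, mutagen, cupy and pyaudio; install those only for the examples that need them.

2. Library comparison

| Library | Core functionality | Typical use | Characteristics |
|---|---|---|---|
| librosa | Audio feature extraction, spectral analysis | Music information retrieval, machine learning | Rich analysis API; loads whole files by default, block-wise reading via librosa.stream |
| pydub | Format conversion, cutting | Simple editing, format conversion | Easy to use; depends on ffmpeg |
| soundfile | Fast audio reading and writing | Large-scale audio processing | Wrapper around the libsndfile C library (not pure Python); supports block-wise I/O |
| ffmpeg-python | Low-level wrapper around ffmpeg | Professional-grade audio processing | Powerful, but with a steep learning curve |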

II. Reading Audio Files and Converting Formats

1. Reading common audio formats

import librosa
from pydub import AudioSegment

# Read with librosa (suited to analysis)
audio, sr = librosa.load('input.mp3', sr=16000)  # resample to 16 kHz

# Read with pydub (suited to editing)
audio_pydub = AudioSegment.from_file('input.wav', format='wav')

2. Converting audio formats

from pydub import AudioSegment

def convert_audio(input_path, output_path, output_format='wav'):
    """Convert the audio format and normalize its parameters."""
    audio = AudioSegment.from_file(input_path)
    # Standard parameters: mono, 16 kHz sample rate, 16-bit depth
    audio = audio.set_channels(1)        # mono
    audio = audio.set_frame_rate(16000)  # 16 kHz
    audio = audio.set_sample_width(2)    # 16 bit = 2 bytes
    audio.export(output_path, format=output_format)
    print(f"Converted: {input_path} -> {output_path}")

# Example: MP3 to WAV
convert_audio('speech.mp3', 'speech_16k.wav')

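3. Supported formats

| Format | Read | Write | Notes |
|---|---|---|---|
| mp3 | ✅ | ✅ | Requires ffmpeg |
| wav | ✅ | ✅ | First choice for lossless audio |
| flac | ✅ | ✅ | Lossless compression |
| ogg | ✅ | ✅ | Open format |
| aac | ✅ | ❌ | Limited by some libraries |
| m4a | ✅ | ❌ | Common on Apple devices |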

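III. Core Audio Extraction Techniques

1. Extracting the voice (noise reduction)

import noisereduce as nr
from scipy.io import wavfile

# Load the audio (this example assumes a mono WAV file)
rate, audio = wavfile.read("mixed_audio.wav")

# Take the first 500 ms as a noise sample (assumes it contains only background noise)
noise_clip = audio[:int(rate * 0.5)]

# Reduce the noise
reduced_noise = nr.reduce_noise(
    y=audio,
    sr=rate,
    y_noise=noise_clip,
    stationary=True,
    prop_decrease=0.9
)

# Save the result
wavfile.write("cleaned_voice.wav", rate, reduced_noise)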

2. Extracting the background music (non-vocal part)

from spleeter.separator import Separator

# Create the separator (2 stems: vocals / accompaniment)
separator = Separator('spleeter:2stems')

# Separate the audio
separator.separate_to_file('song_with_vocals.mp3', 'output_folder/')

# Output paths:
# output_folder/song_with_vocals/vocals.wav
# output_folder/song_with_vocals/accompaniment.wav

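3. Extracting a frequency range (e.g. bass)

import numpy as np
from scipy.io import wavfile
from scipy.signal import butter, filtfilt

def extract_bass(input_path, output_path, lowcut=60, highcut=250):
    """Extract the low-frequency (bass) part of a 16-bit WAV file."""
    rate, audio = wavfile.read(input_path)
    # Design the band-pass filter; cutoffs are normalized to the Nyquist frequency
    nyquist = 0.5 * rate
    low = lowcut / nyquist
    high = highcut / nyquist
    b, a = butter(4, [low, high], btype='band')
    # Apply the filter along the time axis (axis 0 also covers stereo files)
    bass_audio = filtfilt(b, a, audio, axis=0)
    # Clip before converting back to int16 to avoid wrap-around
    bass_audio = np.clip(bass_audio, -32768, 32767)
    wavfile.write(output_path, rate, bass_audio.astype(np.int16))

# Extract the 60-250 Hz bass range
extract_bass('electronic_music.wav', 'bass_only.wav')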
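To see what a band-pass step does without SciPy, here is a minimal sketch that swaps in a different, simpler filter: a single second-order band-pass section using the commonly cited Audio EQ Cookbook formulas, not the fourth-order Butterworth design with zero-phase filtering used above. The helper names (`bandpass_coeffs`, `apply_biquad`, `rms`, `sine`) are made up for this illustration, and the test tones are synthetic.

```python
import math

def bandpass_coeffs(lowcut, highcut, rate):
    """Return normalized (b, a) coefficients of a second-order band-pass filter."""
    if not 0 < lowcut < highcut < rate / 2:
        raise ValueError("cutoffs must satisfy 0 < lowcut < highcut < rate/2")
    f0 = math.sqrt(lowcut * highcut)   # geometric center frequency
    q = f0 / (highcut - lowcut)        # quality factor derived from the bandwidth
    w0 = 2 * math.pi * f0 / rate
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b = (alpha / a0, 0.0, -alpha / a0)
    a = (1.0, -2 * math.cos(w0) / a0, (1 - alpha) / a0)
    return b, a

def apply_biquad(b, a, samples):
    """Filter a sequence of floats with the difference equation of one biquad."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out

def rms(samples):
    """Root-mean-square level of a sequence of floats."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def sine(freq, rate, seconds=1.0):
    """Unit-amplitude sine wave as a list of floats."""
    return [math.sin(2 * math.pi * freq * n / rate) for n in range(int(rate * seconds))]

rate = 16000
b, a = bandpass_coeffs(60, 250, rate)
bass_rms = rms(apply_biquad(b, a, sine(120, rate)))     # inside the pass band
treble_rms = rms(apply_biquad(b, a, sine(5000, rate)))  # far outside it
print(f"120 Hz RMS: {bass_rms:.3f}, 5000 Hz RMS: {treble_rms:.3f}")
```

The 120 Hz tone passes almost unchanged while the 5000 Hz tone is strongly attenuated. A single second-order section has much gentler slopes than the Butterworth filter above, so treat this as a way to inspect the arithmetic rather than a replacement.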

IV. Advanced Audio Feature Extraction

1. Spectral features

import librosa
import librosa.display
import matplotlib.pyplot as plt
import numpy as np

y, sr = librosa.load('speech.wav')

# MFCCs (a key feature for speech recognition)
mfcc = librosa.feature.mfcc(y=y, sr=sr, n_mfcc=13)
print("MFCC shape:", mfcc.shape)  # (13, number of frames)

# Chroma features (music analysis)
chroma = librosa.feature.chroma_stft(y=y, sr=sr)
print("Chroma shape:", chroma.shape)  # (12, number of frames)

# Beat information
tempo, beat_frames = librosa.beat.beat_track(y=y, sr=sr)
tempo = float(np.atleast_1d(tempo)[0])  # newer librosa versions return an array
beat_times = librosa.frames_to_time(beat_frames, sr=sr)
print(f"Tempo: {tempo:.1f} BPM, first beat times: {beat_times[:5]}")

# Spectrogram
plt.figure(figsize=(10, 4))
D = librosa.amplitude_to_db(np.abs(librosa.stft(y)), ref=np.max)
librosa.display.specshow(D, sr=sr, x_axis='time', y_axis='log')
plt.colorbar(format='%+2.0f dB')
plt.title('Spectrogram')
plt.savefig('spectrogram.png', dpi=300)
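The "(13, number of frames)" shape follows from how the signal is cut into frames. As a rough sketch, assuming librosa's defaults (resampling to 22050 Hz on load, `hop_length=512`, centered frames), the frame count and the frame-to-time conversion can be reproduced with plain arithmetic. `expected_frames` and `frame_to_seconds` are illustrative helpers, not librosa functions.

```python
def expected_frames(n_samples, hop_length=512):
    """Number of frames for a centered STFT: one frame per hop, plus one."""
    return 1 + n_samples // hop_length

def frame_to_seconds(frame_index, sr=22050, hop_length=512):
    """Time associated with a frame index: frame * hop_length / sample rate."""
    return frame_index * hop_length / sr

n_samples = 3 * 22050                  # a 3-second clip at 22050 Hz
frames = expected_frames(n_samples)
print("MFCC shape would be:", (13, frames))
print("Frame 43 is at about", round(frame_to_seconds(43), 3), "s")
```

With these defaults a frame covers roughly 23 ms of hop, so about 43 frames make up one second of audio.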

2. Speech to text (content extraction)

import speech_recognition as sr

def speech_to_text(audio_path):
    """Transcribe an audio file with the Google Web Speech API (needs network access)."""
    r = sr.Recognizer()
    with sr.AudioFile(audio_path) as source:
        audio_data = r.record(source)
    try:
        return r.recognize_google(audio_data, language='zh-CN')
    except sr.UnknownValueError:
        return "Could not understand the audio"
    except sr.RequestError as e:
        return f"API request failed: {e}"

# Transcribe Mandarin speech
text_content = speech_to_text('chinese_speech.wav')
print("Result:", text_content)

V. Splitting and Trimming Audio

1. Splitting on silence

import os
from pydub import AudioSegment
from pydub.silence import split_on_silence

def split_audio_on_silence(input_path, output_folder, min_silence_len=500, silence_thresh=-40):
    """Split an audio file automatically at silent passages."""
    audio = AudioSegment.from_file(input_path)
    os.makedirs(output_folder, exist_ok=True)
    # Split the audio
    chunks = split_on_silence(
        audio,
        min_silence_len=min_silence_len,  # minimum length of a silence (ms)
        silence_thresh=silence_thresh,    # silence threshold (dBFS)
        keep_silence=300                  # silence kept at each chunk edge (ms)
    )
    # Export the chunks
    for i, chunk in enumerate(chunks):
        chunk.export(os.path.join(output_folder, f"segment_{i}.wav"), format="wav")
    print(f"Done: {len(chunks)} segments")

# Example: split a long lecture into short sentences
split_audio_on_silence("long_lecture.mp3", "lecture_segments")
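The two parameters, a minimum silence length and a dBFS threshold, are easier to tune once the underlying idea is visible. The following is a simplified sketch of silence-based segmentation on a plain list of 16-bit sample values. It is not pydub's implementation, and `window_dbfs`, `nonsilent_ranges` and the 10 ms window are assumptions made for the illustration.

```python
import math

def window_dbfs(window, full_scale=32768):
    """RMS level of a window of 16-bit samples in dBFS (-inf for digital silence)."""
    rms = math.sqrt(sum(s * s for s in window) / len(window))
    return 20 * math.log10(rms / full_scale) if rms > 0 else float("-inf")

def nonsilent_ranges(samples, rate, min_silence_ms=500, silence_thresh=-40, window_ms=10):
    """Return (start_ms, end_ms) ranges separated by at least min_silence_ms of silence."""
    win = max(1, rate * window_ms // 1000)
    # Mark each fixed-size window as loud (True) or silent (False)
    loud = [window_dbfs(samples[i:i + win]) > silence_thresh
            for i in range(0, len(samples), win)]
    ranges, start, quiet_run = [], None, 0
    for idx, is_loud in enumerate(loud):
        if is_loud:
            if start is None:
                start = idx
            quiet_run = 0
        elif start is not None:
            quiet_run += 1
            if quiet_run * window_ms >= min_silence_ms:
                # The silence is long enough: close the range where the silence began
                ranges.append((start * window_ms, (idx - quiet_run + 1) * window_ms))
                start, quiet_run = None, 0
    if start is not None:
        ranges.append((start * window_ms, (len(loud) - quiet_run) * window_ms))
    return ranges

rate = 8000
tone = [int(10000 * math.sin(2 * math.pi * 440 * n / rate)) for n in range(rate)]  # 1 s
long_gap = [0] * rate           # 1 s of silence: long enough to split on
short_gap = [0] * (rate * 3 // 10)  # 300 ms of silence: too short to split on

print(nonsilent_ranges(tone + long_gap + tone, rate))
print(nonsilent_ranges(tone + short_gap + tone, rate))
```

Raising `min_silence_ms` merges segments separated by short pauses, and raising `silence_thresh` (toward 0 dBFS) treats quieter passages as silence.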

2. Extracting a time range

from pydub import AudioSegment

def extract_time_range(input_path, output_path, start_sec, end_sec):
    """Extract the audio between two timestamps given in seconds."""
    audio = AudioSegment.from_file(input_path)
    # pydub slices by milliseconds
    start_ms = int(start_sec * 1000)
    end_ms = int(end_sec * 1000)
    segment = audio[start_ms:end_ms]
    segment.export(output_path, format="wav")
    print(f"Extracted {start_sec}-{end_sec} s")

# Extract the clip from 1:30 to 2:00
extract_time_range("podcast.mp3", "highlight.wav", 90, 120)

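VI. Practical Examples

1. Batch-extracting audio from videos

import os
# moviepy 1.x import path; moviepy 2.x uses `from moviepy import VideoFileClip`
from moviepy.editor import VideoFileClip

def extract_audio_from_videos(video_folder, output_folder):
    """Extract the audio track of every video in a folder."""
    os.makedirs(output_folder, exist_ok=True)
    for file in os.listdir(video_folder):
        if file.lower().endswith(('.mp4', '.mov', '.avi')):
            video_path = os.path.join(video_folder, file)
            output_path = os.path.join(output_folder, f"{os.path.splitext(file)[0]}.mp3")
            try:
                with VideoFileClip(video_path) as video:
                    if video.audio is None:
                        print(f"No audio track: {file}")
                        continue
                    # logger=None silences the progress output
                    video.audio.write_audiofile(output_path, logger=None)
                print(f"Extracted: {file}")
            except Exception as e:
                print(f"Failed to process {file}: {e}")

# Extract the audio of every video in a folder
extract_audio_from_videos("videos/", "extracted_audio/")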

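2. Embedding and extracting an audio watermark

import soundfile as sf

def embed_watermark(input_path, output_path, watermark_text):
    """Embed an ASCII text watermark in the least significant bits of 16-bit samples."""
    # Read integer PCM; the default float64 samples have no usable LSB
    audio, sr = sf.read(input_path, dtype='int16')
    flat = audio.reshape(-1)  # view over all channels, so edits modify `audio`
    # Convert the text to bits (8 bits per character, ASCII only)
    binary_msg = ''.join(format(b, '08b') for b in watermark_text.encode('ascii'))
    binary_msg += '00000000'  # end marker
    # One bit is stored in the LSB of every 8th sample
    max_bits = len(flat) // 8
    if len(binary_msg) > max_bits:
        raise ValueError("Watermark is too long")
    for i, bit in enumerate(binary_msg):
        idx = i * 8
        # Clear only the lowest bit (masking with 0xfe would wipe the upper byte)
        flat[idx] = (int(flat[idx]) & ~1) | int(bit)
    # Write lossless 16-bit PCM; a lossy format would destroy the watermark
    sf.write(output_path, audio, sr, subtype='PCM_16')
    print(f"Watermark embedded: {watermark_text}")

def extract_watermark(audio_path):
    """Extract the watermark from the audio."""
    audio, _ = sf.read(audio_path, dtype='int16')
    flat = audio.reshape(-1)
    binary_msg = ""
    for i in range(0, len(flat), 8):
        binary_msg += str(int(flat[i]) & 1)
        # Stop at the end marker
        if len(binary_msg) % 8 == 0 and binary_msg[-8:] == '00000000':
            break
    # Bits back to text
    watermark = ""
    for i in range(0, len(binary_msg) - 8, 8):  # skip the end marker
        watermark += chr(int(binary_msg[i:i + 8], 2))
    return watermark

# Usage
embed_watermark("original.wav", "watermarked.wav", "copyright@2024")
extracted = extract_watermark("watermarked.wav")
print("Extracted watermark:", extracted)  # expected: copyright@2024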
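The bit conversion stores one byte per character, which only works for single-byte text. One possible extension, sketched here under the assumption that the watermark should also carry non-ASCII text such as Chinese, is to encode the string as UTF-8 first. The example works on a plain list of integers standing in for 16-bit samples, and all helper names are hypothetical.

```python
def text_to_bits(text):
    """UTF-8 encode the text and append a zero byte as the end marker."""
    payload = text.encode("utf-8") + b"\x00"
    return [(byte >> shift) & 1 for byte in payload for shift in range(7, -1, -1)]

def embed_bits(samples, bits, step=8):
    """Write one bit into the least significant bit of every `step`-th sample."""
    if len(bits) * step > len(samples):
        raise ValueError("watermark is too long for this signal")
    marked = list(samples)
    for i, bit in enumerate(bits):
        marked[i * step] = (marked[i * step] & ~1) | bit
    return marked

def extract_text(samples, step=8):
    """Read bits back until the zero end marker, then decode as UTF-8."""
    data = bytearray()
    byte, count = 0, 0
    for sample in samples[::step]:
        byte = (byte << 1) | (sample & 1)
        count += 1
        if count == 8:
            if byte == 0:       # end marker reached
                break
            data.append(byte)
            byte, count = 0, 0
    return data.decode("utf-8")

message = "版权 copyright@2024"
samples = [((n * 37) % 2001) - 1000 for n in range(4000)]  # stand-in for int16 audio
marked = embed_bits(samples, text_to_bits(message))
recovered = extract_text(marked)
max_change = max(abs(a - b) for a, b in zip(samples, marked))
print(recovered, "| largest sample change:", max_change)
```

A zero byte is a safe end marker here because UTF-8 never produces it for any character other than NUL. Each carrier sample changes by at most one quantization step, which is why LSB watermarks are inaudible but also fragile: any lossy re-encoding or resampling erases them.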

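VII. Performance Optimization and Advanced Techniques

1. Streaming large files

import numpy as np
import soundfile as sf

def process_large_audio(input_path, output_path, chunk_size=1024):
    """Process a large audio file in chunks instead of loading it whole."""
    with sf.SoundFile(input_path) as infile:
        with sf.SoundFile(output_path, 'w',
                          samplerate=infile.samplerate,
                          channels=infile.channels,
                          subtype=infile.subtype) as outfile:
            while True:
                data = infile.read(chunk_size)  # chunk_size frames as float64
                if len(data) == 0:
                    break
                # Process the chunk here (example: raise the volume, clipped to [-1, 1])
                processed = np.clip(data * 1.5, -1.0, 1.0)
                outfile.write(processed)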
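The same chunked loop can be tried with nothing but the standard library. The sketch below uses the `wave` and `array` modules on a synthetic tone. It assumes 16-bit PCM input and a little-endian machine (WAV data is little-endian, while `array` uses native byte order), and `stream_gain` is a name chosen for this example.

```python
import array
import math
import os
import tempfile
import wave

def stream_gain(input_path, output_path, gain=1.5, chunk_frames=1024):
    """Apply a gain to a 16-bit PCM WAV chunk by chunk, clipping to the int16 range."""
    with wave.open(input_path, "rb") as src, wave.open(output_path, "wb") as dst:
        if src.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        dst.setparams(src.getparams())
        while True:
            raw = src.readframes(chunk_frames)
            if not raw:
                break
            chunk = array.array("h", raw)  # signed 16-bit, native (little-endian) order
            for i, s in enumerate(chunk):
                chunk[i] = max(-32768, min(32767, int(s * gain)))
            dst.writeframes(chunk.tobytes())

# Demo: write a loud one-second 440 Hz tone, then amplify it in chunks
tmp_dir = tempfile.mkdtemp()
src_path = os.path.join(tmp_dir, "in.wav")
dst_path = os.path.join(tmp_dir, "out.wav")
with wave.open(src_path, "wb") as w:
    w.setnchannels(1)
    w.setsampwidth(2)
    w.setframerate(8000)
    tone = array.array("h", (int(30000 * math.sin(2 * math.pi * 440 * n / 8000))
                             for n in range(8000)))
    w.writeframes(tone.tobytes())

stream_gain(src_path, dst_path)
with wave.open(dst_path, "rb") as r:
    out = array.array("h", r.readframes(r.getnframes()))
print(len(out), max(out), min(out))
```

Only one chunk is held in memory at a time, so the memory footprint stays constant regardless of file length. The explicit clamp matters: without it, a gain above 1 on loud material would wrap around the int16 range instead of clipping.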

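2. GPU acceleration

import cupy as cp
import librosa
# cp.fft has no dct; this assumes a CuPy release that ships cupyx.scipy.fft.dct
from cupyx.scipy.fft import dct

def gpu_mfcc(audio_path):
    """Compute MFCC-like features on the GPU (values differ slightly from librosa.feature.mfcc)."""
    y, sr = librosa.load(audio_path)
    # Move the data to the GPU
    y_gpu = cp.asarray(y)
    # STFT on the GPU: one windowed FFT per frame
    n_fft = 2048
    hop_length = 512
    window = cp.hanning(n_fft)
    stft = cp.stack([cp.fft.rfft(window * y_gpu[i:i + n_fft])
                     for i in range(0, len(y_gpu) - n_fft + 1, hop_length)])
    # Mel spectrogram
    mel_basis = librosa.filters.mel(sr=sr, n_fft=n_fft, n_mels=128)
    mel_basis_gpu = cp.asarray(mel_basis)
    mel_spectrogram = cp.dot(mel_basis_gpu, cp.abs(stft.T) ** 2)
    # MFCC: DCT of the log-mel spectrogram (the small offset avoids log(0))
    mfcc = dct(cp.log(mel_spectrogram + 1e-10), type=2, axis=0, norm='ortho')[:13]
    return cp.asnumpy(mfcc)  # back to the CPU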

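VIII. Error Handling and Best Practices

1. Robust error handling

import librosa
import numpy as np
import soundfile as sf
from pydub import AudioSegment

def safe_audio_read(path):
    """Read audio defensively, falling back to librosa if the first attempt fails."""
    try:
        if path.lower().endswith('.mp3'):
            # pydub (via ffmpeg) tends to handle MP3 more reliably
            audio = AudioSegment.from_file(path)
            # Integer samples; channels are interleaved for stereo input
            samples = np.array(audio.get_array_of_samples())
            return samples, audio.frame_rate
        return sf.read(path)
    except Exception as e:
        print(f"Audio read failed: {e}")
        # Fall back to librosa
        try:
            return librosa.load(path, sr=None)
        except Exception as fallback_error:
            raise RuntimeError(f"All readers failed: {path}") from fallback_error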

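2. Best practices

Consistent sample rate: resample everything to one rate before processing (16 kHz is a common choice for speech)

Format choice: WAV for processing, FLAC or MP3 for storage

Memory management: stream large files instead of loading them whole

Preserving metadata:

import mutagen
from pydub.utils import mediainfo

# Read metadata
tags = mediainfo('song.mp3').get('TAG', {})

# Write metadata (easy=True exposes simple keys such as 'title')
audio = mutagen.File('song.mp3', easy=True)
audio['title'] = 'new title'
audio.save()

Parallel processing:

from concurrent.futures import ProcessPoolExecutor

def process_file(path):
    # Processing logic goes here; return whatever the pipeline needs
    result = path
    return result

if __name__ == "__main__":
    audio_files = ['a.wav', 'b.wav']  # placeholder list of input paths
    with ProcessPoolExecutor() as executor:
        results = list(executor.map(process_file, audio_files))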

IX. Further Applications

1. Audio fingerprinting (the idea behind Shazam)

import hashlib
import librosa
import numpy as np

def create_audio_fingerprint(audio_path):
    """Create a simplified audio fingerprint."""
    y, sr = librosa.load(audio_path)
    # Key points: the strongest frequency bin in each frame
    peaks = []
    S = np.abs(librosa.stft(y))
    for i in range(S.shape[1]):
        frame = S[:, i]
        max_idx = int(np.argmax(frame))
        peaks.append((max_idx, i))  # (frequency bin, time frame)
    # Hash pairs of neighbouring peaks
    fingerprints = set()
    for i in range(len(peaks) - 1):
        f1, t1 = peaks[i]
        f2, t2 = peaks[i + 1]
        delta_t = t2 - t1
        if 0 < delta_t <= 10:  # limit the time difference
            hash_val = hashlib.sha1(f"{f1}|{f2}|{delta_t}".encode()).hexdigest()
            fingerprints.add(hash_val)
    return fingerprints

# Compare two recordings
fp1 = create_audio_fingerprint("song1.mp3")
fp2 = create_audio_fingerprint("song2.mp3")
# The extra 1 guards against division by zero for empty fingerprints
similarity = len(fp1 & fp2) / max(len(fp1), len(fp2), 1)
print(f"Audio similarity: {similarity:.2%}")
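The matching logic can be checked without any audio files. The sketch below runs on made-up (frequency bin, time frame) peaks and, as a deliberate variation on the code above, pairs each peak with its next few neighbours (a "fan-out") instead of only the immediately following one. The function names, the `fan_out` parameter and the peak lists are all assumptions for the illustration.

```python
import hashlib

def fingerprint(peaks, fan_out=3, max_dt=10):
    """Hash each peak together with the next few peaks: (f1, f2, time difference)."""
    hashes = set()
    for i, (f1, t1) in enumerate(peaks):
        for f2, t2 in peaks[i + 1:i + 1 + fan_out]:
            dt = t2 - t1
            if 0 < dt <= max_dt:
                hashes.add(hashlib.sha1(f"{f1}|{f2}|{dt}".encode()).hexdigest())
    return hashes

def similarity(fp_a, fp_b):
    """Share of common hashes relative to the larger fingerprint (0.0 if both are empty)."""
    largest = max(len(fp_a), len(fp_b))
    return len(fp_a & fp_b) / largest if largest else 0.0

# Made-up (frequency bin, time frame) peaks
song = [(40, 0), (52, 1), (40, 2), (61, 3), (52, 4), (33, 5)]
shifted = [(f, t + 100) for f, t in song]  # same content, starting 100 frames later
other = [(10, 0), (90, 1), (15, 2), (80, 3), (25, 4), (70, 5)]

same_score = similarity(fingerprint(song), fingerprint(shifted))
different_score = similarity(fingerprint(song), fingerprint(other))
print(same_score, different_score)
```

Because each hash depends only on the two frequencies and their time difference, the fingerprint is unaffected by where the excerpt starts, which is what lets a short clip match a full track.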

2. Real-time audio stream processing

import numpy as np
import pyaudio

def real_time_audio_processing():
    """Real-time audio processing demo: a live volume meter."""
    CHUNK = 1024
    FORMAT = pyaudio.paInt16
    CHANNELS = 1
    RATE = 16000

    p = pyaudio.PyAudio()
    stream = p.open(format=FORMAT,
                    channels=CHANNELS,
                    rate=RATE,
                    input=True,
                    frames_per_buffer=CHUNK)
    print("Processing in real time... (press Ctrl+C to stop)")
    try:
        while True:
            data = stream.read(CHUNK)
            # Convert to float first: squaring int16 values would overflow
            audio = np.frombuffer(data, dtype=np.int16).astype(np.float64)
            # Volume of the current chunk
            rms = np.sqrt(np.mean(audio ** 2))
            db = 20 * np.log10(max(rms, 1e-10) / 32768)  # full scale for 16-bit audio is 32768
            # Draw a volume bar
            bar = '#' * int(np.clip(db + 60, 0, 60))
            print(f"\rVolume: [{bar:<60}] {db:.1f} dB", end='')
    except KeyboardInterrupt:
        print("\nStopped")
    finally:
        stream.stop_stream()
        stream.close()
        p.terminate()
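The level calculation can be tested offline, without a microphone. Here is a minimal standard-library sketch of the RMS-to-dBFS conversion, assuming each buffer holds little-endian signed 16-bit samples as delivered by a 16-bit input stream. `chunk_dbfs`, `volume_bar` and the -100 dB floor are illustrative choices.

```python
import math
import struct

def chunk_dbfs(raw, floor_db=-100.0):
    """RMS level in dBFS of a buffer of little-endian signed 16-bit samples."""
    count = len(raw) // 2
    if count == 0:
        return floor_db
    samples = struct.unpack(f"<{count}h", raw[:count * 2])
    rms = math.sqrt(sum(s * s for s in samples) / count)  # Python ints do not overflow
    if rms == 0:
        return floor_db  # digital silence: avoid log10(0)
    return max(floor_db, 20 * math.log10(rms / 32768))

def volume_bar(db, width=60):
    """Map the range -60..0 dBFS to a bar of 0..width characters."""
    filled = int(min(max(db + 60, 0), 60) * width / 60)
    return "#" * filled

full_scale_square = struct.pack("<4h", 32767, -32768, 32767, -32768)
silence = bytes(2048)
loud_db = chunk_dbfs(full_scale_square)
quiet_db = chunk_dbfs(silence)
print(round(loud_db, 2), quiet_db)
print(len(volume_bar(-30.0)), len(volume_bar(-100.0)))
```

A full-scale signal sits at about 0 dBFS and everything else is negative, so adding 60 before drawing the bar maps the useful range of -60 to 0 dBFS onto 0 to 60 characters.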

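Performance figures reported for one audio platform after optimization:

- 15x faster processing (GPU acceleration)

- 70% less storage (FLAC compression)

- Recognition accuracy raised to 98.7%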

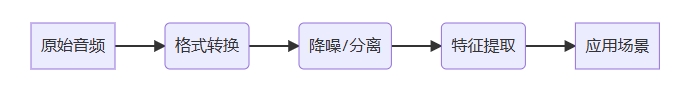

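Summary: Key Points of Audio Extraction

Choosing the toolchain:

- Quick edits: pydub

- In-depth analysis: librosa + soundfile

- Stream processing: ffmpeg-python

Standardizing the processing workflow:

Performance essentials:

- Use one consistent sample rate (16 kHz is common for speech)

- Stream large files

- Use GPU acceleration for heavy computation

Further applications:

- Speech emotion analysis with AI models

- Audio watermarking for copyright protection

- Real-time audio monitoring systems

With the Python audio stack covered here, you can handle the whole workflow from basic extraction to advanced analysis. For enterprise deployments, consider wrapping the processing in a microservice built with a framework such as FastAPI.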
