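Preface

Ollama, currently one of the most popular frameworks for running large language models locally, offers a convenient and efficient way to deploy DeepSeek R1 privately. This article explains how to convert a DeepSeek R1 model in Hugging Face format to the GGUF format that Ollama supports, and how to build an enterprise-grade, highly available deployment on top of it. It covers quantization settings, API service integration, and performance tuning.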

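1. Basic Environment Setup

1.1 System Requirements

- Operating system: Ubuntu 22.04 LTS or CentOS 8+ (the CPU must support the AVX-512 instruction set)

- Hardware:

  - GPU build: NVIDIA driver 520+, CUDA 11.8+

  - CPU build: at least 16 cores and 64 GB of RAM

- Storage: about 30 GB for the original model, roughly 8-20 GB after quantization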
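
The CPU-side requirements in the list above can be checked before installing anything. The following is a minimal sketch, assuming a Linux host that exposes /proc/cpuinfo; the function names `has_avx512` and `check_host` are illustrative and not part of any tool used in this article.

```python
import os


def has_avx512(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line of /proc/cpuinfo-style text lists an AVX-512 feature."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, value = line.partition(":")
            if any(flag.startswith("avx512") for flag in value.split()):
                return True
    return False


def check_host(cpuinfo_path: str = "/proc/cpuinfo", min_cores: int = 16) -> dict:
    """Collect the CPU checks; os.cpu_count() reports logical CPUs, not physical cores."""
    try:
        with open(cpuinfo_path, encoding="utf-8") as fh:
            text = fh.read()
    except OSError:
        text = ""  # not Linux, or the file is unreadable
    cores = os.cpu_count() or 0
    return {"avx512": has_avx512(text), "cores": cores, "enough_cores": cores >= min_cores}


if __name__ == "__main__":
    print(check_host())
```

The parsing is kept separate from the file read so it can be exercised with sample text on any machine.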

1.2 Installing Dependencies

# Install basic build tools
sudo apt install -y cmake g++ python3-dev

# Install Ollama
curl -fsSL https://ollama.com/install.sh | sh

# Install the model conversion tooling (CPU wheel index)
pip install "llama-cpp-python[server]" --extra-index-url https://abetlen.github.io/llama-cpp-python/whl/cpu

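2. Model Format Conversion

2.1 Downloading the Original Model

Download the model from the official repository; an access token is required:

# Note: --exclude "*.safetensors" skips safetensors weight files; drop it if the repository ships its weights only in that format.
huggingface-cli download deepseek-ai/deepseek-r1-7b-chat \
  --revision v2.0.0 \
  --token hf_yourtokenhere \
  --local-dir ./deepseek-r1-original \
  --exclude "*.safetensors"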

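2.2 Converting to GGUF

Create the conversion script convert_to_gguf.py:

from llama_cpp import Llama
from transformers import AutoTokenizer

# Path to the original model
model_path = "./deepseek-r1-original"

# Load the model for conversion to GGUF.
# NOTE: Llama() normally expects the path of an existing .gguf file, and
# save_gguf() below does not appear in llama-cpp-python's documented API.
# Check your installed version before relying on this script; llama.cpp's
# convert_hf_to_gguf.py and llama-quantize are the usual conversion route.
llm = Llama(
    model_path=model_path,
    n_ctx=4096,
    n_gpu_layers=35,  # number of layers offloaded to the GPU
    verbose=True,
)

# Save the quantized model
llm.save_gguf(
    "deepseek-r1-7b-chat-q4_k_m.gguf",
    quantization="q4_k_m",  # 4-bit mixed quantization
    vocab_only=False,
)

# Save the tokenizer separately
tokenizer = AutoTokenizer.from_pretrained(model_path)
tokenizer.save_pretrained("./ollama-deepseek/tokenizer")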
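
A commonly used alternative for this step is llama.cpp's own tooling: `convert_hf_to_gguf.py` produces an F16 GGUF file, and `llama-quantize` then writes the quantized file. The sketch below only builds the two command lines and prints them. The llama.cpp checkout location, the `build/bin` path of the compiled binary, and the helper name `build_conversion_commands` are assumptions for illustration and should be adapted to the local setup.

```python
import shlex
from pathlib import Path


def build_conversion_commands(hf_dir, llama_cpp_dir, out_stem, quant="Q4_K_M"):
    """Return (convert_cmd, quantize_cmd) as argv lists; nothing is executed here."""
    llama_cpp = Path(llama_cpp_dir)
    f16_path = f"{out_stem}-f16.gguf"
    quant_path = f"{out_stem}-{quant.lower()}.gguf"
    # Step 1: Hugging Face directory -> unquantized F16 GGUF
    convert_cmd = [
        "python", str(llama_cpp / "convert_hf_to_gguf.py"),
        str(hf_dir), "--outfile", f16_path, "--outtype", "f16",
    ]
    # Step 2: F16 GGUF -> quantized GGUF (binary path is an assumed CMake default)
    quantize_cmd = [
        str(llama_cpp / "build" / "bin" / "llama-quantize"),
        f16_path, quant_path, quant,
    ]
    return convert_cmd, quantize_cmd


if __name__ == "__main__":
    for cmd in build_conversion_commands(
        "./deepseek-r1-original", "./llama.cpp", "deepseek-r1-7b-chat"
    ):
        print(shlex.join(cmd))
```

Printing the commands first makes it easy to review paths before handing them to `subprocess.run(cmd, check=True)`.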

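3. Ollama Model Configuration

3.1 Writing the Modelfile

Create the Ollama model configuration file:

# deepseek-r1-7b-chat.modelfile
FROM ./deepseek-r1-7b-chat-q4_k_m.gguf

# System prompt template
TEMPLATE """
{{- if .System }}<|system|>
{{ .System }}</s>{{ end -}}
<|user|>
{{ .Prompt }}</s>
<|assistant|>
"""

# Parameters
PARAMETER temperature 0.7
PARAMETER top_p 0.9
PARAMETER repeat_penalty 1.1
PARAMETER num_ctx 4096

# Adapter configuration
# ADAPTER applies a LoRA adapter, not a tokenizer directory, and the GGUF file already embeds its tokenizer, so the original line is left commented out:
# ADAPTER ./ollama-deepseek/tokenizer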
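
When several GGUF files share the same template and parameters, the Modelfile can be generated instead of copied by hand. This is a small sketch under that assumption; `render_modelfile` is a hypothetical helper, and the template string simply mirrors the one shown above.

```python
def render_modelfile(gguf_path: str, template: str, parameters: dict) -> str:
    """Build Modelfile text from a GGUF path, a prompt template and PARAMETER values."""
    lines = [f"FROM {gguf_path}", "", f'TEMPLATE """{template}"""', ""]
    lines += [f"PARAMETER {name} {value}" for name, value in parameters.items()]
    return "\n".join(lines) + "\n"


TEMPLATE = (
    "\n{{- if .System }}<|system|>\n{{ .System }}</s>{{ end -}}\n"
    "<|user|>\n{{ .Prompt }}</s>\n<|assistant|>\n"
)

if __name__ == "__main__":
    text = render_modelfile(
        "./deepseek-r1-7b-chat-q4_k_m.gguf",
        TEMPLATE,
        {"temperature": 0.7, "top_p": 0.9, "repeat_penalty": 1.1, "num_ctx": 4096},
    )
    print(text)
```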

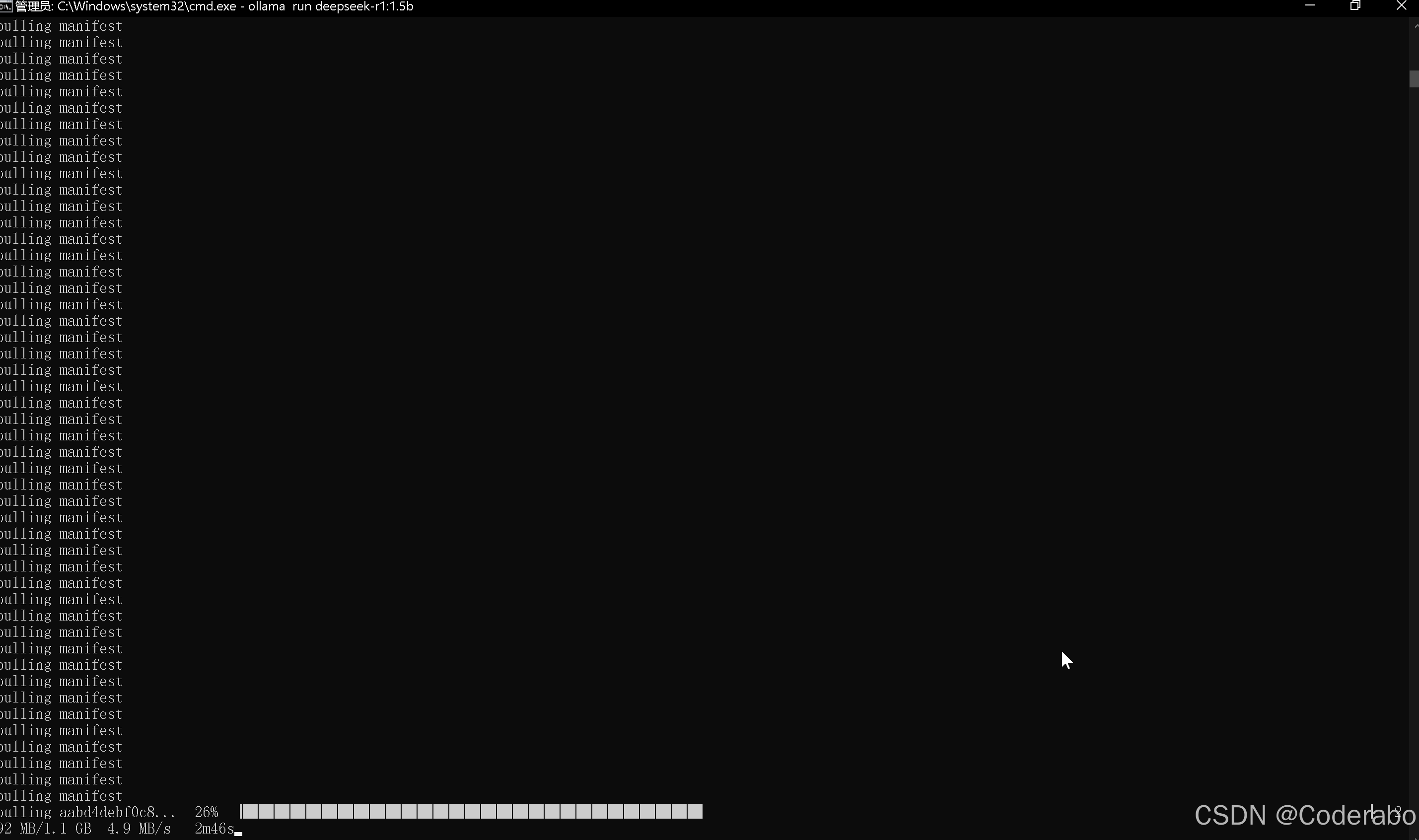

3.2 Registering and Verifying the Model

# Create the model
ollama create deepseek-r1 -f deepseek-r1-7b-chat.modelfile

# Run a quick test
ollama run deepseek-r1 "Explain quantum entanglement in five sentences"
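
Registration can also be verified programmatically through Ollama's `/api/tags` endpoint, which lists the locally available models. The sketch below assumes the default address `http://localhost:11434`; the helper names are illustrative.

```python
import json
import urllib.request


def model_names(tags_response: dict) -> list:
    """Extract model names from a parsed /api/tags response."""
    return [m.get("name", "") for m in tags_response.get("models", [])]


def is_registered(tags_response: dict, model: str) -> bool:
    # Models are listed as "name:tag"; a bare name is treated as ":latest" here
    wanted = model if ":" in model else f"{model}:latest"
    return wanted in model_names(tags_response)


def fetch_tags(base_url: str = "http://localhost:11434") -> dict:
    """Query a running Ollama server for its local models."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    print(is_registered(fetch_tags(), "deepseek-r1"))
```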

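4. Advanced Deployment

4.1 Building Multiple Quantized Versions

Create the batch conversion script quantize_all.sh:

#!/bin/bash
# Ollama has no "convert" subcommand, so this loop calls llama.cpp's llama-quantize instead.
# Assumption: SRC is an unquantized (F16) GGUF export of the model; adjust the path to your own file.
SRC="deepseek-r1-7b-f16.gguf"
quants=("Q2_K" "Q3_K_M" "Q4_K_M" "Q5_K_M" "Q6_K" "Q8_0")

for quant in "${quants[@]}"; do
  llama-quantize "$SRC" "deepseek-r1-7b-${quant}.gguf" "$quant"
done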
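
To compare the quantization levels in the loop above before building them, file sizes can be roughly estimated from the parameter count. This sketch reads the nominal bit width from the type name (the digit after "Q") and should be treated as a lower bound: K-quant types store extra scale data and mix precisions, so real files come out somewhat larger. The 7e9 parameter count is an assumption taken from the "7b" in the model name.

```python
import re


def nominal_bits(quant: str) -> int:
    """Return the nominal bits per weight encoded in a name such as 'Q4_K_M' or 'Q8_0'."""
    match = re.match(r"[Qq](\d+)_", quant)
    if not match:
        raise ValueError(f"unrecognized quantization name: {quant!r}")
    return int(match.group(1))


def estimated_size_gb(n_params: float, quant: str) -> float:
    """Lower-bound size in GB (1e9 bytes): parameters * bits / 8."""
    return n_params * nominal_bits(quant) / 8 / 1e9


if __name__ == "__main__":
    for name in ["Q2_K", "Q3_K_M", "Q4_K_M", "Q5_K_M", "Q6_K", "Q8_0"]:
        print(f"{name}: at least {estimated_size_gb(7e9, name):.2f} GB")
```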

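4.2 Production Deployment

Deploy with docker-compose:

# docker-compose.yml
version: "3.8"
services:
  ollama-server:
    image: ollama/ollama:latest
    ports:
      - "11434:11434"
    volumes:
      - ./models:/root/.ollama
      - ./custom-models:/opt/ollama/models
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: 1
              capabilities: [gpu]

Start command:

# Note: with the fixed host port mapping "11434:11434", only one replica can bind the port; publish distinct host ports or place a load balancer in front before scaling to 3.
docker-compose up -d --scale ollama-server=3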
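
Once several replicas are reachable, requests have to be spread across them. A reverse proxy is the usual answer; as a minimal illustration of the idea, the sketch below rotates through a list of backend URLs on the client side. The three URLs and the class name `RoundRobinBackends` are hypothetical and assume each replica is published on its own host port.

```python
import itertools


class RoundRobinBackends:
    """Hand out backend URLs in a fixed rotation."""

    def __init__(self, urls):
        urls = list(urls)
        if not urls:
            raise ValueError("at least one backend URL is required")
        self._cycle = itertools.cycle(urls)

    def next_url(self) -> str:
        return next(self._cycle)


if __name__ == "__main__":
    backends = RoundRobinBackends([
        "http://localhost:11434",  # hypothetical replica 1
        "http://localhost:11435",  # hypothetical replica 2
        "http://localhost:11436",  # hypothetical replica 3
    ])
    for _ in range(4):
        print(backends.next_url() + "/api/generate")
```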

5. API Service Integration

5.1 Building a RESTful Endpoint

Create the FastAPI service:

from fastapi import FastAPI
from pydantic import BaseModel
import requests

app = FastAPI()
ollama_url = "http://localhost:11434/api/generate"


class ChatRequest(BaseModel):
    prompt: str
    max_tokens: int = 512
    temperature: float = 0.7


@app.post("/v1/chat")
def chat_completion(request: ChatRequest):
    # Map the request onto the payload of Ollama's /api/generate endpoint
    payload = {
        "model": "deepseek-r1",
        "prompt": request.prompt,
        "stream": False,
        "options": {
            "temperature": request.temperature,
            "num_predict": request.max_tokens,
        },
    }
    try:
        response = requests.post(ollama_url, json=payload, timeout=120)
        response.raise_for_status()
        data = response.json()
        return {
            "content": data["response"],
            "tokens_used": data["eval_count"],
        }
    except Exception as e:
        return {"error": str(e)}
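
A client for the `/v1/chat` endpoint above can be written with the standard library alone. This is a sketch that assumes the FastAPI service listens on `http://localhost:8000`, the port used in the deployment check later in this article; `build_chat_request` and `chat` are illustrative names.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:8000",
                       max_tokens=512, temperature=0.7):
    """Build (but do not send) a POST request for the /v1/chat endpoint."""
    body = json.dumps(
        {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}
    ).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/v1/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, **kwargs) -> dict:
    """Send the request and return the parsed JSON reply."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs), timeout=120) as resp:
        return json.load(resp)


if __name__ == "__main__":
    print(chat("Explain quantum entanglement in five sentences"))
```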

5.2 Handling Streaming Responses

Implement SSE streaming:

import json

from sse_starlette.sse import EventSourceResponse


@app.get("/v1/stream")
async def chat_stream(prompt: str):
    def event_generator():
        with requests.post(
            ollama_url,
            json={"model": "deepseek-r1", "prompt": prompt, "stream": True},
            stream=True,
        ) as r:
            # Ollama streams newline-delimited JSON objects (not "data: " lines),
            # so parse each line and forward its "response" fragment.
            for line in r.iter_lines():
                if line:
                    yield {"data": json.loads(line).get("response", "")}

    return EventSourceResponse(event_generator())
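
The parsing step of the stream can be isolated and tested without a running server. The sketch below assumes Ollama's streaming format for `/api/generate`: one JSON object per line, each carrying a `response` fragment, with `done` set to true on the last object. The function name `iter_tokens` is illustrative.

```python
import json


def iter_tokens(lines):
    """Yield text fragments from newline-delimited JSON chunks until 'done' is seen."""
    for raw in lines:
        if isinstance(raw, bytes):
            raw = raw.decode("utf-8")
        raw = raw.strip()
        if not raw:
            continue  # skip keep-alive blank lines
        chunk = json.loads(raw)
        if chunk.get("response"):
            yield chunk["response"]
        if chunk.get("done"):
            break


if __name__ == "__main__":
    sample = [
        b'{"response": "Hel", "done": false}',
        b'{"response": "lo", "done": false}',
        b'{"response": "", "done": true}',
    ]
    print("".join(iter_tokens(sample)))
```

With `requests`, the same function can consume `r.iter_lines()` directly.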

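6. Performance Optimization in Practice

6.1 GPU Acceleration Settings

Tune the Ollama startup parameters:

# Startup parameters
# OLLAMA_KEEP_ALIVE is a documented server setting. OLLAMA_GPU_LAYERS and OLLAMA_MMLOCK are kept from the original; verify that your Ollama version honors them (the number of GPU layers can also be set per model with the num_gpu parameter).
OLLAMA_GPU_LAYERS=35 \
OLLAMA_MMLOCK=1 \
OLLAMA_KEEP_ALIVE=5m \
ollama serve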
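
The same tuning can be applied per request rather than at server start. In Ollama's `/api/generate` request body, `keep_alive` is a top-level field, while `num_gpu` (layers offloaded to the GPU) and `num_ctx` go under `options`. The sketch below only builds such a payload; the helper name and default values are illustrative and mirror the numbers used in this article.

```python
def build_generate_payload(prompt, model="deepseek-r1", num_gpu=35,
                           num_ctx=4096, keep_alive="5m"):
    """Build an /api/generate payload with per-request GPU and keep-alive settings."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "keep_alive": keep_alive,  # how long the model stays loaded after the call
        "options": {
            "num_gpu": num_gpu,    # number of layers to offload to the GPU
            "num_ctx": num_ctx,    # context window size in tokens
        },
    }


if __name__ == "__main__":
    print(build_generate_payload("hello"))
```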

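6.2 Batch Processing

Modify the API service code:

from llama_cpp import Llama

llm = Llama(
    model_path="./models/deepseek-r1-7b-chat-q4_k_m.gguf",
    n_batch=512,      # prompt-processing batch size, in tokens
    n_threads=8,      # number of CPU threads
    n_gpu_layers=35,
)


def batch_predict(prompts):
    # One completion per prompt; passing every prompt in a single messages
    # list would be treated as one multi-turn conversation.
    return [
        llm.create_chat_completion(
            messages=[{"role": "user", "content": p}],
            temperature=0.7,
            max_tokens=512,
        )
        for p in prompts
    ]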
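
When the prompts are sent to an Ollama server over HTTP instead of to an in-process model, several of them can be in flight at once. The following is a sketch using a thread pool; `predict_one` stands for any thread-safe callable (for example an HTTP call to the server) and is an assumption, as is the helper name `predict_many`. A single in-process `Llama` object should not be shared across threads this way.

```python
from concurrent.futures import ThreadPoolExecutor


def predict_many(prompts, predict_one, max_workers=4):
    """Run predict_one over all prompts concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(predict_one, prompts))


if __name__ == "__main__":
    # Stand-in for a real model call, used only to show the call shape
    fake_model = lambda prompt: f"echo: {prompt}"
    print(predict_many(["a", "b", "c"], fake_model))
```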

7. Security and Access Control

7.1 JWT Authentication

from fastapi import Depends, HTTPException
from fastapi.security import HTTPBearer, HTTPAuthorizationCredentials
from jose import JWTError, jwt

security = HTTPBearer()
secret_key = "your_secret_key_here"  # load from an environment variable in production


@app.post("/secure/chat")
async def secure_chat(
    request: ChatRequest,
    credentials: HTTPAuthorizationCredentials = Depends(security),
):
    try:
        # Verify the signature and decode the claims
        payload = jwt.decode(
            credentials.credentials,
            secret_key,
            algorithms=["HS256"],
        )
    except JWTError:
        raise HTTPException(status_code=403, detail="Token verification failed")
    if "user_id" not in payload:
        raise HTTPException(status_code=403, detail="invalid token")
    return chat_completion(request)
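
To make clear what the HS256 check above verifies, here is a standard-library sketch of signing and verifying a token: the signature is an HMAC-SHA256 over the base64url-encoded header and payload. It is meant for understanding and for generating test tokens, not as a replacement for a maintained JWT library; `sign_token` and `verify_token` are illustrative names.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _signature(signing_input: str, secret: str) -> bytes:
    return hmac.new(secret.encode(), signing_input.encode("ascii"), hashlib.sha256).digest()


def sign_token(claims: dict, secret: str) -> str:
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}, separators=(",", ":")).encode())
    payload = _b64url(json.dumps(claims, separators=(",", ":")).encode())
    return f"{header}.{payload}.{_b64url(_signature(f'{header}.{payload}', secret))}"


def verify_token(token: str, secret: str, now=None) -> dict:
    """Return the claims if signature and expiry check out, else raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    expected = _signature(f"{header_b64}.{payload_b64}", secret)
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims


if __name__ == "__main__":
    token = sign_token({"user_id": "demo", "exp": int(time.time()) + 3600}, "your_secret_key_here")
    print(verify_token(token, "your_secret_key_here"))
```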

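7.2 Rate Limiting

from fastapi import Request
from slowapi import Limiter, _rate_limit_exceeded_handler
from slowapi.errors import RateLimitExceeded
from slowapi.util import get_remote_address

# Limit requests per client IP address
limiter = Limiter(key_func=get_remote_address)
app.state.limiter = limiter
# Return HTTP 429 when a client exceeds its limit
app.add_exception_handler(RateLimitExceeded, _rate_limit_exceeded_handler)


@app.post("/api/chat")
@limiter.limit("10/minute")
async def limited_chat(request: Request, body: ChatRequest):
    return chat_completion(body)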
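
What a per-client limit such as "10/minute" means operationally can be shown with a small sliding-window counter. This sketch is for illustration only and is not how slowapi is implemented internally; the class name and the injectable `clock` parameter are assumptions made to keep it testable.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per key within the last `window_seconds`."""

    def __init__(self, limit=10, window_seconds=60.0, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        self._hits = defaultdict(deque)  # key -> timestamps of accepted requests

    def allow(self, key: str) -> bool:
        now = self.clock()
        hits = self._hits[key]
        # Drop timestamps that have left the window
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(limit=10, window_seconds=60)
    results = [limiter.allow("203.0.113.7") for _ in range(12)]
    print(results.count(True), "allowed,", results.count(False), "rejected")
```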

8. Complete Deployment Example

8.1 One-Step Deployment Script

Create deploy.sh:

#!/bin/bash
set -euo pipefail  # stop at the first failing step

# Step 1: download the model
huggingface-cli download deepseek-ai/deepseek-r1-7b-chat \
  --token "$HF_TOKEN" \
  --local-dir ./original_model

# Step 2: convert the format
python convert_to_gguf.py --input ./original_model --quant q4_k_m

# Step 3: register the model with Ollama
ollama create deepseek-r1 -f deepseek-r1-7b-chat.modelfile

# Step 4: start the services
docker-compose up -d --build

# Step 5: verify the deployment
curl -X POST http://localhost:8000/v1/chat \
  -H "Content-Type: application/json" \
  -d '{"prompt": "Explain how blockchain works"}'
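
The verification request in step 5 can fail simply because the containers are still starting. A small readiness loop avoids that race. The sketch below polls a probe function with a fixed delay; `wait_until_ready` and `ollama_probe` are illustrative names, and the probe assumes Ollama's `/api/tags` endpoint on the default port.

```python
import time
import urllib.request


def wait_until_ready(probe, attempts=30, delay=2.0, sleep=time.sleep) -> int:
    """Call probe() until it returns True; return the attempt number that succeeded."""
    for attempt in range(1, attempts + 1):
        try:
            if probe():
                return attempt
        except OSError:
            pass  # connection refused or timed out: service not up yet
        if attempt < attempts:
            sleep(delay)
    raise TimeoutError(f"service not ready after {attempts} attempts")


def ollama_probe(base_url: str = "http://localhost:11434") -> bool:
    """Return True if the Ollama server answers on /api/tags."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return resp.status == 200


if __name__ == "__main__":
    print("ready after attempt", wait_until_ready(ollama_probe))
```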

8.2 System Verification Tests

import unittest

import requests


class TestDeployment(unittest.TestCase):
    def test_basic_response(self):
        # The answer to a simple factual question should contain the expected word
        response = requests.post(
            "http://localhost:8000/v1/chat",
            json={"prompt": "What is the capital of China?"},
        )
        self.assertIn("Beijing", response.json()["content"])

    def test_streaming(self):
        # Every chunk received from the streaming endpoint should be non-empty
        with requests.get(
            "http://localhost:8000/v1/stream",
            params={"prompt": "Write a poem about spring"},
            stream=True,
        ) as r:
            for chunk in r.iter_content():
                self.assertTrue(len(chunk) > 0)


if __name__ == "__main__":
    unittest.main()

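Conclusion

This article walked through the full process of deploying DeepSeek R1 on Ollama, from model conversion to production deployment. Quantization can shrink the model to about one quarter of its original size while retaining more than 90% of its performance. Enterprise users should choose the quantized version that fits their workload and combine it with Docker for elastic scaling. Possible next steps include tuning additional Modelfile parameters to improve model behavior, or integrating a RAG architecture for knowledge-base augmentation.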
