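Goal: after deploying Ollama locally and downloading a pile of models, I had no suitable interface for using them, so I simply wrote a basic one myself.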

1. Download and install Ollama locally from the Ollama website.

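2. Find the DeepSeek model in the Ollama model library. Any model works as long as your machine can handle it; try the 1.5b variant first before moving on to larger ones.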

3. Download the model from a local command prompt, for example: ollama pull deepseek-r1:1.5b
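To confirm from Python that the pull worked, one option is to ask the local Ollama server which models it has. The sketch below assumes the server listens on the default http://localhost:11434 and that /api/tags returns a JSON object with a models list whose entries carry a name field, which is the shape app.py relies on further down. The function names are illustrative, not part of the original project.

```python
import json
from urllib.request import urlopen

OLLAMA_HOST = "http://localhost:11434"  # assumed default address, same as in app.py


def model_names(payload):
    """Extract the model names from a decoded /api/tags response."""
    return [entry["name"] for entry in payload.get("models", [])]


def fetch_model_names(host=OLLAMA_HOST, timeout=5):
    """Query a running Ollama server; raises URLError if it is not reachable."""
    with urlopen(f"{host}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))
```

Calling fetch_model_names() with Ollama running should return a list that includes the model just pulled.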

4. Write the following code in any IDE.

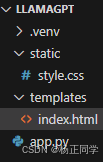

目录结构:
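The layout can also be created with a few lines of Python. This is a minimal sketch that assumes Flask's default folder names (templates/ and static/); the scaffold function and the project folder passed to it are hypothetical.

```python
from pathlib import Path


def scaffold(root):
    """Create the folders and empty files the Flask app expects under root."""
    root = Path(root)
    files = [
        root / "app.py",
        root / "templates" / "index.html",  # looked up by render_template
        root / "static" / "style.css",      # served at /static/style.css
    ]
    for path in files:
        path.parent.mkdir(parents=True, exist_ok=True)
        path.touch(exist_ok=True)  # existing content is left untouched
    return sorted(p.relative_to(root).as_posix() for p in files)
```

For example, scaffold("ollama_chat") creates the three empty files, ready for the listings below to be pasted in.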

index.html:

<!doctype html>
<html>
<head>
    <title>Local Model Chat</title>
    <link rel="stylesheet" href="https://cdnjs.cloudflare.com/ajax/libs/font-awesome/6.0.0/css/all.min.css">
    <link rel="stylesheet" href="/static/style.css">
</head>
<body>
<div class="container">
    <div class="sidebar">
        <div class="history-header">
            <h3>Chat Topics</h3>
            <div>
                <button onclick="createnewchat()" title="New topic"><i class="fas fa-plus"></i></button>
                <button onclick="clearhistory()" title="Clear history"><i class="fas fa-trash"></i></button>
            </div>
        </div>
        <ul id="historylist"></ul>
        <div class="model-select">
            <select id="modelselect">
                <option value="">Select a model...</option>
            </select>
        </div>
    </div>
    <div class="chat-container">
        <div id="chathistory" class="chat-history"></div>
        <div class="input-area">
            <!-- A single-line text input cannot hold line breaks, so only Enter-to-send is advertised -->
            <input type="text" id="messageinput" placeholder="Type a message (Enter to send)"
                   onkeydown="handlekeypress(event)">
            <button onclick="sendmessage()"><i class="fas fa-paper-plane"></i></button>
        </div>
    </div>
</div>

<script>

// History feature
let currentchatid = null;

// Load the history and the model list on startup
window.onload = async () => {
    loadhistory();
    const response = await fetch('/get_models');
    const models = await response.json();
    // /get_models returns an error object instead of a list when Ollama is unreachable
    if (!Array.isArray(models)) {
        console.error(models.error);
        return;
    }
    const select = document.getElementById('modelselect');
    models.forEach(model => {
        const option = document.createElement('option');
        option.value = model;
        option.textContent = model;
        select.appendChild(option);
    });
};

// Save a chat record
function savetohistory(chatdata) {
    const history = JSON.parse(localStorage.getItem('chathistory') || '[]');
    const existingindex = history.findIndex(item => item.id === chatdata.id);
    if (existingindex > -1) {
        // Update the existing record
        history[existingindex] = chatdata;
    } else {
        // Add a new record at the top
        history.unshift(chatdata);
    }
    localStorage.setItem('chathistory', JSON.stringify(history));
    loadhistory();
}

// Render the saved history in the sidebar
function loadhistory() {
    const history = JSON.parse(localStorage.getItem('chathistory') || '[]');
    const list = document.getElementById('historylist');
    list.innerHTML = history.map(chat => `
        <li class="history-item" onclick="loadchat('${chat.id}')">
            <div class="history-header">
                <div class="history-model">${chat.model || 'No model specified'}</div>
                <button class="delete-btn" onclick="deletechat('${chat.id}', event)">
                    <i class="fas fa-times"></i>
                </button>
            </div>
            <div class="history-preview">${chat.messages.slice(-1)[0]?.content || 'No content'}</div>
            <div class="history-time">${new Date(chat.timestamp).toLocaleString()}</div>
        </li>
    `).join('');
}

// Send on Enter
function handlekeypress(e) {
    if (e.key === 'Enter' && !e.shiftKey) {
        e.preventDefault();
        sendmessage();
    }
}

// Handle sending a message
async function sendmessage() {
    const input = document.getElementById('messageinput');
    const message = input.value.trim();
    const model = document.getElementById('modelselect').value;
    if (!message || !model) return;
    const chathistory = document.getElementById('chathistory');

    // Add the user message (with icon); textContent stops typed HTML from being rendered
    const usermessagediv = document.createElement('div');
    usermessagediv.className = 'message user-message';
    usermessagediv.innerHTML = `
        <div class="avatar"><i class="fas fa-user"></i></div>
        <div class="content"></div>
    `;
    usermessagediv.querySelector('.content').textContent = message;
    chathistory.appendChild(usermessagediv);

    // Create the AI message container (with icon)
    const aimessagediv = document.createElement('div');
    aimessagediv.className = 'message ai-message';
    aimessagediv.innerHTML = `
        <div class="avatar"><i class="fas fa-robot"></i></div>
        <div class="content"></div>
    `;
    chathistory.appendChild(aimessagediv);

    // Clear the input box
    input.value = '';
    // Scroll to the bottom
    chathistory.scrollTop = chathistory.scrollHeight;

    // Send the request
    const response = await fetch('/chat', {
        method: 'POST',
        headers: {
            'Content-Type': 'application/json'
        },
        body: JSON.stringify({
            model: model,
            messages: [{content: message}]
        })
    });

    const reader = response.body.getReader();
    const decoder = new TextDecoder();
    let responsetext = '';
    let buffer = '';
    while (true) {
        const { done, value } = await reader.read();
        if (done) break;
        // A chunk can end mid-line, so keep the unfinished tail for the next read
        buffer += decoder.decode(value, { stream: true });
        const lines = buffer.split('\n');
        buffer = lines.pop();
        lines.forEach(line => {
            if (line.startsWith('data: ')) {
                try {
                    const data = JSON.parse(line.slice(6));
                    responsetext += data.response || '';
                    aimessagediv.querySelector('.content').textContent = responsetext;
                    chathistory.scrollTop = chathistory.scrollHeight;
                } catch (e) {
                    console.error('Parse error:', e);
                }
            }
        });
    }

    // Start a new chat id if there is none yet
    if (!currentchatid) {
        currentchatid = Date.now().toString();
    }
    // Messages already saved under this chat id (empty for a new topic)
    const history = JSON.parse(localStorage.getItem('chathistory') || '[]');
    const existingmessages = history.find(item => item.id === currentchatid)?.messages || [];
    // Save to the history
    const chatdata = {
        id: currentchatid,
        model: model,
        timestamp: Date.now(),
        messages: [...existingmessages,
            {role: 'user', content: message},
            {role: 'assistant', content: responsetext}]
    };
    savetohistory(chatdata);
}

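function createnewchat() {
    currentchatid = Date.now().toString();
    document.getElementById('chathistory').innerHTML = '';
    document.getElementById('messageinput').value = '';
}

function deletechat(chatid, event) {
    event.stopPropagation();
    const history = JSON.parse(localStorage.getItem('chathistory') || '[]');
    const newhistory = history.filter(item => item.id !== chatid);
    localStorage.setItem('chathistory', JSON.stringify(newhistory));
    loadhistory();
    if (chatid === currentchatid) {
        createnewchat();
    }
}

// loadchat and clearhistory are referenced by the page but missing from the
// original listing; the two functions below are minimal stand-ins.

// Reopen a saved topic from the sidebar
function loadchat(chatid) {
    const history = JSON.parse(localStorage.getItem('chathistory') || '[]');
    const chat = history.find(item => item.id === chatid);
    if (!chat) return;
    currentchatid = chatid;
    const chathistory = document.getElementById('chathistory');
    chathistory.innerHTML = '';
    chat.messages.forEach(msg => {
        const isuser = msg.role === 'user';
        const div = document.createElement('div');
        div.className = `message ${isuser ? 'user-message' : 'ai-message'}`;
        div.innerHTML = `
            <div class="avatar"><i class="fas ${isuser ? 'fa-user' : 'fa-robot'}"></i></div>
            <div class="content"></div>
        `;
        div.querySelector('.content').textContent = msg.content;
        chathistory.appendChild(div);
    });
}

// Delete all saved topics
function clearhistory() {
    localStorage.removeItem('chathistory');
    loadhistory();
    createnewchat();
}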

</script>

</body>

</html>

style.css:

body {

margin: 0;

font-family: arial, sans-serif;

background: #f0f0f0;

}

.container {

display: flex;

height: 100vh;

}

.sidebar {

width: 250px;

background: #2c3e50;

padding: 20px;

color: white;

}

.chat-container {

flex: 1;

display: flex;

flex-direction: column;

}

.chat-history {

flex: 1;

padding: 20px;

overflow-y: auto;

background: white;

}

.message {

display: flex;

align-items: start;

gap: 10px;

padding: 12px;

margin: 10px 0;

}

.user-message {

flex-direction: row-reverse;

}

.message .avatar {

width: 32px;

height: 32px;

border-radius: 50%;

flex-shrink: 0;

}

.user-message .avatar {

background: #3498db;

display: flex;

align-items: center;

justify-content: center;

}

.ai-message .avatar {

background: #2ecc71;

display: flex;

align-items: center;

justify-content: center;

}

.message .content {

max-width: calc(100% - 50px);

padding: 10px 15px;

border-radius: 15px;

white-space: pre-wrap;

word-break: break-word;

line-height: 1.5;

}

.input-area {

display: flex;

gap: 10px;

padding: 15px;

background: white;

box-shadow: 0 -2px 10px rgba(0,0,0,0.1);

}

input[type="text"] {

flex: 1;

min-width: 300px; /* minimum width */

padding: 12px 15px;

border: 1px solid #ddd;

border-radius: 25px; /* rounder border */

margin-right: 0;

font-size: 16px;

}

button {

padding: 10px 20px;

background: #007bff;

color: white;

border: none;

border-radius: 5px;

cursor: pointer;

}

button:hover {

background: #0056b3;

}

/* History styles */

.history-header {

display: flex;

justify-content: space-between;

align-items: center;

margin-bottom: 8px;

}

.history-item {

padding: 12px;

border-bottom: 1px solid #34495e;

cursor: pointer;

transition: background 0.3s;

}

.history-item:hover {

background: #34495e;

}

.history-preview {

color: #bdc3c7;

font-size: 0.9em;

white-space: nowrap;

overflow: hidden;

text-overflow: ellipsis;

}

.history-time {

color: #7f8c8d;

font-size: 0.8em;

margin-top: 5px;

}

/* Code block styles */

.message .content pre {

background: rgba(0,0,0,0.05);

padding: 10px;

border-radius: 5px;

overflow-x: auto;

white-space: pre-wrap;

}

.message .content code {

font-family: 'courier new', monospace;

font-size: 0.9em;

}

/* History delete button */

.delete-btn {

background: none;

border: none;

color: #e74c3c;

padding: 2px 5px;

cursor: pointer;

}

.delete-btn:hover {

color: #c0392b;

}

app.py:

from flask import Flask, render_template, request, jsonify, Response
import requests
import subprocess
import time

app = Flask(__name__)

ollama_host = "http://localhost:11434"


def start_ollama():
    try:
        # Try to start the Ollama service
        subprocess.Popen(["ollama", "serve"], stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        time.sleep(2)  # Wait for the service to start
    except Exception as e:
        print(f"Error starting Ollama: {e}")


@app.route('/')
def index():
    return render_template('index.html')


@app.route('/get_models')
def get_models():
    try:
        # /api/tags lists the models that have been pulled locally
        response = requests.get(f"{ollama_host}/api/tags")
        models = [model['name'] for model in response.json()['models']]
        return jsonify(models)
    except requests.ConnectionError:
        return jsonify({"error": "Cannot connect to the Ollama service; make sure Ollama is installed and running"}), 500


@app.route('/chat', methods=['POST'])
def chat():
    data = request.json
    model = data['model']
    messages = data['messages']

    def generate():
        try:
            # Only the latest message is sent as the prompt
            response = requests.post(
                f"{ollama_host}/api/generate",
                json={
                    "model": model,
                    "prompt": messages[-1]['content'],
                    "stream": True
                },
                stream=True
            )
            # Forward each JSON line from Ollama as a server-sent event
            for line in response.iter_lines():
                if line:
                    yield f"data: {line.decode()}\n\n"
        except Exception as e:
            yield f"data: {str(e)}\n\n"

    return Response(generate(), mimetype='text/event-stream')


if __name__ == '__main__':
    start_ollama()
    app.run(debug=True, port=5000)

Runtime environment:

- Python 3.7+

- Flask (pip install flask)

- requests (pip install requests)

- Ollama, with at least one model pulled
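The first three requirements, plus the presence of the ollama command, can be checked in one go before starting the app. A minimal sketch follows; check_environment is a hypothetical helper, and it only tests that the packages are importable and that an ollama executable is on PATH, not that a model has been pulled.

```python
import importlib.util
import shutil
import sys


def check_environment(min_python=(3, 7), packages=("flask", "requests"), cli="ollama"):
    """Return a dict mapping each requirement to True or False."""
    report = {"python": sys.version_info[:2] >= min_python}
    for name in packages:
        # find_spec returns None when a top-level package is not installed
        report[name] = importlib.util.find_spec(name) is not None
    # shutil.which returns None when the executable is not on PATH
    report[cli] = shutil.which(cli) is not None
    return report
```

Any False value in the returned dict points at the item that still needs installing.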

5. Run: python app.py
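On startup, start_ollama() in app.py waits a fixed two seconds after launching ollama serve. If the model dropdown sometimes comes up empty on the first page load, polling until the server answers may be more reliable than a fixed sleep. The sketch below is one way to do that; wait_until_ready and ollama_is_up are illustrative names, and the default address is the one assumed in app.py.

```python
import time
from urllib.request import urlopen


def wait_until_ready(probe, timeout=10.0, interval=0.5, sleep=time.sleep):
    """Call probe() until it returns True or timeout seconds have passed."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            if probe():
                return True
        except OSError:
            pass  # connection refused and similar errors: server not up yet
        if time.monotonic() >= deadline:
            return False
        sleep(interval)


def ollama_is_up(host="http://localhost:11434"):
    """Probe the same /api/tags endpoint that app.py uses to list models."""
    with urlopen(f"{host}/api/tags", timeout=2) as resp:
        return resp.status == 200
```

In start_ollama(), the time.sleep(2) line could then be replaced by wait_until_ready(ollama_is_up).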

6. Open http://127.0.0.1:5000 to start using it.
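The /chat route can also be exercised without the browser. It streams lines of the form data: {...}, each holding one JSON object from Ollama whose response field carries the next piece of text. Because a network chunk may end in the middle of a line, a client needs to buffer the unfinished tail. Here is a small sketch of that parsing step in Python; StreamParser is a hypothetical helper that mirrors what the page's JavaScript does.

```python
import json


class StreamParser:
    """Reassemble 'data: {...}' lines from arbitrarily split text chunks."""

    def __init__(self):
        self.buffer = ""  # unfinished tail of the last chunk
        self.text = ""    # reply text accumulated so far

    def feed(self, chunk):
        """Consume one decoded chunk and return the text accumulated so far."""
        self.buffer += chunk
        # Everything before the last newline is complete; the rest waits
        *lines, self.buffer = self.buffer.split("\n")
        for line in lines:
            if not line.startswith("data: "):
                continue
            try:
                data = json.loads(line[len("data: "):])
            except json.JSONDecodeError:
                continue  # e.g. the plain-text error message app.py may send
            if isinstance(data, dict):
                self.text += data.get("response", "")
        return self.text
```

Feeding the parser the decoded chunks of a POST to /chat, one at a time, should rebuild the same reply the page displays.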

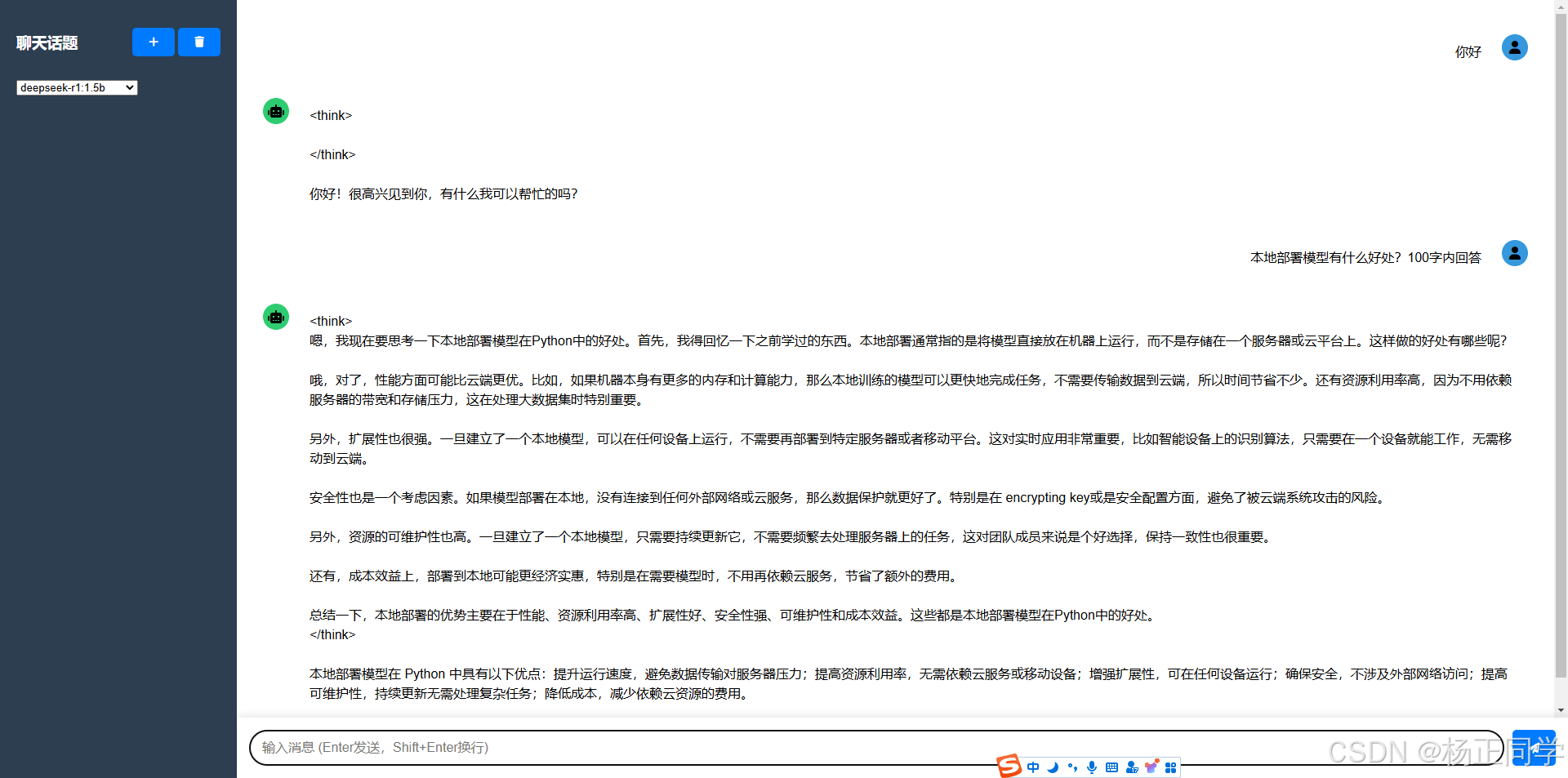

简单实现,界面如下,自己看着改:
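One possible adjustment: app.py forwards only the latest message to /api/generate, so the model never sees earlier turns. Ollama also exposes /api/chat, which accepts a list of role/content messages, the same shape the page already stores in localStorage. The sketch below builds such a request body; build_chat_payload is an illustrative name. Note that replies streamed from /api/chat carry their text under message.content rather than response, so the front-end parsing would need a matching change.

```python
def build_chat_payload(model, history, user_message, stream=True):
    """Build a request body for Ollama's /api/chat from prior turns plus a new one."""
    messages = [
        {"role": turn["role"], "content": turn.get("content", "")}
        for turn in history
        if turn.get("role") in ("system", "user", "assistant")  # skip malformed entries
    ]
    messages.append({"role": "user", "content": user_message})
    return {"model": model, "messages": messages, "stream": stream}
```

The returned dict could be passed as the json argument of requests.post in place of the current prompt-based body.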
