1. Deploy the DeepSeek model on a Linux server

To install and run a model on Linux with Ollama, follow these steps:

Step 1: Install Ollama

Install Ollama with the following command:

```shell
curl -fsSL https://ollama.com/install.sh | sh
```

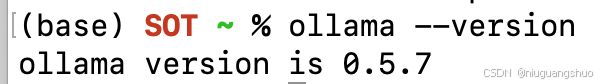

Verify the installation:

When the installation finishes, check that Ollama was installed successfully:

```shell
ollama --version
```

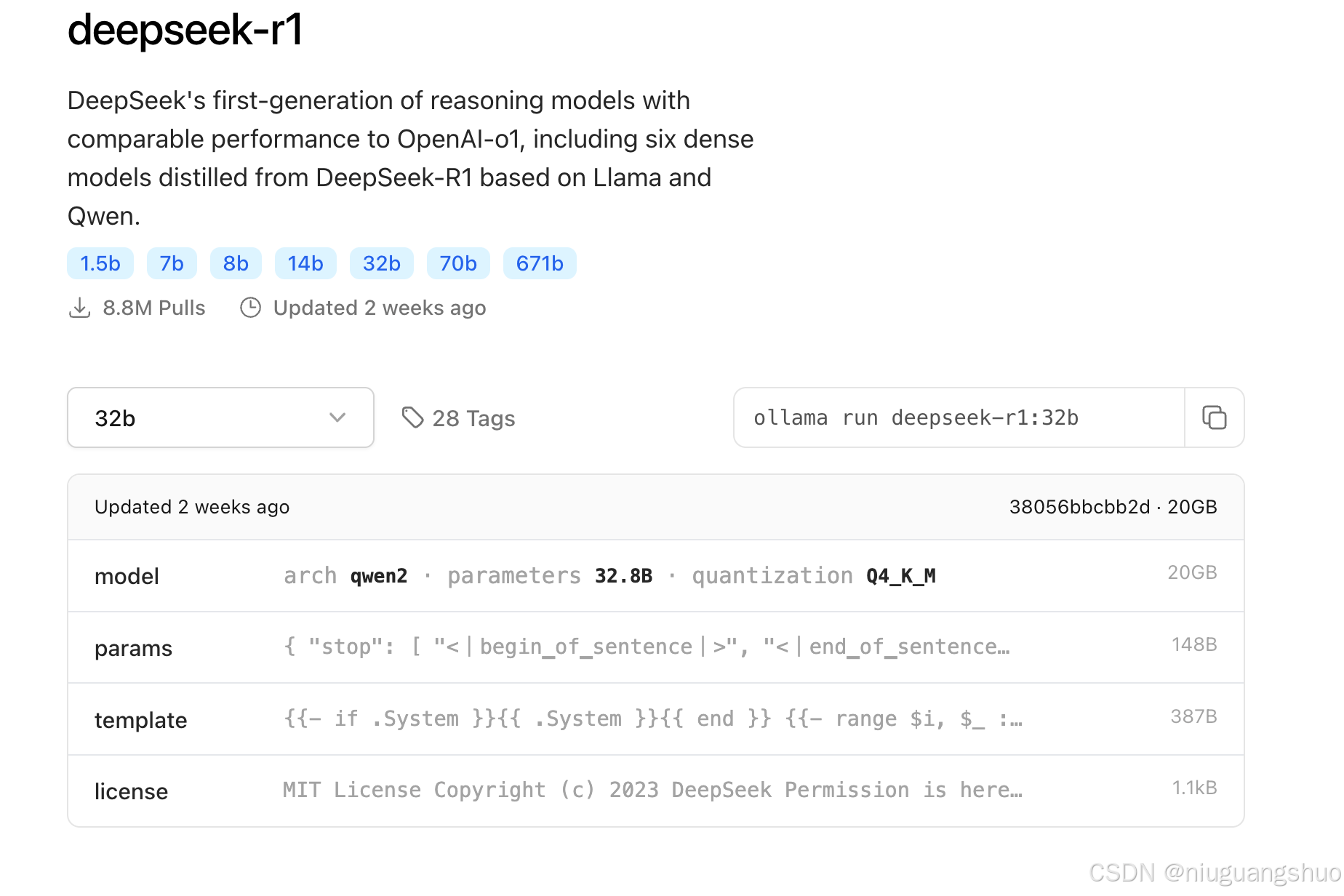

Step 2: Download the model

```shell
ollama run deepseek-r1:32b
```

This downloads the DeepSeek-R1 32B model (on the first run) and then starts it.
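`ollama run` downloads a model on first use and then opens an interactive session. If you only want to fetch the weights (for example from a provisioning script), `ollama pull` downloads without starting a chat. The sketch below is a hypothetical helper, `ensure_model`, that pulls a model only when `ollama list` does not already show it. It assumes `ollama list` prints a header row followed by one model per line with the full name in the first column.

```shell
# ensure_model: hypothetical helper that pulls an Ollama model only if it is
# not already available locally.
# Assumes `ollama list` prints a header row followed by one model per line,
# with the full name (for example deepseek-r1:32b) in the first column.
ensure_model() {
  local model="$1"
  if ollama list | awk 'NR > 1 { print $1 }' | grep -Fqx "$model"; then
    echo "already present: $model"
  else
    ollama pull "$model" && echo "pulled: $model"
  fi
}

# Usage:
#   ensure_model deepseek-r1:32b
#   ollama run deepseek-r1:32b
```

Pass the full tag (such as `deepseek-r1:32b`); a bare name like `deepseek-r1` is listed by Ollama as `deepseek-r1:latest` and would not match exactly.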

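DeepSeek-R1 distilled models:

| Model | Ollama tag | Parameters | Base model | Suitable for |
|---|---|---|---|---|
| DeepSeek-R1-Distill-Qwen-1.5B | deepseek-r1:1.5b | 1.5B | Qwen2.5 | Mobile devices or resource-constrained endpoints |
| DeepSeek-R1-Distill-Qwen-7B | deepseek-r1:7b | 7B | Qwen2.5 | General text-generation tools |
| DeepSeek-R1-Distill-Llama-8B | deepseek-r1:8b | 8B | Llama 3.1 | Everyday text processing in small businesses |
| DeepSeek-R1-Distill-Qwen-14B | deepseek-r1:14b | 14B | Qwen2.5 | Desktop applications |
| DeepSeek-R1-Distill-Qwen-32B | deepseek-r1:32b | 32B | Qwen2.5 | Domain-specific knowledge Q&A systems |
| DeepSeek-R1-Distill-Llama-70B | deepseek-r1:70b | 70B | Llama 3.3 | Demanding workloads such as scientific and academic research |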

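An RTX 4090 has 24 GB of VRAM, and the 32B model needs roughly 22 GB at 4-bit quantization, so it fits on this card. On DeepSeek's published reasoning benchmarks, the 32B distill scores close to the 70B distill while requiring considerably less hardware.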
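As a rough sanity check of the memory figure above: weight memory is approximately parameter count × bits per weight ÷ 8 bytes. The hypothetical helper `weights_gb` below does only that arithmetic. It deliberately ignores the KV cache, activations, and the fact that practical 4-bit formats store somewhat more than 4 bits per weight on average, which is why the real requirement for the 32B model (around 22 GB) is higher than the raw result.

```shell
# weights_gb: hypothetical helper giving a rough, weight-only memory estimate
# in decimal GB (1 GB = 1e9 bytes).
#   $1 = parameter count in billions, $2 = bits per weight
# KV cache and runtime overhead are NOT included.
weights_gb() {
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

# Usage:
#   weights_gb 32 4   # prints 16.0
#   weights_gb 70 4   # prints 35.0
```

By the same arithmetic, the 4-bit 70B model needs about 35 GB for its weights alone, more than a single 24 GB card provides, which is why the 32B model is the practical choice here.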

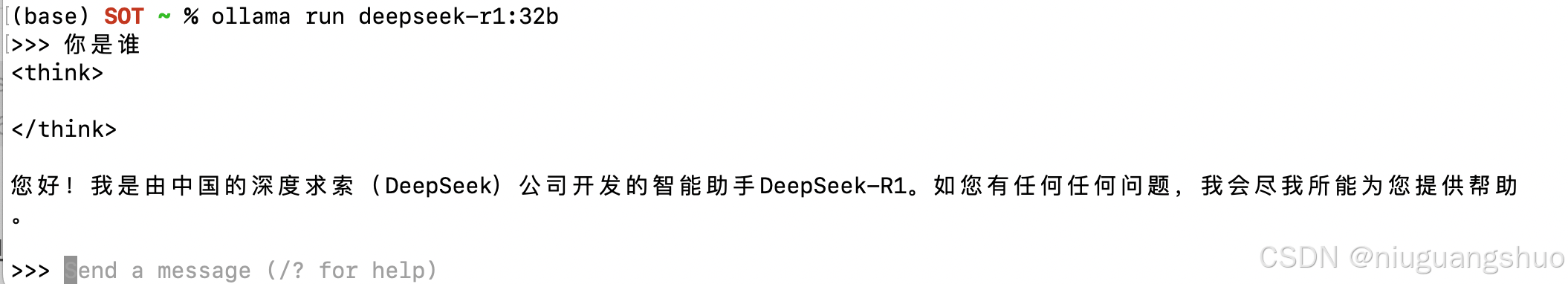

Step 3: Run the model

```shell
ollama run deepseek-r1:32b
```

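With the steps above you can already use DeepSeek from the command line on the Linux server. That is not very convenient, though, so the following sections set up a friendlier way to access it.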

2. Configure the Ollama service on the Linux server

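1. Edit the Ollama service configuration

Set the environment variable OLLAMA_HOST=0.0.0.0 so that the Ollama service listens on all network interfaces instead of only on 127.0.0.1 (its default). This is what allows remote access. Open the service unit:

```shell
sudo vi /etc/systemd/system/ollama.service
```

and make sure it contains the `OLLAMA_HOST` line:

```ini
[Unit]
Description=Ollama Service
After=network-online.target

[Service]
ExecStart=/usr/local/bin/ollama serve
User=ollama
Group=ollama
Restart=always
RestartSec=3
Environment="OLLAMA_HOST=0.0.0.0"
Environment="PATH=/usr/local/cuda/bin:/home/bytedance/miniconda3/bin:/home/bytedance/miniconda3/condabin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin"

[Install]
WantedBy=default.target
```

The `PATH` value is specific to the author's machine (it includes their CUDA and Miniconda directories); keep the `PATH` line already present in your own file.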
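Editing the unit file directly works, but that file may be rewritten if Ollama is reinstalled or upgraded with the install script. systemd also supports drop-in files (this is what `sudo systemctl edit ollama.service` creates), which override individual settings and leave the original unit untouched. The sketch below is a hypothetical helper that writes such a drop-in non-interactively; `DROPIN_DIR` is an illustrative variable that defaults to the standard drop-in directory for `ollama.service`.

```shell
# write_ollama_override: hypothetical helper that writes a systemd drop-in
# setting only OLLAMA_HOST, leaving the installer's ollama.service untouched.
# DROPIN_DIR (hypothetical) defaults to the standard drop-in directory for
# ollama.service; point it elsewhere to try the helper without root.
write_ollama_override() {
  local dir="${DROPIN_DIR:-/etc/systemd/system/ollama.service.d}"
  mkdir -p "$dir" || return 1
  cat > "$dir/override.conf" <<'EOF'
[Service]
Environment="OLLAMA_HOST=0.0.0.0"
EOF
}

# Usage (from a root shell, e.g. after `sudo -i`):
#   write_ollama_override
# then reload and restart the service as in the next step.
```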

2. Reload and restart the Ollama service

```shell
sudo systemctl daemon-reload
sudo systemctl restart ollama
```

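3. Verify that the Ollama service is running

Run the following command to confirm that Ollama is listening on all network interfaces:

```shell
sudo netstat -tulpn | grep ollama
```

You should see output similar to the following, showing that Ollama is listening on all interfaces (0.0.0.0):

```
tcp 0 0 0.0.0.0:11434 0.0.0.0:* LISTEN - ollama
```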
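`netstat` comes from the net-tools package, which newer distributions often do not install by default; `ss -tln` from iproute2 shows the same listening sockets. The hypothetical helper below reads either tool's output on stdin and succeeds only if port 11434 is bound to a wildcard address (`0.0.0.0`, `*`, `[::]` or `::`) rather than to `127.0.0.1`. The set of wildcard spellings it accepts is an assumption based on how these tools usually print them.

```shell
# listens_on_all: hypothetical helper; reads `ss -tln` or `netstat -tln`
# output on stdin and succeeds if some socket listens on the given port
# (default 11434) on a wildcard address, i.e. on all interfaces.
listens_on_all() {
  local port="${1:-11434}"
  grep -Eq "(0\.0\.0\.0|\*|\[::\]|::):${port}[[:space:]]"
}

# Usage:
#   ss -tln | listens_on_all && echo "Ollama is listening on all interfaces"
```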

4. Configure the firewall to allow remote access

To allow access to the Ollama service from outside the server, open port 11434 in the firewall:

```shell
sudo ufw allow 11434/tcp
sudo ufw reload
```
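The rule above opens port 11434 to every host that can reach the server, and the Ollama API has no built-in authentication. If only machines on a known network need access, ufw can restrict the rule by source address. The sketch below is a hypothetical helper around that ufw syntax; `DRY_RUN` is an illustrative variable that prints the command instead of running it, and the subnet in the usage line is a placeholder.

```shell
# allow_ollama_from: hypothetical helper that opens 11434/tcp only to the
# given source IP or CIDR instead of to everyone.
# With DRY_RUN=1 (hypothetical variable) it only prints the ufw command.
allow_ollama_from() {
  local src="$1"
  if [ -z "$src" ]; then
    echo "usage: allow_ollama_from <ip-or-cidr>" >&2
    return 2
  fi
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "sudo ufw allow from $src to any port 11434 proto tcp"
  else
    sudo ufw allow from "$src" to any port 11434 proto tcp
  fi
}

# Usage (10.37.0.0/16 is a placeholder subnet; substitute your own):
#   allow_ollama_from 10.37.0.0/16
```

If you already added the open rule, `sudo ufw delete allow 11434/tcp` removes it so that only the restricted rule remains.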

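5. Verify the firewall rules

Check the firewall status to confirm that the rule was added and port 11434 is open:

```shell
sudo ufw status
```

Example output:

```
Status: active

To                         Action      From
--                         ------      ----
22/tcp                     ALLOW       Anywhere
11434/tcp                  ALLOW       Anywhere
22/tcp (v6)                ALLOW       Anywhere (v6)
11434/tcp (v6)             ALLOW       Anywhere (v6)
```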

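6. Test remote access

Once the configuration above is in place, you can test access to the Ollama service from another machine, such as a Mac.

Test the connection from the remote machine:

Open a terminal on the Mac and run the following command (10.37.96.186 is the author's server address; substitute your own):

```shell
curl http://10.37.96.186:11434/api/version
```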

Output:

```
{"version":"0.5.7"}
```

Test a question:

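```shell
curl -X POST http://10.37.96.186:11434/api/generate \
  -H "Content-Type: application/json" \
  -d '{"model": "deepseek-r1:32b", "prompt": "你是谁?"}'
```

The prompt 你是谁? means "Who are you?". By default the API streams the answer back as one JSON object per generated token: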

```
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.118616168Z","response":"\u003cthink\u003e","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.150938966Z","response":"\n\n","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.175255854Z","response":"\u003c/think\u003e","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.199509353Z","response":"\n\n","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.223657359Z","response":"您好","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.24788375Z","response":"!","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.272068174Z","response":"我是","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.296163417Z","response":"由","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.320515728Z","response":"中国的","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.344646528Z","response":"深度","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.36880216Z","response":"求","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.393006489Z","response":"索","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.417115966Z","response":"(","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.441321254Z","response":"Deep","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.465439117Z","response":"Seek","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.489619415Z","response":")","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.51381827Z","response":"公司","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.538012781Z","response":"开发","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.562186246Z","response":"的","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.586331325Z","response":"智能","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.610539651Z","response":"助手","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.634769989Z","response":"Deep","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.659134003Z","response":"Seek","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.683523205Z","response":"-R","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.70761762Z","response":"1","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.731953604Z","response":"。","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.756135462Z","response":"如","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.783480232Z","response":"您","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.807766337Z","response":"有任何","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.831964079Z","response":"任何","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.856229156Z","response":"问题","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.880487159Z","response":",","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.904710537Z","response":"我会","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.929026993Z","response":"尽","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.953239249Z","response":"我","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:15.977496819Z","response":"所能","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:16.001763128Z","response":"为您提供","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:16.026068523Z","response":"帮助","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:16.050242581Z","response":"。","done":false}
{"model":"deepseek-r1:32b","created_at":"2025-02-06T00:47:16.074454593Z","response":"","done":true,"done_reason":"stop","context":[151644,105043,100165,30,151645,151648,271,151649,198,198,111308,6313,104198,67071,105538,102217,30918,50984,9909,33464,39350,7552,73218,100013,9370,100168,110498,33464,39350,12,49,16,1773,29524,87026,110117,99885,86119,3837,105351,99739,35946,111079,113445,100364,1773],"total_duration":3872978599,"load_duration":2811407308,"prompt_eval_count":6,"prompt_eval_duration":102000000,"eval_count":40,"eval_duration":958000000}
```

With the steps above, the Ollama service on the Linux server is configured and the DeepSeek model has been accessed remotely from the Mac. Next, we'll install a web UI on the Mac to make interacting with the model more convenient.
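Before moving on: the two `curl` checks above can also be wrapped in small shell helpers for scripting. The sketch below is hypothetical (the function names and the `OLLAMA_URL` variable are illustrative) and assumes `curl` and `jq` are installed on the Mac. `ask_ollama` sets the API's `"stream": false` option, which returns the whole answer as a single JSON object instead of one object per token; `join_stream` instead stitches a streamed answer back together.

```shell
# Hypothetical helpers for scripting the checks above from the Mac.
# OLLAMA_URL defaults to the author's server; override it with your own address.
OLLAMA_URL="${OLLAMA_URL:-http://10.37.96.186:11434}"

# ollama_reachable: succeeds if GET /api/version answers within 5 seconds.
ollama_reachable() {
  curl -fsS --max-time 5 "$OLLAMA_URL/api/version" >/dev/null
}

# join_stream: turns streamed /api/generate output (one JSON object per line)
# into plain text by concatenating the "response" fields (requires jq).
join_stream() {
  jq -j '.response'
}

# ask_ollama MODEL PROMPT: sends one non-streaming request and prints only the
# generated text. jq builds the request body so quotes in PROMPT are escaped.
ask_ollama() {
  jq -n --arg m "$1" --arg p "$2" '{model: $m, prompt: $p, stream: false}' |
    curl -fsS "$OLLAMA_URL/api/generate" \
      -H "Content-Type: application/json" \
      -d @- |
    jq -r '.response'
}

# Usage:
#   ollama_reachable && ask_ollama deepseek-r1:32b "Who are you?"
```

As in the streamed output above, the text returned by deepseek-r1 starts with its `<think>...</think>` section.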

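3. Install a web UI on the Mac

To interact more comfortably with the DeepSeek model on the remote Linux server, you can install a web UI on the Mac. Here we recommend Open WebUI, a web-based interface that supports several AI model backends, including Ollama.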

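1. Install open-webui with conda

Open a terminal and run the following commands to create a new conda environment with Python 3.11, activate it, and install Open WebUI:

```shell
conda create -n open-webui-env python=3.11
conda activate open-webui-env
pip install open-webui
```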

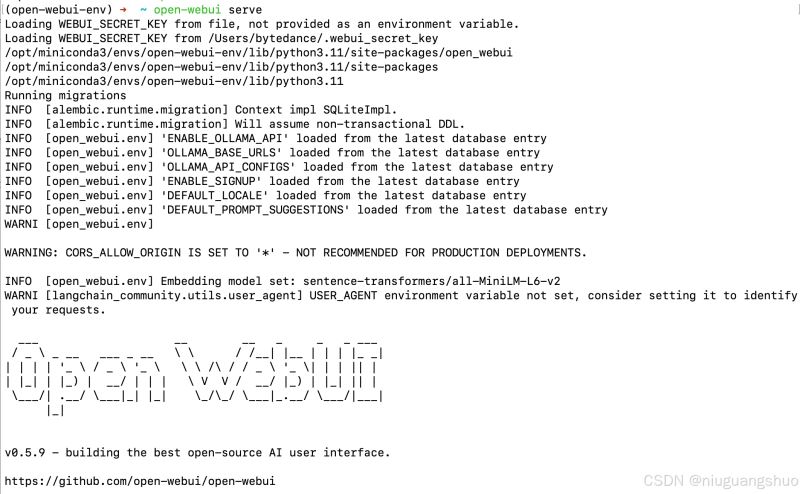

2. Start open-webui

```shell
open-webui serve
```

3. Open it in a browser

Go to http://localhost:8080/

Log in with the administrator account (the first user to register becomes the administrator).

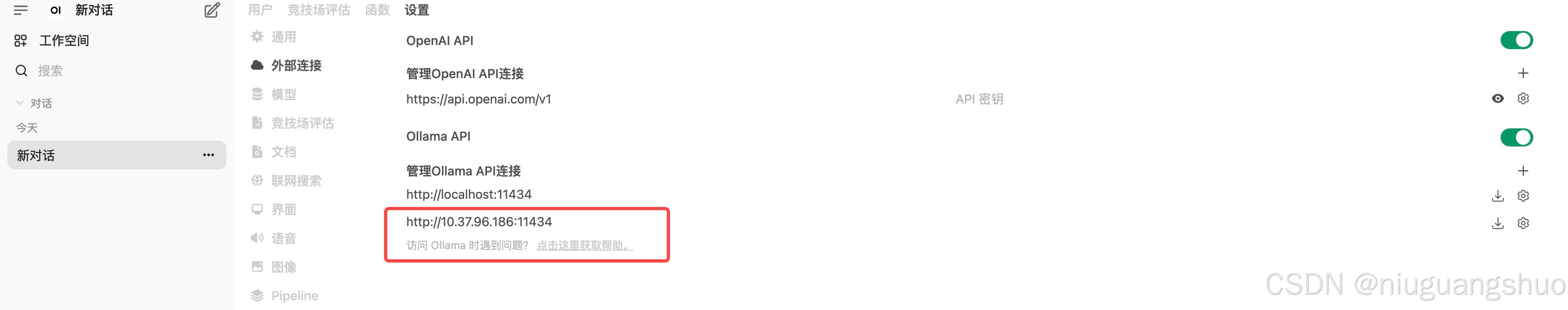

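In the Open WebUI interface, click the sidebar toggle (the three-line icon in the top-left corner) → your avatar (bottom-left) → Admin Panel → Settings (along the top) → Connections.

In the Ollama API section, turn the switch on and enter http://10.37.96.186:11434 (the author's server address; use your own).

Click the Save button in the bottom-right corner.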

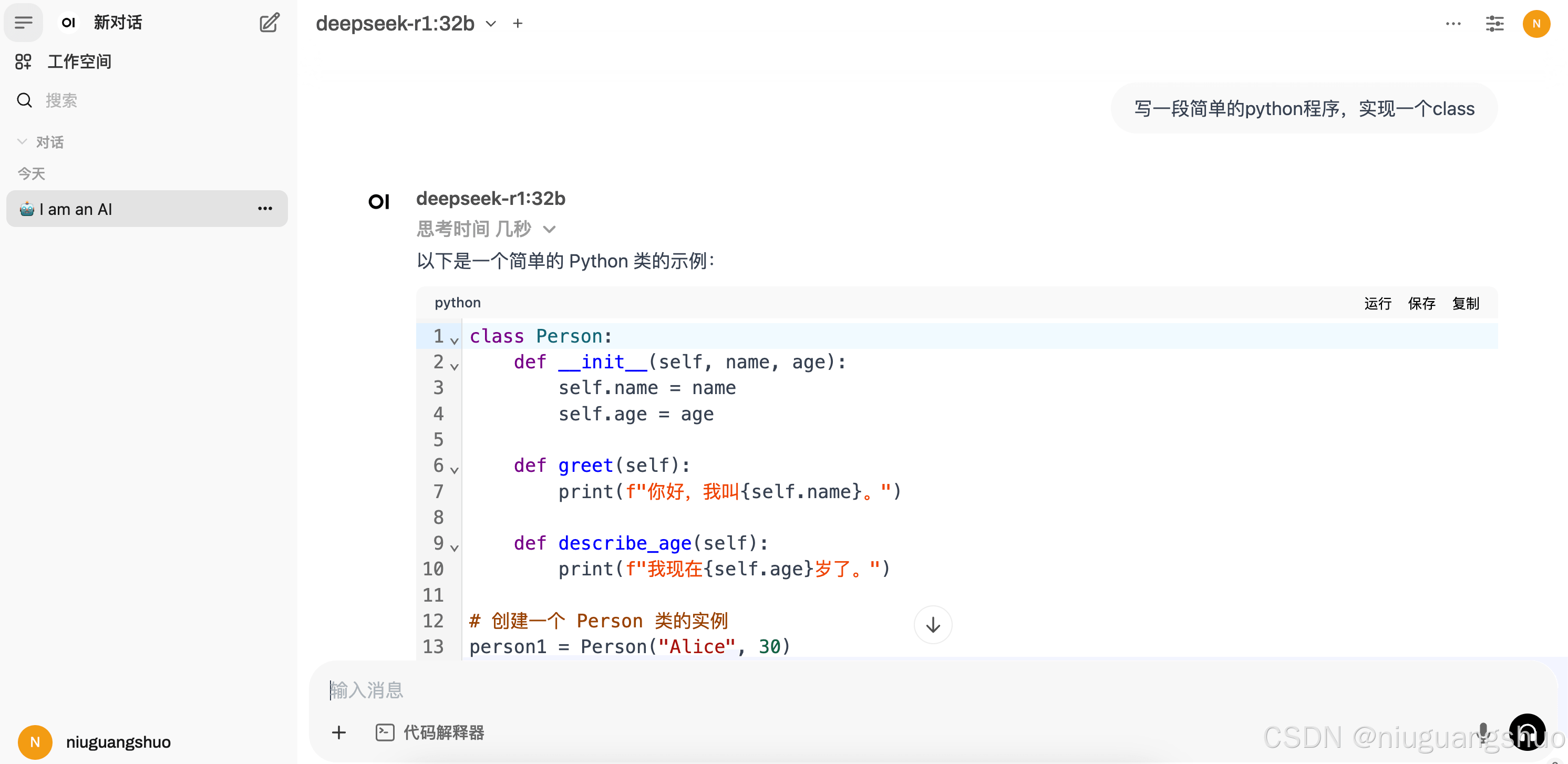

Click New Chat (top-left) and check that the model list loads. If it does, the setup is complete.
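If the model list stays empty, it can help to check from the Mac's terminal which models the server itself reports. Ollama's `/api/tags` endpoint returns the locally available models as JSON; the hypothetical helper below (assuming `jq` is installed) extracts just their names. If `deepseek-r1:32b` shows up here but not in Open WebUI, the problem is more likely the URL entered in the Connections settings than the server.

```shell
# model_names: hypothetical helper; prints the model names contained in an
# Ollama /api/tags JSON response read from stdin, one per line (requires jq).
model_names() {
  jq -r '.models[].name'
}

# Usage (replace the address with your server's):
#   curl -fsS http://10.37.96.186:11434/api/tags | model_names
```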

4. Enjoy your local DeepSeek model

That concludes this guide to deploying DeepSeek locally on a Linux server and accessing it from a Mac through a web UI.
