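Prerequisites

Goal

Run two ClickHouse containers under Docker on a single physical machine and configure both to connect to a ZooKeeper server. Following the official documentation for the ReplicatedMergeTree engine, create a replicated table in each container. With ClickHouse's ZooKeeper support handling synchronization, the two ClickHouse instances should end up as replicas of each other (data replication).

Setup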

1. Physical machine base environment

IP address: 192.168.182.10

2. Docker containers and IP information

| Service | Network | IP address | Hostname | docker command |
| --- | --- | --- | --- | --- |
| ck node 1 (first ClickHouse container) | bridge | 172.17.0.3 | 7409ace09488 | `docker run -d --name clickhouse-server-c1 --ulimit nofile=262144:262144 --volume=/software/docker/clickhouse/ck1/config:/etc/clickhouse-server/ -v /software/docker/clickhouse/ck1/data:/var/lib/clickhouse -p 8123:8123 -p 9000:9000 -p 9009:9009 yandex/clickhouse-server` |
| ck node 2 (second ClickHouse container) | bridge | 172.17.0.4 | b22679b9d346 | `docker run -d --name clickhouse-server-c2 --ulimit nofile=262144:262144 --volume=/software/docker/clickhouse/ck2/config:/etc/clickhouse-server/ -v /software/docker/clickhouse/ck2/data:/var/lib/clickhouse -p 8124:8123 -p 9001:9000 -p 9010:9009 yandex/clickhouse-server` |
| zookeeper | bridge | 172.17.0.5 | not important | `docker run --name zookeeper -d -p 2181:2181 zookeeper` |

3. ClickHouse service configuration

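3.1. In the config/config.d directory of both ck node 1 and ck node 2, create a configuration file named metrika-share.xml with the following content

- Contents of metrika-share.xml

    <?xml version="1.0"?>
    <clickhouse>
        <zookeeper>
            <node>
                <!-- ZooKeeper's port 2181 is published on the host, so the host address can be used directly; this avoids breakage if the ZooKeeper container IP changes -->
                <host>192.168.182.10</host>
                <port>2181</port>
            </node>
        </zookeeper>
    </clickhouse>

3.2. In config.xml of both ck node 1 and ck node 2, search for the <include_from> tag and set it as follows

    <!-- The Docker volume mount is already in place, so this path inside the container can be referenced directly -->
    <include_from>/etc/clickhouse-server/config.d/metrika-share.xml</include_from>

Note: after changing the configuration, restart the ClickHouse service.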

Reproducing the problem

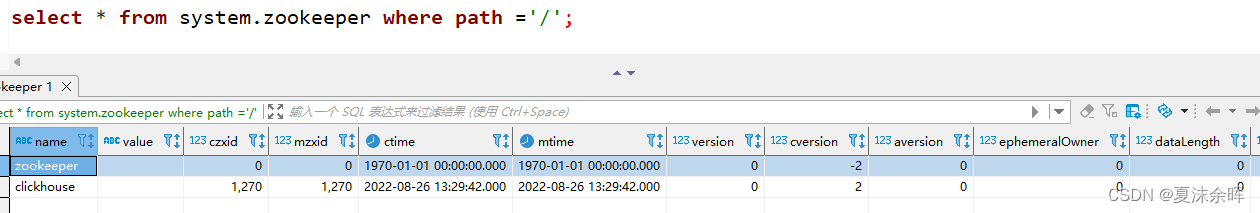

1. Querying ZooKeeper from the ck client responds normally; the output is shown in Figure 1.

Figure 1: Checking that ZooKeeper is reachable
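The check behind Figure 1 can be reproduced from the host with a query against ClickHouse's `system.zookeeper` table, which requires a `path` filter. The following is a minimal sketch, assuming the container name `clickhouse-server-c1` from the setup table and that `clickhouse-client` is available inside the container. The helper names `zk_query` and `check_zookeeper` are illustrative only, and the exact query in the screenshot may differ.

```shell
# Build a query that lists the children of a ZooKeeper path via system.zookeeper.
# (zk_query and check_zookeeper are hypothetical helper names, not ClickHouse tools.)
zk_query() {
  printf "SELECT name FROM system.zookeeper WHERE path = '%s'" "$1"
}

# Run the query inside a ClickHouse container; the path defaults to the root.
check_zookeeper() {
  docker exec "$1" clickhouse-client --query "$(zk_query "${2:-/}")"
}
```

Calling `check_zookeeper clickhouse-server-c1` should list the root znodes when the connection works, and return an error from ClickHouse when ZooKeeper is not configured or not reachable.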

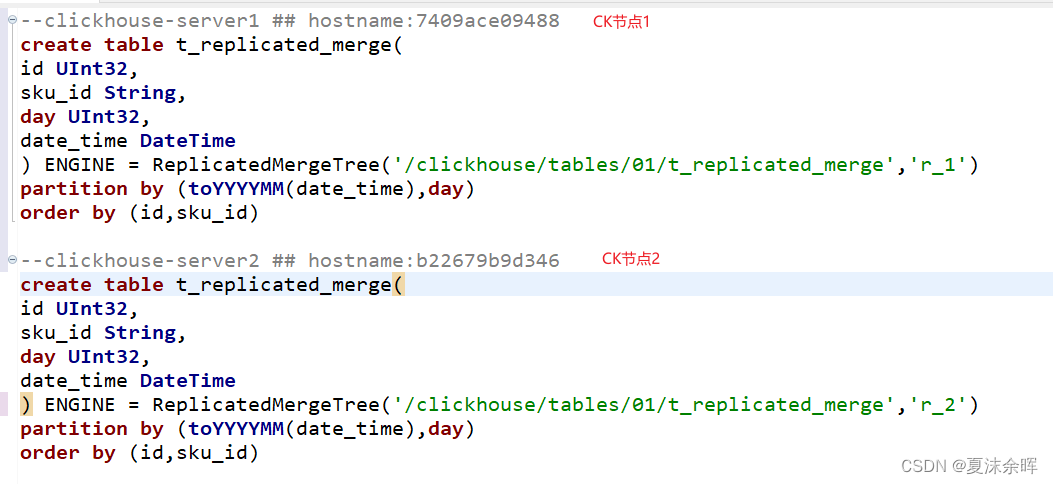

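2. The CREATE TABLE statements using the ReplicatedMergeTree engine run successfully in the ck client of each container; the statements are shown in Figure 2.

Figure 2: CREATE TABLE statements on the two nodes.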
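Since the statements only appear as a screenshot, here is a rough sketch of what such a pair of statements might look like. The ZooKeeper path `/clickhouse/tables/01/t_replicated_merge`, the table name and the replica names `r_1` / `r_2` come from this post; the columns `id` and `name` and the helper `create_table_sql` are assumptions made purely for illustration.

```shell
# Print a CREATE TABLE statement for one replica.
# $1 is the replica name (r_1 on ck node 1, r_2 on ck node 2).
# The column list is hypothetical; only the path and replica names are from the post.
create_table_sql() {
  cat <<SQL
CREATE TABLE t_replicated_merge
(
    id UInt32,
    name String
)
ENGINE = ReplicatedMergeTree('/clickhouse/tables/01/t_replicated_merge', '$1')
ORDER BY id
SQL
}
```

Both replicas share the same ZooKeeper path and differ only in the replica name, for example `create_table_sql r_1 | docker exec -i clickhouse-server-c1 clickhouse-client` on the first node and the same with `r_2` and `clickhouse-server-c2` on the second.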

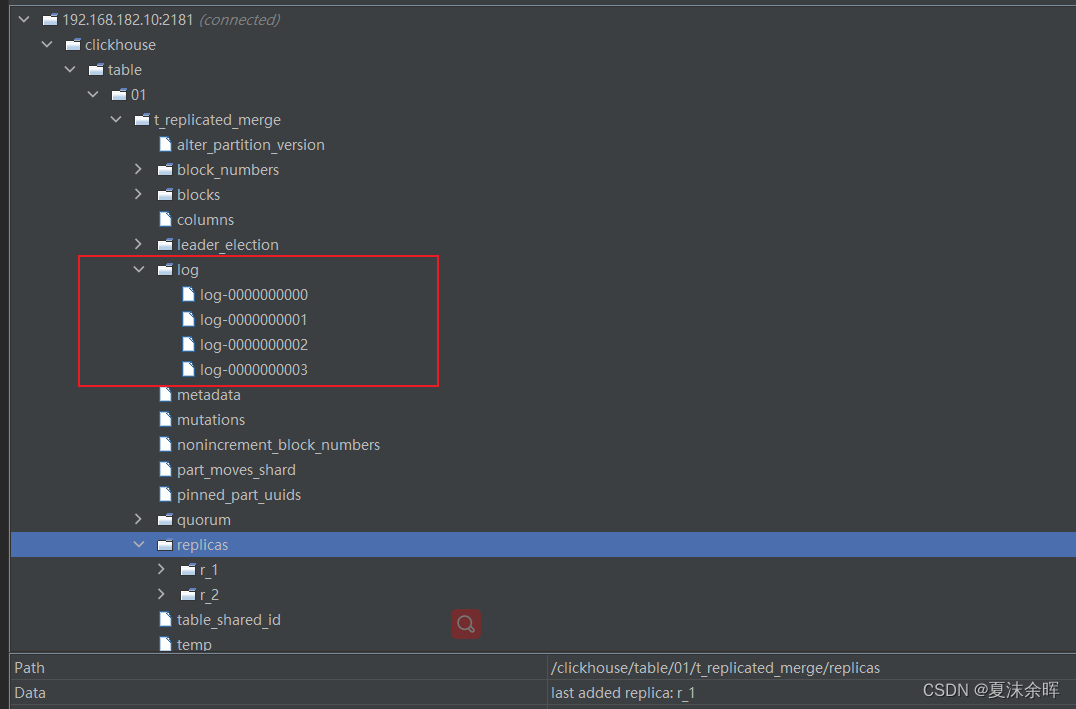

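3. Two INSERT statements executed on ck node 1 leave data on ck node 1 but none on ck node 2, which means replication failed. Likewise, two INSERT statements executed on ck node 2 leave data on ck node 2 but none on ck node 1. Browsing the automatically created [/clickhouse/tables/01/t_replicated_merge] path with a ZooKeeper plugin for IDEA gives the view shown in Figure 3.

Figure 3: ZooKeeper nodes created for ReplicatedMergeTree

Main directories explained

1). The [replicas] directory holds the replica information nodes, including each replica's connection host or IP, port, whether it is alive, and so on.

2). The [log] directory is the replication log: replicas coordinate data synchronization through the log-xxxxx entries under it. Each log-xxxxx entry can loosely be thought of as one "insert into xxx values(v1,v2)", with extra attributes such as a client identifier and size. Strictly speaking, an entry describes a data part to obtain rather than the rows themselves; the replica then fetches that part from its peer, which is the fetch that fails in the error log later in this post.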

Locating the problem

Open the server error log on ck node 1, named [clickhouse-server.err.log] (on Linux it is usually under [/var/log/clickhouse-server]). It contains the error [dnsresolver: cannot resolve host (b22679b9d346), error 0: b22679b9d346.xxx.const: code: 198. db::exception: not found address of host: b22679b9d346. (dns_error)]. The server log on ck node 2 shows a similar error. A fuller excerpt of the error log follows:

2022.08.26 11:03:33.409494 [ 90 ] {} <error> dnsresolver: cannot resolve host (b22679b9d346), error 0: b22679b9d346.

2022.08.26 11:03:33.409738 [ 90 ] {} <error> default.t_replicated_merge (35f8baf4-d1e4-43d9-88fc-c45e1ff8adb0): auto db::storagereplicatedmergetree::processqueueentry(replicatedmergetreequeue::selectedentryptr)::(anonymous class)::operator()(db::storagereplicatedmergetree::logentryptr &) const: code: 198. db::exception: not found address of host: b22679b9d346. (dns_error), stack trace (when copying this message, always include the lines below):

0. db::exception::exception(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, int, bool) @ 0xa82d07a in /usr/bin/clickhouse

1. ? @ 0xa8e7951 in /usr/bin/clickhouse

2. ? @ 0xa8e8122 in /usr/bin/clickhouse

3. db::dnsresolver::resolvehost(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&) @ 0xa8e71fe in /usr/bin/clickhouse

4. ? @ 0xa9d9493 in /usr/bin/clickhouse

5. ? @ 0xa9dd0f9 in /usr/bin/clickhouse

6. poolbase<poco::net::httpclientsession>::get(long) @ 0xa9dc837 in /usr/bin/clickhouse

7. db::makepooledhttpsession(poco::uri const&, poco::uri const&, db::connectiontimeouts const&, unsigned long, bool) @ 0xa9dacb8 in /usr/bin/clickhouse

8. db::makepooledhttpsession(poco::uri const&, db::connectiontimeouts const&, unsigned long, bool) @ 0xa9d9819 in /usr/bin/clickhouse

9. db::updatablepooledsession::updatablepooledsession(poco::uri, db::connectiontimeouts const&, unsigned long, unsigned long) @ 0x141c76e9 in /usr/bin/clickhouse

10. db::pooledreadwritebufferfromhttp::pooledreadwritebufferfromhttp(poco::uri, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::function<void (std::__1::basic_ostream<char, std::__1::char_traits<char> >&)>, db::connectiontimeouts const&, poco::net::httpbasiccredentials const&, unsigned long, unsigned long, unsigned long) @ 0x141be51e in /usr/bin/clickhouse

11. db::datapartsexchange::fetcher::fetchpart(std::__1::shared_ptr<db::storageinmemorymetadata const> const&, std::__1::shared_ptr<db::context const>, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, int, db::connectiontimeouts const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::shared_ptr<db::throttler>, bool, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::optional<db::currentlysubmergingemergingtagger>*, bool, std::__1::shared_ptr<db::idisk>) @ 0x141bb8f2 in /usr/bin/clickhouse

12. ? @ 0x1405188d in /usr/bin/clickhouse

13. db::storagereplicatedmergetree::fetchpart(std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, std::__1::shared_ptr<db::storageinmemorymetadata const> const&, std::__1::basic_string<char, std::__1::char_traits<char>, std::__1::allocator<char> > const&, bool, unsigned long, std::__1::shared_ptr<zkutil::zookeeper>) @ 0x13fae906 in /usr/bin/clickhouse

14. db::storagereplicatedmergetree::executefetch(db::replicatedmergetreelogentry&) @ 0x13fa12b4 in /usr/bin/clickhouse

15. db::storagereplicatedmergetree::executelogentry(db::replicatedmergetreelogentry&) @ 0x13f9074d in /usr/bin/clickhouse

16. ? @ 0x1404f41f in /usr/bin/clickhouse

17. db::replicatedmergetreequeue::processentry(std::__1::function<std::__1::shared_ptr<zkutil::zookeeper> ()>, std::__1::shared_ptr<db::replicatedmergetreelogentry>&, std::__1::function<bool (std::__1::shared_ptr<db::replicatedmergetreelogentry>&)>) @ 0x14468165 in /usr/bin/clickhouse

18. db::storagereplicatedmergetree::processqueueentry(std::__1::shared_ptr<db::replicatedmergetreequeue::selectedentry>) @ 0x13fd1073 in /usr/bin/clickhouse

19. db::executablelambdaadapter::executestep() @ 0x1404fd11 in /usr/bin/clickhouse

20. db::mergetreebackgroundexecutor<db::ordinaryruntimequeue>::routine(std::__1::shared_ptr<db::taskruntimedata>) @ 0xa806ffa in /usr/bin/clickhouse

21. db::mergetreebackgroundexecutor<db::ordinaryruntimequeue>::threadfunction() @ 0xa806eb5 in /usr/bin/clickhouse

22. threadpoolimpl<threadfromglobalpool>::worker(std::__1::__list_iterator<threadfromglobalpool, void*>) @ 0xa8720aa in /usr/bin/clickhouse

23. threadfromglobalpool::threadfromglobalpool<void threadpoolimpl<threadfromglobalpool>::scheduleimpl<void>(std::__1::function<void ()>, int, std::__1::optional<unsigned long>)::'lambda0'()>(void&&, void threadpoolimpl<threadfromglobalpool>::scheduleimpl<void>(std::__1::function<void ()>, int, std::__1::optional<unsigned long>)::'lambda0'()&&...)::'lambda'()::operator()() @ 0xa873ec4 in /usr/bin/clickhouse

24. threadpoolimpl<std::__1::thread>::worker(std::__1::__list_iterator<std::__1::thread, void*>) @ 0xa86f4b7 in /usr/bin/clickhouse

25. ? @ 0xa872ebd in /usr/bin/clickhouse

26. ? @ 0x7fb368403609 in ?

27. __clone @ 0x7fb36832a293 in ?

(version 22.1.3.7 (official build))
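To pull the offending host names out of a long error log without reading the stack traces, something like the following sketch may help. It assumes the log line format shown above; `unresolved_hosts` is a hypothetical helper name, and the match is case-insensitive because the casing of the message may differ from this excerpt.

```shell
# Print each distinct host name that the DNS resolver failed to resolve.
# $1 is the path to a ClickHouse error log.
unresolved_hosts() {
  grep -io 'cannot resolve host ([^)]*)' "$1" \
    | sed 's/.*(\(.*\))/\1/' \
    | sort -u
}
```

For example, `unresolved_hosts /var/log/clickhouse-server/clickhouse-server.err.log` run inside the container. Each name it prints can then be checked from the other node with `docker exec clickhouse-server-c1 getent hosts <name>`, assuming `getent` is present in the image; no output from that command means the name does not resolve there.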

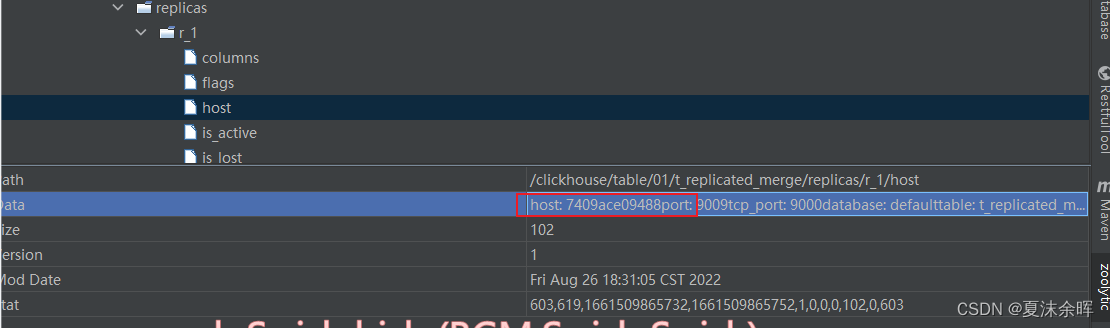

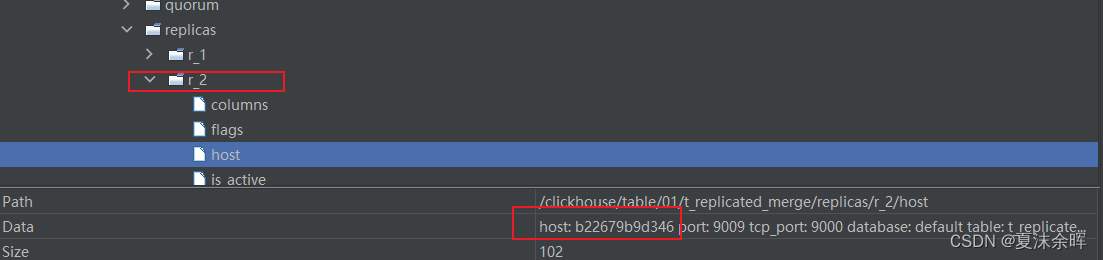

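Since the error is that a host name cannot be resolved, the next step is to find where that name comes from. The [replicas] directory mentioned under Figure 3 records each node's host, IP and related information. Opening it shows that the [host] value recorded under [r_1 (ck node 1)] and [r_2 (ck node 2)] is exactly the host from the error log. Part of the [r_1] node is shown in Figure 4 and part of the [r_2] node in Figure 5.

Figure 4: Node information for ck node 1

Figure 5: Node information for ck node 2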
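The same information can be read without a ZooKeeper GUI by querying `system.zookeeper` from either ClickHouse node. This is a sketch under the assumption that each replica registers a child znode named `host` under its entry in [replicas], as Figures 4 and 5 suggest; `replica_host_query` is a hypothetical helper name.

```shell
# Build a query that returns the registered host info of one replica.
# $1 is the replica name used in the CREATE TABLE statement (r_1 or r_2).
replica_host_query() {
  printf "SELECT value FROM system.zookeeper WHERE path = '/clickhouse/tables/01/t_replicated_merge/replicas/%s' AND name = 'host'" "$1"
}
```

For instance, `docker exec clickhouse-server-c1 clickhouse-client --query "$(replica_host_query r_2)"` should show the host name that ck node 1 will try to contact when fetching data from ck node 2.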

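Solving the problem

Approach 1

After the steps above, querying either node again shows that the rows that previously failed to replicate are now correctly synchronized. With that, the problem is solved.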
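The individual steps of this approach are not reproduced in the text. Judging from the drawbacks listed next and from the reference to <interserver_http_host> in Approach 2, it presumably sets each node's `interserver_http_host` to an address the other node can reach, such as the container IP. The sketch below is written under that assumption only; the helper name `interserver_host_xml` and the idea of placing the setting in a separate override file are illustrative, not the author's confirmed method.

```shell
# Print a config override that pins the address a replica advertises to its peers.
# $1 is the address other replicas should use, e.g. the container IP (assumed).
interserver_host_xml() {
  cat <<XML
<?xml version="1.0"?>
<clickhouse>
    <interserver_http_host>$1</interserver_http_host>
</clickhouse>
XML
}
```

For ck node 1 this could be `interserver_host_xml 172.17.0.3`, with the output saved under that node's config.d directory (the file name is up to you) and the analogous call with 172.17.0.4 for ck node 2, followed by a restart of both services.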

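Drawbacks

- After a Docker container stops, its internal IP address can change, so the configuration has to be corrected each time (important)

- Replica names should be set dynamically through macros, which requires additionally configuring macros in metrika-share.xml (minor)

- The configuration files have to be edited repeatedly on every node, which is a lot of work (minor)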

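Approach 2

Note: this approach was added on April 17, 2023. Its advantage over [Approach 1] is that it fixes the important drawback (for the other drawbacks, contact the blog author for a simple script), and it does not require changing the <interserver_http_host> tag. It can even be used in test or production environments that are short on physical resources.

Idea

Docker's default bridge network has a built-in limitation: containers on it are not resolvable by hostname, so a container IP is not associated with its container hostname. Docker does, however, support user-defined networks, on which IPs are automatically associated with hostnames. Placing the ck containers on the same user-defined network therefore lets them communicate by hostname.

Steps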

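1. (If you used Approach 1) comment out the <interserver_http_host> tag on the ck containers

2. Run the docker command docker network create clickhouse-network

3. Modify the docker run commands of the existing ck node 1 and ck node 2 containers, adding the --network clickhouse-network option

4. Restart the services, and you are done.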
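Steps 2 and 3 can be sketched as a small dry-run script that only prints the commands for review instead of executing them. Container names, volume paths, ports and the image are taken from the setup table; the helper `ck_run_cmd` and the port-offset scheme are assumptions that happen to match the two nodes in this post.

```shell
NETWORK=clickhouse-network

# Print the docker run command for ck node N (1 or 2) on the user-defined network.
# Host ports are offset by N-1: node 1 uses 8123/9000/9009, node 2 uses 8124/9001/9010.
ck_run_cmd() {
  ck_n="$1"
  ck_off=$((ck_n - 1))
  ck_base="/software/docker/clickhouse/ck${ck_n}"
  printf '%s\n' "docker run -d --name clickhouse-server-c${ck_n} --network ${NETWORK} --ulimit nofile=262144:262144 -v ${ck_base}/config:/etc/clickhouse-server/ -v ${ck_base}/data:/var/lib/clickhouse -p $((8123 + ck_off)):8123 -p $((9000 + ck_off)):9000 -p $((9009 + ck_off)):9009 yandex/clickhouse-server"
}

# Dry run: show what would be executed.
echo "docker network create ${NETWORK}"
ck_run_cmd 1
ck_run_cmd 2
```

Because the old containers still hold the names clickhouse-server-c1 and clickhouse-server-c2, they would need to be stopped and removed before the printed docker run commands can succeed; the data and configuration live in the mounted host directories, so they should carry over to the new containers.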
