Problem with a diffusion model: a required model repository is missing and cannot be downloaded automatically.

OSError: Can't load tokenizer for 'xxxxxxxxx'. If you were trying to load it from 'https://huggingface.co/models', make sure you don't have a local directory with the same name. Otherwise, make sure 'xxxxxxxxx' is the correct path to a directory containing all relevant files for a CLIPTokenizer tokenizer.

For example:

File "/home/spai/code/sd/stable-diffusion/ldm/util.py", line 85, in instantiate_from_config

return get_obj_from_str(config["target"])(**config.get("params", dict()))

File "/home/spai/code/sd/stable-diffusion/ldm/modules/encoders/modules.py", line 141, in __init__

self.tokenizer = CLIPTokenizer.from_pretrained(version)

File "/home/spai/anaconda3/envs/metap/lib/python3.8/site-packages/transformers/tokenization_utils_base.py", line 1838, in from_pretrained

raise EnvironmentError(

OSError: Can't load tokenizer for 'openai/clip-vit-large-patch14'. If you were trying to load it from 'https://huggingface.co/models', make sure you don't have a local directory with the same name. Otherwise, make sure 'openai/clip-vit-large-patch14' is the correct path to a directory containing all relevant files for a CLIPTokenizer tokenizer.

Cause

The message says the tokenizer for this model could not be loaded. One of the following is probably the cause:

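- A local directory has the same name as 'openai/clip-vit-large-patch14': if your working directory (or another directory being searched) contains a folder named 'openai/clip-vit-large-patch14', it is loaded instead of the Hub repository, and the load fails if that folder is incomplete. Check for a folder with that name and make sure there is no naming conflict.

- Wrong model path: make sure 'openai/clip-vit-large-patch14' is the correct model id or path, and double-check it.

- The automatic download failed, so the files have to be downloaded manually and put in place. Possible causes include a firewall, urllib, SSL, or server problems.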
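To tell the first two cases apart, a small stdlib-only check can look for a local directory that shadows the Hub id and report which tokenizer files it lacks. This is a minimal sketch: the function name `diagnose_tokenizer_dir` is hypothetical, and the required-file list (`vocab.json` and `merges.txt`, which the slow CLIPTokenizer reads) is an assumption, not an exhaustive list of what the repository contains.

```python
from pathlib import Path

# Assumed minimal files the slow CLIPTokenizer needs; not exhaustive.
TOKENIZER_FILES = ("vocab.json", "merges.txt")


def diagnose_tokenizer_dir(model_id: str, cwd: str = ".") -> str:
    """Return a short diagnosis for a Hub id such as 'openai/clip-vit-large-patch14'.

    transformers checks whether model_id is an existing directory (relative
    to the working directory) before treating it as a Hub repository id.
    """
    local = Path(cwd) / model_id
    if not local.exists():
        return "no local directory: the id will be fetched from the Hub"
    if not local.is_dir():
        return f"{local} exists but is not a directory"
    # A directory exists, so it shadows the Hub id; check its contents.
    missing = [name for name in TOKENIZER_FILES if not (local / name).is_file()]
    if missing:
        return "local directory shadows the Hub id but is missing: " + ", ".join(missing)
    return f"local directory looks complete: {local}"


if __name__ == "__main__":
    print(diagnose_tokenizer_dir("openai/clip-vit-large-patch14"))
```

Run it from the same directory you start the Stable Diffusion script from, since that is where a relative path like this is resolved.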

Solution

Download the model manually

Taking the missing CLIP model above as an example:

Search huggingface.co and find the corresponding repository.

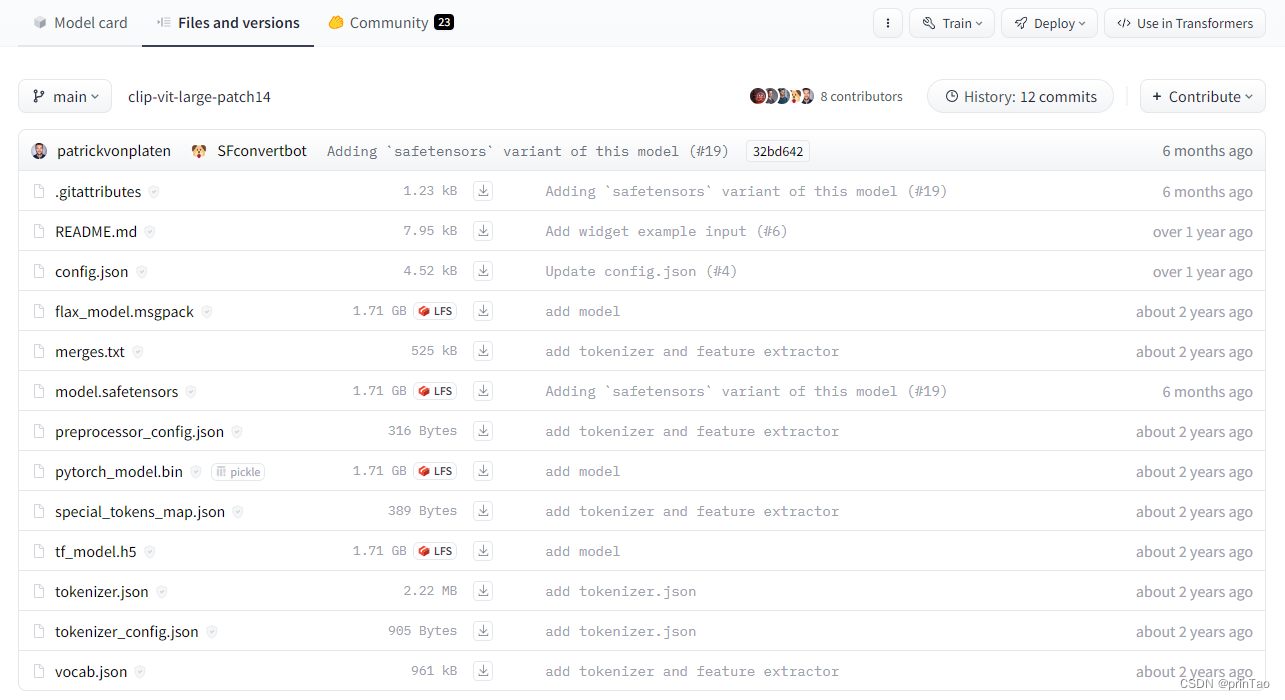

Download all files from https://huggingface.co/openai/clip-vit-large-patch14/tree/main
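If clicking through the web page is tedious, the files can also be fetched with the standard library. This sketch assumes the Hub's `https://huggingface.co/{repo}/resolve/{revision}/{file}` download URL pattern and a hypothetical minimal file list for the tokenizer plus the CLIP text encoder; the repository page lists more files (other weight formats, `tokenizer.json`, `preprocessor_config.json`), so adjust `FILES` if you want everything. Because it goes through `urllib`, it is subject to the same SSL issues discussed below.

```python
import urllib.request
from pathlib import Path

# Hypothetical minimal file set (tokenizer + text encoder); compare with the
# repository's "Files" tab and add whatever else you need.
FILES = (
    "config.json",
    "merges.txt",
    "special_tokens_map.json",
    "tokenizer_config.json",
    "vocab.json",
    "pytorch_model.bin",
)


def hub_file_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Direct-download URL of one file in a Hugging Face model repository."""
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"


def download_repo_files(repo_id: str, dest: str, files=FILES) -> None:
    """Download each file into dest/, creating dest first if needed."""
    target = Path(dest)
    target.mkdir(parents=True, exist_ok=True)
    for name in files:
        url = hub_file_url(repo_id, name)
        print(f"downloading {url}")
        urllib.request.urlretrieve(url, str(target / name))
```

For example, run from the project root: `download_repo_files("openai/clip-vit-large-patch14", "openai/clip-vit-large-patch14")`. Note that `pytorch_model.bin` is well over 1 GB.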

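Create the target directory:

mkdir -p stable-diffusion-webui/openai/clip-vit-large-patch14

and move the downloaded files into openai/clip-vit-large-patch14/ under your local project directory. This should be the directory you launch the script from, because transformers resolves the relative path 'openai/clip-vit-large-patch14' against the current working directory.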
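The `mkdir -p` plus move step can also be scripted in Python, which is handy on Windows. A minimal sketch: `install_model_files` and its parameters are hypothetical names, and it moves only regular files from the download folder, leaving subfolders alone.

```python
import shutil
from pathlib import Path


def install_model_files(download_dir: str, project_root: str,
                        model_id: str = "openai/clip-vit-large-patch14") -> Path:
    """Move downloaded files into <project_root>/<model_id>/ and return that path."""
    target = Path(project_root) / model_id
    target.mkdir(parents=True, exist_ok=True)  # same effect as mkdir -p
    for item in Path(download_dir).iterdir():
        if item.is_file():  # skip subdirectories
            shutil.move(str(item), str(target / item.name))
    return target
```

For example, `install_model_files("Downloads/clip", "stable-diffusion-webui")` would place the files in `stable-diffusion-webui/openai/clip-vit-large-patch14/`.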

If the download fails, see my other articles: download the files individually with Git LFS or with Xunlei (Thunder).

Fix the network (SSL) issue

import ssl

ssl._create_default_https_context = ssl._create_unverified_context
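The line above patches the default for the whole process, so every later standard-library HTTPS request skips certificate checks. A narrower variant, sketched here, restores the original factory on exit. It relies on the same private CPython hook (`ssl._create_default_https_context`, the monkeypatch described in PEP 476), and the name `unverified_https` is hypothetical. It only affects clients built on the standard library (`urllib`, `http.client`); libraries such as `requests` build their own SSL contexts and are not changed by it.

```python
import contextlib
import ssl


@contextlib.contextmanager
def unverified_https():
    """Temporarily make stdlib HTTPS clients skip certificate verification.

    Insecure: use only for a download you trust and cannot fix otherwise.
    """
    original = ssl._create_default_https_context
    ssl._create_default_https_context = ssl._create_unverified_context
    try:
        yield
    finally:
        # Restore the previous factory even if the download raised.
        ssl._create_default_https_context = original
```

Usage: wrap only the download, e.g. `with unverified_https(): urllib.request.urlretrieve(url, path)`.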

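Why does this work?

It replaces the factory that Python's standard-library HTTPS clients (urllib, http.client) use to build their default SSL context with one that does not verify certificates, so downloads that were failing on certificate errors (for example behind a firewall or proxy) can go through. Note that this turns off certificate verification for those requests.

Reference: https://blog.csdn.net/betrayfree/article/details/132482843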
