目录

1、实战内容

爬取京东笔记本电脑商品的信息(如:价格、商品名、评论数量),存入 mysql 中

2、思路

京东需要登录才能搜索进入,所以首先从登录页面出发,自己登录,然后等待,得到 cookies,之后带着 cookies 模拟访问页面,得到页面的 response,再对 response 进行分析,将分析内容存入 mysql 中。

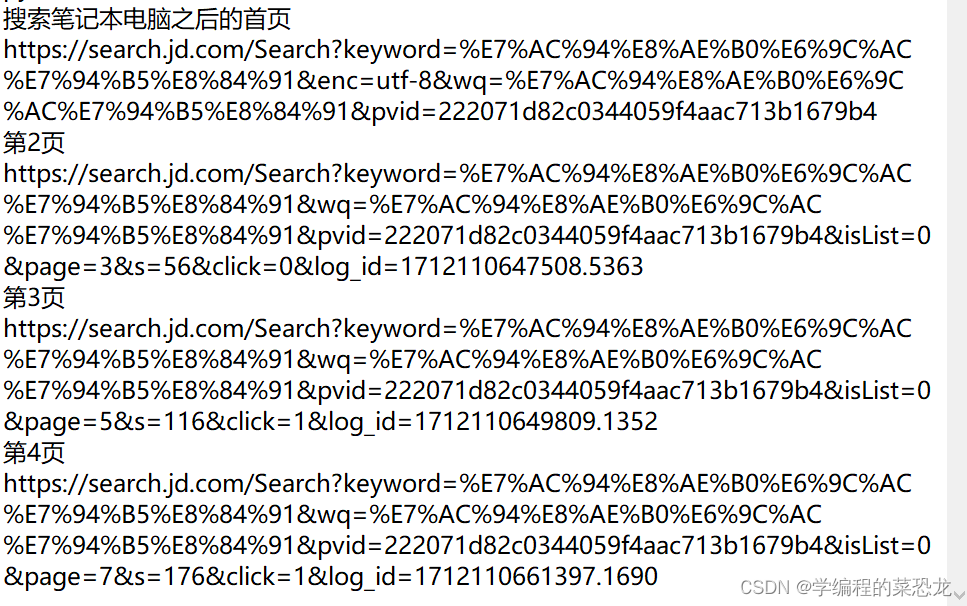

3、分析 url

来到 京东搜索 笔记本电脑,之后观察首页之后的几页的链接,如下:

不难发现,除了首页之外,其他链接的区别是从第四行的 page 开始,大胆猜测链接就到此即可,且规律也显而易见,就可以直接构造链接。

4、开始操作

1、得到 cookies

from selenium import webdriver

import json, time

def get_cookie(url):

browser = webdriver.chrome()

browser.get(url) # 进入登录页面

time.sleep(10) # 停一段时间,自己手动登录

cookies_dict_list = browser.get_cookies() # 获取list的cookies

cookies_json = json.dumps(cookies_dict_list) # 列表转换成字符串保存

with open('cookies.txt', 'w') as f:

f.write(cookies_json)

print('cookies保存成功!')

browser.close()

if __name__ == '__main__':

url_login = 'https://passport.jd.com/uc/login?' # 登录页面url

get_cookie(url_login)之后就可看到 txt 文件中的 cookies(多个),之后模拟登录构造 cookie 时,对照其中的即可.

[{"domain": ".jd.com", "expiry": 1714467009, "httponly": false, "name": "pcsycityid", "path": "/", "samesite": "lax", "secure": false, "value": "cn_420000_420100_0"}, {"domain": ".jd.com", "expiry": 1714726209, "httponly": false, "name": "iploc-djd", "path": "/", "samesite": "lax", "secure": false, "value": "17-1381-0-0"}, {"domain": ".jd.com", "expiry": 1744966205, "httponly": false, "name": "3ab9d23f7a4b3css", "path": "/", "samesite": "lax", "secure": false, "value": "jdd037tjjkumonrnqco62pwgc7odm7jrogoidpqu2bccfmuciqo2i5vaqvw5o4sgrcxdyvrrxxkox4uhigkdz3pkgor4n34aaaampbis6boyaaaaacw2ruf62nz4qj4x"}, {"domain": ".jd.com", "expiry": 1714726209, "httponly": false, "name": "areaid", "path": "/", "samesite": "lax", "secure": false, "value": "17"}, {"domain": ".jd.com", "expiry": 1713864005, "httponly": false, "name": "__jdb", "path": "/", "samesite": "lax", "secure": false, "value": "76161171.2.1713862194482764265847|1.1713862194"}, {"domain": ".jd.com", "httponly": false, "name": "__jdc", "path": "/", "samesite": "lax", "secure": false, "value": "76161171"}, {"domain": ".jd.com", "httponly": true, "name": "pinid", "path": "/", "samesite": "lax", "secure": false, "value": "r20xjxxeiqzxtfkf3qkirrv9-x-f3wj7"}, {"domain": ".jd.com", "expiry": 1716454204, "httponly": true, "name": "_tp", "path": "/", "samesite": "lax", "secure": false, "value": "wixns9ftqdjlj59x04w8g6fl%2fjckyfa3feur52oog9y%3d"}, {"domain": ".jd.com", "expiry": 1713862325, "httponly": false, "name": "_gia_d", "path": "/", "samesite": "lax", "secure": false, "value": "1"}, {"domain": ".jd.com", "expiry": 1716454204, "httponly": false, "name": "npin", "path": "/", "samesite": "lax", "secure": false, "value": "jd_5d8dd3c5fae0c"}, {"domain": ".jd.com", "httponly": true, "name": "flash", "path": "/", "samesite": "none", "secure": true, "value": "2_1tx2gsoqn74rntuh5j9_hiyfyddrkj1lowvbr9joelv6tawyrkhzrefsbwgmamxkufkqskful1zuaa7ejv-yfs5ggqtsibz1nytld2sllyj*"}, {"domain": ".jd.com", "httponly": true, "name": "thor", "path": "/", "samesite": "none", "secure": true, "value": "822a26dcaf32b0e7a92fffbc434794b69743b9393bd9418b46a25947c5dde6c8e11f6b24fc8180a2d134a279b137ace831b4042213d12da43da5286659940540769a4dacfa4aee4920a41da34717f4ab6ebcb6c39f80dd4c370630dd00cc3cdd599ffcd2c528a732610f316b83afbe0e4564a746bc571f7572b8b53a8200b6b807fe0e168428c065e1a5ae60a040942fc62faeba43345764a371d23da468d1a8"}, {"domain": ".jd.com", "expiry": 1716454204, "httponly": false, "name": "pin", "path": "/", "samesite": "lax", "secure": false, "value": "jd_5d8dd3c5fae0c"}, {"domain": ".jd.com", "expiry": 1715158194, "httponly": false, "name": "__jdv", "path": "/", "samesite": "lax", "secure": false, "value": "95931165|direct|-|none|-|1713862194483"}, {"domain": ".jd.com", "expiry": 1716454204, "httponly": false, "name": "logintype", "path": "/", "samesite": "lax", "secure": false, "value": "qq"}, {"domain": "www.jd.com", "expiry": 1745398205, "httponly": false, "name": "o2state", "path": "/", "samesite": "lax", "secure": false, "value": "{%22webp%22:true%2c%22avif%22:true}"}, {"domain": ".jd.com", "expiry": 1729414205, "httponly": false, "name": "__jda", "path": "/", "samesite": "lax", "secure": false, "value": "76161171.1713862194482764265847.1713862194.1713862194.1713862194.1"}, {"domain": ".jd.com", "expiry": 1716454204, "httponly": true, "name": "_pst", "path": "/", "samesite": "lax", "secure": false, "value": "jd_5d8dd3c5fae0c"}, {"domain": ".jd.com", "expiry": 1744966205, "httponly": false, "name": "3ab9d23f7a4b3c9b", "path": "/", "samesite": "lax", "secure": false, "value": "7tjjkumonrnqco62pwgc7odm7jrogoidpqu2bccfmuciqo2i5vaqvw5o4sgrcxdyvrrxxkox4uhigkdz3pkgor4n34"}, {"domain": ".jd.com", "expiry": 1729414213, "httponly": false, "name": "__jdu", "path": "/", "samesite": "lax", "secure": false, "value": "1713862194482764265847"}, {"domain": ".jd.com", "expiry": 1716454204, "httponly": false, "name": "unick", "path": "/", "samesite": "lax", "secure": false, "value": "jxnu-2019"}, {"domain": ".jd.com", "httponly": false, "name": "wlfstk_smdl", "path": "/", "samesite": "lax", "secure": false, "value": "vfk8t4rwomo4ptggg0jbjy5e7h4zf18b"}]2、访问页面,得到 response

当 selenium 访问时,若页面是笔记本电脑页,直接进行爬取;若不是,即在登录页,构造 cookie,添加,登录;

js 语句是使页面滑倒最低端,等待数据包刷新出来,才能得到完整的页面 response(这里的 resposne 相当于使用 request 时候的 response.text );

def get_html(url): # 此 url 为分析的笔记本电脑页面的 url

browser = webdriver.chrome()

browser.get(url)

if browser.current_url == url:

js = "var q=document.documentelement.scrolltop=100000"

browser.execute_script(js)

time.sleep(2)

responses = browser.page_source

browser.close()

return responses

else:

with open('cookies.txt', 'r', encoding='utf8') as f:

listcookies = json.loads(f.read())

for cookie in listcookies:

cookie_dict = {

'domain': '.jd.com',

'name': cookie.get('name'),

'value': cookie.get('value'),

"expires": '1746673777', # 表示 cookies到期时间

'path': '/', # expiry必须为整数,所以使用 expires

'httponly': false,

"samesite": "lax",

'secure': false

}

browser.add_cookie(cookie_dict) # 将获得的所有 cookies 都加入

time.sleep(1)

browser.get(url)

js = "var q=document.documentelement.scrolltop=100000"

browser.execute_script(js)

time.sleep(2)

response = browser.page_source

browser.close()

return response

cookies 到期时间是用时间戳来记录的,可用工具查看时间戳(unix timestamp)转换工具 - 在线工具

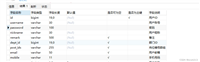

3、解析页面

这里我使用了 xpath 的解析方法,并将结果构造成字典列表,输出。

def parse(response):

html = etree.html(response)

products_lis = html.xpath('//li[contains(@class, "gl-item")]')

products_list = []

for product_li in products_lis:

product = {}

price = product_li.xpath('./div/div[@class="p-price"]/strong/i/text()')

name = product_li.xpath('./div/div[contains(@class, "p-name")]/a/em/text()')

comment = product_li.xpath('./div/div[@class="p-commit"]/strong/a/text()')

product['price'] = price[0]

product['name'] = name[0].strip()

product['comment'] = comment[0]

products_list.append(product)

print(products_list)

print(len(products_list))4、存入 mysql

上述已经得到每一个商品数据的字典,所以直接对字典或字典列表进行存储即可。

参考链接:4.4 mysql存储-csdn博客 5.2 ajax 数据爬取实战-csdn博客

5、1-3步总代码

from selenium import webdriver

import json, time

from lxml import etree

def get_cookie(url):

browser = webdriver.chrome()

browser.get(url) # 进入登录页面

time.sleep(10) # 停一段时间,自己手动登录

cookies_dict_list = browser.get_cookies() # 获取list的cookies

cookies_json = json.dumps(cookies_dict_list) # 转换成字符串保存

with open('cookies.txt', 'w') as f:

f.write(cookies_json)

print('cookies保存成功!')

browser.close()

def get_html(url):

browser = webdriver.chrome()

browser.get(url)

if browser.current_url == url:

js = "var q=document.documentelement.scrolltop=100000"

browser.execute_script(js)

time.sleep(2)

responses = browser.page_source

browser.close()

return responses

else:

with open('cookies.txt', 'r', encoding='utf8') as f:

listcookies = json.loads(f.read())

for cookie in listcookies:

cookie_dict = {

'domain': '.jd.com',

'name': cookie.get('name'),

'value': cookie.get('value'),

"expires": '1746673777',

'path': '/',

'httponly': false,

"samesite": "lax",

'secure': false

}

browser.add_cookie(cookie_dict) # 将获得的所有 cookies 都加入

time.sleep(1)

browser.get(url)

js = "var q=document.documentelement.scrolltop=100000"

browser.execute_script(js)

time.sleep(2)

response = browser.page_source

browser.close()

return response

def parse(response):

html = etree.html(response)

products_lis = html.xpath('//li[contains(@class, "gl-item")]')

products_list = []

for product_li in products_lis:

product = {}

price = product_li.xpath('./div/div[@class="p-price"]/strong/i/text()')

name = product_li.xpath('./div/div[contains(@class, "p-name")]/a/em/text()')

comment = product_li.xpath('./div/div[@class="p-commit"]/strong/a/text()')

product['price'] = price[0]

product['name'] = name[0].strip()

product['comment'] = comment[0]

products_list.append(product)

print(products_list)

print(len(products_list))

if __name__ == '__main__':

keyword = '笔记本电脑'

url_login = 'https://passport.jd.com/uc/login?' # 登录页面url

get_cookie(url_login)

for page in range(1, 20, 2):

base_url = f'https://search.jd.com/search?keyword={keyword}&wq=%e7%ac%94%e8%ae%b0%e6%9c%ac%e7%94%b5%e8%84%91&pvid=222071d82c0344059f4aac713b1679b4&islist=0&page={page}'

response = get_html(base_url)

parse(response)

文章参考:python爬虫之使用selenium爬取京东商品信息并把数据保存至mongodb数据库_seleniu获取京东cookie-csdn博客

文章到此结束,本人新手,若有错误,欢迎指正;若有疑问,欢迎讨论。若文章对你有用,点个小赞鼓励一下,谢谢大家,一起加油吧!

发表评论