AudioTrack's write method has several overloads:

//frameworks/base/media/java/android/media/AudioTrack.java

public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull byte[] audioData, int offsetInBytes, int sizeInBytes) {
        return write(audioData, offsetInBytes, sizeInBytes, WRITE_BLOCKING);
    }
}

//frameworks/base/media/java/android/media/AudioTrack.java

public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull byte[] audioData, int offsetInBytes, int sizeInBytes,
            @WriteMode int writeMode) {
        // Note: we allow writes of extended integers and compressed formats from a byte array.
        if (mState == STATE_UNINITIALIZED || mAudioFormat == AudioFormat.ENCODING_PCM_FLOAT) {
            return ERROR_INVALID_OPERATION;
        }
        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }
        if ( (audioData == null) || (offsetInBytes < 0 ) || (sizeInBytes < 0)
                || (offsetInBytes + sizeInBytes < 0) // detect integer overflow
                || (offsetInBytes + sizeInBytes > audioData.length)) {
            return ERROR_BAD_VALUE;
        }
        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }
        final int ret = native_write_byte(audioData, offsetInBytes, sizeInBytes, mAudioFormat,
                writeMode == WRITE_BLOCKING);
        if ((mDataLoadMode == MODE_STATIC)
                && (mState == STATE_NO_STATIC_DATA)
                && (ret > 0)) {
            // benign race with respect to other APIs that read mState
            mState = STATE_INITIALIZED;
        }
        return ret;
    }
}
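The range check in this overload depends on int wrap-around: when offsetInBytes + sizeInBytes exceeds Integer.MAX_VALUE, the sum turns negative, and the `< 0` test catches it. The following is a minimal standalone sketch of that check only. The class and method names are hypothetical and are not part of AudioTrack.

```java
class RangeCheckSketch {
    /** Returns true when [offset, offset + size) fits inside an array of the given length. */
    public static boolean isValidRange(int length, int offset, int size) {
        if (offset < 0 || size < 0) {
            return false;
        }
        // The sum of two non-negative ints wraps to a negative value on overflow.
        if (offset + size < 0) {
            return false;
        }
        return offset + size <= length;
    }

    public static void main(String[] args) {
        System.out.println(isValidRange(1024, 0, 1024));               // whole array
        System.out.println(isValidRange(1024, 512, 1024));             // runs past the end
        System.out.println(isValidRange(1024, Integer.MAX_VALUE, 1));  // sum overflows
    }
}
```

Without the overflow test, the third call would pass the `> length` comparison, because the wrapped sum is negative and therefore smaller than any array length.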

//frameworks/base/media/java/android/media/AudioTrack.java

public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull short[] audioData, int offsetInShorts, int sizeInShorts) {
        return write(audioData, offsetInShorts, sizeInShorts, WRITE_BLOCKING);
    }
}

//frameworks/base/media/java/android/media/AudioTrack.java

public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull short[] audioData, int offsetInShorts, int sizeInShorts,
            @WriteMode int writeMode) {
        if (mState == STATE_UNINITIALIZED
                || mAudioFormat == AudioFormat.ENCODING_PCM_FLOAT
                // use ByteBuffer or byte[] instead for later encodings
                || mAudioFormat > AudioFormat.ENCODING_LEGACY_SHORT_ARRAY_THRESHOLD) {
            return ERROR_INVALID_OPERATION;
        }
        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }
        if ( (audioData == null) || (offsetInShorts < 0 ) || (sizeInShorts < 0)
                || (offsetInShorts + sizeInShorts < 0) // detect integer overflow
                || (offsetInShorts + sizeInShorts > audioData.length)) {
            return ERROR_BAD_VALUE;
        }
        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }
        final int ret = native_write_short(audioData, offsetInShorts, sizeInShorts, mAudioFormat,
                writeMode == WRITE_BLOCKING);
        if ((mDataLoadMode == MODE_STATIC)
                && (mState == STATE_NO_STATIC_DATA)
                && (ret > 0)) {
            // benign race with respect to other APIs that read mState
            mState = STATE_INITIALIZED;
        }
        return ret;
    }
}

//frameworks/base/media/java/android/media/AudioTrack.java

public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull float[] audioData, int offsetInFloats, int sizeInFloats,
            @WriteMode int writeMode) {
        if (mState == STATE_UNINITIALIZED) {
            Log.e(TAG, "AudioTrack.write() called in invalid state STATE_UNINITIALIZED");
            return ERROR_INVALID_OPERATION;
        }
        if (mAudioFormat != AudioFormat.ENCODING_PCM_FLOAT) {
            Log.e(TAG, "AudioTrack.write(float[] ...) requires format ENCODING_PCM_FLOAT");
            return ERROR_INVALID_OPERATION;
        }
        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }
        if ( (audioData == null) || (offsetInFloats < 0 ) || (sizeInFloats < 0)
                || (offsetInFloats + sizeInFloats < 0) // detect integer overflow
                || (offsetInFloats + sizeInFloats > audioData.length)) {
            Log.e(TAG, "AudioTrack.write() called with invalid array, offset, or size");
            return ERROR_BAD_VALUE;
        }
        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }
        final int ret = native_write_float(audioData, offsetInFloats, sizeInFloats, mAudioFormat,
                writeMode == WRITE_BLOCKING);
        if ((mDataLoadMode == MODE_STATIC)
                && (mState == STATE_NO_STATIC_DATA)
                && (ret > 0)) {
            // benign race with respect to other APIs that read mState
            mState = STATE_INITIALIZED;
        }
        return ret;
    }
}

//frameworks/base/media/java/android/media/AudioTrack.java

public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull ByteBuffer audioData, int sizeInBytes,
            @WriteMode int writeMode) {
        if (mState == STATE_UNINITIALIZED) {
            Log.e(TAG, "AudioTrack.write() called in invalid state STATE_UNINITIALIZED");
            return ERROR_INVALID_OPERATION;
        }
        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }
        if ( (audioData == null) || (sizeInBytes < 0) || (sizeInBytes > audioData.remaining())) {
            Log.e(TAG, "AudioTrack.write() called with invalid size (" + sizeInBytes + ") value");
            return ERROR_BAD_VALUE;
        }
        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }
        int ret = 0;
        if (audioData.isDirect()) {
            ret = native_write_native_bytes(audioData,
                    audioData.position(), sizeInBytes, mAudioFormat,
                    writeMode == WRITE_BLOCKING);
        } else {
            ret = native_write_byte(NioUtils.unsafeArray(audioData),
                    NioUtils.unsafeArrayOffset(audioData) + audioData.position(),
                    sizeInBytes, mAudioFormat,
                    writeMode == WRITE_BLOCKING);
        }
        if ((mDataLoadMode == MODE_STATIC)
                && (mState == STATE_NO_STATIC_DATA)
                && (ret > 0)) {
            // benign race with respect to other APIs that read mState
            mState = STATE_INITIALIZED;
        }
        if (ret > 0) {
            audioData.position(audioData.position() + ret);
        }
        return ret;
    }
}
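The ByteBuffer overload differs from the array overloads in two ways: it resolves the backing array of a heap buffer before calling into native code, and it advances the buffer's position by the number of bytes actually written. The sketch below illustrates only the heap-buffer branch under stated assumptions. It uses the public ByteBuffer.array() and arrayOffset() in place of the framework's NioUtils helpers, fakeNativeWrite is a hypothetical stand-in for the native call, and the -2 return value is a placeholder for ERROR_BAD_VALUE.

```java
import java.nio.ByteBuffer;

class ByteBufferWriteSketch {
    /** Hypothetical sink: accepts at most sinkCapacity bytes per call. */
    public static int fakeNativeWrite(byte[] src, int offset, int size, int sinkCapacity) {
        return Math.min(size, sinkCapacity);
    }

    /** Validates the request, writes from the backing array, then advances position. */
    public static int write(ByteBuffer audioData, int sizeInBytes, int sinkCapacity) {
        if (audioData == null || sizeInBytes < 0 || sizeInBytes > audioData.remaining()) {
            return -2; // placeholder for ERROR_BAD_VALUE
        }
        if (!audioData.hasArray()) {
            throw new IllegalArgumentException("this sketch handles heap buffers only");
        }
        int ret = fakeNativeWrite(audioData.array(),
                audioData.arrayOffset() + audioData.position(), sizeInBytes, sinkCapacity);
        if (ret > 0) {
            // A partial write leaves the unwritten bytes in the buffer for the next call.
            audioData.position(audioData.position() + ret);
        }
        return ret;
    }

    public static void main(String[] args) {
        ByteBuffer buf = ByteBuffer.wrap(new byte[100]);
        int written = write(buf, 100, 64);
        System.out.println(written + " written, " + buf.remaining() + " remaining");
    }
}
```

Because position moves forward by the return value, a caller can simply call write again with buf.remaining() to submit the rest of the data.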

//frameworks/base/media/java/android/media/AudioTrack.java

public class AudioTrack extends PlayerBase implements AudioRouting, VolumeAutomation
{
    public int write(@NonNull ByteBuffer audioData, int sizeInBytes,
            @WriteMode int writeMode, long timestamp) {
        if (mState == STATE_UNINITIALIZED) {
            Log.e(TAG, "AudioTrack.write() called in invalid state STATE_UNINITIALIZED");
            return ERROR_INVALID_OPERATION;
        }
        if ((writeMode != WRITE_BLOCKING) && (writeMode != WRITE_NON_BLOCKING)) {
            Log.e(TAG, "AudioTrack.write() called with invalid blocking mode");
            return ERROR_BAD_VALUE;
        }
        if (mDataLoadMode != MODE_STREAM) {
            Log.e(TAG, "AudioTrack.write() with timestamp called for non-streaming mode track");
            return ERROR_INVALID_OPERATION;
        }
        if ((mAttributes.getFlags() & AudioAttributes.FLAG_HW_AV_SYNC) == 0) {
            Log.d(TAG, "AudioTrack.write() called on a regular AudioTrack. Ignoring pts...");
            return write(audioData, sizeInBytes, writeMode);
        }
        if ((audioData == null) || (sizeInBytes < 0) || (sizeInBytes > audioData.remaining())) {
            Log.e(TAG, "AudioTrack.write() called with invalid size (" + sizeInBytes + ") value");
            return ERROR_BAD_VALUE;
        }
        if (!blockUntilOffloadDrain(writeMode)) {
            return 0;
        }
        // create timestamp header if none exists
        if (mAvSyncHeader == null) {
            mAvSyncHeader = ByteBuffer.allocate(mOffset);
            mAvSyncHeader.order(ByteOrder.BIG_ENDIAN);
            mAvSyncHeader.putInt(0x55550002);
        }
        if (mAvSyncBytesRemaining == 0) {
            mAvSyncHeader.putInt(4, sizeInBytes);
            mAvSyncHeader.putLong(8, timestamp);
            mAvSyncHeader.putInt(16, mOffset);
            mAvSyncHeader.position(0);
            mAvSyncBytesRemaining = sizeInBytes;
        }
        // write timestamp header if not completely written already
        int ret = 0;
        if (mAvSyncHeader.remaining() != 0) {
            ret = write(mAvSyncHeader, mAvSyncHeader.remaining(), writeMode);
            if (ret < 0) {
                Log.e(TAG, "AudioTrack.write() could not write timestamp header!");
                mAvSyncHeader = null;
                mAvSyncBytesRemaining = 0;
                return ret;
            }
            if (mAvSyncHeader.remaining() > 0) {
                Log.v(TAG, "AudioTrack.write() partial timestamp header written.");
                return 0;
            }
        }
        // write audio data
        int sizeToWrite = Math.min(mAvSyncBytesRemaining, sizeInBytes);
        ret = write(audioData, sizeToWrite, writeMode);
        if (ret < 0) {
            Log.e(TAG, "AudioTrack.write() could not write audio data!");
            mAvSyncHeader = null;
            mAvSyncBytesRemaining = 0;
            return ret;
        }
        mAvSyncBytesRemaining -= ret;
        return ret;
    }
}
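The timestamp overload prefixes each chunk of audio data with a big-endian header whose field offsets can be read off the putInt/putLong calls. The sketch below rebuilds that layout in isolation. HEADER_SIZE = 20 is an assumption: it is the smallest size that fits the four fields, whereas the framework code takes the size from mOffset. The class and method names are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

class AvSyncHeaderSketch {
    // Assumption: 4-byte magic + 4-byte size + 8-byte timestamp + 4-byte offset.
    public static final int HEADER_SIZE = 20;

    public static ByteBuffer buildHeader(int sizeInBytes, long timestamp) {
        ByteBuffer header = ByteBuffer.allocate(HEADER_SIZE);
        header.order(ByteOrder.BIG_ENDIAN);
        header.putInt(0x55550002);        // bytes 0..3: magic word written once at creation
        header.putInt(4, sizeInBytes);    // bytes 4..7: size of the audio payload that follows
        header.putLong(8, timestamp);     // bytes 8..15: presentation timestamp
        header.putInt(16, HEADER_SIZE);   // bytes 16..19: header length (mOffset in the framework)
        header.position(0);               // rewind so the whole header is written out
        return header;
    }

    public static void main(String[] args) {
        ByteBuffer h = buildHeader(4096, 1_000_000_000L);
        StringBuilder hex = new StringBuilder();
        while (h.hasRemaining()) {
            hex.append(String.format("%02x", h.get()));
        }
        System.out.println(hex);
    }
}
```

The absolute put calls do not move the buffer position, which is why the framework code can refill the size and timestamp fields for each new chunk and then reset position to 0 before writing the header again.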

The overloads above mainly end up calling the following native methods:

native_write_byte

native_write_short

native_write_float

Each is analyzed in turn below:

native_write_byte

native_write_byte is a native method:

private native final int native_write_byte(byte[] audioData,
        int offsetInBytes, int sizeInBytes, int format,
        boolean isBlocking);

Looking it up in the JNI method table in android_media_AudioTrack.cpp gives:

{"native_write_byte", "([BIIIZ)I", (void *)android_media_AudioTrack_writeArray<jbyteArray>},

so the call is dispatched to the android_media_AudioTrack_writeArray method:

//frameworks/base/core/jni/android_media_AudioTrack.cpp

template <typename T>
static jint android_media_AudioTrack_writeArray(JNIEnv *env, jobject thiz,
                                                T javaAudioData,
                                                jint offsetInSamples, jint sizeInSamples,
                                                jint javaAudioFormat,
                                                jboolean isWriteBlocking) {
    //ALOGV("android_media_AudioTrack_writeArray(offset=%d, sizeInSamples=%d) called",
    //        offsetInSamples, sizeInSamples);
    sp<AudioTrack> lpTrack = getAudioTrack(env, thiz);
    if (lpTrack == NULL) {
        jniThrowException(env, "java/lang/IllegalStateException",
                "Unable to retrieve AudioTrack pointer for write()");
        return (jint)AUDIO_JAVA_INVALID_OPERATION;
    }
    if (javaAudioData == NULL) {
        ALOGE("NULL java array of audio data to play");
        return (jint)AUDIO_JAVA_BAD_VALUE;
    }
    // NOTE: We may use GetPrimitiveArrayCritical() when the JNI implementation changes in such
    // a way that it becomes much more efficient. When doing so, we will have to prevent the
    // AudioSystem callback to be called while in critical section (in case of media server
    // process crash for instance)

    // get the pointer for the audio data from the java array
    auto cAudioData = envGetArrayElements(env, javaAudioData, NULL);
    if (cAudioData == NULL) {
        ALOGE("Error retrieving source of audio data to play");
        return (jint)AUDIO_JAVA_BAD_VALUE; // out of memory or no data to load
    }
    // hand the data to writeToTrack
    jint samplesWritten = writeToTrack(lpTrack, javaAudioFormat, cAudioData,
            offsetInSamples, sizeInSamples, isWriteBlocking == JNI_TRUE /* blocking */);
    envReleaseArrayElements(env, javaAudioData, cAudioData, 0);
    //ALOGV("write wrote %d (tried %d) samples in the native AudioTrack with offset %d",
    //        (int)samplesWritten, (int)(sizeInSamples), (int)offsetInSamples);
    return samplesWritten;
}

native_write_short

native_write_short is a native method:

private native final int native_write_short(short[] audioData,
        int offsetInShorts, int sizeInShorts, int format,
        boolean isBlocking);

Looking it up in the JNI method table in android_media_AudioTrack.cpp gives:

{"native_write_short", "([SIIIZ)I",(void *)android_media_AudioTrack_writeArray<jshortArray>},

so it is also dispatched to android_media_AudioTrack_writeArray.

native_write_float

native_write_float is a native method as well. Its entry in the same JNI method table is:

{"native_write_float", "([FIIIZ)I",(void *)android_media_AudioTrack_writeArray<jfloatArray>},

so it too is dispatched to android_media_AudioTrack_writeArray.

writeToTrack

android_media_AudioTrack_writeArray calls the writeToTrack method:

//frameworks/base/core/jni/android_media_AudioTrack.cpp

template <typename T>
static jint writeToTrack(const sp<AudioTrack>& track, jint audioFormat, const T *data,
                         jint offsetInSamples, jint sizeInSamples, bool blocking) {
    // give the data to the native AudioTrack object (the data starts at the offset)
    ssize_t written = 0;
    // regular write() or copy the data to the AudioTrack's shared memory?
    size_t sizeInBytes = sizeInSamples * sizeof(T);
    if (track->sharedBuffer() == 0) {
        // streaming mode: call the native AudioTrack's write method
        written = track->write(data + offsetInSamples, sizeInBytes, blocking);
        // for compatibility with earlier behavior of write(), return 0 in this case
        if (written == (ssize_t) WOULD_BLOCK) {
            written = 0;
        }
    } else {
        // writing to shared memory, check for capacity
        if ((size_t)sizeInBytes > track->sharedBuffer()->size()) {
            sizeInBytes = track->sharedBuffer()->size();
        }
        memcpy(track->sharedBuffer()->unsecurePointer(), data + offsetInSamples, sizeInBytes);
        written = sizeInBytes;
    }
    if (written >= 0) {
        return written / sizeof(T);
    }
    return interpretWriteSizeError(written);
}
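writeToTrack receives sizes in samples, works in bytes internally, and reports samples again. In the shared-buffer (static) case it also clamps the copy to the buffer's capacity. The arithmetic can be sketched as follows. The names are hypothetical, and bytesPerSample plays the role of sizeof(T) in the template.

```java
class SampleMathSketch {
    /** Bytes copied into a shared buffer: the request in bytes, clamped to the capacity. */
    public static long bytesToCopy(int sizeInSamples, int bytesPerSample, long sharedBufferBytes) {
        long sizeInBytes = (long) sizeInSamples * bytesPerSample;
        return Math.min(sizeInBytes, sharedBufferBytes);
    }

    /** Converts a non-negative byte count back to whole samples, as the JNI layer returns it. */
    public static int samplesWritten(long writtenBytes, int bytesPerSample) {
        return (int) (writtenBytes / bytesPerSample);
    }

    public static void main(String[] args) {
        // 1000 16-bit samples offered to a 1024-byte shared buffer.
        long copied = bytesToCopy(1000, 2, 1024);
        System.out.println(copied + " bytes copied, "
                + samplesWritten(copied, 2) + " samples reported");
    }
}
```

This is why the Java-level return value of write(short[] ...) or write(float[] ...) counts shorts or floats, not bytes.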

write

writeToTrack calls the native AudioTrack's write method:

//frameworks/av/media/libaudioclient/AudioTrack.cpp

ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)
{
    if (mTransfer != TRANSFER_SYNC && mTransfer != TRANSFER_SYNC_NOTIF_CALLBACK) {
        return INVALID_OPERATION;
    }
    if (isDirect()) {
        AutoMutex lock(mLock);
        int32_t flags = android_atomic_and(
                ~(CBLK_UNDERRUN | CBLK_LOOP_CYCLE | CBLK_LOOP_FINAL | CBLK_BUFFER_END),
                &mCblk->mFlags);
        if (flags & CBLK_INVALID) {
            return DEAD_OBJECT;
        }
    }
    if (ssize_t(userSize) < 0 || (buffer == NULL && userSize != 0)) {
        // Validation: user is most-likely passing an error code, and it would
        // make the return value ambiguous (actualSize vs error).
        ALOGE("%s(%d): AudioTrack::write(buffer=%p, size=%zu (%zd)",
                __func__, mPortId, buffer, userSize, userSize);
        return BAD_VALUE;
    }
    size_t written = 0;
    Buffer audioBuffer;
    while (userSize >= mFrameSize) {
        audioBuffer.frameCount = userSize / mFrameSize;
        // call obtainBuffer to get a writable region of the track buffer
        status_t err = obtainBuffer(&audioBuffer,
                blocking ? &ClientProxy::kForever : &ClientProxy::kNonBlocking);
        if (err < 0) {
            if (written > 0) {
                break;
            }
            if (err == TIMED_OUT || err == -EINTR) {
                err = WOULD_BLOCK;
            }
            return ssize_t(err);
        }
        size_t toWrite = audioBuffer.size();
        memcpy(audioBuffer.raw, buffer, toWrite);
        buffer = ((const char *) buffer) + toWrite;
        userSize -= toWrite;
        written += toWrite;
        releaseBuffer(&audioBuffer);
    }
    if (written > 0) {
        mFramesWritten += written / mFrameSize;
        if (mTransfer == TRANSFER_SYNC_NOTIF_CALLBACK) {
            const sp<AudioTrackThread> t = mAudioTrackThread;
            if (t != 0) {
                // causes wake up of the playback thread, that will callback the client for
                // more data (with EVENT_CAN_WRITE_MORE_DATA) in processAudioBuffer()
                t->wake(); // wake up the playback thread
            }
        }
    }
    return written;
}
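The core of AudioTrack::write is an obtain, copy, release loop that works in whole frames: it keeps going while at least one full frame of user data remains, and each pass copies however many frames the obtained buffer could hold. The sketch below imitates that loop against a hypothetical in-memory sink. The sink model (maxFramesPerObtain, totalFreeFrames) is an assumption made for illustration, and where the real method returns an error such as WOULD_BLOCK when nothing could be written, this sketch simply returns 0.

```java
class WriteLoopSketch {
    /**
     * Copies whole frames from src into a simulated sink and returns the bytes written.
     * Each "obtain" grants at most maxFramesPerObtain frames; the sink holds
     * totalFreeFrames frames in total.
     */
    public static int write(byte[] src, int frameSize, int maxFramesPerObtain, int totalFreeFrames) {
        byte[] sink = new byte[totalFreeFrames * frameSize];
        int userSize = src.length;
        int srcPos = 0;
        int written = 0;
        int freeFrames = totalFreeFrames;
        while (userSize >= frameSize) {
            int wanted = userSize / frameSize;                 // audioBuffer.frameCount request
            int granted = Math.min(wanted, Math.min(maxFramesPerObtain, freeFrames));
            if (granted == 0) {
                break;                                         // nothing available right now
            }
            int toWrite = granted * frameSize;                 // audioBuffer.size()
            System.arraycopy(src, srcPos, sink, written, toWrite);
            srcPos += toWrite;
            userSize -= toWrite;
            written += toWrite;
            freeFrames -= granted;                             // stands in for releaseBuffer()
        }
        return written;
    }

    public static void main(String[] args) {
        // 10 bytes with 4-byte frames: two frames fit, the trailing 2 bytes are left unwritten.
        System.out.println(write(new byte[10], 4, 1, 8));
    }
}
```

One consequence visible in the sketch is that a trailing partial frame is never consumed, so callers should submit data in multiples of the frame size.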

For reference, the waitCount-based overload of obtainBuffer converts a wait count into a timeout and then delegates to the timespec-based overload (write passes a timespec and calls that overload directly):

//frameworks/av/media/libaudioclient/AudioTrack.cpp

status_t AudioTrack::obtainBuffer(Buffer* audioBuffer, int32_t waitCount, size_t *nonContig)
{
    if (audioBuffer == NULL) {
        if (nonContig != NULL) {
            *nonContig = 0;
        }
        return BAD_VALUE;
    }
    if (mTransfer != TRANSFER_OBTAIN) {
        audioBuffer->frameCount = 0;
        audioBuffer->mSize = 0;
        audioBuffer->raw = NULL;
        if (nonContig != NULL) {
            *nonContig = 0;
        }
        return INVALID_OPERATION;
    }
    const struct timespec *requested;
    struct timespec timeout;
    if (waitCount == -1) {
        requested = &ClientProxy::kForever;
    } else if (waitCount == 0) {
        requested = &ClientProxy::kNonBlocking;
    } else if (waitCount > 0) {
        time_t ms = WAIT_PERIOD_MS * (time_t) waitCount;
        timeout.tv_sec = ms / 1000;
        timeout.tv_nsec = (ms % 1000) * 1000000;
        requested = &timeout;
    } else {
        ALOGE("%s(%d): invalid waitCount %d", __func__, mPortId, waitCount);
        requested = NULL;
    }
    return obtainBuffer(audioBuffer, requested, NULL /*elapsed*/, nonContig);
}
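For a positive waitCount, this overload turns the count into a timespec by multiplying with WAIT_PERIOD_MS and splitting the result into seconds and nanoseconds. A small sketch of that arithmetic follows. The value 10 for WAIT_PERIOD_MS is an assumption made for the example, and the class and method names are hypothetical.

```java
class WaitTimeoutSketch {
    // Assumption: 10 ms per wait period; the real constant is defined in AudioTrack.cpp.
    public static final long WAIT_PERIOD_MS = 10;

    /** Returns {tv_sec, tv_nsec} for a positive waitCount. */
    public static long[] toTimespec(int waitCount) {
        if (waitCount <= 0) {
            throw new IllegalArgumentException("only positive wait counts map to a finite timeout");
        }
        long ms = WAIT_PERIOD_MS * waitCount;
        long seconds = ms / 1000;
        long nanos = (ms % 1000) * 1_000_000L;
        return new long[] { seconds, nanos };
    }

    public static void main(String[] args) {
        long[] ts = toTimespec(150); // 150 periods of 10 ms
        System.out.println(ts[0] + " s + " + ts[1] + " ns");
    }
}
```

The two special values are handled before this arithmetic: -1 selects ClientProxy::kForever and 0 selects ClientProxy::kNonBlocking.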

The timespec-based overload of obtainBuffer does the actual work through mProxy:

sp<AudioTrackClientProxy> mProxy; // primary owner of the memory

//frameworks/av/media/libaudioclient/AudioTrack.cpp

status_t AudioTrack::obtainBuffer(Buffer* audioBuffer, const struct timespec *requested,
        struct timespec *elapsed, size_t *nonContig)
{
    // previous and new IAudioTrack sequence numbers are used to detect track re-creation
    uint32_t oldSequence = 0;
    Proxy::Buffer buffer;
    status_t status = NO_ERROR;
    static const int32_t kMaxTries = 5;
    int32_t tryCounter = kMaxTries;
    do {
        // obtainBuffer() is called with mutex unlocked, so keep extra references to these fields to
        // keep them from going away if another thread re-creates the track during obtainBuffer()
        sp<AudioTrackClientProxy> proxy;
        sp<IMemory> iMem;
        { // start of lock scope
            AutoMutex lock(mLock);
            uint32_t newSequence = mSequence;
            // did previous obtainBuffer() fail due to media server death or voluntary invalidation?
            if (status == DEAD_OBJECT) {
                // re-create track, unless someone else has already done so
                if (newSequence == oldSequence) {
                    status = restoreTrack_l("obtainBuffer");
                    if (status != NO_ERROR) {
                        buffer.mFrameCount = 0;
                        buffer.mRaw = NULL;
                        buffer.mNonContig = 0;
                        break;
                    }
                }
            }
            oldSequence = newSequence;
            if (status == NOT_ENOUGH_DATA) {
                restartIfDisabled();
            }
            // Keep the extra references
            proxy = mProxy;
            iMem = mCblkMemory;
            if (mState == STATE_STOPPING) {
                status = -EINTR;
                buffer.mFrameCount = 0;
                buffer.mRaw = NULL;
                buffer.mNonContig = 0;
                break;
            }
            // Non-blocking if track is stopped or paused
            if (mState != STATE_ACTIVE) {
                requested = &ClientProxy::kNonBlocking;
            }
        } // end of lock scope
        buffer.mFrameCount = audioBuffer->frameCount;
        // FIXME starts the requested timeout and elapsed over from scratch
        status = proxy->obtainBuffer(&buffer, requested, elapsed);
    } while (((status == DEAD_OBJECT) || (status == NOT_ENOUGH_DATA)) && (tryCounter-- > 0));
    audioBuffer->frameCount = buffer.mFrameCount;
    audioBuffer->mSize = buffer.mFrameCount * mFrameSize;
    audioBuffer->raw = buffer.mRaw;
    audioBuffer->sequence = oldSequence;
    if (nonContig != NULL) {
        *nonContig = buffer.mNonContig;
    }
    return status;
}
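The do/while loop above retries only for the two recoverable statuses, and because the counter is tested with a post-decrement after the first attempt, it can run up to kMaxTries + 1 times. The sketch below isolates that control flow. It leaves out the track restoration and locking, the numeric status values are placeholders chosen for the example, and the names are hypothetical.

```java
import java.util.function.IntSupplier;

class ObtainRetrySketch {
    // Placeholder status values for the sketch only.
    public static final int NO_ERROR = 0;
    public static final int DEAD_OBJECT = -32;
    public static final int NOT_ENOUGH_DATA = -61;
    public static final int K_MAX_TRIES = 5;

    /** Repeats attempt while it reports a retryable status and tries remain. */
    public static int obtainWithRetry(IntSupplier attempt) {
        int tryCounter = K_MAX_TRIES;
        int status;
        do {
            status = attempt.getAsInt();
        } while ((status == DEAD_OBJECT || status == NOT_ENOUGH_DATA) && (tryCounter-- > 0));
        return status;
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Fails twice with DEAD_OBJECT, then succeeds on the third attempt.
        int status = obtainWithRetry(() -> ++calls[0] < 3 ? DEAD_OBJECT : NO_ERROR);
        System.out.println("status=" + status + " after " + calls[0] + " attempts");
    }
}
```

Any other status, including success and timeouts, leaves the loop immediately and is returned to the caller unchanged.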

When the previous attempt returned DEAD_OBJECT, obtainBuffer calls AudioTrack's restoreTrack_l method:

sp<media::IAudioTrack> mAudioTrack;

//frameworks/av/media/libaudioclient/AudioTrack.cpp

status_t AudioTrack::restoreTrack_l(const char *from)
{
    status_t result = NO_ERROR; // logged: make sure to set this before returning.
    const int64_t beginNs = systemTime();
    mediametrics::Defer defer([&] {
        mediametrics::LogItem(mMetricsId)
            .set(AMEDIAMETRICS_PROP_EVENT, AMEDIAMETRICS_PROP_EVENT_VALUE_RESTORE)
            .set(AMEDIAMETRICS_PROP_EXECUTIONTIMENS, (int64_t)(systemTime() - beginNs))
            .set(AMEDIAMETRICS_PROP_STATE, stateToString(mState))
            .set(AMEDIAMETRICS_PROP_STATUS, (int32_t)result)
            .set(AMEDIAMETRICS_PROP_WHERE, from)
            .record(); });
    ALOGW("%s(%d): dead IAudioTrack, %s, creating a new one from %s()",
            __func__, mPortId, isOffloadedOrDirect_l() ? "Offloaded or Direct" : "PCM", from);
    ++mSequence;
    // refresh the audio configuration cache in this process to make sure we get new
    // output parameters and new IAudioFlinger in createTrack_l()
    AudioSystem::clearAudioConfigCache();
    if (isOffloadedOrDirect_l() || mDoNotReconnect) {
        // FIXME re-creation of offloaded and direct tracks is not yet implemented;
        // reconsider enabling for linear PCM encodings when position can be preserved.
        result = DEAD_OBJECT;
        return result;
    }
    // Save so we can return count since creation.
    mUnderrunCountOffset = getUnderrunCount_l();
    // save the old static buffer position
    uint32_t staticPosition = 0;
    size_t bufferPosition = 0;
    int loopCount = 0;
    if (mStaticProxy != 0) {
        mStaticProxy->getBufferPositionAndLoopCount(&bufferPosition, &loopCount);
        staticPosition = mStaticProxy->getPosition().unsignedValue();
    }
    // save the old startThreshold and framecount
    const uint32_t originalStartThresholdInFrames = mProxy->getStartThresholdInFrames();
    const uint32_t originalFrameCount = mProxy->frameCount();
    // See b/74409267. Connecting to a BT A2DP device supporting multiple codecs
    // causes a lot of churn on the service side, and it can reject starting
    // playback of a previously created track. May also apply to other cases.
    const int INITIAL_RETRIES = 3;
    int retries = INITIAL_RETRIES;
retry:
    if (retries < INITIAL_RETRIES) {
        // See the comment for clearAudioConfigCache at the start of the function.
        AudioSystem::clearAudioConfigCache();
    }
    mFlags = mOrigFlags;
    // If a new IAudioTrack is successfully created, createTrack_l() will modify the
    // following member variables: mAudioTrack, mCblkMemory and mCblk.
    // It will also delete the strong references on previous IAudioTrack and IMemory.
    // If a new IAudioTrack cannot be created, the previous (dead) instance will be left intact.
    result = createTrack_l();
    if (result == NO_ERROR) {
        // take the frames that will be lost by track recreation into account in saved position
        // For streaming tracks, this is the amount we obtained from the user/client
        // (not the number actually consumed at the server - those are already lost).
        if (mStaticProxy == 0) {
            mPosition = mReleased;
        }
        // Continue playback from last known position and restore loop.
        if (mStaticProxy != 0) {
            if (loopCount != 0) {
                mStaticProxy->setBufferPositionAndLoop(bufferPosition,
                        mLoopStart, mLoopEnd, loopCount);
            } else {
                mStaticProxy->setBufferPosition(bufferPosition);
                if (bufferPosition == mFrameCount) {
                    ALOGD("%s(%d): restoring track at end of static buffer", __func__, mPortId);
                }
            }
        }
        // restore volume handler
        mVolumeHandler->forall([this](const VolumeShaper &shaper) -> VolumeShaper::Status {
            sp<VolumeShaper::Operation> operationToEnd =
                    new VolumeShaper::Operation(shaper.mOperation);
            // TODO: Ideally we would restore to the exact xOffset position
            // as returned by getVolumeShaperState(), but we don't have that
            // information when restoring at the client unless we periodically poll
            // the server or create shared memory state.
            //
            // For now, we simply advance to the end of the VolumeShaper effect
            // if it has been started.
            if (shaper.isStarted()) {
                operationToEnd->setNormalizedTime(1.f);
            }
            media::VolumeShaperConfiguration config;
            shaper.mConfiguration->writeToParcelable(&config);
            media::VolumeShaperOperation operation;
            operationToEnd->writeToParcelable(&operation);
            status_t status;
            mAudioTrack->applyVolumeShaper(config, operation, &status);
            return status;
        });
        // restore the original start threshold if different than frameCount.
        if (originalStartThresholdInFrames != originalFrameCount) {
            // Note: mProxy->setStartThresholdInFrames() call is in the Proxy
            // and does not trigger a restart.
            // (Also CBLK_DISABLED is not set, buffers are empty after track recreation).
            // Any start would be triggered on the mState == ACTIVE check below.
            const uint32_t currentThreshold =
                    mProxy->setStartThresholdInFrames(originalStartThresholdInFrames);
            ALOGD_IF(originalStartThresholdInFrames != currentThreshold,
                    "%s(%d) startThresholdInFrames changing from %u to %u",
                    __func__, mPortId, originalStartThresholdInFrames, currentThreshold);
        }
        if (mState == STATE_ACTIVE) {
            mAudioTrack->start(&result);
        }
        // server resets to zero so we offset
        mFramesWrittenServerOffset =
                mStaticProxy.get() != nullptr ? staticPosition : mFramesWritten;
        mFramesWrittenAtRestore = mFramesWrittenServerOffset;
    }
    if (result != NO_ERROR) {
        ALOGW("%s(%d): failed status %d, retries %d", __func__, mPortId, result, retries);
        if (--retries > 0) {
            // leave time for an eventual race condition to clear before retrying
            usleep(500000);
            goto retry;
        }
        // if no retries left, set invalid bit to force restoring at next occasion
        // and avoid inconsistent active state on client and server sides
        if (mCblk != nullptr) {
            android_atomic_or(CBLK_INVALID, &mCblk->mFlags);
        }
    }
    return result;
}

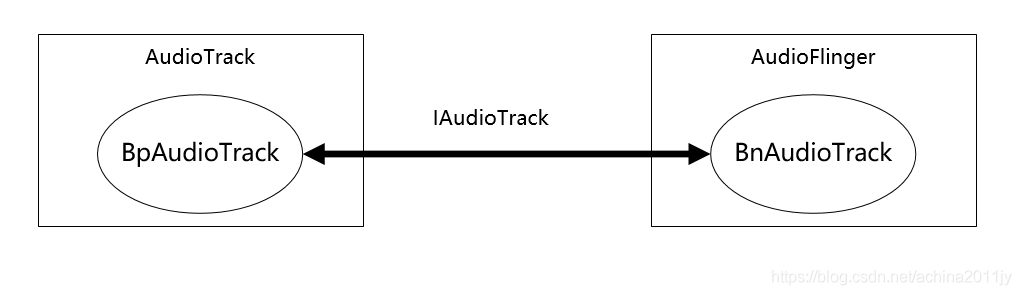

The most important line here is mAudioTrack->start(&result);. mAudioTrack is declared as sp<media::IAudioTrack> mAudioTrack;. The IAudioTrack interface is implemented on the AudioFlinger side by a BnAudioTrack subclass (TrackHandle), so mAudioTrack->start() ends up in that BnAudioTrack's start function.

/frameworks/av/media/libaudioclient/IAudioTrack.aidl

interface IAudioTrack {
    ......
    int start();
}

AudioFlinger TrackHandle start

From here on, the handling moves into AudioFlinger:
