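Preface

This time I needed to build a speech recognition application on top of the iFlytek (科大讯飞) speech recognition interface. The previous two attempts both called the interface through the WebAPI, so this time I switched to calling it through the SDK.

Below is a detailed record of the development process (building on the previous two attempts).

Calling the speech recognition interface

Step 1: Go to the interface details page

URL: https://www.xfyun.cn/services/voicedictation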

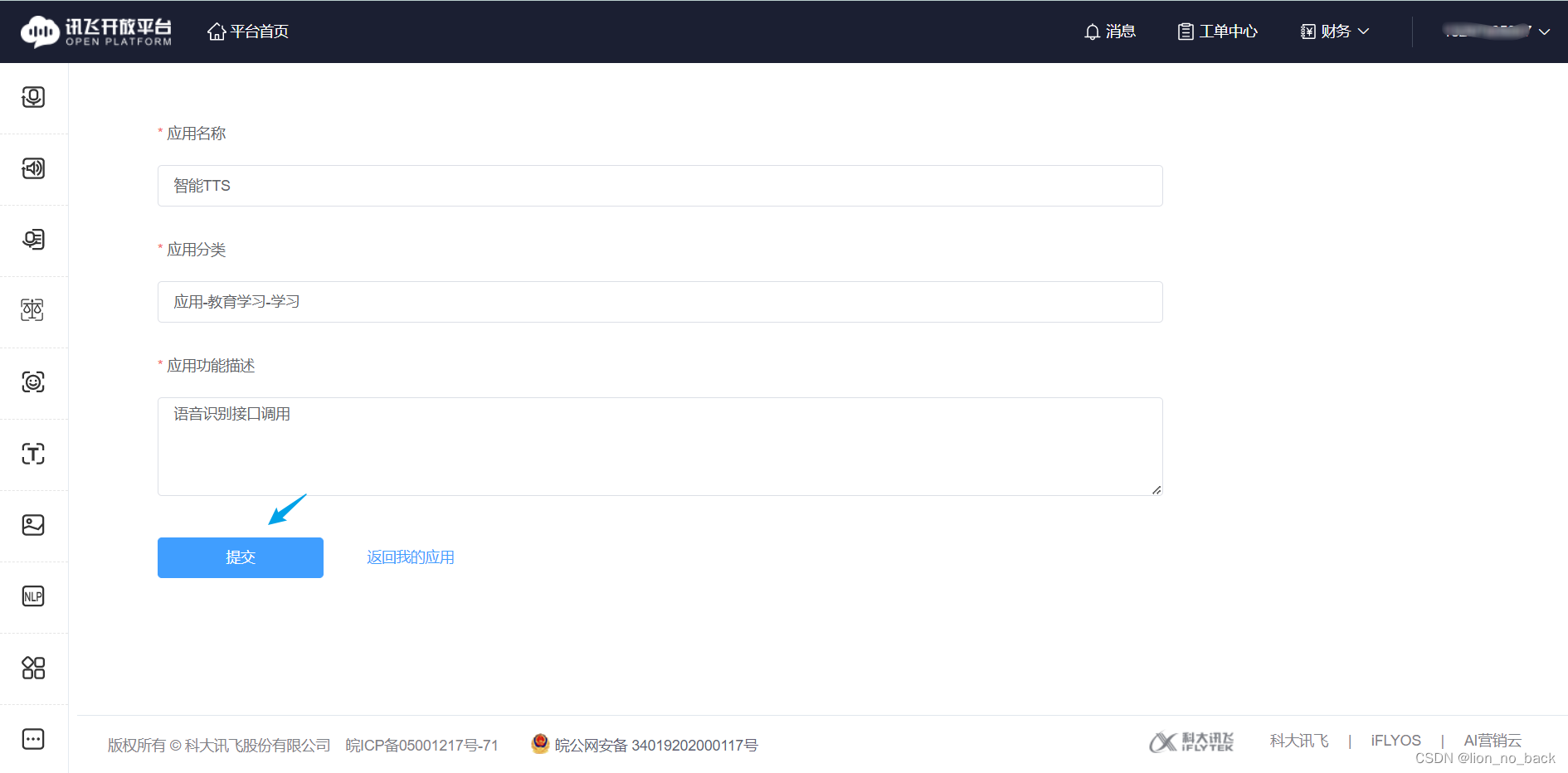

Step 2: Create an application

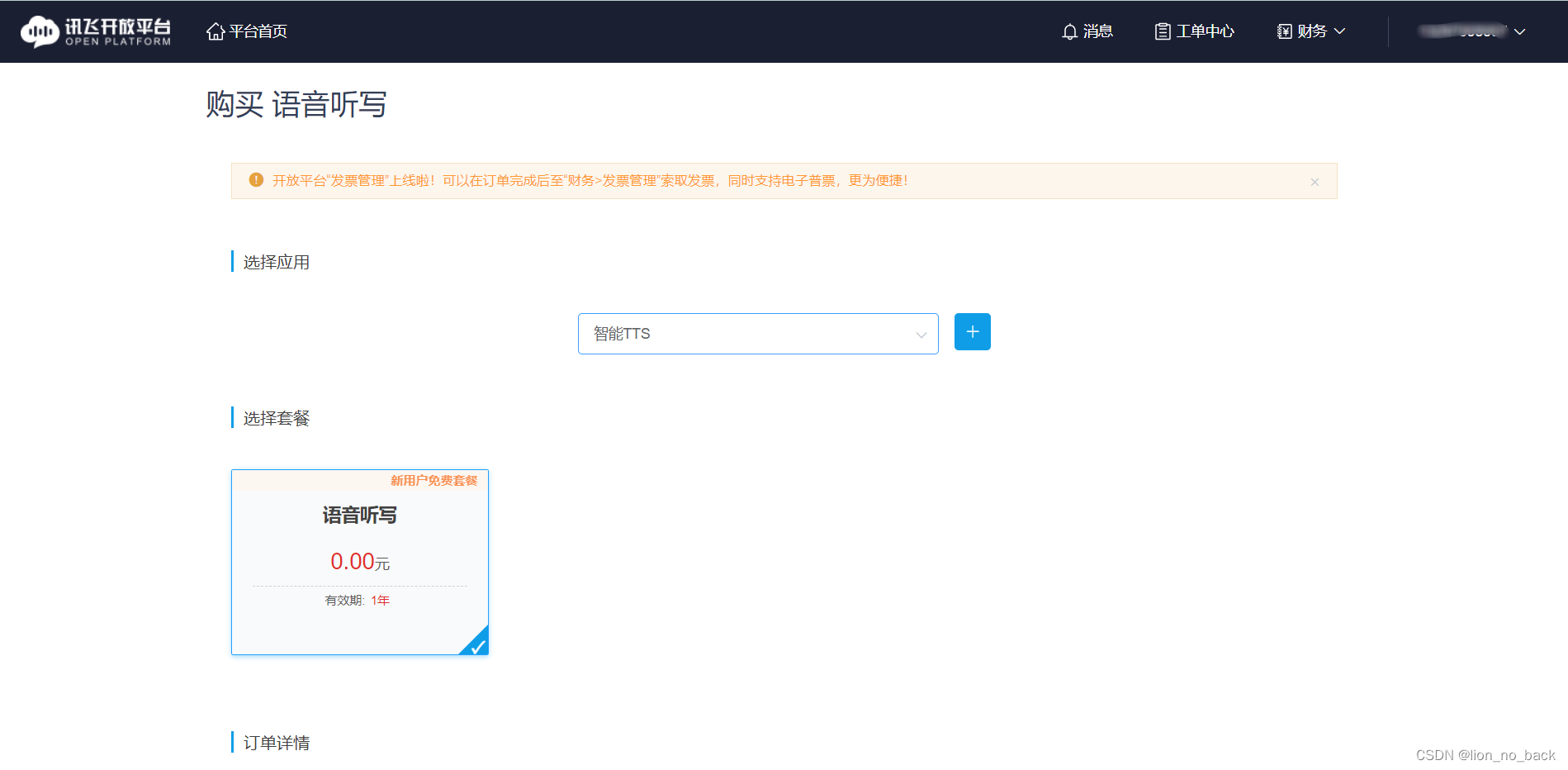

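Step 3: Claim the free package

Without claiming it, the service quota is 500. The package itself is free (apparently each application can claim one for free).

https://www.xfyun.cn/services/voicedictation

Scroll down on that page and click to claim it.

The same page shows the credentials the program uses to authenticate when it calls the interface.

The SDK calling method only needs the APPID. The APIKey and APISecret are for the WebAPI calling method.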

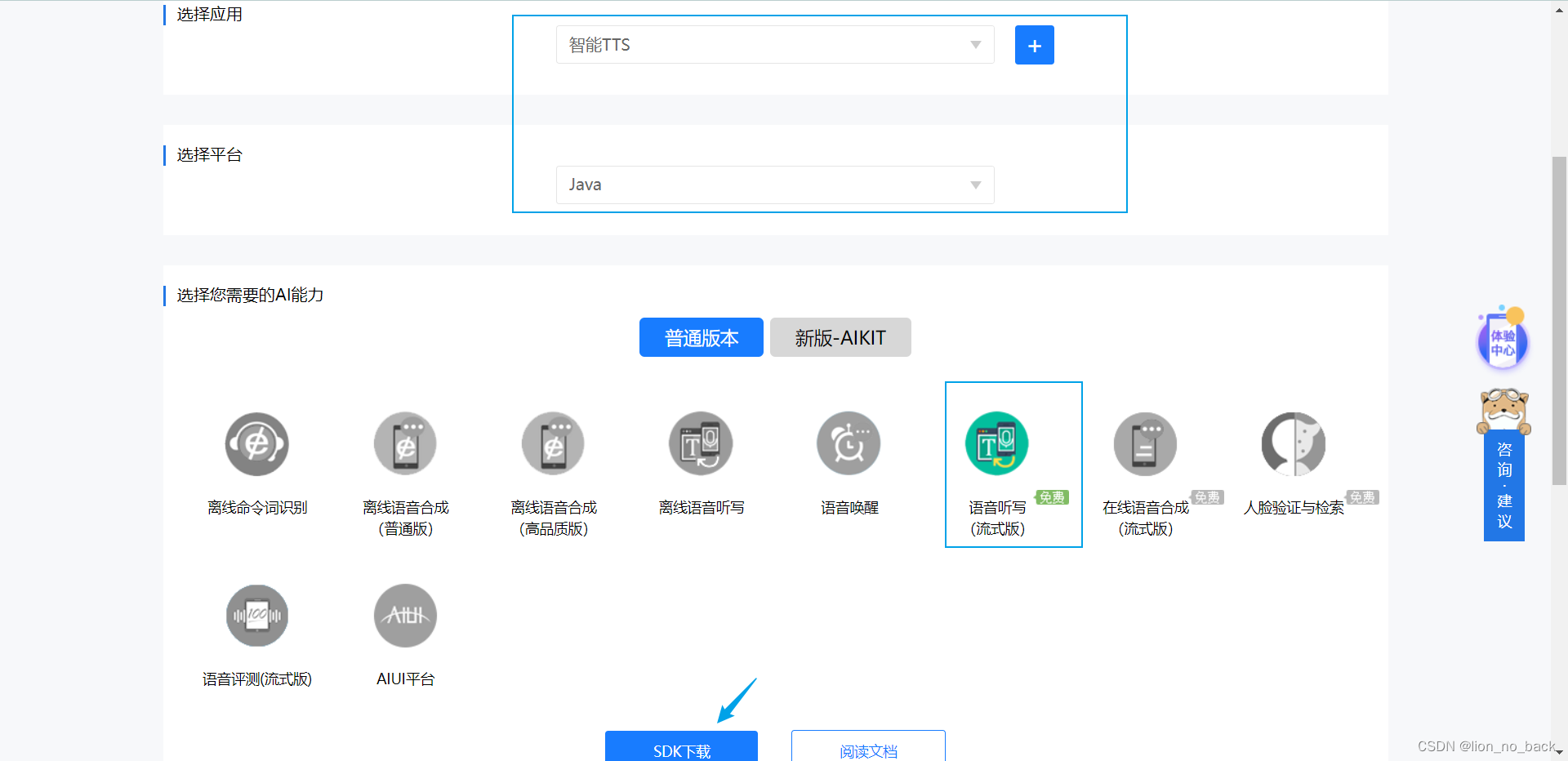

Download the Java SDK (be sure to download and use your own copy, because the APPID inside it is tied to your application).

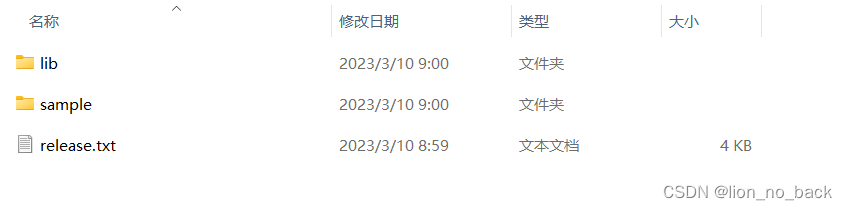

After downloading and unzipping it locally, the directory looks like this:

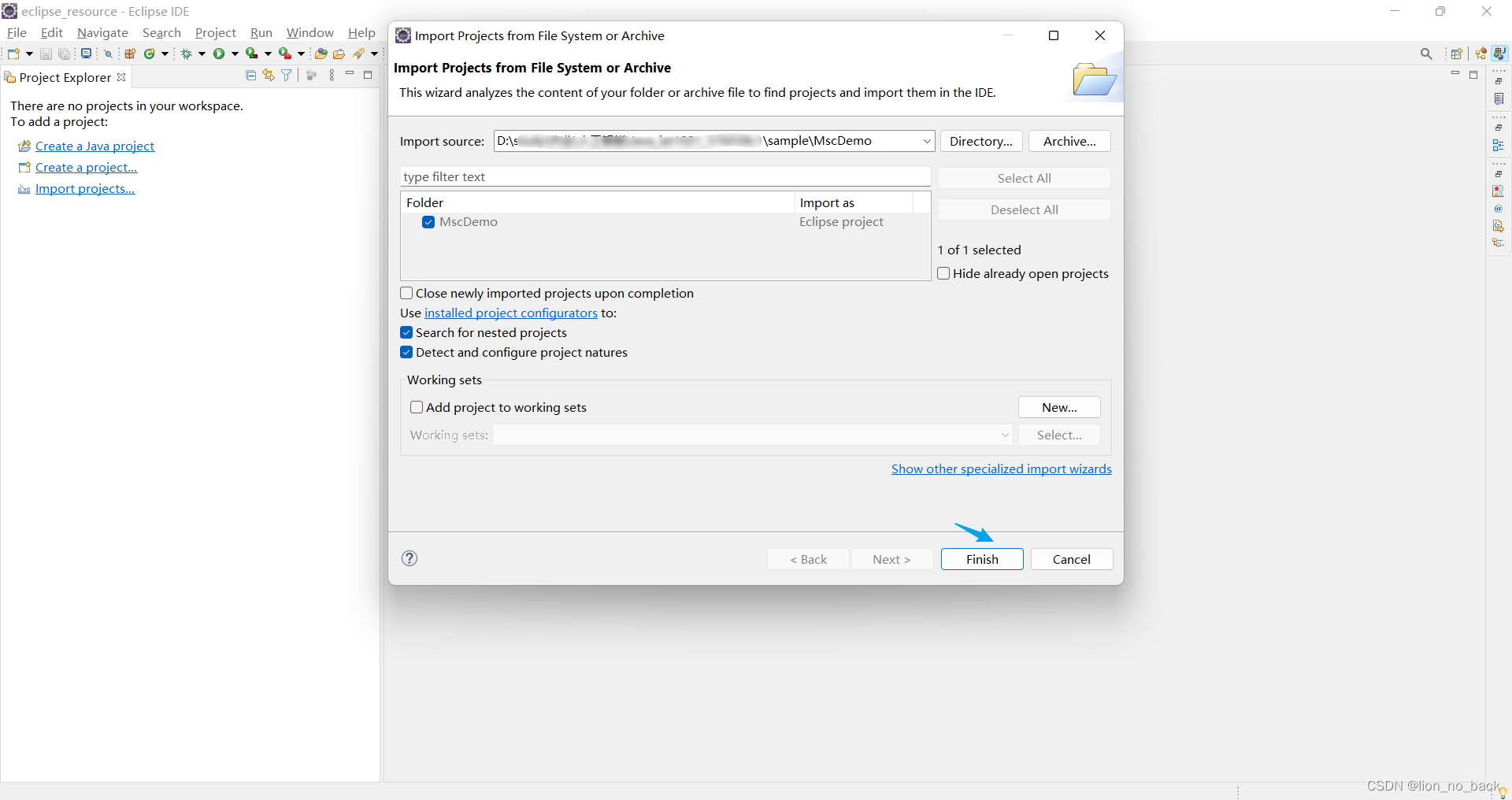

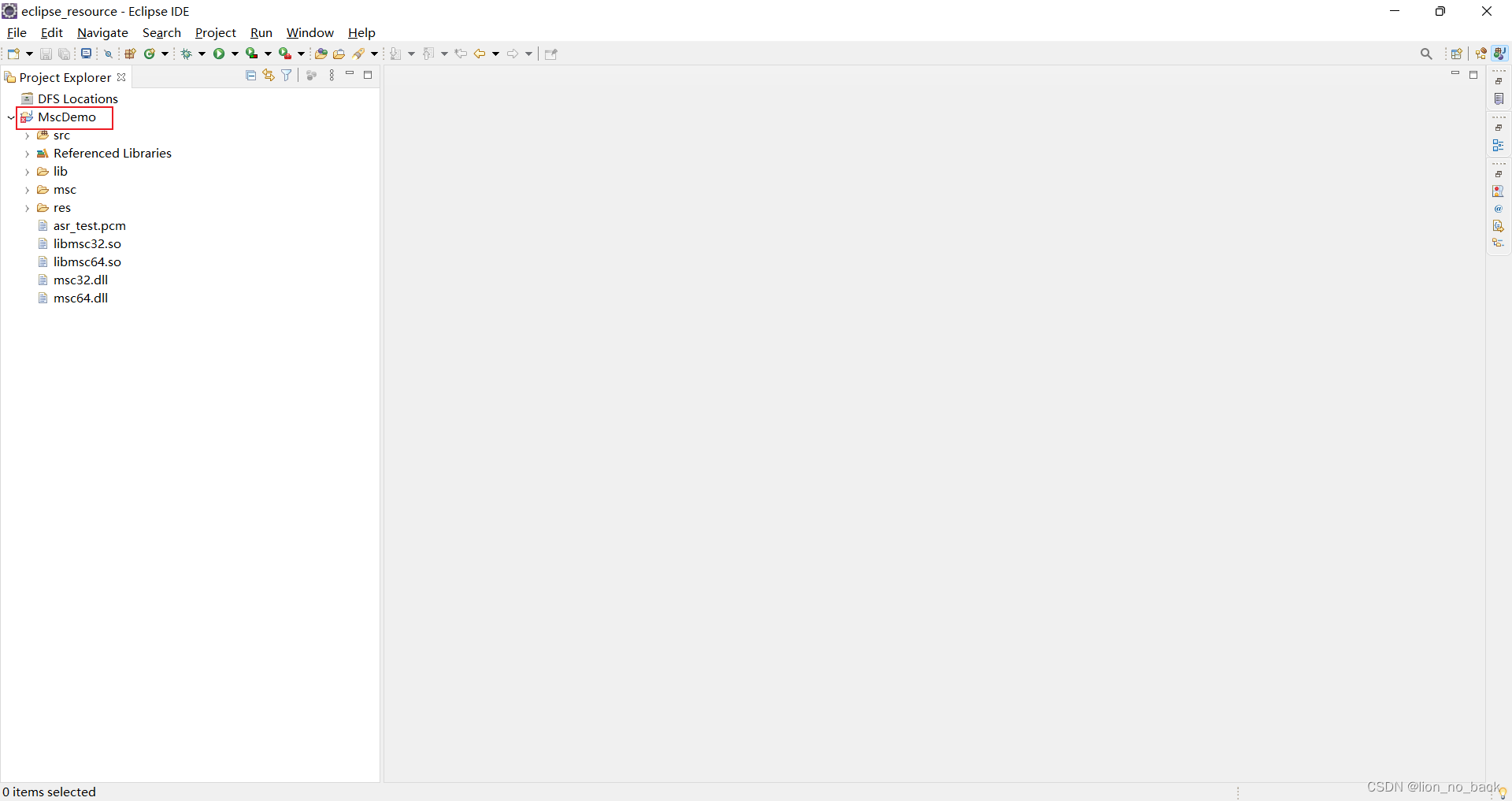

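Step 4: Import the project

The speech dictation Java SDK documentation imports the project in the Eclipse Java IDE. The steps are shown in detail below.

The imported project reports errors.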

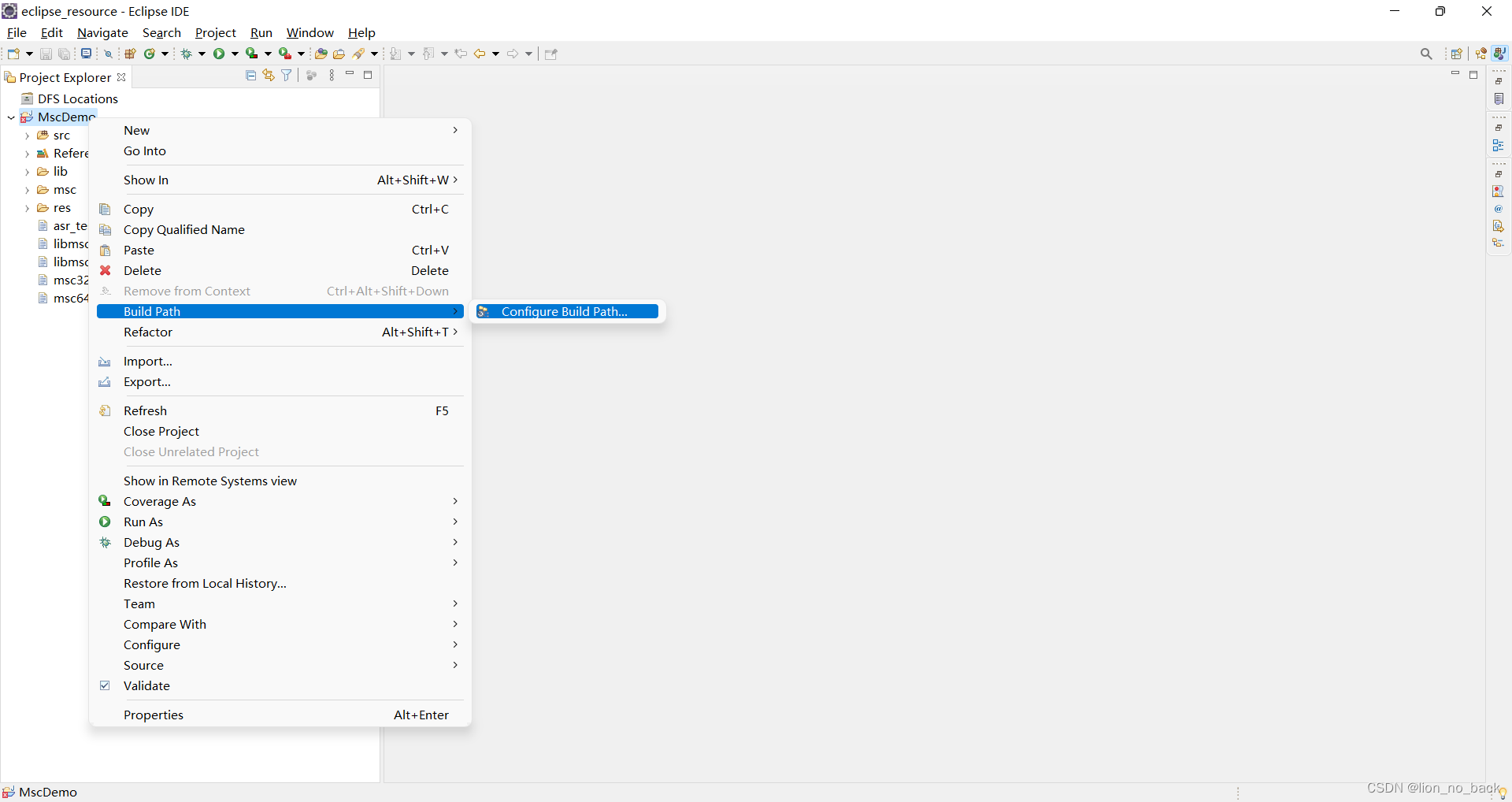

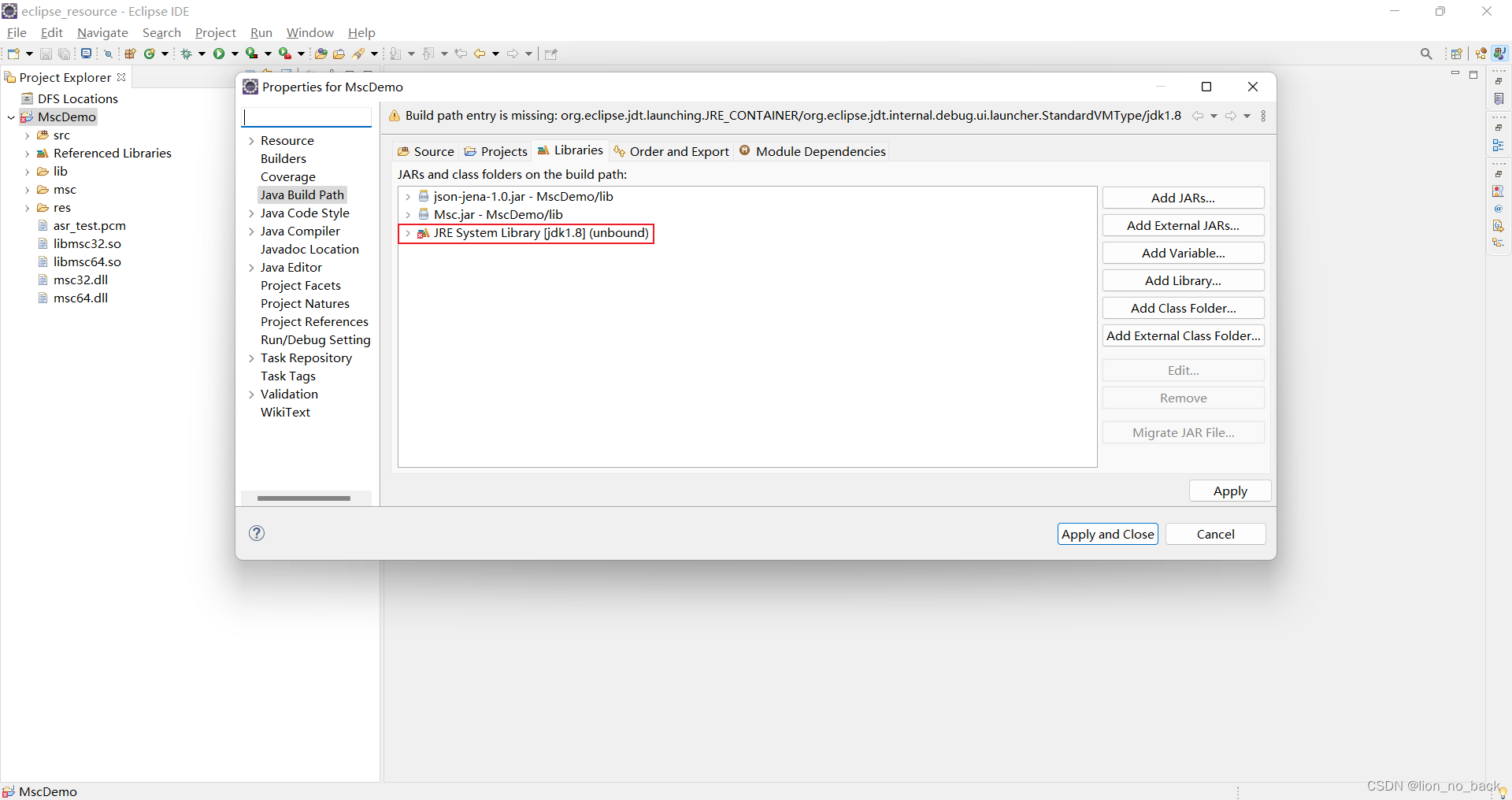

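First, check whether the errors come from a mismatched Java runtime environment.

Right-click the project name --> Build Path --> Configure Build Path

Sure enough, that was the cause: a library entry marked "unbound" is the broken one. Select it and click Remove on the right to delete it.

Then add the library again.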

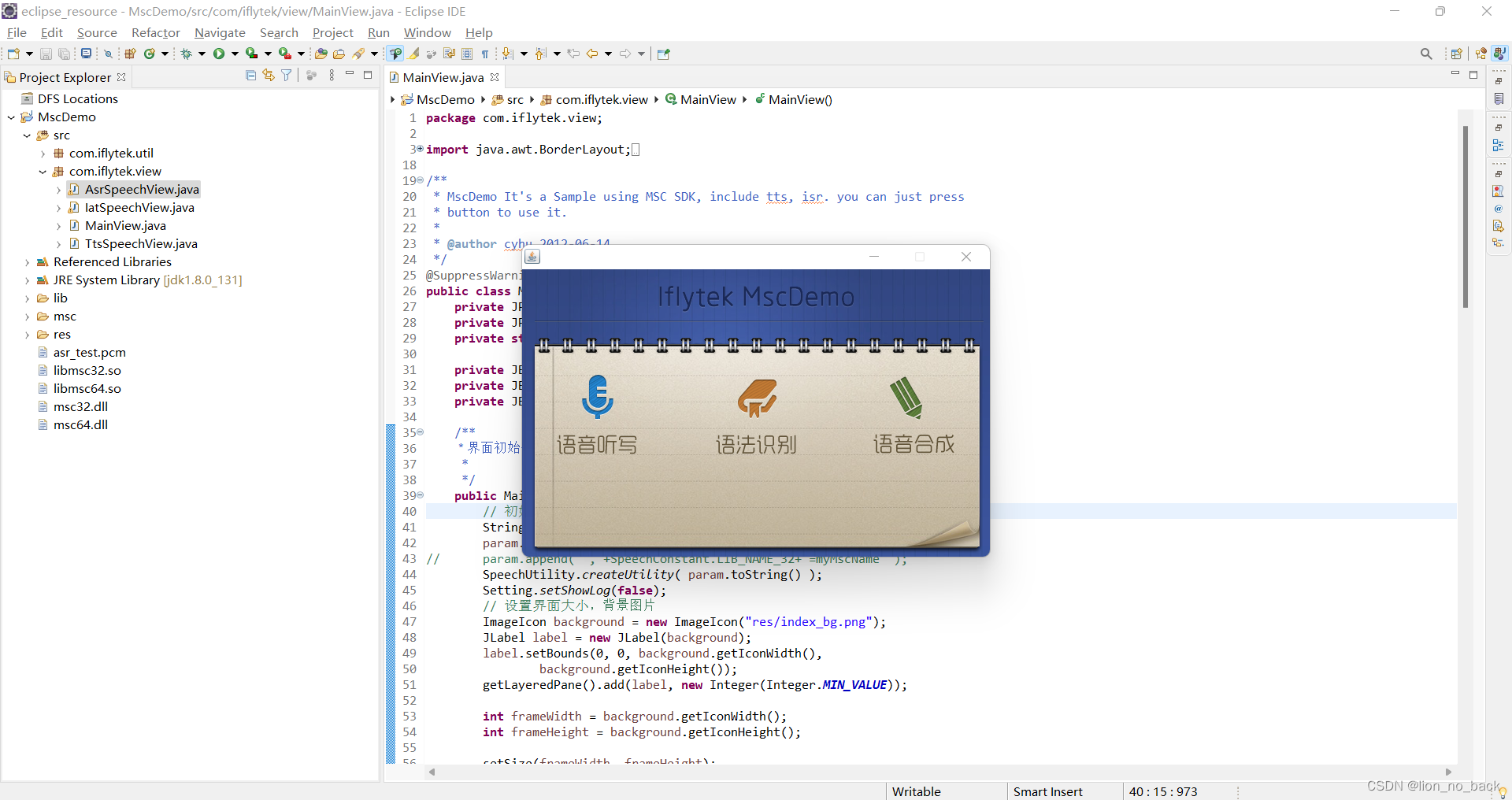

Step 5: Run the source code

Find MainView.java and run it (this starts the main program).

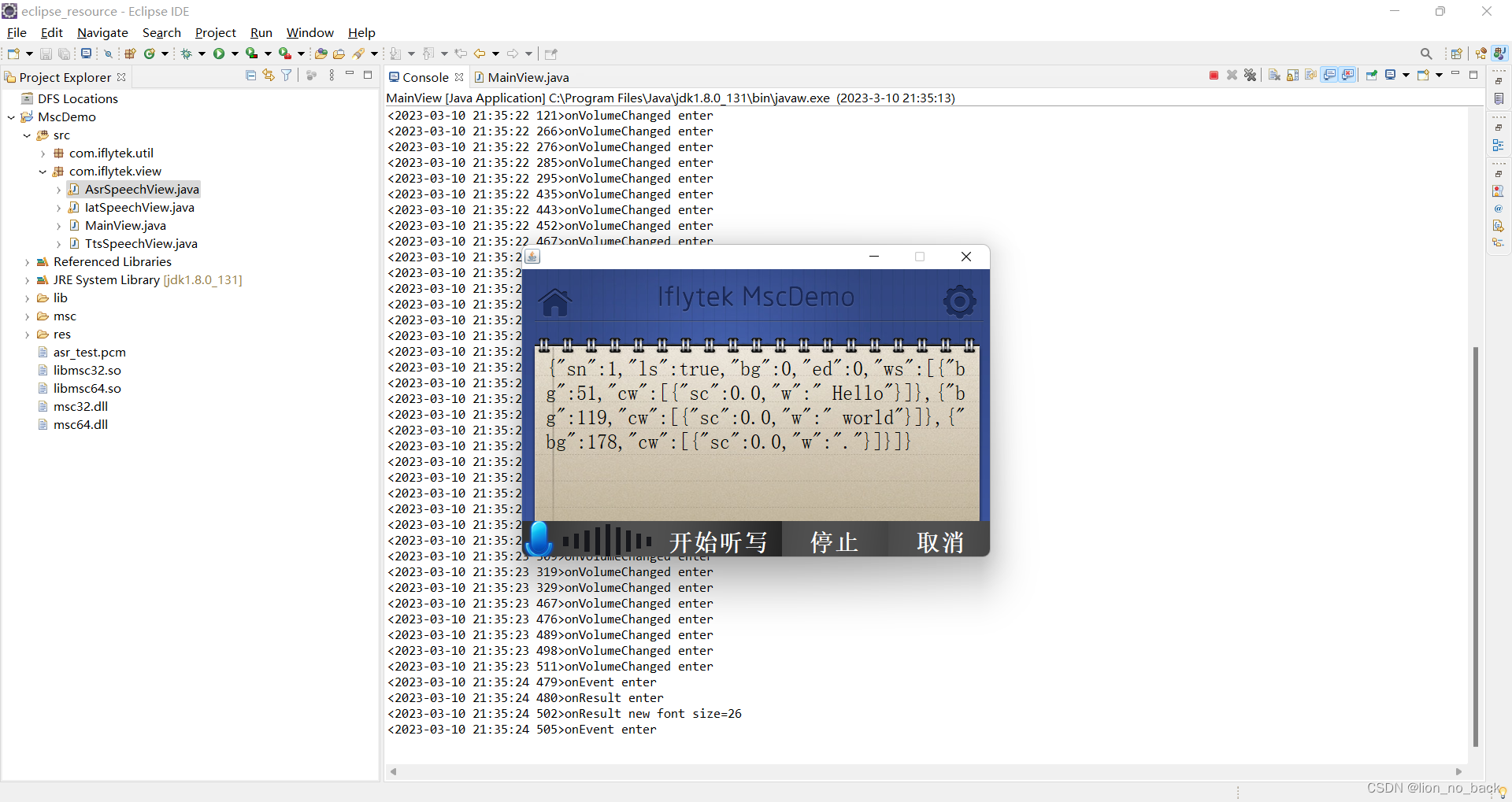

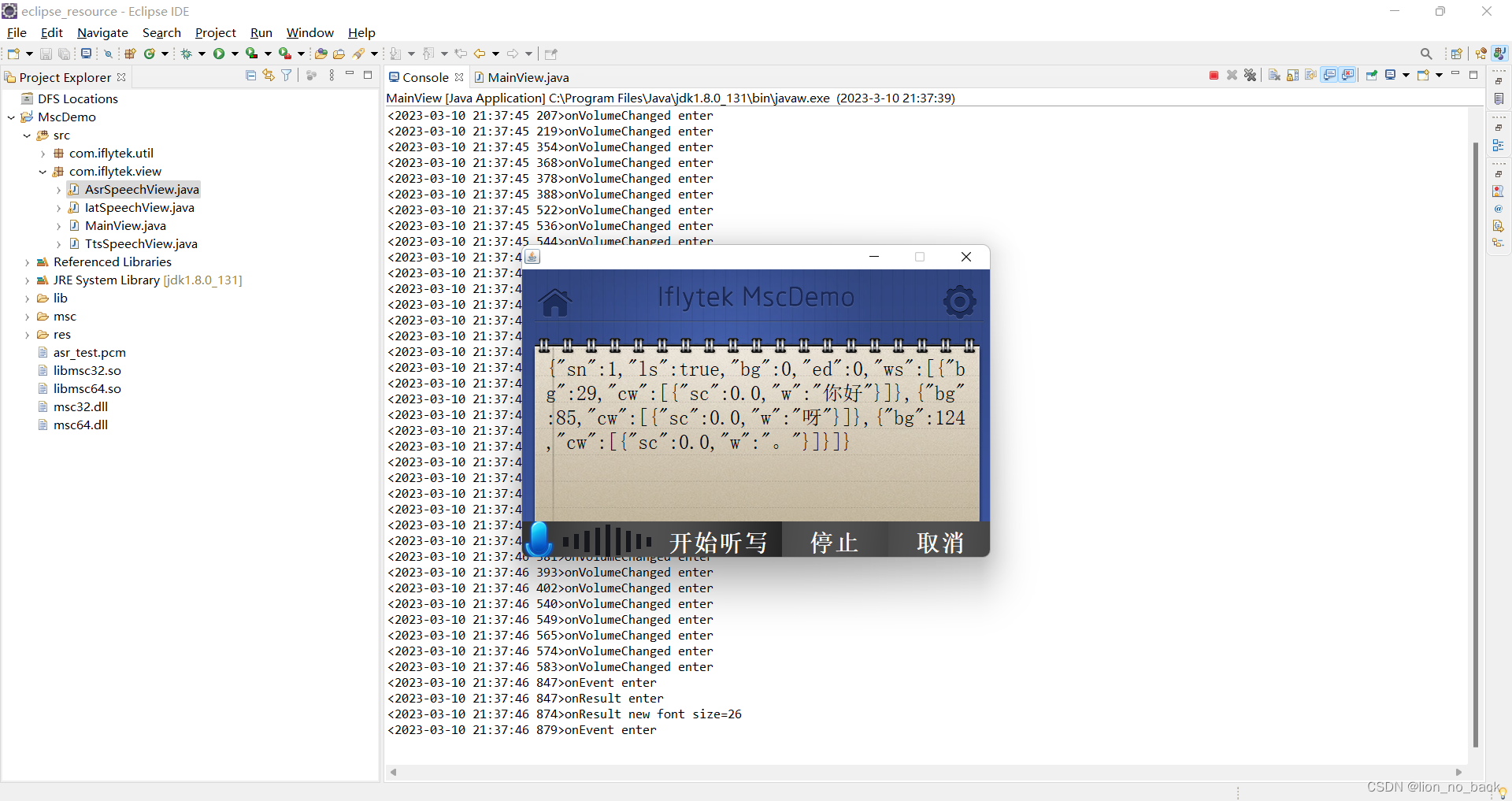

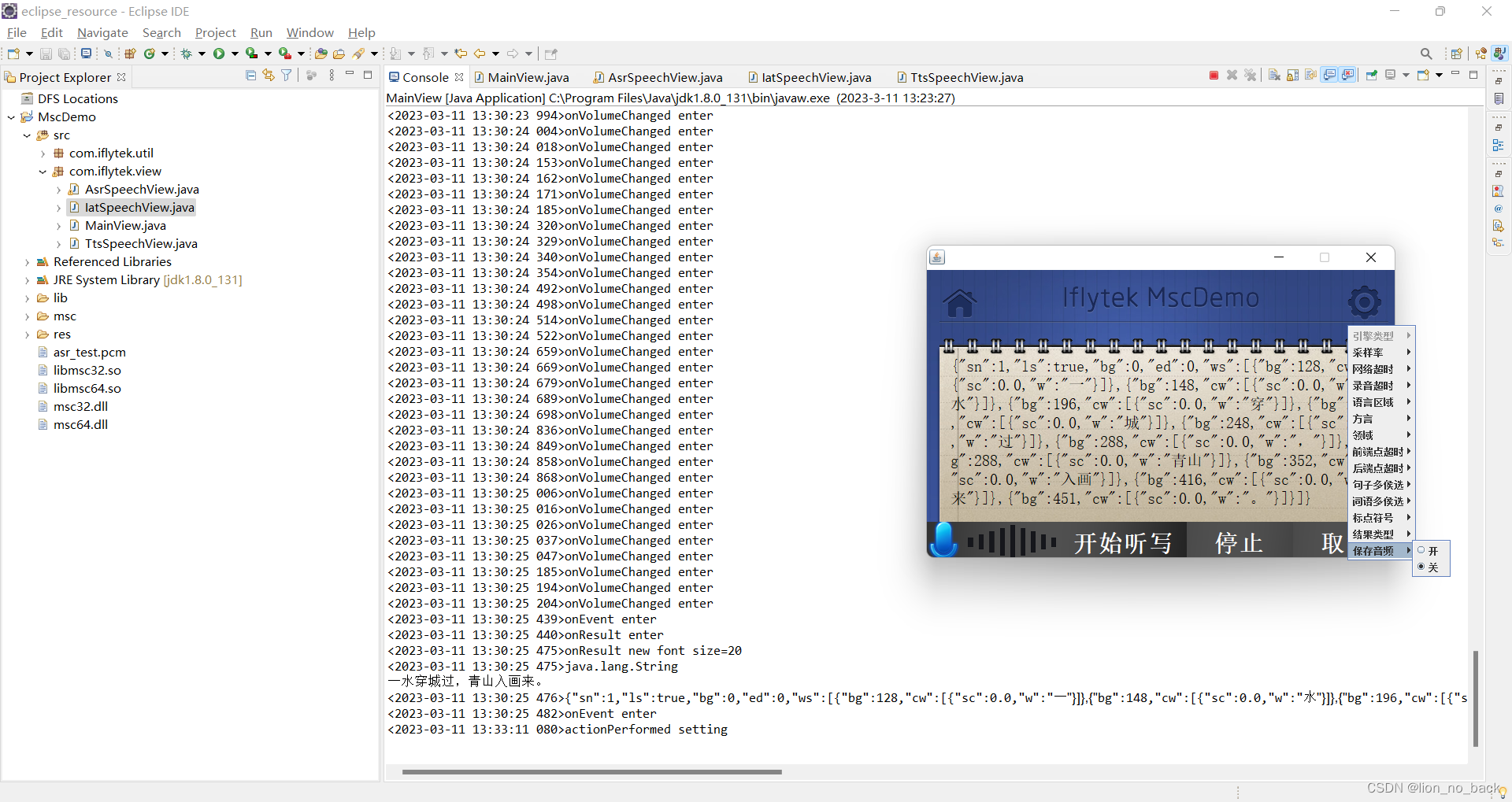

The test looks like this:

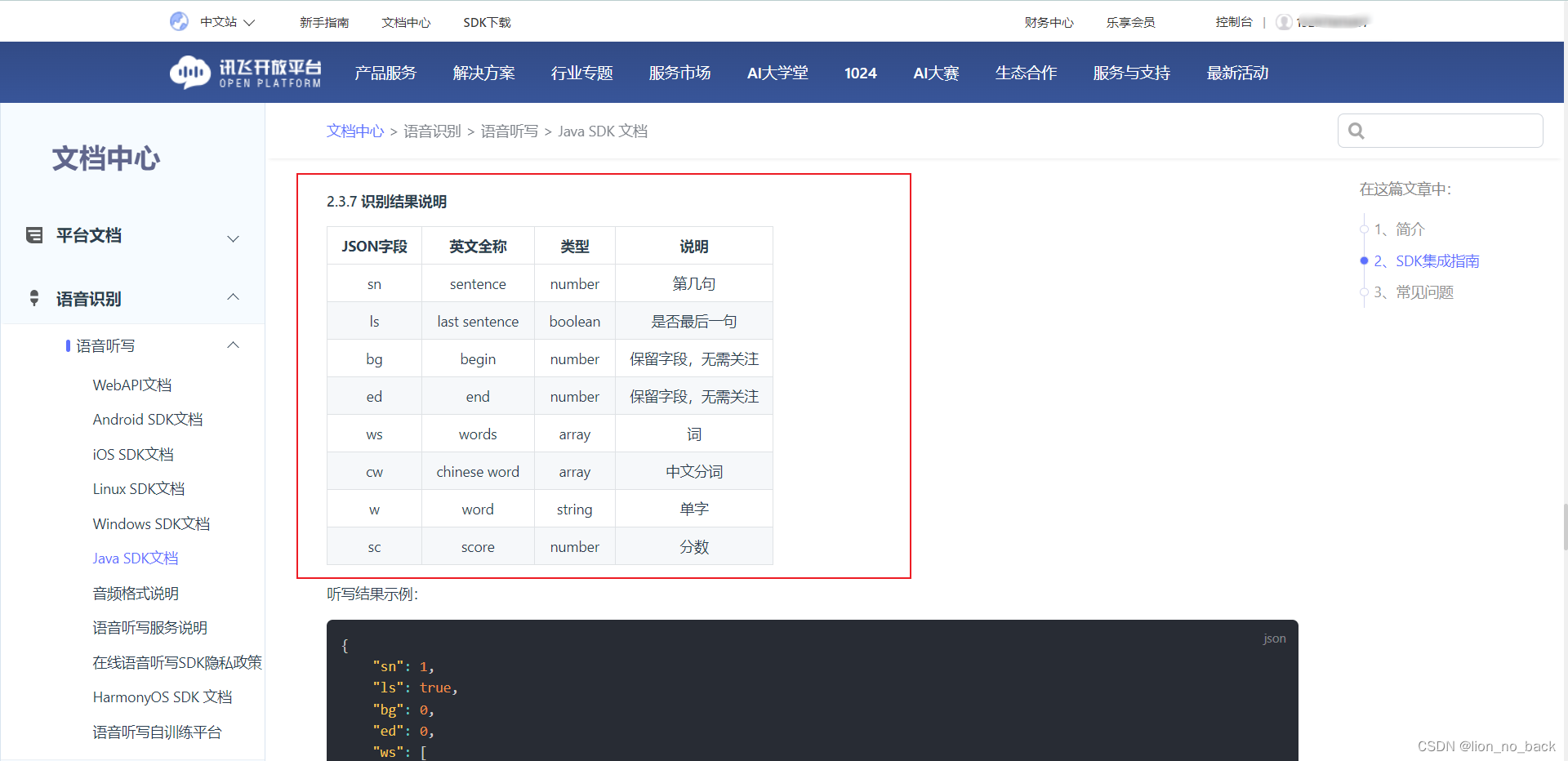

Check the development documentation for how to read the recognition result.

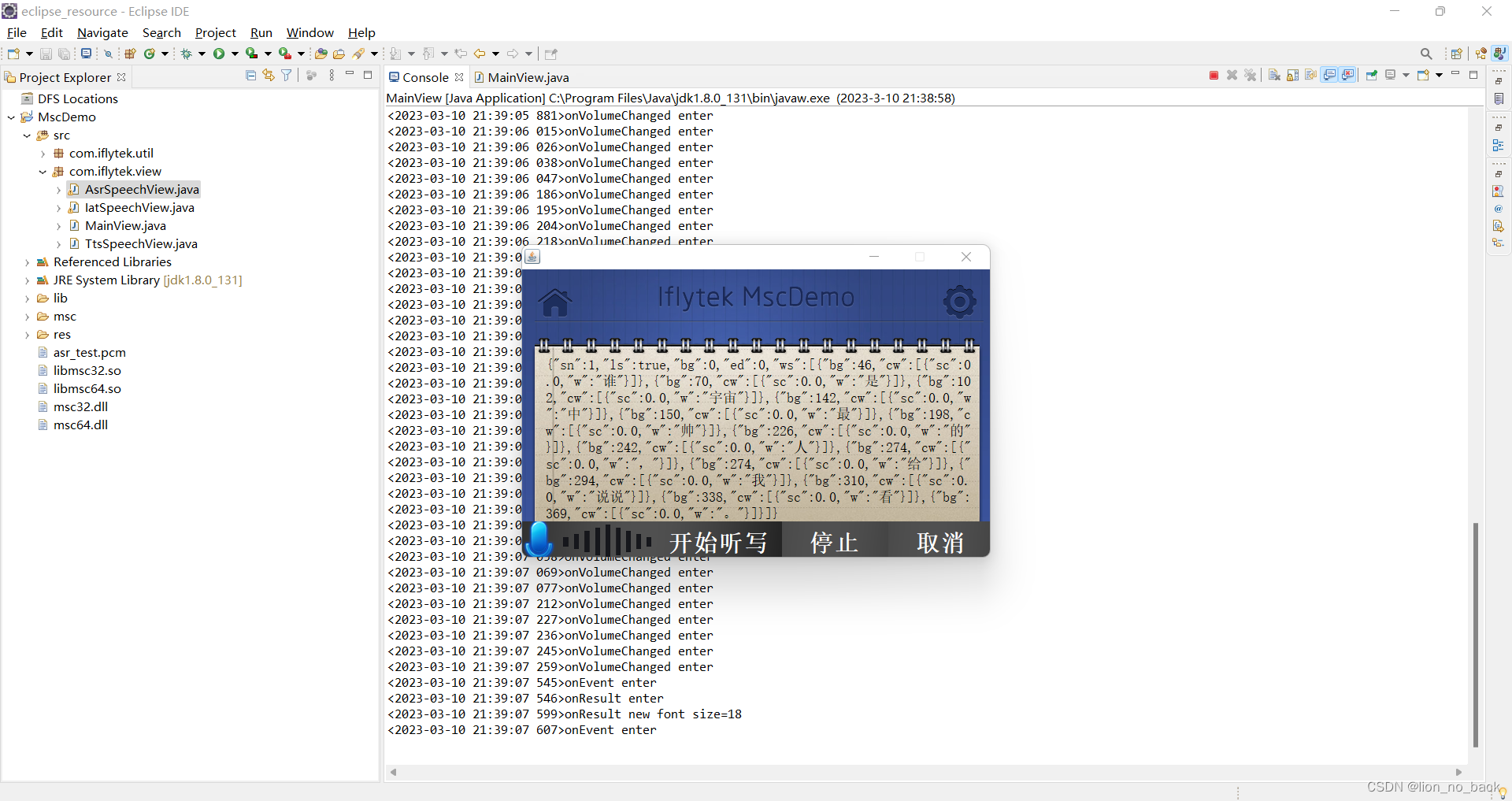

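I wanted to give it something harder to see how accurate it is, so I had it recognize the sentence 谁是宇宙中最帅的人,说说看 ("Who is the most handsome person in the universe? Tell me").

Running recognition again, I found that the console did not print the complete output, which was unsatisfying.

So I went back to the development documentation and, from the descriptions of each interface, worked out the overall implementation flow.

Put simply: from the moment you start speaking until you stop, audio stream data is written; at the end a result is returned; and if an error occurs along the way, it is handled automatically.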

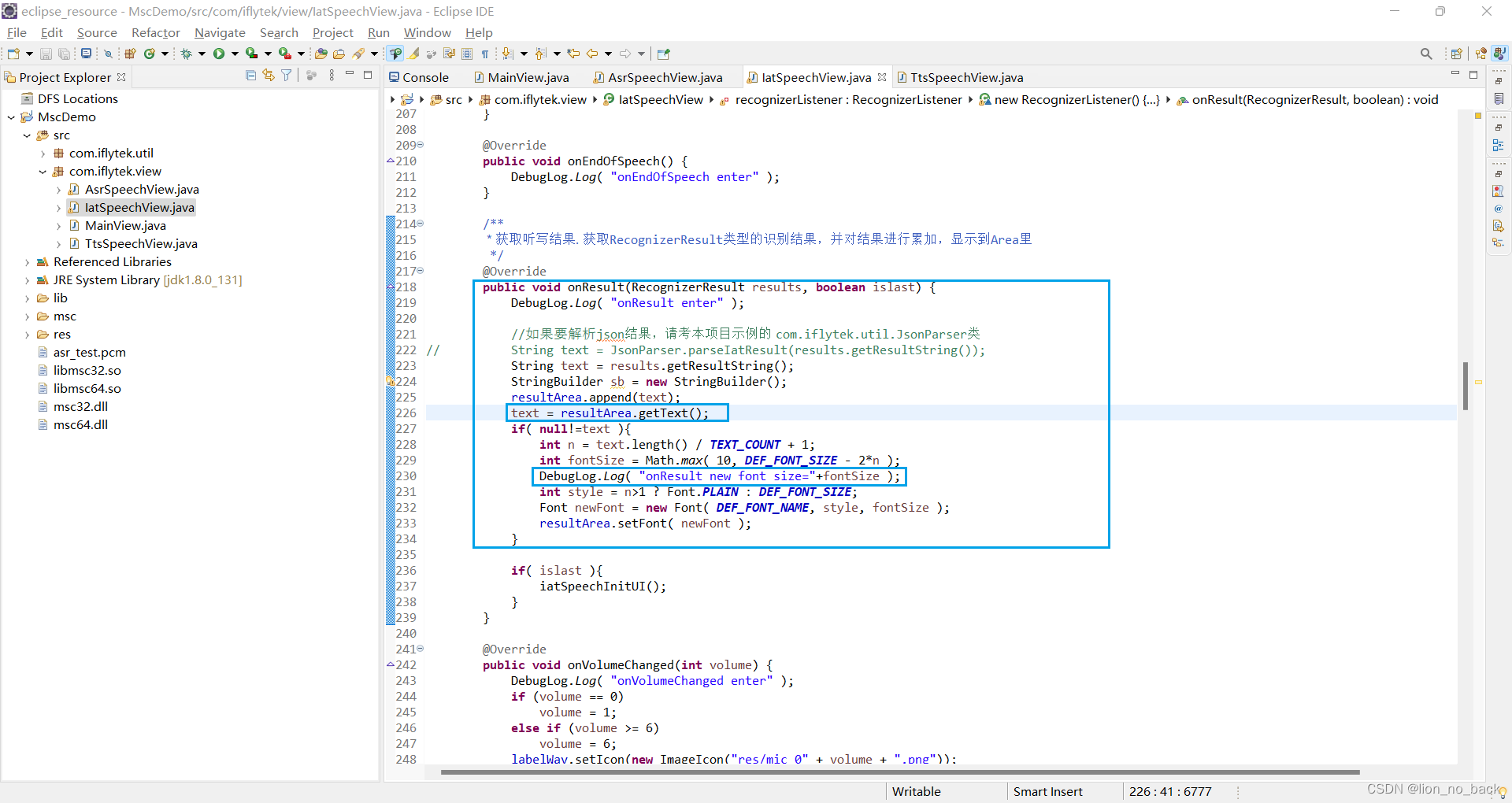
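The listener in the SDK sample receives results through onResult(results, isLast), and the sample's own comment says the results are accumulated, so a recognition may arrive in several pieces. As a rough illustration of that idea only, here is a minimal sketch in plain Java. The class ResultAccumulator and its method onFragment are hypothetical names made up for this example; they are not part of the iFlytek SDK.

```java
// Hypothetical helper (not part of the iFlytek SDK): collects result
// fragments and hands back the complete text once the last one arrives.
public class ResultAccumulator {
    private final StringBuilder buffer = new StringBuilder();

    /**
     * Adds one fragment. Returns the full text when isLast is true,
     * otherwise returns null to signal that more fragments are expected.
     */
    public String onFragment(String fragment, boolean isLast) {
        buffer.append(fragment);
        if (!isLast) {
            return null;
        }
        String full = buffer.toString();
        buffer.setLength(0); // reset for the next utterance
        return full;
    }

    public static void main(String[] args) {
        ResultAccumulator acc = new ResultAccumulator();
        acc.onFragment("你好呀", false);
        acc.onFragment(",世界", false);
        System.out.println(acc.onFragment("。", true)); // prints 你好呀,世界。
    }
}
```

Printing only when the last fragment has arrived is one way to avoid partial lines on the console.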

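Then, reading the source code alongside the documentation, I got a rough idea of which part builds the UI, which events are bound to each UI component, and the order in which the internal functions are called.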

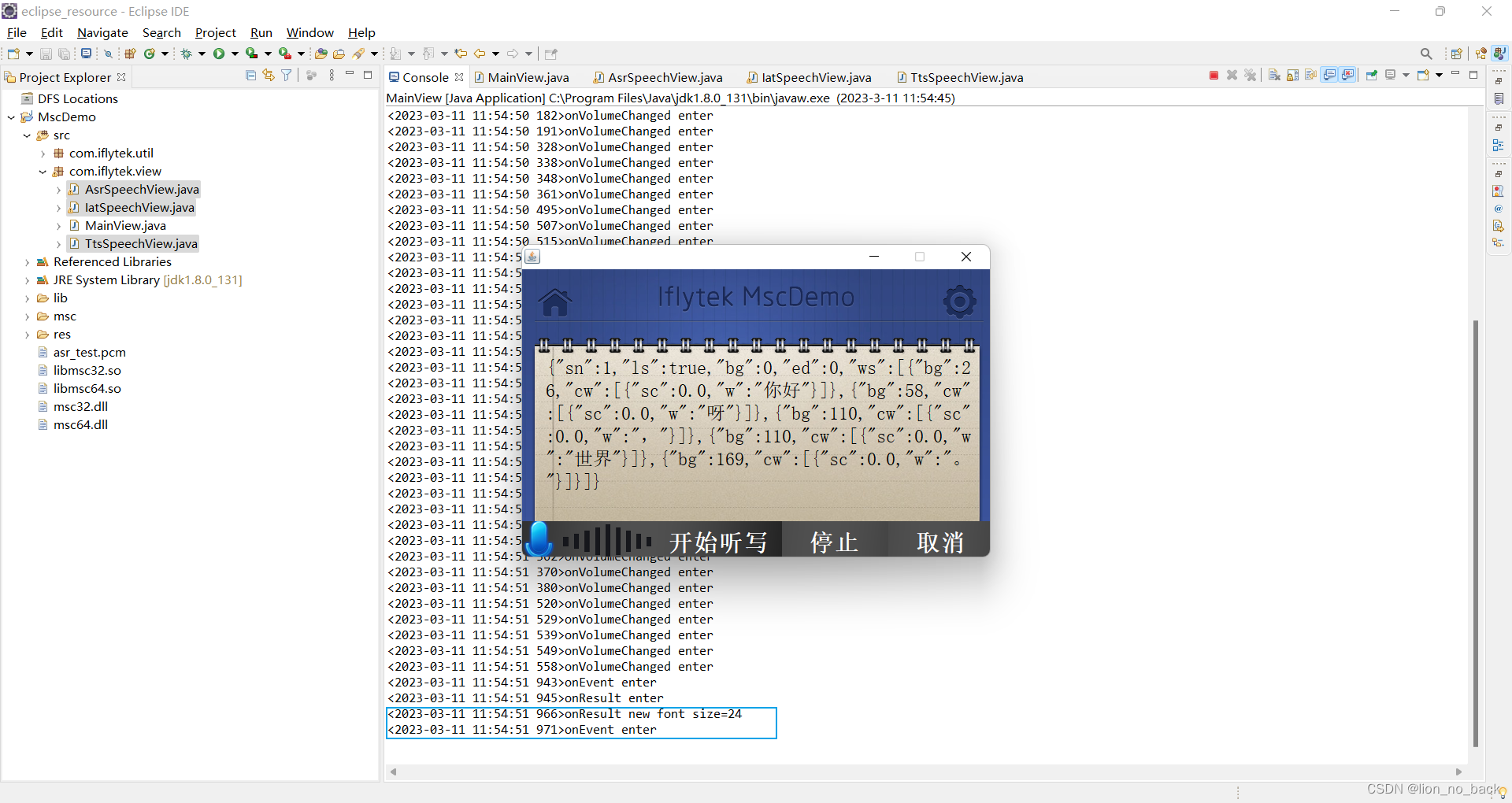

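The console output makes it possible to locate where the last log message is printed.

From there you can see that the printed text is a JSON string, so the next step is to get the value of a specific key from that JSON string.

I used a Java regular expression to extract the key information from the string.

I had never learned Java regular expressions, but I had learned Python's, so after a bit of self-study I got the result I wanted.

Regular expression tester: https://tool.oschina.net/regex/

Java regular expressions

- To match a double quote, use \"
- To match Chinese characters, use [\u4e00-\u9fa5]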
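To make the two patterns concrete, here is a minimal standalone sketch using only java.util.regex. The class name WordExtractor and the sample JSON string are assumptions made up for illustration (the sample only imitates a result that carries its words under "w" keys), so treat it as a sketch rather than the exact shape the service returns.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class WordExtractor {
    // Captures the text between the quotes that follow "w": (lazy match up to the next quote).
    private static final Pattern W_FIELD = Pattern.compile("\"w\":\"(.*?)\"");
    // Matches runs of characters in the range U+4E00 to U+9FA5 (common Chinese characters).
    private static final Pattern CHINESE = Pattern.compile("[\\u4e00-\\u9fa5]+");

    /** Joins the values of all "w" keys found in the text. */
    public static String joinWords(String json) {
        StringBuilder sb = new StringBuilder();
        Matcher m = W_FIELD.matcher(json);
        while (m.find()) {
            sb.append(m.group(1));
        }
        return sb.toString();
    }

    /** Keeps only the Chinese characters of the text. */
    public static String chineseOnly(String text) {
        StringBuilder sb = new StringBuilder();
        Matcher m = CHINESE.matcher(text);
        while (m.find()) {
            sb.append(m.group());
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Hand-written sample for illustration only.
        String sample = "{\"ws\":[{\"cw\":[{\"w\":\"你好\"}]},{\"cw\":[{\"w\":\"世界\"}]}]}";
        System.out.println(joinWords(sample)); // prints 你好世界
    }
}
```

A regex is quick for a one-off extraction like this, but it would break if a value ever contained an escaped quote; a JSON parser is the sturdier choice in that case.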

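The modified code is as follows:

I only added the part that extracts the keywords and prints them (very little code). As for the original code in the file: who could write it without tearing their hair out, and who could read it without getting lost (apart from the experts, that is)?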

package com.iflytek.view;

import java.awt.BorderLayout;
import java.awt.Color;
import java.awt.Font;
import java.awt.event.ActionEvent;
import java.awt.event.ActionListener;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Map.Entry;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

import javax.swing.ButtonGroup;
import javax.swing.ImageIcon;
import javax.swing.JButton;
import javax.swing.JFrame;
import javax.swing.JLabel;
import javax.swing.JMenu;
import javax.swing.JMenuItem;
import javax.swing.JPanel;
import javax.swing.JPopupMenu;
import javax.swing.JRadioButtonMenuItem;
import javax.swing.JSlider;
import javax.swing.JTextArea;
import javax.swing.SwingConstants;
import javax.swing.event.ChangeEvent;
import javax.swing.event.ChangeListener;

import com.iflytek.cloud.speech.RecognizerListener;
import com.iflytek.cloud.speech.RecognizerResult;
import com.iflytek.cloud.speech.ResourceUtil;
import com.iflytek.cloud.speech.SpeechConstant;
import com.iflytek.cloud.speech.SpeechError;
import com.iflytek.cloud.speech.SpeechRecognizer;
import com.iflytek.util.DebugLog;
import com.iflytek.util.DrawableUtils;

public class IatSpeechView extends JPanel implements ActionListener {
    private static final long serialVersionUID = 1L;

    private JLabel labelWav;
    private JButton jbtnRecognizer;
    private JButton jbtnCancel;
    private JButton jbtnStop;
    private JButton jbtnHome;
    private JButton jbtnSet;

    JTextArea resultArea;

    // Speech dictation object
    private SpeechRecognizer mIat;

    private JPopupMenu mSettingMenu = new JPopupMenu( "设置" ); // main menu

    private Map<String, String> mParamMap = new HashMap<String, String>();

    private String mSavePath = "./iat_test.pcm";
    private static final String VAL_TRUE = "1";

    private static class DefaultValue{
        public static final String ENG_TYPE = SpeechConstant.TYPE_CLOUD;
        public static final String SPEECH_TIMEOUT = "60000";
        public static final String NET_TIMEOUT = "20000";
        public static final String LANGUAGE = "zh_cn";

        public static final String ACCENT = "mandarin";
        public static final String DOMAIN = "iat";
        public static final String VAD_BOS = "5000";
        public static final String VAD_EOS = "1800";

        public static final String RATE = "16000";
        public static final String NBEST = "1";
        public static final String WBEST = "1";
        public static final String PTT = "1";

        public static final String RESULT_TYPE = "json";
        public static final String SAVE = "0";
    }

    private static final String DEF_FONT_NAME = "宋体";
    private static final int DEF_FONT_STYLE = Font.BOLD;
    private static final int DEF_FONT_SIZE = 30;
    private static final int TEXT_COUNT = 100;

    /**
     * Initializes the buttons (background image, size, click listener)
     * and the text area (font family and size).
     */
    public IatSpeechView() {

        jbtnRecognizer = addButton("res/button.png", "开始听写", 0, 320, 330, -1,
                "res/button");
        ImageIcon img = new ImageIcon("res/mic_01.png");
        labelWav = new JLabel(img);
        labelWav.setBounds(0, 0, img.getIconWidth(),
                img.getIconHeight() * 4 / 5);
        jbtnRecognizer.add(labelWav, BorderLayout.WEST);

        jbtnStop = addButton("res/button.png", "停止", 330, 320, 135, -1,
                "res/button");
        jbtnCancel = addButton("res/button.png", "取消", 465, 320, 135, -1,
                "res/button");

        jbtnHome = addButton("res/home.png", "", 20, 20, 1, 1, "res/home");
        jbtnSet = addButton( "res/setting.png", "", 534, 20, 1, 1, "res/setting" );

        resultArea = new JTextArea("");
        resultArea.setBounds(30, 110, 540, 400);
        resultArea.setOpaque(false);
        resultArea.setEditable(false);
        resultArea.setLineWrap(true);
        resultArea.setForeground(Color.BLACK);
        Font font = new Font(DEF_FONT_NAME, DEF_FONT_STYLE, DEF_FONT_SIZE);
        resultArea.setFont(font);

        setOpaque(false);
        setLayout(null);
        add(jbtnRecognizer);
        add(jbtnStop);
        add(jbtnCancel);
        add(resultArea);
        add(jbtnHome);
        add(jbtnSet);

        // Initialize the dictation object
        mIat=SpeechRecognizer.createRecognizer();

        jbtnRecognizer.addActionListener(this);
        jbtnHome.addActionListener(this);
        jbtnStop.addActionListener(this);
        jbtnCancel.addActionListener(this);
        jbtnSet.addActionListener( this );

        initParamMap();
        initMenu();
    }

    public JButton addButton(String imgName, String btnName, int x, int y,
            int imgWidth, int imgHeight, String iconPath) {

        JButton btn = null;
        ImageIcon img = new ImageIcon(imgName);
        btn = DrawableUtils.createImageButton(btnName, img, "center");
        int width = imgWidth, height = imgHeight;
        if (width == 1)
            width = img.getIconWidth();
        else if (width == -1)
            width = img.getIconHeight() * 4 / 5;

        if (height == 1)
            height = img.getIconWidth();
        else if (height == -1)
            height = img.getIconHeight() * 4 / 5;

        btn.setBounds(x, y, width, height);
        DrawableUtils.setMouseListener(btn, iconPath);

        return btn;
    }

    /***
     * Listener implementation: handles button presses.
     */
    public void actionPerformed(ActionEvent e) {

        if (e.getSource() == jbtnRecognizer) {
            setting();
            resultArea.setText( "" );
            if (!mIat.isListening())
                mIat.startListening(recognizerListener);
            else
                mIat.stopListening();
        } else if (e.getSource() == jbtnStop) {
            mIat.stopListening();
            iatSpeechInitUI();
        } else if (e.getSource() == jbtnCancel) {
            mIat.cancel();
            iatSpeechInitUI();
        } else if (e.getSource() == jbtnHome) {
            if (null != mIat ) {
                mIat.cancel();
                mIat.destroy();
            }
            JFrame frame = MainView.getFrame();
            frame.getContentPane().remove(this);
            JPanel panel = ((MainView) frame).getMainJPanel();
            frame.getContentPane().add(panel);
            frame.getContentPane().validate();
            frame.getContentPane().repaint();
        }else if( jbtnSet.equals(e.getSource()) ){
            DebugLog.Log( "actionPerformed setting" );
            mSettingMenu.show( this, this.jbtnSet.getX(), this.jbtnSet.getY()+50 );
        }
    }

    /**
     * Dictation listener
     */
    private RecognizerListener recognizerListener = new RecognizerListener() {

        @Override
        public void onBeginOfSpeech() {
            DebugLog.Log( "onBeginOfSpeech enter" );
            ((JLabel) jbtnRecognizer.getComponent(0)).setText("听写中...");
            jbtnRecognizer.setEnabled(false);
        }

        @Override
        public void onEndOfSpeech() {
            DebugLog.Log( "onEndOfSpeech enter" );
        }

        /**
         * Receives dictation results. Takes the RecognizerResult, accumulates it,
         * and shows it in the text area.
         */
        @Override
        public void onResult(RecognizerResult results, boolean isLast) {
            DebugLog.Log( "onResult enter" );

            // To parse the JSON result, refer to the com.iflytek.util.JsonParser class in this sample project
            // String text = JsonParser.parseIatResult(results.getResultString());

            String text = results.getResultString();
            StringBuilder sb = new StringBuilder();
            // String pattern = "[\\u4e00-\\u9fa5]+"; // matches Chinese characters only
            // Captures the value of every "w" key in the JSON text
            String pattern = "\"w\":\"(.*?)\"";
            Pattern r = Pattern.compile(pattern);
            resultArea.append(text);
            text = resultArea.getText();
            Matcher m = r.matcher(text);

            if( null!=text ){
                int n = text.length() / TEXT_COUNT + 1;
                int fontSize = Math.max( 10, DEF_FONT_SIZE - 2*n );
                DebugLog.Log( "onResult new font size="+fontSize );
                DebugLog.Log(text.getClass().getName());
                // Added code: join the recognized words and print them to the console
                while (m.find()) {
                    sb.append(m.group(1));
                }
                System.out.println(sb.toString());
                DebugLog.Log(text);
                // Plain style once the text gets long, the default style otherwise
                int style = n>1 ? Font.PLAIN : DEF_FONT_STYLE;
                Font newFont = new Font( DEF_FONT_NAME, style, fontSize );
                resultArea.setFont( newFont );
            }

            if( isLast ){
                iatSpeechInitUI();
            }
        }

        @Override
        public void onVolumeChanged(int volume) {
            DebugLog.Log( "onVolumeChanged enter" );
            if (volume == 0)
                volume = 1;
            else if (volume >= 6)
                volume = 6;
            labelWav.setIcon(new ImageIcon("res/mic_0" + volume + ".png"));
        }

        @Override
        public void onError(SpeechError error) {
            DebugLog.Log( "onError enter" );
            if (null != error){
                DebugLog.Log("onError Code:" + error.getErrorCode());
                resultArea.setText( error.getErrorDescription(true) );
                iatSpeechInitUI();
            }
        }

        @Override
        public void onEvent(int eventType, int arg1, int agr2, String msg) {
            DebugLog.Log( "onEvent enter" );
            // The code below is for debugging: if a problem occurs, the sid can be
            // given to iFlytek developers to help locate the issue
            /*if(eventType == SpeechEvent.EVENT_SESSION_ID) {
                DebugLog.Log("sid=="+msg);
            }*/
        }
    };

    /**
     * Dictation finished: restore the initial UI state.
     */
    public void iatSpeechInitUI() {

        labelWav.setIcon(new ImageIcon("res/mic_01.png"));
        jbtnRecognizer.setEnabled(true);
        ((JLabel) jbtnRecognizer.getComponent(0)).setText("开始听写");
    }

private void initparammap(){

this.mparammap.put( speechconstant.engine_type, defaultvalue.eng_type );

this.mparammap.put( speechconstant.sample_rate, defaultvalue.rate );

this.mparammap.put( speechconstant.net_timeout, defaultvalue.net_timeout );

this.mparammap.put( speechconstant.key_speech_timeout, defaultvalue.speech_timeout );

this.mparammap.put( speechconstant.language, defaultvalue.language );

this.mparammap.put( speechconstant.accent, defaultvalue.accent );

this.mparammap.put( speechconstant.domain, defaultvalue.domain );

this.mparammap.put( speechconstant.vad_bos, defaultvalue.vad_bos );

this.mparammap.put( speechconstant.vad_eos, defaultvalue.vad_eos );

this.mparammap.put( speechconstant.asr_nbest, defaultvalue.nbest );

this.mparammap.put( speechconstant.asr_wbest, defaultvalue.wbest );

this.mparammap.put( speechconstant.asr_ptt, defaultvalue.ptt );

this.mparammap.put( speechconstant.result_type, defaultvalue.result_type );

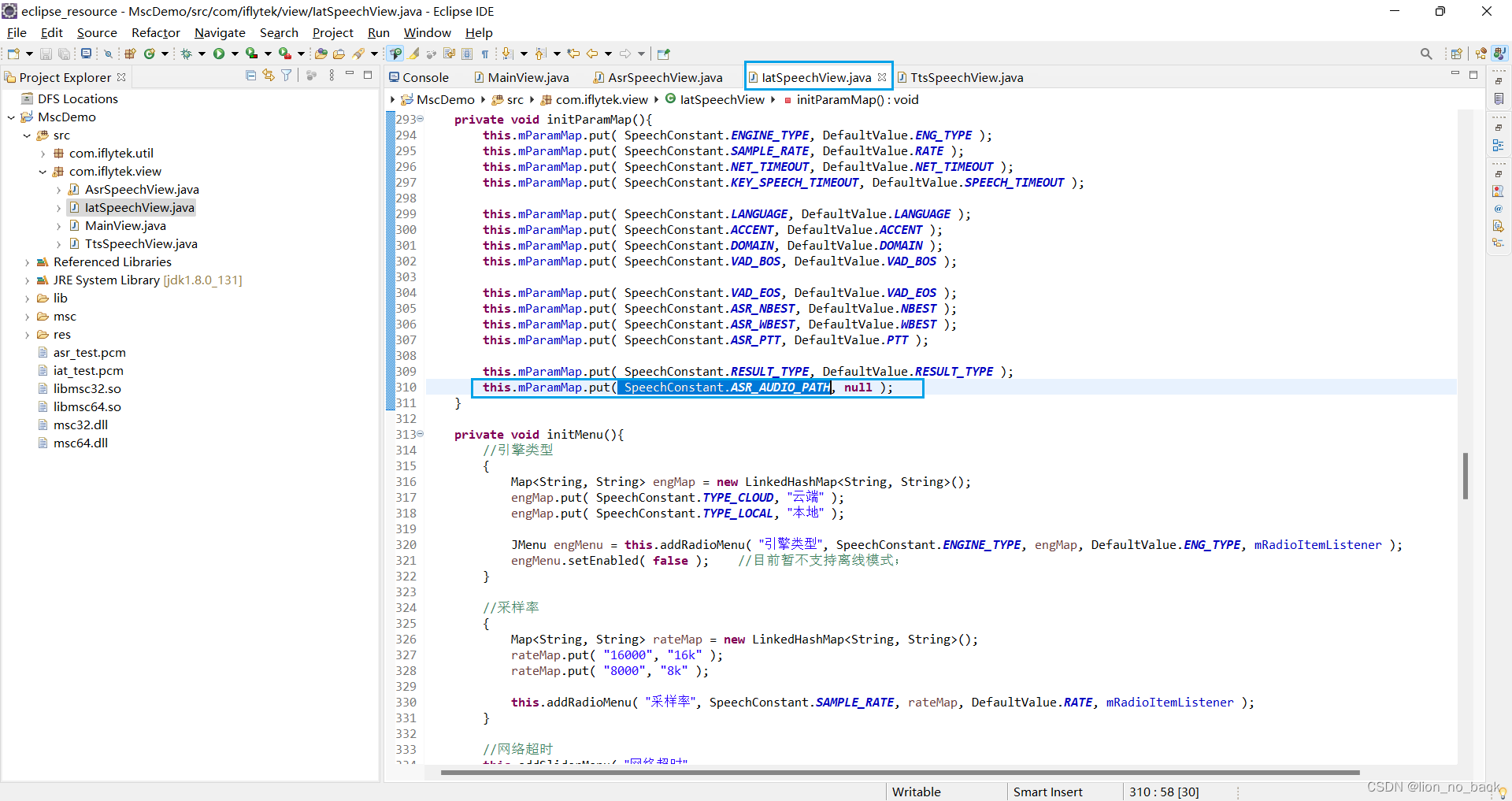

this.mparammap.put( speechconstant.asr_audio_path, null );

}

    private void initMenu(){
        // Engine type
        {
            Map<String, String> engMap = new LinkedHashMap<String, String>();
            engMap.put( SpeechConstant.TYPE_CLOUD, "云端" );
            engMap.put( SpeechConstant.TYPE_LOCAL, "本地" );

            JMenu engMenu = this.addRadioMenu( "引擎类型", SpeechConstant.ENGINE_TYPE, engMap, DefaultValue.ENG_TYPE, mRadioItemListener );
            engMenu.setEnabled( false ); // offline mode is not supported for now
        }

        // Sample rate
        {
            Map<String, String> rateMap = new LinkedHashMap<String, String>();
            rateMap.put( "16000", "16k" );
            rateMap.put( "8000", "8k" );

            this.addRadioMenu( "采样率", SpeechConstant.SAMPLE_RATE, rateMap, DefaultValue.RATE, mRadioItemListener );
        }

        // Network timeout
        this.addSliderMenu( "网络超时"
                , SpeechConstant.NET_TIMEOUT
                , 0
                , 30000
                , Integer.valueOf(DefaultValue.NET_TIMEOUT)
                , mChangeListener );

        // Recording timeout
        this.addSliderMenu( "录音超时"
                , SpeechConstant.KEY_SPEECH_TIMEOUT
                , 0
                , 60000
                , Integer.valueOf(DefaultValue.SPEECH_TIMEOUT)
                , mChangeListener );

        // Language
        {
            Map<String, String> languageMap = new LinkedHashMap<String, String>();
            languageMap.put( "zh_cn", "简体中文" );
            languageMap.put( "en_us", "美式英文" );

            this.addRadioMenu( "语言区域", SpeechConstant.LANGUAGE, languageMap, DefaultValue.LANGUAGE, mRadioItemListener );
        }

        // Dialect
        {
            Map<String, String> accentMap = new LinkedHashMap<String, String>();
            accentMap.put( "mandarin", "普通话" );
            accentMap.put( "cantonese", "粤语" );
            accentMap.put( "lmz", "湖南话" );
            accentMap.put( "henanese", "河南话" );

            this.addRadioMenu( "方言", SpeechConstant.ACCENT, accentMap, DefaultValue.ACCENT, mRadioItemListener );
        }

        // Domain
        {
            Map<String, String> domainMap = new LinkedHashMap<String, String>();
            domainMap.put( "iat", "日常用语" );
            domainMap.put( "music", "音乐" );
            domainMap.put( "poi", "地图" );
            domainMap.put( "vedio", "视频" );

            this.addRadioMenu( "领域", SpeechConstant.DOMAIN, domainMap, DefaultValue.DOMAIN, mRadioItemListener );
        }

        // Leading-silence (front endpoint) timeout
        this.addSliderMenu( "前端点超时"
                , SpeechConstant.VAD_BOS
                , 1000
                , 10000
                , Integer.valueOf(DefaultValue.VAD_BOS)
                , mChangeListener );

        // Trailing-silence (back endpoint) timeout
        this.addSliderMenu( "后端点超时"
                , SpeechConstant.VAD_EOS
                , 0
                , 10000
                , Integer.valueOf(DefaultValue.VAD_EOS)
                , mChangeListener );

        // Multiple sentence candidates
        {
            Map<String, String> nbestMap = new LinkedHashMap<String, String>();
            nbestMap.put( "1", "开" );
            nbestMap.put( "0", "关" );

            this.addRadioMenu( "句子多侯选", SpeechConstant.ASR_NBEST, nbestMap, DefaultValue.NBEST, mRadioItemListener );
        }

        // Multiple word candidates
        {
            Map<String, String> wbestMap = new LinkedHashMap<String, String>();
            wbestMap.put( "1", "开" );
            wbestMap.put( "0", "关" );

            this.addRadioMenu( "词语多侯选", SpeechConstant.ASR_WBEST, wbestMap, DefaultValue.WBEST, mRadioItemListener );
        }

        // Punctuation
        {
            Map<String, String> pttMap = new LinkedHashMap<String, String>();
            pttMap.put( "1", "开" );
            pttMap.put( "0", "关" );

            this.addRadioMenu( "标点符号", SpeechConstant.ASR_PTT, pttMap, DefaultValue.PTT, mRadioItemListener );
        }

        // Result type
        {
            Map<String, String> resultMap = new LinkedHashMap<String, String>();
            resultMap.put( "json", "json" );
            resultMap.put( "plain", "plain" );

            this.addRadioMenu( "结果类型", SpeechConstant.RESULT_TYPE, resultMap, DefaultValue.RESULT_TYPE, mRadioItemListener );
        }

        // Save audio
        {
            Map<String, String> saveMap = new LinkedHashMap<String, String>();
            saveMap.put( "1", "开" );
            saveMap.put( "0", "关" );

            this.addRadioMenu( "保存音频", SpeechConstant.ASR_AUDIO_PATH, saveMap, DefaultValue.SAVE, mRadioItemListener );
        }

    }//end of function initMenu

    private JMenu addRadioMenu( final String text, final String name, Map<String, String> cmd2Names, final String defaultVal, ActionListener actionListener ){
        JMenu menu = new JMenu( text );
        menu.setName( name );
        ButtonGroup group = new ButtonGroup();

        for( Entry<String, String>entry : cmd2Names.entrySet() ){
            JRadioButtonMenuItem item = new JRadioButtonMenuItem( entry.getValue(), false );
            item.setName( name );
            item.setActionCommand( entry.getKey() );
            item.addActionListener( actionListener );
            if( defaultVal.equals(entry.getKey()) ){
                item.setSelected( true );
            }

            group.add( item );
            menu.add( item );
        }

        this.mSettingMenu.add( menu );

        return menu;
    }

    private void addSliderMenu( final String text, final String name, final int min, final int max, final int defaultVal, ChangeListener changeListener ){
        JMenu menu = new JMenu( text );

        JSlider slider = new JSlider( SwingConstants.HORIZONTAL
                , min
                , max
                , defaultVal );

        slider.addChangeListener( this.mChangeListener );
        slider.setName( name );
        slider.setPaintTicks( true );
        slider.setPaintLabels( true );
        final int majarTick = Math.max( 1, (max-min)/5 );
        slider.setMajorTickSpacing( majarTick );
        slider.setMinorTickSpacing( majarTick/2 );
        menu.add( slider );

        this.mSettingMenu.add( menu );
    }

    // Radio item selection listener
    private ActionListener mRadioItemListener = new ActionListener(){

        @Override
        public void actionPerformed(ActionEvent event) {
            DebugLog.Log( "mRadioItemListener actionPerformed etner action command="+event.getActionCommand() );
            Object obj = event.getSource();
            if( obj instanceof JMenuItem ){
                JMenuItem item = (JMenuItem)obj;
                DebugLog.Log( "mRadioItemListener actionPerformed name="+item.getName()+", value="+event.getActionCommand() );
                String value = event.getActionCommand();
                if( SpeechConstant.ASR_AUDIO_PATH.equals(item.getName()) ){
                    value = VAL_TRUE.equalsIgnoreCase(value) ? mSavePath : null;
                }

                mParamMap.put( item.getName(), value );
            }else{
                DebugLog.Log( "mRadioItemListener actionPerformed source object is not JMenuItem" );
            }// end of if-else is object instance of JMenuItem

        }

    }; //end of mEngItemListener

    // Slider listener
    private ChangeListener mChangeListener = new ChangeListener(){

        @Override
        public void stateChanged(ChangeEvent event) {
            DebugLog.Log( "mChangeListener stateChanged enter" );
            Object obj = event.getSource();
            if( obj instanceof JSlider ){
                JSlider slider = (JSlider)obj;
                DebugLog.Log( "bar name="+slider.getName()+", value="+slider.getValue() );

                mParamMap.put( slider.getName(), String.valueOf(slider.getValue()) );
            }else{
                DebugLog.Log( "mChangeListener stateChanged source object is not JProgressBar" );
            }
        }

    };

    void setting(){
        final String engType = this.mParamMap.get(SpeechConstant.ENGINE_TYPE);

        for( Entry<String, String> entry : this.mParamMap.entrySet() ){
            mIat.setParameter( entry.getKey(), entry.getValue() );
        }

        // For local recognition, set the resources and start the engine
        if( SpeechConstant.TYPE_LOCAL.equals(engType) ){
            // Start the engine
            mIat.setParameter( ResourceUtil.ENGINE_START, SpeechConstant.ENG_ASR );

            // Set the resource path
            final String rate = this.mParamMap.get( SpeechConstant.SAMPLE_RATE );
            final String tag = rate.equals("16000") ? "16k" : "8k";
            String curPath = System.getProperty("user.dir");
            DebugLog.Log( "Current path="+curPath );
            String resPath = ResourceUtil.generateResourcePath( curPath+"/asr/common.jet" )
                    + ";" + ResourceUtil.generateResourcePath( curPath+"/asr/src_"+tag+".jet" );
            System.out.println( "resPath="+resPath );
            mIat.setParameter( ResourceUtil.ASR_RES_PATH, resPath );
        }// end of if is TYPE_LOCAL

    }// end of function setting

}

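The final recognition results are as follows:

你好呀,世界 ("Hello, world")

"hello world" and 碧水穿城过,青山入画来 ("Clear water runs through the city, green hills enter the painting")

The files are over 5 MB, so they are stored on my self-hosted image host; they should stay available for a few years.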

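Step 6: Extensions and outlook

At this point the development is essentially done. As an extension, you can look at how the audio is saved and at uploading a user vocabulary list.

By default the audio is not saved. I switched the setting to save it, but then could not find where it was saved.

Tracing through the code, I found how the setting is initialized.

The online file type identifier at https://www.fly63.com/tool/filetype/ could not recognize the file. So by default the audio is not saved, and once it is saved it cannot be played, which is really…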
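One possible explanation, offered as an assumption rather than something confirmed here: the listing sets the save path to "./iat_test.pcm" and the sample rate to 16000, and a raw PCM file has no header, so file type tools and most players cannot tell what it is. If the saved file really is raw 16-bit mono little-endian PCM (assumed, not verified), wrapping it in a WAV header with the JDK's javax.sound.sampled classes might make it playable. The class name PcmToWav below is made up for this sketch.

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;
import javax.sound.sampled.AudioFileFormat;
import javax.sound.sampled.AudioFormat;
import javax.sound.sampled.AudioInputStream;
import javax.sound.sampled.AudioSystem;

public class PcmToWav {
    /** Copies raw PCM samples into a WAV file so ordinary players can open it. */
    public static void convert(File pcm, File wav, float sampleRate) throws IOException {
        byte[] raw = Files.readAllBytes(pcm.toPath());
        // Assumed sample layout: 16-bit, mono, signed, little-endian.
        AudioFormat format = new AudioFormat(sampleRate, 16, 1, true, false);
        long frames = raw.length / format.getFrameSize();
        try (AudioInputStream in =
                new AudioInputStream(new ByteArrayInputStream(raw), format, frames)) {
            AudioSystem.write(in, AudioFileFormat.Type.WAVE, wav);
        }
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("usage: java PcmToWav <input.pcm> <output.wav>");
            return;
        }
        // 16000 matches the default sample rate used in the listing.
        convert(new File(args[0]), new File(args[1]), 16000f);
    }
}
```

For example, `java PcmToWav ./iat_test.pcm ./iat_test.wav` would be run from the program's working directory, which is where the relative path "./iat_test.pcm" would place the file.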

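Since I could not get audio saving to work, I skipped uploading the vocabulary list. The exploration has gone far enough for now.

Summary

Although I cannot understand much of the code in the source files, and I do not know how speech recognition and the other features are actually implemented, I can learn these gradually.

For now I am taking a borrow-and-adapt approach: experiment, make simple modifications, and get simple secondary development working.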
