1. Read the official documentation, log in, and download the SDK we need. Voice wake-up also requires us to set a wake word.

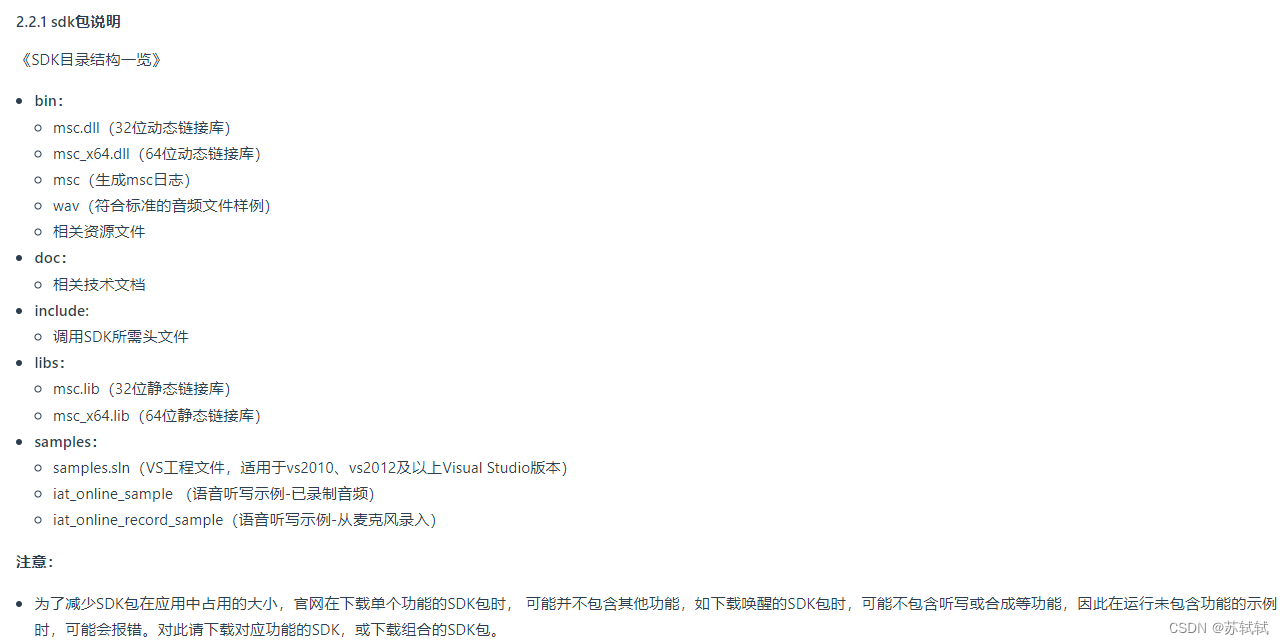

Download the matching SDK from the console. iFlytek only provides the SDK as a C/C++ library, so we have to call the DLLs in the downloaded SDK from C#.

2. Drag the DLLs into the Unity project

If the target device is 64-bit, use msc_x64.dll; if it is 32-bit, use msc.dll.
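Strictly speaking, what matters is the bitness of the Unity player process rather than of the machine: a process can only load native DLLs of its own bitness, so a 32-bit build running on 64-bit Windows still needs msc.dll. Because `[DllImport]` needs a compile-time constant, a single declaration cannot switch libraries at runtime. The sketch below only shows the decision itself; `MscLibrary` is a hypothetical helper, not part of the SDK or the demo.

```csharp
using System;

// Hypothetical helper (not part of the iFlytek SDK): picks the MSC library
// file that matches the bitness of the current process.
public static class MscLibrary
{
    public const string Library64 = "msc_x64.dll";
    public const string Library32 = "msc.dll";

    // A process can only load native DLLs of its own bitness,
    // so check the process rather than the operating system.
    public static string FileNameFor(bool is64BitProcess)
    {
        return is64BitProcess ? Library64 : Library32;
    }

    public static string Current => FileNameFor(Environment.Is64BitProcess);
}
```

Logging `MscLibrary.Current` once at startup is a quick way to confirm which of the two DLLs a given build expects.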

In addition, to use voice wake-up, we also need to drag wakeupresource.jet into the Unity project.
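The SDK locates wakeupresource.jet through the `ivw_res_path=fo|<path>` entry of the wake-up session parameters (the `fo|` format is described in the `MSPLogin` comments of the listing). The listing hardcodes an absolute path, which breaks once the project moves. A minimal sketch, assuming you compute the path at runtime instead: `IvwParams` is hypothetical, it keeps the parameter string exactly as the listing writes it, and reading `0:1450` as "wake word 0, threshold 1450" is my interpretation, so check the iFlytek docs for your SDK version.

```csharp
using System;

// Hypothetical helper: builds the QIVWSessionBegin parameter string used in
// this article from a resource path computed at runtime.
public static class IvwParams
{
    // threshold: wake-up threshold for the first wake word (the article uses 1450)
    public static string Build(string resourcePath, int threshold = 1450)
    {
        if (string.IsNullOrEmpty(resourcePath))
            throw new ArgumentException("resourcePath must not be empty", nameof(resourcePath));
        // Same format as the article: "ivw_res_path =fo|" followed by the file path
        return $"ivw_threshold=0:{threshold},sst=wakeup,ivw_res_path =fo|{resourcePath}";
    }
}
```

In the Editor, `Application.dataPath` points at the Assets folder, so `Path.Combine(Application.dataPath, "Speech", "Plugins", "wakeupresource.jet")` matches the article's layout. For a standalone build, as far as I know, only files under Assets/StreamingAssets are copied verbatim, so `Application.streamingAssetsPath` is likely the safer base there.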

3. Write C# code that calls the DLL

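For details on the speech synthesis and voice wake-up parts, see the article "unity 接入科大讯飞语音识别及语音合成_windwos" (Integrating iFlytek speech recognition and speech synthesis into Unity, Windows). The author shared a demo in the comments section, which was very kind!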

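[Note] When using the author's demo, put your own DLLs into it and replace the appid with your own (the appid is obtained by creating an application in the iFlytek developer console).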

Below is the code I adapted slightly after referring to vain_k's voice wake-up article and "unity 接入科大讯飞语音识别及语音合成_windwos":

Call the login method before using any speech feature, and call the logout method when you are done.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Runtime.InteropServices;
using System.Text;
using System.Threading;
using Unity.VisualScripting;
using UnityEngine;

/// <summary>
/// Speech utilities
/// </summary>
public static class Speech
{
    /* appid: obtained by creating an application in the iFlytek developer console */
    const string mAppID = "appid=11111111";

    // Path to wakeupresource.jet (replace with the absolute path in your own project)
    const string path = "d:\\unityproject\\voicetotext\\assets\\speech\\plugins\\wakeupresource.jet";

    // Wake-up session parameters: threshold, wake-up mode, path of the wake-up resource
    const string QIVW_SESSION_BEGIN_PARAMS = "ivw_threshold=0:1450,sst=wakeup,ivw_res_path =fo|" + path;

    // Set to true by the wake-up callback once the wake word is detected
    public static bool isAwaken = false;

    // Keep the callback delegate in a static field so the garbage collector
    // cannot collect it while the native SDK may still call it
    static readonly MSCDLL.ivw_ntf_handler ivwCallback = cb_ivw_msg_proc;

    /// <summary>
    /// Log in
    /// </summary>
    /// <returns>0 on success, otherwise an error code</returns>
    public static int MSPLogin()
    {
        int res = MSCDLL.MSPLogin(null, null, mAppID);
        if (res != 0)
        {
            Debug.Log($"Login failed. Error code: {res}");
        }
        else
            Debug.Log("Login succeeded");
        return res;
    }

    /// <summary>
    /// Log out
    /// </summary>
    public static void MSPLogout()
    {
        int error = MSCDLL.MSPLogout();
        if (error != 0)
            Debug.Log($"Logout failed. Error code: {error}");
        else
            Debug.Log("Logout succeeded");
    }

    /// <summary>
    /// iFlytek speech recognition
    /// </summary>
    /// <param name="clipBuffer">Audio data (16 kHz, 16-bit mono PCM)</param>
    /// <returns>Recognized text</returns>
    public static string Asr(byte[] clipBuffer)
    {
        /* Login is expected to have been done by the caller (see MSPLogin);
         * 0 means success, anything else is an error code */
        int res = 0;

        /* Start a recognition session.
         * The returned handle is needed by the later audio-write and get-result calls.
         * On success the error code is 0, otherwise it holds the error code.
         * Note:
         * The second argument is the parameter list for this session and can be wrapped further,
         * e.g. map a language enum to language=zh_cn for Chinese or language=en_us for English.
         * See the iFlytek SDK documentation for details. */
        IntPtr sessionID = MSCDLL.QISRSessionBegin(null,
            "sub=iat,domain=iat,language=zh_cn,accent=mandarin,sample_rate=16000,result_type=plain,result_encoding=utf-8", ref res);
        if (res != 0)
        {
            Debug.Log($"Begin failed. Error code: {res}");
            OnErrorEvent();
            return null;
        }

        /* End-point detection status */
        EpStatus epStatus = EpStatus.MSP_EP_LOOKING_FOR_SPEECH;
        /* Recognition status */
        RecogStatus recognizeStatus = RecogStatus.MSP_REC_STATUS_SUCCESS;

        /* Write the audio to be recognized; returns 0 on success, otherwise an error code */
        res = MSCDLL.QISRAudioWrite(sessionID, clipBuffer, (uint)clipBuffer.Length, AudioStatus.MSP_AUDIO_SAMPLE_CONTINUE, ref epStatus, ref recognizeStatus);
        if (res != 0)
        {
            Debug.Log($"Write failed. Error code: {res}");
            MSCDLL.QISRSessionEnd(sessionID, "error");
            OnErrorEvent();
            return null;
        }

        /* Send an empty last block to tell the SDK the audio is finished */
        res = MSCDLL.QISRAudioWrite(sessionID, null, 0, AudioStatus.MSP_AUDIO_SAMPLE_LAST, ref epStatus, ref recognizeStatus);
        if (res != 0)
        {
            Debug.Log($"Write failed. Error code: {res}");
            MSCDLL.QISRSessionEnd(sessionID, "error");
            OnErrorEvent();
            return null;
        }

        /* Accumulates the recognition result */
        StringBuilder sb = new StringBuilder();
        /* Running length of the result */
        int length = 0;

        /* After the audio is written, poll for results until recognition is complete */
        while (recognizeStatus != RecogStatus.MSP_REC_STATUS_COMPLETE)
        {
            IntPtr curRslt = MSCDLL.QISRGetResult(sessionID, ref recognizeStatus, 0, ref res);
            if (res != 0)
            {
                Debug.Log($"Get result failed. Error code: {res}");
                MSCDLL.QISRSessionEnd(sessionID, "error");
                OnErrorEvent();
                return null;
            }

            /* Append non-empty partial results to sb.
             * IntPtr is a value type, so compare it with IntPtr.Zero rather than null */
            if (curRslt != IntPtr.Zero)
            {
                string part = Marshal.PtrToStringAnsi(curRslt);
                // Count the decoded text, not IntPtr.ToString() (which is the pointer address)
                length += part.Length;
                if (length > 4096)
                {
                    Debug.Log($"Size not enough: {length} > 4096");
                    MSCDLL.QISRSessionEnd(sessionID, "error");
                    OnErrorEvent();
                    return sb.ToString();
                }
                sb.Append(part);
            }
            Thread.Sleep(150);
        }

        /* All results received: end this recognition session */
        res = MSCDLL.QISRSessionEnd(sessionID, "ao li gei !");
        if (res != 0) Debug.Log($"End failed. Error code: {res}");

        /* Logout is left to the caller; return the result */
        //MSPLogout();
        return sb.ToString();
    }

    /// <summary>
    /// iFlytek speech recognition
    /// </summary>
    /// <param name="path">Path of the audio file</param>
    /// <returns>Recognized text</returns>
    public static string Asr(string path)
    {
        if (string.IsNullOrEmpty(path))
        {
            Debug.Log("Path can not be null.");
            return null;
        }
        byte[] clipBuffer;
        try
        {
            clipBuffer = File.ReadAllBytes(path);
        }
        catch (Exception e)
        {
            Debug.Log($"Exception: {e.Message}");
            return null;
        }
        return Asr(clipBuffer);
    }

    /// <summary>
    /// iFlytek speech recognition
    /// </summary>
    /// <param name="clip">AudioClip to recognize (record at 16 kHz to match sample_rate=16000)</param>
    /// <returns>Recognized text</returns>
    public static string Asr(AudioClip clip)
    {
        // ToPCM16 is an extension method from the demo that is not part of this listing
        byte[] clipBuffer = clip.ToPCM16();
        return Asr(clipBuffer);
    }

    /// <summary>
    /// iFlytek speech synthesis
    /// </summary>
    /// <param name="content">Text to synthesize</param>
    /// <returns>Synthesized audio</returns>
    public static AudioClip Tts(string content, TTSVoice voice = TTSVoice.XuJiu)
    {
        /* Login is expected to have been done by the caller;
         * 0 means success, anything else is an error code */
        //int res = MSPLogin();
        int res = 0;

        /* Map the voice enum to an iFlytek voice_name */
        string voicer = "";
        switch (voice)
        {
            case TTSVoice.XiaoYan:
                voicer = "xiaoyan";
                break;
            case TTSVoice.XuJiu:
                voicer = "aisjiuxu";
                break;
            case TTSVoice.XiaoPing:
                voicer = "aisxping";
                break;
            case TTSVoice.XiaoJing:
                voicer = "aisjinger";
                break;
            case TTSVoice.XuXiaoBao:
                voicer = "aisbabyxu";
                break;
            default:
                break;
        }

        /* Start a synthesis session.
         * The returned handle is needed by the later text-write calls.
         * On success the error code is 0, otherwise it holds the error code.
         * Note:
         * The first argument is the parameter list for this session.
         * See the iFlytek SDK documentation for details. */
        IntPtr sessionID = MSCDLL.QTTSSessionBegin($"engine_type = cloud, voice_name = {voicer}, speed = 65, pitch = 40, text_encoding = utf8, sample_rate = 16000", ref res);
        if (res != 0)
        {
            Debug.Log($"Begin failed. Error code: {res}");
            OnErrorEvent();
            return null;
        }

        /* Write the text to synthesize; returns 0 on success, otherwise an error code */
        res = MSCDLL.QTTSTextPut(sessionID, content, (uint)Encoding.UTF8.GetByteCount(content), string.Empty);
        if (res != 0)
        {
            Debug.Log($"Put text failed. Error code: {res}");
            MSCDLL.QTTSSessionEnd(sessionID, "error");
            OnErrorEvent();
            return null;
        }

        /* Length of each audio chunk */
        uint audioLength = 0;
        /* Synthesis status */
        SynthStatus synthStatus = SynthStatus.MSP_TTS_FLAG_STILL_HAVE_DATA;
        List<byte[]> bytesList = new List<byte[]>();

        /* After the text is written, fetch the synthesized audio.
         * The error code is 0 on success.
         * Call repeatedly until synthesis has finished or an error code is returned */
        try
        {
            while (true)
            {
                IntPtr intPtr = MSCDLL.QTTSAudioGet(sessionID, ref audioLength, ref synthStatus, ref res);
                byte[] byteArray = new byte[(int)audioLength];
                if (audioLength > 0) Marshal.Copy(intPtr, byteArray, 0, (int)audioLength);
                bytesList.Add(byteArray);
                Thread.Sleep(150);
                if (synthStatus == SynthStatus.MSP_TTS_FLAG_DATA_END || res != 0)
                    break;
            }
        }
        catch (Exception e)
        {
            OnErrorEvent();
            Debug.Log($"Error: {e.Message}");
            return null;
        }
        if (res != 0)
        {
            Debug.Log($"Get audio failed. Error code: {res}");
            MSCDLL.QTTSSessionEnd(sessionID, "error");
            OnErrorEvent();
            return null;
        }

        /* Total PCM length */
        int size = 0;
        for (int i = 0; i < bytesList.Count; i++)
        {
            size += bytesList[i].Length;
        }
        /* GetWaveHeader expects the total file length: 44-byte header + PCM data */
        var header = GetWaveHeader(size + 44);
        byte[] array = header.ToBytes();
        bytesList.Insert(0, array);
        size += array.Length;
        byte[] bytes = new byte[size];
        size = 0;
        for (int i = 0; i < bytesList.Count; i++)
        {
            bytesList[i].CopyTo(bytes, size);
            size += bytesList[i].Length;
        }
        // ToBytes and ToWAV are extension methods from the demo that are not part of this listing
        AudioClip clip = bytes.ToWAV();

        res = MSCDLL.QTTSSessionEnd(sessionID, "ao li gei !");
        if (res != 0)
        {
            Debug.Log($"End failed. Error code: {res}");
            OnErrorEvent();
            return clip;
        }
        //MSPLogout();
        return clip;
    }

    /// <summary>
    /// iFlytek speech synthesis
    /// </summary>
    /// <param name="content">Text to synthesize</param>
    /// <param name="path">Path to write the synthesized audio to</param>
    /// <returns>true on success, false if an error occurs</returns>
    public static bool Tts(string content, string path)
    {
        /* This overload logs in by itself;
         * 0 means success, anything else is an error code */
        int res = MSCDLL.MSPLogin(null, null, mAppID);
        if (res != 0)
        {
            Debug.Log($"Login failed. Error code: {res}");
            return false;
        }

        /* Start a synthesis session.
         * The returned handle is needed by the later text-write calls.
         * On success the error code is 0, otherwise it holds the error code.
         * Note:
         * The first argument is the parameter list for this session.
         * See the iFlytek SDK documentation for details. */
        IntPtr sessionID = MSCDLL.QTTSSessionBegin("engine_type = cloud, voice_name = xiaoyan, text_encoding = utf8, sample_rate = 16000", ref res);
        if (res != 0)
        {
            Debug.Log($"Begin failed. Error code: {res}");
            OnErrorEvent();
            return false;
        }

        /* Write the text to synthesize; returns 0 on success, otherwise an error code */
        res = MSCDLL.QTTSTextPut(sessionID, content, (uint)Encoding.UTF8.GetByteCount(content), string.Empty);
        if (res != 0)
        {
            Debug.Log($"Put text failed. Error code: {res}");
            MSCDLL.QTTSSessionEnd(sessionID, "error");
            OnErrorEvent();
            return false;
        }

        /* Length of each audio chunk */
        uint audioLength = 0;
        /* Synthesis status */
        SynthStatus synthStatus = SynthStatus.MSP_TTS_FLAG_STILL_HAVE_DATA;
        /* Open a stream and reserve 44 bytes for the WAV header, filled in at the end */
        MemoryStream ms = new MemoryStream();
        ms.Write(new byte[44], 0, 44);

        /* After the text is written, fetch the synthesized audio.
         * The error code is 0 on success.
         * Call repeatedly until synthesis has finished or an error code is returned */
        try
        {
            while (true)
            {
                IntPtr intPtr = MSCDLL.QTTSAudioGet(sessionID, ref audioLength, ref synthStatus, ref res);
                byte[] byteArray = new byte[(int)audioLength];
                if (audioLength > 0) Marshal.Copy(intPtr, byteArray, 0, (int)audioLength);
                ms.Write(byteArray, 0, (int)audioLength);
                Thread.Sleep(150);
                if (synthStatus == SynthStatus.MSP_TTS_FLAG_DATA_END || res != 0)
                    break;
            }
        }
        catch (Exception e)
        {
            OnErrorEvent();
            Debug.Log($"Error: {e.Message}");
            return false;
        }
        if (res != 0)
        {
            Debug.Log($"Get audio failed. Error code: {res}");
            MSCDLL.QTTSSessionEnd(sessionID, "error");
            OnErrorEvent();
            return false;
        }

        /* ms.Length already includes the 44 reserved header bytes */
        var header = GetWaveHeader((int)ms.Length);
        byte[] array = header.ToBytes();
        ms.Position = 0L;
        ms.Write(array, 0, array.Length);
        ms.Position = 0L;
        using (FileStream fs = new FileStream(path, FileMode.Create, FileAccess.Write))
        {
            ms.WriteTo(fs);
        }
        ms.Close();

        res = MSCDLL.QTTSSessionEnd(sessionID, "ao li gei !");
        if (res != 0)
        {
            Debug.Log($"End failed. Error code: {res}");
            OnErrorEvent();
            return false;
        }
        res = MSCDLL.MSPLogout();
        if (res != 0)
        {
            Debug.Log($"Logout failed. Error code: {res}");
            return false;
        }
        return true;
    }

    /// <summary>
    /// iFlytek voice wake-up
    /// </summary>
    /// <param name="clipBuffer">Audio data</param>
    public static void MSCAwaken(byte[] clipBuffer)
    {
        /* Login must already have been done by the caller */
        int errorCode = 0;
        IntPtr sessionID = MSCDLL.QIVWSessionBegin(null, QIVW_SESSION_BEGIN_PARAMS, ref errorCode);
        if (errorCode != 0)
        {
            Debug.LogError("Failed to start voice wake-up! Error code: " + errorCode);
            OnErrorEvent();
            return;
        }

        // The audio can come from the method in the "Unity auto-record and clip audio save" article:
        //byte[] playerClipByte = AudioClipToByte(player.clip, start, end); // audio as byte[]

        // Pass the static delegate field, not the method group, so the delegate stays alive
        int message = MSCDLL.QIVWRegisterNotify(sessionID, ivwCallback, IntPtr.Zero);
        if (message == 0)
        {
            // Write all the audio in one call, flagged as the last block
            message = MSCDLL.QIVWAudioWrite(sessionID, clipBuffer, (uint)clipBuffer.Length, AudioStatus.MSP_AUDIO_SAMPLE_LAST);
            if (message != 0)
                Debug.LogError("Failed to write wake-up audio! Error code: " + message);
        }
        else
        {
            Debug.LogError("Failed to register the wake-up callback! Error code: " + message);
        }

        // Alternative (disabled): write the audio as CONTINUE, then send an empty LAST block
        //if (message != 0)
        //{
        //    Debug.LogError("Failed to write wake-up audio! Error code: " + message);
        //    OnErrorEvent();
        //    return;
        //}
        //Debug.Log("QIVWAudioWrite");
        //message = MSCDLL.QIVWAudioWrite(sessionID, null, 0, AudioStatus.MSP_AUDIO_SAMPLE_LAST);
        //if (message != 0)
        //{
        //    Debug.LogError("Failed to write wake-up audio! Error code: " + message);
        //    OnErrorEvent();
        //    return;
        //}

        message = MSCDLL.QIVWSessionEnd(sessionID, "wake-up finished");
        if (message != 0)
        {
            Debug.LogError("Failed to end voice wake-up! Error code: " + message);
            OnErrorEvent();
            return;
        }
        /* Logout is left to the caller */
    }

    /// <summary>
    /// Voice wake-up callback
    /// </summary>
    /// <param name="sessionID"></param>
    /// <param name="msg"></param>
    /// <param name="param1"></param>
    /// <param name="param2"></param>
    /// <param name="info"></param>
    /// <param name="userData"></param>
    /// <returns></returns>
    private static int cb_ivw_msg_proc(IntPtr sessionID, int msg, int param1, int param2, IntPtr info, IntPtr userData)
    {
        if (msg == (int)IvwStatus.MSP_IVW_MSG_ERROR) // wake-up error message
        {
            Debug.LogError("MSP_IVW_MSG_ERROR error code: " + param1);
        }
        else if (msg == (int)IvwStatus.MSP_IVW_MSG_WAKEUP) // wake-up success message
        {
            isAwaken = true;
            Debug.Log("Wake-up succeeded, running the callback");
        }
        return 0;
    }

    /* Log out after an error */
    static void OnErrorEvent()
    {
        int res = MSCDLL.MSPLogout();
        if (res != 0)
        {
            Debug.Log($"Logout failed. Error code: {res}");
        }
    }

    /* Build the WAV header (16 kHz, 16-bit, mono PCM).
     * fileLength is the total file length in bytes: 44-byte header + PCM data */
    static WaveHeader GetWaveHeader(int fileLength)
    {
        return new WaveHeader
        {
            RIFFID = 1179011410,     // "RIFF" as a little-endian int
            FileSize = fileLength - 8,
            RIFFType = 1163280727,   // "WAVE"
            FMTID = 544501094,       // "fmt "
            FMTSize = 16,
            FMTTag = 1,              // PCM
            FMTChannel = 1,          // mono
            FMTSamplesPerSec = 16000,
            AvgBytesPerSec = 32000,  // 16000 samples/s * 2 bytes
            BlockAlign = 2,
            BitsPerSample = 16,
            DataID = 1635017060,     // "data"
            DataSize = fileLength - 44
        };
    }
}
```
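`Speech` leaves login and logout to the caller (only the file-writing `Tts` overload logs in and out by itself), and an exception thrown between the two calls would skip the logout. One way to guarantee the pairing is an `IDisposable` scope. `MscLoginScope` below is a hypothetical helper, not part of the SDK or the demo; it takes the two calls as delegates, so it can be exercised without the DLL.

```csharp
using System;

// Hypothetical helper (not part of the SDK or the original demo): pairs a
// login call with a logout call so that logout also runs when an exception
// is thrown between them.
public sealed class MscLoginScope : IDisposable
{
    private readonly Func<int> logout;
    private bool disposed;

    // Return value of the login call: 0 on success, otherwise an SDK error code
    public int LoginResult { get; }

    public bool Succeeded => LoginResult == 0;

    public MscLoginScope(Func<int> login, Func<int> logout)
    {
        if (login == null) throw new ArgumentNullException(nameof(login));
        this.logout = logout ?? throw new ArgumentNullException(nameof(logout));
        LoginResult = login();
    }

    public void Dispose()
    {
        if (disposed) return;
        disposed = true;
        // Only log out after a successful login
        if (Succeeded) logout();
    }
}
```

Usage: `using (var s = new MscLoginScope(() => MSCDLL.MSPLogin(null, null, "appid=11111111"), MSCDLL.MSPLogout)) { if (s.Succeeded) { /* Speech.Asr / Speech.Tts */ } }`. Because `OnErrorEvent` already logs out after an SDK error, the scope's logout may then fail with an error code; the scope ignores that return value.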

```csharp
public class MSCDLL
{
    // Native library name without extension: "msc_x64" for 64-bit builds, "msc" for 32-bit builds
    const string DllName = "msc_x64";

    #region msp_cmn.h common APIs

    /// <summary>
    /// Initialize MSC and log in (user login).
    /// MSPLogin must be called before any other API; it can be called when the application starts.
    /// </summary>
    /// <param name="usr">User name. Reserved; pass null.</param>
    /// <param name="pwd">Password. Reserved; pass null.</param>
    /// <param name="parameters">Login parameters, written as key=value pairs joined by commas:
    /// Common:  appid - the application ID obtained after applying for the SDK on the iFlytek open platform
    /// Offline: engine_start - start offline engines; supported values: ivw (wake-up), asr (recognition)
    /// Offline: [xxx]_res_path - offline resource path for the ivw/asr engines
    ///   Full format: fo|[path]|[offset]|[length]|xx|xx
    ///   Single resource example: ivw_res_path=fo|res/ivw/wakeupresource.jet
    ///   Multiple resources example: asr_res_path=fo|res/asr/common.jet;fo|res/asr/sms.jet
    /// </param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int MSPLogin(string usr, string pwd, string parameters);

    /// <summary>
    /// Log out (user logout).
    /// Pairs with MSPLogin: call MSPLogout only after all other API calls have finished, otherwise the behavior is undefined.
    /// </summary>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int MSPLogout();

    /// <summary>
    /// Upload user data such as user config or a custom grammar.
    /// </summary>
    /// <param name="dataName">Data name; should be unique to distinguish it from other data.</param>
    /// <param name="data">Start address of the buffer to upload; data may be binary.</param>
    /// <param name="dataLen">Data length (excluding the trailing '\0' for strings).</param>
    /// <param name="_params">Upload parameters:
    /// Online: sub = uup,dtt = userword - upload a user word list
    /// Online: sub = uup,dtt = contact - upload contacts
    /// </param>
    /// <param name="errorCode">0 on success, otherwise an error code.</param>
    /// <returns>Data ID returned by the server, used for special commands.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern IntPtr MSPUploadData(string dataName, IntPtr data, uint dataLen, string _params, ref int errorCode);

    /// <summary>
    /// Write data to MSC, such as data to be uploaded or search text.
    /// </summary>
    /// <param name="data">Data buffer pointer; data may be binary.</param>
    /// <param name="dataLen">Length of data.</param>
    /// <param name="dataStatus">Data status: 2 = first or continuous, 4 = last.</param>
    /// <returns>0 on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int MSPAppendData(IntPtr data, uint dataLen, uint dataStatus);

    /// <summary>
    /// Download data such as user config.
    /// </summary>
    /// <param name="_params">Parameters describing the data to download.</param>
    /// <param name="dataLen">Length of the received data.</param>
    /// <param name="errorCode">0 on success, otherwise an error code.</param>
    /// <returns>Pointer to the received data (may be binary); null if the call failed or the data does not exist.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern IntPtr MSPDownloadData(string _params, ref uint dataLen, ref int errorCode);

    /// <summary>
    /// Set an MSC parameter; also used to initialize offline engines.
    /// </summary>
    /// <param name="paramName">Parameter name:
    /// Offline: engine_start - start an offline engine
    /// Offline: engine_destroy - destroy an offline engine
    /// </param>
    /// <param name="paramValue">Parameter value.</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int MSPSetParam(string paramName, string paramValue);

    /// <summary>
    /// Get MSC settings.
    /// </summary>
    /// <param name="paramName">Parameter name; only one parameter can be queried per call:
    /// Online: upflow - upstream data volume
    /// Online: downflow - downstream data volume
    /// </param>
    /// <param name="paramValue">Caller-allocated buffer that receives the value.</param>
    /// <param name="valueLen">In: buffer size. Out: actual length of the value (excluding '\0').</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int MSPGetParam(string paramName, [Out] byte[] paramValue, ref uint valueLen);

    /// <summary>
    /// Get the version of MSC or of a local engine.
    /// </summary>
    /// <param name="verName">Version name (older header notes list "msc", "aitalk", "aisound", "ivw"); only one can be queried per call:
    /// Offline: ver_msc - MSC version
    /// Offline: ver_asr - offline recognition version (not currently supported)
    /// Offline: ver_tts - offline synthesis version
    /// Offline: ver_ivw - offline wake-up version
    /// </param>
    /// <param name="errorCode">MSP_SUCCESS (0) on success, otherwise an error code.</param>
    /// <returns>Pointer to the version string on success; null on failure or if the data does not exist.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern IntPtr MSPGetVersion(string verName, ref int errorCode);

    #endregion

    #region qisr.h speech recognition

    /// <summary>
    /// Create a recognizer session (start one speech recognition).
    /// </summary>
    /// <param name="grammarList">Grammar list (inline grammar supports only one). Reserved; pass null.</param>
    /// <param name="_params">Session parameters:
    /// Common:  engine_type - engine type: cloud (online), local (offline)
    /// Online:  sub - request type: iat (dictation), asr (command-word recognition)
    /// Online:  language - zh_cn (Simplified Chinese), en_us (English)
    /// Online:  domain - iat (dictation)
    /// Online:  accent - mandarin (Mandarin Chinese)
    /// Common:  sample_rate - audio sample rate: 16000, 8000
    /// Common:  asr_threshold - offline grammar recognition threshold; only results with a confidence above it are returned (0-100)
    /// Offline: asr_denoise - noise reduction: 0 off, 1 on
    /// Offline: asr_res_path - path of the offline recognition resources
    /// Offline: grm_build_path - folder where the data generated by building an offline grammar is saved
    /// Common:  result_type - result format: plain, json
    /// Common:  text_encoding - encoding of the text carried in the parameters
    /// Offline: local_grammar - offline grammar ID obtained after building the grammar
    /// Common:  ptt - punctuation (only when sub=iat): 0 none, 1 with punctuation
    /// Online:  aue - audio codec and compression level: raw; speex; speex-wb; ico. Level: raw is uncompressed, speex 0-10
    /// Common:  result_encoding - encoding of the result string: plain: utf-8, gb2312; json: utf-8
    /// Common:  vad_enable - VAD switch; on by default, 0 (or false) turns it off
    /// Common:  vad_bos - maximum leading silence (not currently enabled)
    /// Common:  vad_eos - maximum trailing silence, 0-10000 ms, default 2000
    /// </param>
    /// <param name="errorCode">MSP_SUCCESS (0) on success, otherwise an error code.</param>
    /// <returns>Session ID (the handle for this recognition) on success; null on failure.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern IntPtr QISRSessionBegin(string grammarList, string _params, ref int errorCode);

    /// <summary>
    /// Write binary audio data to the recognizer.
    /// Call repeatedly until all audio has been written, updating audioStatus as you go:
    ///   first block: MSP_AUDIO_SAMPLE_FIRST
    ///   last block: MSP_AUDIO_SAMPLE_LAST
    ///   otherwise: MSP_AUDIO_SAMPLE_CONTINUE
    /// Also check epStatus and recogStatus regularly:
    ///   when epStatus shows the end point has been detected, MSC no longer accepts audio, so stop writing;
    ///   when recogStatus shows that results are available, they can be fetched from the MSC cache.
    /// </summary>
    /// <param name="sessionID">Handle returned by QISRSessionBegin.</param>
    /// <param name="waveData">Start of the audio data buffer.</param>
    /// <param name="waveLen">Audio data length in bytes.</param>
    /// <param name="audioStatus">Tells MSC whether audio sending is complete.</param>
    /// <param name="epStatus">State of the end-point detector.</param>
    /// <param name="recogStatus">Recognizer status, telling the caller when to start or stop fetching results.</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QISRAudioWrite(IntPtr sessionID, byte[] waveData, uint waveLen, AudioStatus audioStatus, ref EpStatus epStatus, ref RecogStatus recogStatus);

    /// <summary>
    /// Get the recognition result.
    /// Partial results can already be fetched while audio is still being written.
    /// After all audio is written, call this repeatedly until the result is complete (rsltStatus == 5) or an error code is returned.
    /// Note: if a successful call returns no result yet, sleep the current thread for a while to avoid wasting CPU.
    /// </summary>
    /// <param name="sessionID">Handle returned by QISRSessionBegin.</param>
    /// <param name="rsltStatus">Status of the recognition result; see the recogStatus parameter of QISRAudioWrite.</param>
    /// <param name="waitTime">Reserved.</param>
    /// <param name="errorCode">MSP_SUCCESS (0) on success, otherwise an error code.</param>
    /// <returns>Pointer to the result string when the call succeeds and a result is available; null otherwise.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern IntPtr QISRGetResult(IntPtr sessionID, ref RecogStatus rsltStatus, int waitTime, ref int errorCode);

    /// <summary>
    /// End the recognizer session and release all resources.
    /// Pairs with QISRSessionBegin; afterwards all resources tied to the handle (parameters, grammar, audio, instance, ...) are released and the handle must not be used again.
    /// </summary>
    /// <param name="sessionID">Handle returned by QISRSessionBegin.</param>
    /// <param name="hints">User-defined reason for ending the session; logged to the call log.</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QISRSessionEnd(IntPtr sessionID, string hints);

    /// <summary>
    /// Get information about the current recognition, such as upstream and downstream traffic.
    /// </summary>
    /// <param name="sessionID">Handle returned by QISRSessionBegin; pass IntPtr.Zero to get global MSC settings.</param>
    /// <param name="paramName">Parameter name; only one parameter can be queried per call:
    /// Online: sid - server-side session ID, 32 bytes long
    /// Online: upflow - upstream data volume
    /// Online: downflow - downstream data volume
    /// Common: volume - volume of the most recently written audio
    /// </param>
    /// <param name="paramValue">Caller-allocated buffer that receives the value.</param>
    /// <param name="valueLen">In: buffer size. Out: actual length of the value (excluding '\0').</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QISRGetParam(IntPtr sessionID, string paramName, [Out] byte[] paramValue, ref uint valueLen);

    #endregion

    #region qtts.h speech synthesis

    /// <summary>
    /// Create a TTS session and allocate synthesis resources.
    /// </summary>
    /// <param name="_params">Session parameters:
    /// Common:  engine_type - cloud (online), local (offline)
    /// Common:  voice_name - speaker; different speakers have different timbres (male, female, child, ...)
    /// Common:  speed - speaking rate, 0-100, default 50
    /// Common:  volume - 0-100, default 50
    /// Common:  pitch - 0-100, default 50
    /// Offline: tts_res_path - path of the synthesis resources; supports the fo| format
    /// Common:  rdn - number reading: 0 value preferred, 1 always as a value, 2 always as a digit string, 3 digit string preferred
    /// Offline: rcn - Chinese reading of "1": 0 = "yao", 1 = "yi"
    /// Common:  text_encoding (required) - text encoding: gb2312, gbk, big5, unicode, gb18030, utf8
    /// Common:  sample_rate - 16000 or 8000, default 16000
    /// Online:  background_sound - 0 no background music, 1 with background music
    /// Online:  aue - audio codec and compression level: raw; speex; speex-wb; ico. Level: raw is uncompressed, speex 0-10
    /// Online:  ttp - text type: text (plain), cssml
    /// Offline: speed_increase - 1 normal, 2 double speed, 4 quadruple speed
    /// Offline: effect - 0 none, 1 fading near and far, 2 echo, 3 robot, 4 chorus, 5 underwater, 6 reverb, 7 mocking tone
    /// </param>
    /// <param name="errorCode">MSP_SUCCESS (0) on success, otherwise an error code.</param>
    /// <returns>Session ID (the handle for this synthesis) on success; null on failure.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern IntPtr QTTSSessionBegin(string _params, ref int errorCode);

    /// <summary>
    /// Write the text to synthesize.
    /// </summary>
    /// <param name="sessionID">Handle returned by QTTSSessionBegin.</param>
    /// <param name="textString">Text to synthesize.</param>
    /// <param name="textLen">Text size in bytes; at most 8192 bytes.</param>
    /// <param name="_params">Parameters for this text only; currently empty.</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QTTSTextPut(IntPtr sessionID, string textString, uint textLen, string _params);

    /// <summary>
    /// Get synthesized audio.
    /// Call repeatedly until all audio has been fetched or the call fails.
    /// If no audio is available yet, sleep the current thread for a while to avoid wasting CPU.
    /// </summary>
    /// <param name="sessionID">Handle returned by QTTSSessionBegin.</param>
    /// <param name="audioLen">Length of the returned audio in bytes.</param>
    /// <param name="synthStatus">Synthesis status.</param>
    /// <param name="errorCode">MSP_SUCCESS (0) on success, otherwise an error code.</param>
    /// <returns>Non-null pointer when the call succeeds and audio is available; null on failure or when there is no audio.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern IntPtr QTTSAudioGet(IntPtr sessionID, ref uint audioLen, ref SynthStatus synthStatus, ref int errorCode);

    /// <summary>
    /// Get information about the synthesized audio data.
    /// </summary>
    /// <param name="sessionID">Handle returned by QTTSSessionBegin.</param>
    /// <returns>Audio info string.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern IntPtr QTTSAudioInfo(IntPtr sessionID);

    /// <summary>
    /// End the synthesis session and release all resources.
    /// Pairs with QTTSSessionBegin; afterwards all resources tied to the handle (parameters, text, instance, ...) are released and the handle must not be used again.
    /// </summary>
    /// <param name="sessionID">Handle returned by QTTSSessionBegin.</param>
    /// <param name="hints">User-defined reason for ending the session; logged to the call log.</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QTTSSessionEnd(IntPtr sessionID, string hints);

    /// <summary>
    /// Set parameters related to MSC.
    /// </summary>
    /// <param name="sessionID">Session handle; pass IntPtr.Zero for global parameters.</param>
    /// <param name="paramName">Parameter name.</param>
    /// <param name="paramValue">Parameter value buffer, allocated by the caller.</param>
    /// <returns>0 on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QTTSSetParam(IntPtr sessionID, string paramName, byte[] paramValue);

    /// <summary>
    /// Get information about the current synthesis, such as the text position of the current audio and upstream/downstream traffic.
    /// </summary>
    /// <param name="sessionID">Handle returned by QTTSSessionBegin; pass IntPtr.Zero to get global MSC settings.</param>
    /// <param name="paramName">Parameter name; only one parameter can be queried per call:
    /// Online: sid - server-side session ID, 32 bytes long
    /// Online: upflow - upstream data volume
    /// Online: downflow - downstream data volume
    /// Common: ced - end position in the text of the audio synthesized so far
    /// </param>
    /// <param name="paramValue">Caller-allocated buffer that receives the value.</param>
    /// <param name="valueLen">In: buffer size. Out: actual length of the value (excluding '\0').</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QTTSGetParam(IntPtr sessionID, string paramName, [Out] byte[] paramValue, ref uint valueLen);

    #endregion

    #region qivw.h voice wake-up

    /// <summary>
    /// Start voice wake-up with the parameters for this session.
    /// </summary>
    /// <param name="grammarList">Reserved; pass null.</param>
    /// <param name="_params">Wake-up session parameters.</param>
    /// <param name="errorCode">MSP_SUCCESS (0) on success, otherwise an error code (see the error code list).</param>
    /// <returns>Session ID (the handle for this wake-up) on success; null on failure.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern IntPtr QIVWSessionBegin(string grammarList, string _params, ref int errorCode);

    /// <summary>
    /// End this voice wake-up session.
    /// Pairs with QIVWSessionBegin; afterwards all resources tied to the handle (parameters, grammar, audio, instance, ...) are released and the handle must not be used again.
    /// </summary>
    /// <param name="sessionID">Handle returned by QIVWSessionBegin.</param>
    /// <param name="hints">User-defined reason for ending the session.</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QIVWSessionEnd(IntPtr sessionID, string hints);

    /// <summary>
    /// Write the audio for this wake-up session; call repeatedly until all audio has been written.
    /// </summary>
    /// <param name="sessionID">Handle returned by QIVWSessionBegin.</param>
    /// <param name="audioData">Start of the audio data buffer.</param>
    /// <param name="audioLen">Audio data length in bytes.</param>
    /// <param name="audioStatus">Tells MSC whether audio sending is complete:
    /// MSP_AUDIO_SAMPLE_FIRST = 1 - first block
    /// MSP_AUDIO_SAMPLE_CONTINUE = 2 - more audio follows
    /// MSP_AUDIO_SAMPLE_LAST = 4 - last block
    /// </param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QIVWAudioWrite(IntPtr sessionID, byte[] audioData, uint audioLen, AudioStatus audioStatus);

    /// <summary>
    /// Register the notification callback.
    /// </summary>
    /// <param name="sessionID">Handle returned by QIVWSessionBegin.</param>
    /// <param name="msgProcCb">Callback that receives the wake-up results. C signature:
    /// typedef int( *ivw_ntf_handler)( const char *sessionID, int msg, int param1, int param2, const void *info, void *userData );
    /// See the official documentation for the parameters.</param>
    /// <param name="userData">User data.</param>
    /// <returns>MSP_SUCCESS (0) on success, otherwise an error code.</returns>
    [DllImport(DllName, CallingConvention = CallingConvention.StdCall)]
    public static extern int QIVWRegisterNotify(IntPtr sessionID, [MarshalAs(UnmanagedType.FunctionPtr)] ivw_ntf_handler msgProcCb, IntPtr userData);

    // Callback type; cdecl matches the plain C typedef shown above
    [UnmanagedFunctionPointer(CallingConvention.Cdecl)]
    public delegate int ivw_ntf_handler(IntPtr sessionID, int msg, int param1, int param2, IntPtr info, IntPtr userData);

    #endregion
}
```
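`QISRGetResult` returns a pointer to a NUL-terminated string, and `Asr` decodes it with `Marshal.PtrToStringAnsi` while requesting `result_encoding=utf-8`. Whether that decodes correctly depends on the runtime: on .NET Framework for Windows, "ANSI" means the system code page, while Unity's Mono runtime is generally reported to treat it as UTF-8. If Chinese results ever come back garbled, decoding the bytes as UTF-8 explicitly sidesteps the question. `NativeText` is a hypothetical helper for that, not part of the SDK.

```csharp
using System;
using System.Runtime.InteropServices;
using System.Text;

// Hypothetical helper: reads a NUL-terminated UTF-8 string from unmanaged
// memory, independent of how the runtime defines "ANSI".
public static class NativeText
{
    public static string PtrToStringUtf8(IntPtr ptr)
    {
        if (ptr == IntPtr.Zero) return null;

        // Find the terminating '\0' byte
        int length = 0;
        while (Marshal.ReadByte(ptr, length) != 0) length++;

        // Copy the bytes out and decode them as UTF-8
        byte[] bytes = new byte[length];
        Marshal.Copy(ptr, bytes, 0, length);
        return Encoding.UTF8.GetString(bytes);
    }
}
```

In `Asr`, `string part = NativeText.PtrToStringUtf8(curRslt);` would replace the `PtrToStringAnsi` call; the pointer still belongs to the SDK and must not be freed by the caller.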

```csharp
#region QISR

/// <summary>
/// Tells MSC whether audio sending is complete
/// </summary>
public enum AudioStatus
{
    MSP_AUDIO_SAMPLE_INIT = 0x00,
    MSP_AUDIO_SAMPLE_FIRST = 0x01,    // first block of audio
    MSP_AUDIO_SAMPLE_CONTINUE = 0x02, // more audio follows
    MSP_AUDIO_SAMPLE_LAST = 0x04,     // last block of audio
}

/// <summary>
/// State of the end-point detector
/// </summary>
public enum EpStatus
{
    MSP_EP_LOOKING_FOR_SPEECH = 0, // the start of speech has not been detected yet
    MSP_EP_IN_SPEECH = 1,          // start of speech detected; audio is being processed normally
    MSP_EP_AFTER_SPEECH = 3,       // end of speech detected; any further audio is ignored by MSC
    MSP_EP_TIMEOUT = 4,            // timed out
    MSP_EP_ERROR = 5,              // an error occurred
    MSP_EP_MAX_SPEECH = 6,         // audio too long
    MSP_EP_IDLE = 7,               // internal state after stop and before start
}

/// <summary>
/// Recognizer status; tells the caller when to start or stop fetching results
/// </summary>
public enum RecogStatus
{
    MSP_REC_STATUS_SUCCESS = 0,    // recognition succeeded; call QISRGetResult to fetch (partial) results
    MSP_REC_STATUS_NO_MATCH = 1,   // recognition finished without a result
    MSP_REC_STATUS_INCOMPLETE = 2, // not finished; still recognizing
    MSP_REC_STATUS_NON_SPEECH_DETECTED = 3,
    MSP_REC_STATUS_SPEECH_DETECTED = 4,
    MSP_REC_STATUS_COMPLETE = 5,   // recognition finished
    MSP_REC_STATUS_MAX_CPU_TIME = 6,
    MSP_REC_STATUS_MAX_SPEECH = 7,
    MSP_REC_STATUS_STOPPED = 8,
    MSP_REC_STATUS_REJECTED = 9,
    MSP_REC_STATUS_NO_SPEECH_FOUND = 10,
    MSP_REC_STATUS_FAILURE = MSP_REC_STATUS_NO_MATCH,
}

#endregion
```
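The `QISRAudioWrite` notes describe streaming audio in blocks flagged FIRST, CONTINUE and LAST, whereas `Asr` writes the whole buffer as one CONTINUE block followed by an empty LAST block. A minimal sketch of the block-splitting side, assuming 16 kHz 16-bit mono PCM and a block size of 6400 bytes (200 ms), which is my own choice rather than a documented requirement. `AudioChunker` is hypothetical; its status values mirror `AudioStatus`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: splits a PCM buffer into blocks and tags each block
// with the status flag the SDK expects.
public static class AudioChunker
{
    // Same values as MSP_AUDIO_SAMPLE_FIRST / CONTINUE / LAST
    public const int SampleFirst = 1;
    public const int SampleContinue = 2;
    public const int SampleLast = 4;

    // 200 ms of 16 kHz, 16-bit, mono PCM: 32000 bytes/s * 0.2 s
    public const int DefaultChunkBytes = 6400;

    public static IEnumerable<(int Offset, int Length, int Status)> Split(int totalBytes, int chunkBytes = DefaultChunkBytes)
    {
        if (chunkBytes <= 0) throw new ArgumentOutOfRangeException(nameof(chunkBytes));
        for (int offset = 0; offset < totalBytes; offset += chunkBytes)
        {
            int length = Math.Min(chunkBytes, totalBytes - offset);
            bool isFirst = offset == 0;
            bool isLast = offset + length >= totalBytes;
            // A buffer that fits in one block is sent as a single LAST block
            int status = isLast ? SampleLast : isFirst ? SampleFirst : SampleContinue;
            yield return (offset, length, status);
        }
    }
}
```

Each tuple corresponds to one `MSCDLL.QISRAudioWrite` call with `(AudioStatus)chunk.Status`. Since that import takes a `byte[]`, copy each slice into its own array first (for example with `Buffer.BlockCopy`), and stop writing once `epStatus` reports `MSP_EP_AFTER_SPEECH`.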

#region qtts

/// <summary>

/// 合成状态

/// </summary>

public enum synthstatus

{

msp_tts_flag_cmd_cancleed = 0,

msp_tts_flag_still_have_data = 1, //音频还没获取完 还有后续的音频

msp_tts_flag_data_end = 2, //音频已经获取完

}

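/// <summary>
/// WAV file header
/// </summary>
public struct waveheader
{
    public int riffid;            // "RIFF"
    public int filesize;          // file size minus 8 bytes
    public int rifftype;          // "WAVE"
    public int fmtid;             // "fmt "
    public int fmtsize;           // size of the fmt chunk (16 for PCM)
    public short fmttag;          // audio format (1 = PCM)
    public ushort fmtchannel;     // number of channels
    public int fmtsamplespersec;  // sample rate in Hz
    public int avgbytespersec;    // bytes per second
    public ushort blockalign;     // bytes per sample frame
    public ushort bitspersample;  // bits per sample
    public int dataid;            // "data"
    public int datasize;          // size of the PCM data in bytes
}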

public enum ttsvoice
{
    xiaoyan = 0,    // iFlytek Xiaoyan
    xujiu = 1,      // iFlytek Xu Jiu
    xiaoping = 2,   // iFlytek Xiaoping
    xiaojing = 3,   // iFlytek Xiaojing
    xuxiaobao = 4,  // iFlytek Xu Xiaobao
}

public enum synthesizingstatus
{
    msp_tts_flag_still_have_data = 1,
    msp_tts_flag_data_end = 2,
    msp_tts_flag_cmd_canceled = 4,
}

/* handwriting process flags */
public enum handwritingstatus
{
    msp_hcr_data_first = 1,
    msp_hcr_data_continue = 2,
    msp_hcr_data_end = 4,
}

/* ivw (wake-up) message types */
public enum ivwstatus
{
    msp_ivw_msg_wakeup = 1,
    msp_ivw_msg_error = 2,
    msp_ivw_msg_isr_result = 3,
    msp_ivw_msg_isr_eps = 4,
    msp_ivw_msg_volume = 5,
    msp_ivw_msg_enroll = 6,
    msp_ivw_msg_reset = 7
}

/* upload data process flags */
public enum uploadstatus
{
    msp_data_sample_init = 0x00,
    msp_data_sample_first = 0x01,
    msp_data_sample_continue = 0x02,
    msp_data_sample_last = 0x04,
}
#endregion
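QTTSAudioGet hands back raw PCM in pieces, and synthesizingstatus says whether more is coming. As I understand the SDK's tts sample, the caller keeps fetching until msp_tts_flag_data_end and then fills in a header like waveheader so the bytes form a playable WAV file. A simulated sketch; `TtsOutputSketch`, the `FetchChunk` delegate standing in for QTTSAudioGet, and the fixed 16 kHz / 16-bit / mono format are assumptions, and the header is written field by field with BinaryWriter rather than by marshalling the waveheader struct.

```csharp
using System;
using System.IO;
using System.Text;

public static class TtsOutputSketch
{
    // Values copied from synthesizingstatus above.
    public const int StillHaveData = 1;
    public const int DataEnd = 2;

    // Stands in for QTTSAudioGet: returns a chunk (may be null), the synth status and an error code.
    public delegate byte[] FetchChunk(out int synthStatus, out int errorCode);

    // Collects raw PCM until the end of the data (or an error), then prepends a WAV header.
    public static byte[] CollectAsWav(FetchChunk fetchChunk, int sampleRate = 16000, int maxCalls = 10000)
    {
        using (var pcm = new MemoryStream())
        {
            for (int i = 0; i < maxCalls; i++)
            {
                byte[] chunk = fetchChunk(out int status, out int error);
                if (error != 0) break;                            // any non-zero code is an SDK error
                if (chunk != null) pcm.Write(chunk, 0, chunk.Length);
                if (status == DataEnd) break;                     // synthesis finished
            }
            byte[] header = BuildHeader((int)pcm.Length, sampleRate);
            byte[] wav = new byte[header.Length + pcm.Length];
            Buffer.BlockCopy(header, 0, wav, 0, header.Length);
            Buffer.BlockCopy(pcm.ToArray(), 0, wav, header.Length, (int)pcm.Length);
            return wav;
        }
    }

    // Canonical 44-byte header for 16-bit mono PCM, fields in the same order as waveheader.
    public static byte[] BuildHeader(int dataSize, int sampleRate)
    {
        const short channels = 1, bitsPerSample = 16;
        short blockAlign = (short)(channels * bitsPerSample / 8);
        using (var ms = new MemoryStream(44))
        using (var w = new BinaryWriter(ms))                      // BinaryWriter writes little-endian
        {
            w.Write(Encoding.ASCII.GetBytes("RIFF"));             // riffid
            w.Write(36 + dataSize);                               // filesize (bytes after this field)
            w.Write(Encoding.ASCII.GetBytes("WAVE"));             // rifftype
            w.Write(Encoding.ASCII.GetBytes("fmt "));             // fmtid
            w.Write(16);                                          // fmtsize for PCM
            w.Write((short)1);                                    // fmttag: 1 = PCM
            w.Write(channels);                                    // fmtchannel
            w.Write(sampleRate);                                  // fmtsamplespersec
            w.Write(sampleRate * blockAlign);                     // avgbytespersec
            w.Write(blockAlign);                                  // blockalign
            w.Write(bitsPerSample);                               // bitspersample
            w.Write(Encoding.ASCII.GetBytes("data"));             // dataid
            w.Write(dataSize);                                    // datasize
            w.Flush();
            return ms.ToArray();
        }
    }
}
```

With this layout the channel count sits at byte 22 and the sample rate at byte 24, which is where the wav class that follows reads them.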

public class wav
{
    // Convert two bytes to one float in the range -1 to 1
    static float bytestofloat(byte firstbyte, byte secondbyte)
    {
        // Convert two bytes to one short (little-endian)
        short s = (short)((secondbyte << 8) | firstbyte);
        // Convert to the range -1 to (just below) 1
        return s / 32768.0f;
    }

    static int bytestoint(byte[] bytes, int offset = 0)
    {
        int value = 0;
        for (int i = 0; i < 4; i++)
        {
            value |= ((int)bytes[offset + i]) << (i * 8);
        }
        return value;
    }

    // Properties
    public float[] leftchannel { get; internal set; }
    public float[] rightchannel { get; internal set; }
    public int channelcount { get; internal set; }
    public int samplecount { get; internal set; }
    public int frequency { get; internal set; }

    public wav(byte[] wav)
    {
        // Determine whether the audio is mono or stereo
        channelcount = wav[22]; // ignore byte 23, since practically all WAV files have 1 or 2 channels
        // Get the sample rate
        frequency = bytestoint(wav, 24);
        // Skip the other sub-chunks to reach the "data" sub-chunk
        int pos = 12; // the first sub-chunk ID occupies bytes 12 to 15
        // Keep iterating until we find the data chunk: bytes 64 61 74 61 ("data", i.e. 100 97 116 97 in decimal)
        while (!(wav[pos] == 100 && wav[pos + 1] == 97 && wav[pos + 2] == 116 && wav[pos + 3] == 97))
        {
            pos += 4;
            int chunksize = wav[pos] + wav[pos + 1] * 256 + wav[pos + 2] * 65536 + wav[pos + 3] * 16777216;
            pos += 4 + chunksize;
        }
        pos += 8;
        // pos now points at the start of the actual sample data
        samplecount = (wav.Length - pos) / 2;     // 2 bytes per sample (16-bit mono)
        if (channelcount == 2) samplecount /= 2;  // 4 bytes per sample frame (16-bit stereo)
        // Allocate memory (rightchannel stays null for mono audio)
        leftchannel = new float[samplecount];
        if (channelcount == 2) rightchannel = new float[samplecount];
        else rightchannel = null;
        // Write to the float array(s); iterating by samplecount keeps a trailing odd byte from overrunning the arrays
        for (int i = 0; i < samplecount; i++)
        {
            leftchannel[i] = bytestofloat(wav[pos], wav[pos + 1]);
            pos += 2;
            if (channelcount == 2)
            {
                rightchannel[i] = bytestofloat(wav[pos], wav[pos + 1]);
                pos += 2;
            }
        }
    }

    public override string ToString()
    {
        return string.Format("[wav: leftchannel={0}, rightchannel={1}, channelcount={2}, samplecount={3}, frequency={4}]", leftchannel, rightchannel, channelcount, samplecount, frequency);
    }

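}

4. Write the code that ties it all together (automatic recording + voice wake-up + speech synthesis + speech recognition + the Tongyi Qianwen LLM)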

For how to call the Tongyi Qianwen LLM from Unity, see my article: unity使用通义千问大模型接口 (Using the Tongyi Qianwen LLM API in Unity).

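The automatic recording code again draws on vain_k's article 自动录音+截取音频保存 (automatic recording + trimming and saving audio). Building on the work of both authors, I made the following additions and changes: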
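The script that follows decides when to cut a recording with a simple volume gate: it starts as soon as the volume reaches maxvolume and stops once more than minvolume_sum quiet frames (below minvolume) have been counted. A Unity-free sketch of just that decision, so it can be tested without a microphone; `VolumeGate` and `GateEvent` are hypothetical names and the defaults are the script's values (3, 2, 200). One deliberate difference: here any louder frame resets the quiet count, whereas the script keeps accumulating quiet frames across the whole utterance, so short pauses in a long question add up.

```csharp
public enum GateEvent { None, Started, Stopped }

public sealed class VolumeGate
{
    private readonly float openLevel;          // plays the role of maxvolume
    private readonly float closeLevel;         // plays the role of minvolume
    private readonly int quietFramesToClose;   // plays the role of minvolume_sum
    private int quietFrames;

    public bool IsRecording { get; private set; }

    public VolumeGate(float openLevel = 3f, float closeLevel = 2f, int quietFramesToClose = 200)
    {
        this.openLevel = openLevel;
        this.closeLevel = closeLevel;
        this.quietFramesToClose = quietFramesToClose;
    }

    // Feed one volume reading per frame; reports whether recording started or stopped on this frame.
    public GateEvent Step(float volume)
    {
        if (!IsRecording)
        {
            if (volume < openLevel) return GateEvent.None;
            IsRecording = true;
            quietFrames = 0;
            return GateEvent.Started;
        }
        if (volume >= closeLevel)
        {
            quietFrames = 0;   // only an uninterrupted pause counts
            return GateEvent.None;
        }
        quietFrames++;
        if (quietFrames <= quietFramesToClose) return GateEvent.None;
        IsRecording = false;
        quietFrames = 0;
        return GateEvent.Stopped;
    }
}
```

Using two thresholds (open at 3, close below 2) is a form of hysteresis: a voice hovering around a single threshold would otherwise toggle recording on and off every frame.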

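using System;
using System.Collections;
using System.IO;
using System.Threading;
using UnityEngine;

public class AutomaticRecord : MonoBehaviour
{
    [SerializeField] private float volume;           // current volume
    [SerializeField] private float minvolume = 2;    // a frame below this volume counts as quiet (used to stop recording)
    [SerializeField] private float maxvolume = 3;    // recording starts at or above this volume
    [SerializeField] private int minvolume_number;   // number of quiet frames counted so far
    private const int minvolume_sum = 200;           // quiet frames required before recording stops
    private const int volume_data_length = 128;      // samples inspected per volume measurement
    private const int frequency = 16000;             // sample rate in Hz
    private const int lengthsec = 600;               // length of the looping microphone buffer in seconds
    private AudioSource audiosource;                 // AudioSource holding the microphone clip
    private bool isrecord = false;                   // whether we are currently recording
    private bool islogout = false;                   // whether we have already logged out
    private bool isplayed = true;                    // whether the last reply has finished playing (only then is a new question accepted)
    private int start;                               // recording start position (sample index)
    private int end;                                 // recording end position (sample index)
    private string voicetext;
    private AudioClip aiaudioclip;
    public AudioSource _audiosource;                 // AudioSource that plays the synthesized replies
    private float time = 0;                          // idle timer
    private float timelimit = 25;                    // seconds without interaction before going back to sleep
    public qwturbo qw;

    private void Start()
    {
        audiosource = GetComponent<AudioSource>();
        // null = default microphone; loop = true, so the clip acts as a ring buffer
        audiosource.clip = Microphone.Start(null, true, lengthsec, frequency);
    }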

    private void Update()
    {
        volume = getvolume(audiosource.clip, volume_data_length);
        recordopenclose();
        // No interaction for a long time: leave the awake state
        if (speech.isawaken && isplayed)
        {
            Debug.Log("Timing idle period");
            time += Time.deltaTime;
            if (time > timelimit)
            {
                awakeclose();
                time = 0;
            }
        }
    }

    private void OnDisable()
    {
        if (!islogout)
            speech.msplogout();
    }

    /// <summary>
    /// Starts and stops recording automatically based on the volume
    /// </summary>
    private void recordopenclose()
    {
        // Only listen once the previous reply has finished playing
        if (isplayed)
        {
            // Start recording
            if (!isrecord && volume >= maxvolume)
            {
                // null = the same default device that Microphone.Start(null, ...) records from
                start = Microphone.GetPosition(null);
                isrecord = true;
                Debug.Log("Auto recording started: " + start);
                minvolume_number = 0;
            }
            // Stop recording
            if (isrecord && volume < minvolume)
            {
                // Only stop once enough quiet frames have been counted
                if (minvolume_number > minvolume_sum)
                {
                    end = Microphone.GetPosition(null);
                    minvolume_number = 0;
                    isrecord = false;
                    byte[] clipbuffer = audiocliptobyte(audiosource.clip, start, end);
                    //File.WriteAllBytes(Application.streamingAssetsPath + "/audio.wav", clipbuffer);
                    // Not awake yet: check whether the recording contains the wake word
                    if (!speech.isawaken)
                    {
                        if (speech.msplogin() == 0)
                        {
                            islogout = false;
                            speech.mscawaken(clipbuffer);
                            // Give the wake-up callback time to fire (blocks the main thread for 150 ms)
                            Thread.Sleep(150);
                            Debug.Log("Awakened: " + speech.isawaken);
                            if (!speech.isawaken)
                            {
                                // Not the wake word: still asleep, so log out
                                speech.msplogout();
                                islogout = true;
                            }
                            else
                            {
                                // Wake word detected: the digital human responds ("I'm here, how can I help you?")
                                aiaudioclip = speech.tts("我在,有什么我可以帮助你的吗?");
                                StartCoroutine(playaudiotofinish(aiaudioclip));
                            }
                        }
                    }
                    else // already awake
                    {
                        // Hold a conversation
                        voicetext = speech.asr(clipbuffer);
                        if (string.IsNullOrEmpty(voicetext))
                        {
                            Debug.Log("No speech input detected yet");
                        }
                        else
                        {
                            if (qw == null)
                            {
                                Debug.LogError("qw is null!");
                                speech.msplogout();
                                islogout = true;
                                return;
                            }
                            // Send the recognized text to Tongyi Qianwen and receive its reply in callback
                            qw.postmsg(voicetext, callback);
                        }
                    }
                    Debug.Log("Auto recording stopped: " + end);
                }
                minvolume_number++;
            }
        }
    }

    private void callback(string backmsg)
    {
        // Synthesize the LLM's reply and play it
        aiaudioclip = speech.tts(backmsg);
        StartCoroutine(playaudiotofinish(aiaudioclip));
    }

    IEnumerator playaudiotofinish(AudioClip audioclip)
    {
        if (audioclip == null)
            yield break; // synthesis failed, nothing to play
        // Block new recordings while the reply is playing
        isplayed = false;
        _audiosource.PlayOneShot(audioclip);
        // WaitForSeconds waits without blocking the main thread
        yield return new WaitForSeconds(audioclip.length);
        Debug.Log("Audio finished playing");
        isplayed = true;
        time = 0;
    }

    private void awakeclose()
    {
        // Farewell line ("Call me if you need anything"), then go back to sleep
        aiaudioclip = speech.tts("有什么需要再叫我。");
        StartCoroutine(playaudiotofinish(aiaudioclip));
        speech.isawaken = false;
        speech.msplogout();
        islogout = true;
    }

    /// <summary>
    /// Gets the current volume
    /// </summary>
    /// <param name="clip">audio clip</param>
    /// <param name="lengthvolume">number of recent samples to inspect</param>
    /// <returns>peak value of the most recent samples, scaled by 99</returns>
    private float getvolume(AudioClip clip, int lengthvolume)
    {
        if (Microphone.IsRecording(null))
        {
            float peak = 0f;
            // Buffer for the most recent samples
            float[] volumedata = new float[lengthvolume];
            // Start position of the most recent lengthvolume samples
            int offset = Microphone.GetPosition(null) - (lengthvolume + 1);
            if (offset < 0)
                return 0f;
            // Read the samples
            clip.GetData(volumedata, offset);
            // Find the peak value
            for (int i = 0; i < lengthvolume; i++)
            {
                float tempvolume = volumedata[i];
                if (tempvolume > peak)
                    peak = tempvolume;
            }
            return peak * 99;
        }
        return 0;
    }

    /// <summary>
    /// Converts part of an AudioClip to 16-bit PCM bytes
    /// </summary>
    /// <param name="clip">audio clip</param>
    /// <param name="star">start position (sample index)</param>
    /// <param name="end">end position (sample index)</param>
    /// <returns>little-endian 16-bit PCM data</returns>
    public byte[] audiocliptobyte(AudioClip clip, int star, int end)
    {
        float[] data;
        if (end > star)
            data = new float[end - star];
        else
            // The looping microphone buffer wrapped around; GetData continues from the clip start
            data = new float[clip.samples - star + end];
        clip.GetData(data, star);
        int rescalefactor = 32767; // converts a float in [-1, 1] to Int16
        byte[] outdata = new byte[data.Length * 2];
        for (int i = 0; i < data.Length; i++)
        {
            short temshort = (short)(Mathf.Clamp(data[i], -1f, 1f) * rescalefactor);
            byte[] temdata = BitConverter.GetBytes(temshort);
            outdata[i * 2] = temdata[0];
            outdata[i * 2 + 1] = temdata[1];
        }
        return outdata;
    }
}
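audiocliptobyte relies on the microphone clip being a ring buffer: because Microphone.Start is called with loop = true, end is smaller than star once recording has wrapped past the end of the clip, so the sample count becomes clip.samples - star + end, and per the Unity documentation AudioClip.GetData wraps around to the beginning of the clip when a read runs past its end. A Unity-free sketch of the same arithmetic and the float to 16-bit little-endian conversion; `PcmUtil` and its methods are hypothetical, treating equal positions as an empty recording is an assumption, and clamping to [-1, 1] is an added safeguard against overflow when casting to short.

```csharp
using System;

public static class PcmUtil
{
    // Samples between two positions in a looping buffer; equal positions are treated as empty (assumption).
    public static int SpanLength(int start, int end, int bufferSamples)
    {
        return end >= start ? end - start : bufferSamples - start + end;
    }

    // Copies count samples starting at start, wrapping to index 0 at the end of the buffer.
    public static float[] ReadWrapped(float[] ring, int start, int count)
    {
        var result = new float[count];
        for (int i = 0; i < count; i++)
            result[i] = ring[(start + i) % ring.Length];
        return result;
    }

    // Converts float samples to 16-bit little-endian PCM, clamping out-of-range values first.
    public static byte[] ToPcm16(float[] samples)
    {
        var bytes = new byte[samples.Length * 2];
        for (int i = 0; i < samples.Length; i++)
        {
            float clamped = Math.Max(-1f, Math.Min(1f, samples[i]));
            short s = (short)(clamped * short.MaxValue);
            bytes[2 * i] = (byte)(s & 0xFF);             // low byte first
            bytes[2 * i + 1] = (byte)((s >> 8) & 0xFF);  // then high byte
        }
        return bytes;
    }
}
```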

5. Parts of the code that could be improved

1. Replace the separate bool flags (islogout, isplayed, speech.isawaken) with a single enum describing the digital human's state:

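public enum state
{
    sleep,   // not awake
    listen,  // awake, waiting for a question
    think,   // awake, thinking
    speak    // awake, speaking
}

This way one state value can be read and changed from anywhere in the project.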

The idle timer does not run in the think and speak states; it runs only in the listen state.

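2. I no longer remember the details (sob), but as far as I recall, asking a question just as the idle timer was about to run out triggered a bug: the sleep line ("有什么需要再叫我", "Call me if you need anything") and the digital human's answer played at the same time.

The fix is probably to set the state to state.sleep when the closing line "有什么需要再叫我" is played.

Then add a check to the coroutine that plays the audio.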

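The code below only illustrates the idea (the project had already changed between writing the first and second halves of this post T_T):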

IEnumerator playingaudio(AudioClip audioclip)
{
    isendofaudio = false;
    // Keep the closing line "有什么需要再叫我" from changing the state
    if (aistate._aistate != state.sleep)
        aistate._aistate = state.speak;
    mysource.clip = audioclip;
    mysource.PlayOneShot(audioclip);
    yield return new WaitForSeconds(audioclip.length);
    if (aistate._aistate != state.sleep)
        aistate._aistate = state.listen;
    mytime = 0;
}
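Putting both suggestions together, here is a Unity-free sketch in which a single state field replaces the bool flags, the idle timer only advances while listening, and the sleep state set by the timeout cannot be overwritten by a late speak or listen transition. `AssistantSketch`, `AssistantState` and their members are hypothetical names; the 25-second default is timelimit from the script, and playing the farewell and answer audio is left to the caller, which is expected to check State before starting an answer.

```csharp
public enum AssistantState { Sleep, Listen, Think, Speak }

public sealed class AssistantSketch
{
    private readonly float idleLimitSeconds;
    private float idleSeconds;

    public AssistantState State { get; private set; } = AssistantState.Sleep;

    public AssistantSketch(float idleLimitSeconds = 25f)
    {
        this.idleLimitSeconds = idleLimitSeconds;
    }

    // Wake word recognized: start listening with a fresh idle timer.
    public void WakeUp()
    {
        State = AssistantState.Listen;
        idleSeconds = 0f;
    }

    // A question was recorded and sent to the model.
    public void BeginThinking()
    {
        if (State == AssistantState.Listen) State = AssistantState.Think;
    }

    // Reply audio starts; as in the coroutine above, never leave Sleep here.
    public void BeginSpeaking()
    {
        if (State != AssistantState.Sleep) State = AssistantState.Speak;
    }

    // Reply audio ended: back to listening, unless the assistant was put to sleep meanwhile.
    public void FinishSpeaking()
    {
        if (State == AssistantState.Speak)
        {
            State = AssistantState.Listen;
            idleSeconds = 0f;
        }
    }

    // Call once per frame; returns true exactly when the idle limit is exceeded and the assistant falls asleep.
    public bool Tick(float deltaSeconds)
    {
        if (State != AssistantState.Listen) return false;   // no timing while asleep, thinking or speaking
        idleSeconds += deltaSeconds;
        if (idleSeconds <= idleLimitSeconds) return false;
        State = AssistantState.Sleep;                        // set before the farewell line plays
        idleSeconds = 0f;
        return true;
    }
}
```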
