创建服务器,参考

| 节点名字 | 节点ip | 系统版本 | ||

| master11 | 192.168.50.11 | centos 8.5 | ||

| slave12 | 192.168.50.12 | centos 8.5 | ||

| slave13 | 192.168.50.13 | centos 8.5 |

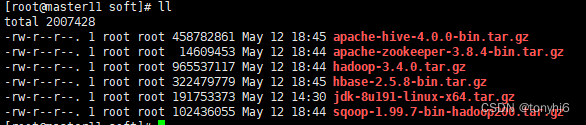

1 下载组件

hadoop:官网地址

hbase:官网地址

zookeeper:官网下载

hive:官网下载

sqoop:官网下载

为方便同学们下载,特整理到网盘

2 通过xftp 上传软件到服务器,统一放到/data/soft/

3 配置zookeeper

tar zxvf apache-zookeeper-3.8.4-bin.tar.gz

mv apache-zookeeper-3.8.4-bin/ /data/zookeeper

#修改配置文件

cd /data/zookeeper/conf

cp zoo_sample.cfg zoo.cfg

#创建数据保存目录

mkdir -p /data/zookeeper/zkdata

mkdir -p /data/zookeeper/logs

vim zoo.cfg

datadir=/tmp/zookeeper-->datadir=/data/zookeeper/zkdata

datalogdir=/data/zookeeper/logs

server.1=master11:2888:3888

server.2=slave12:2888:3888

server.3=slave13:2888:3888

#配置环境变量

vim /etc/profile

export zookeeper_home=/data/zookeeper

export path=$path:$zookeeper_home/bin

source /etc/profile#新建myid并且写入对应的myid

[root@master11 zkdata]# cat myid

1

#对应修改

slave12

myid--2

slave13

myid--34 配置hbase

tar zxvf hbase-2.5.8-bin.tar.gz

mv hbase-2.5.8/ /data/hbase

mkdir -p /data/hbase/logs

#vim /etc/profile

export hbase_log_dir=/data/hbase/logs

export hbase_manages_zk=false

export hbase_home=/data/hbase

export path=$path:$zookeeper_home/bin

#vim /data/hbase/conf/regionservers

slave12

slave13

#新建backup-masters

vim /data/hbase/conf/backup-masters

slave12

#vim /data/hbase/conf/hbase-site.xml

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!--hbase端口-->

<property>

<name>hbase.master.info.port</name>

<value>16010</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>master11,slave12,slave13</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://master11:9000/hbase</value>

</property>

<property>

<name>hbase.wal.provider</name>

<value>filesystem</value>

</property>5 配置hadoop

tar zxvf hadoop-3.4.0.tar.gz

mv hadoop-3.4.0/ /data/hadoop

#配置环境变量

vim /etc/profile

export hadoop_home=/data/hadoop

export path=$path:$hadoop_home/bin:$path:$hadoop_home/sbin

source /etc/profile

#查看版本

[root@master11 soft]# hadoop version

hadoop 3.4.0

source code repository git@github.com:apache/hadoop.git -r bd8b77f398f626bb7791783192ee7a5dfaeec760

compiled by root on 2024-03-04t06:35z

compiled on platform linux-x86_64

compiled with protoc 3.21.12

from source with checksum f7fe694a3613358b38812ae9c31114e

this command was run using /data/hadoop/share/hadoop/common/hadoop-common-3.4.0.jar6 修改hadoop配置文件

#core-site.xml

vim /data/hadoop/etc/hadoop/core-site.xml

#增加如下

<configuration>

<property>

<name>fs.defaultfs</name>

<value>hdfs://master11</value>

</property>

<!-- hadoop 本地数据存储目录 format 时自动生成 -->

<property>

<name>hadoop.tmp.dir</name>

<value>/data/hadoop/data/tmp</value>

</property>

<!-- 在 webui访问 hdfs 使用的用户名。-->

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>master11:2181,slave12:2181,slave13:2181</value>

</property>

<property>

<name>ha.zookeeper.session-timeout.ms</name>

<value>10000</value>

</property>

</configuration>

#hdfs-site.xml

vim /data/hadoop/etc/hadoop/hdfs-site.xml

<configuration>

<!-- 副本数dfs.replication默认值3,可不配置 -->

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- 节点数据存储地址 -->

<property>

<name>dfs.namenode.name.dir</name>

<value>/data/hadoop/data/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/data/hadoop/data/dfs/data</value>

</property>

<!-- 主备配置 -->

<!-- 为namenode集群定义一个services name -->

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<!-- 声明集群有几个namenode节点 -->

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<!-- 指定 rpc通信地址 的地址 -->

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>master11:8020</value>

</property>

<!-- 指定 rpc通信地址 的地址 -->

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>slave12:8020</value>

</property>

<!-- http通信地址 web端访问地址 -->

<property>

<name>dfs.namenode.http-address.mycluster.nn1</name>

<value>master11:50070</value>

</property>

<!-- http通信地址 web 端访问地址 -->

<property>

<name>dfs.namenode.http-address.mycluster.nn2</name>

<value>slave12:50070</value>

</property>

<!-- 声明journalnode集群服务器 -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://master11:8485;slave12:8485;slave13:8485/mycluster</value>

</property>

<!-- 声明journalnode服务器数据存储目录 -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/data/hadoop/data/dfs/jn</value>

</property>

<!-- 开启namenode失败自动切换 -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- 隔离:同一时刻只能有一台服务器对外响应 -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<!-- 配置失败自动切换实现方式,通过configuredfailoverproxyprovider这个类实现自动切换 -->

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.configuredfailoverproxyprovider</value>

</property>

<!-- 指定上述选项ssh通讯使用的密钥文件在系统中的位置。 -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<!-- 配置sshfence隔离机制超时时间(active异常,standby如果没有在30秒之内未连接上,那么standby将变成active) -->

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- 开启hdfs允许创建目录的权限,配置hdfs-site.xml -->

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<!-- 使用host+hostname的配置方式 -->

<property>

<name>dfs.namenode.datanode.registration.ip-hostname-check</name>

<value>false</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<!-- 开启自动化: 启动zkfc -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<property>

<name>ipc.client.connect.max.retries</name>

<value>100</value>

<description>indicates the number of retries a client will make to establish a server connection.</description>

</property>

<property>

<name>ipc.client.connect.retry.interval</name>

<value>10000</value>

<description>indicates the number of milliseconds a client will wait for before retrying to establish a server connection.</description>

</property>

</configuration>

#yarn-site.xml

vi /data/hadoop/etc/hadoop/yarn-site.xml

<configuration>

<!-- 指定yarn占电脑资源,默认8核8g -->

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>2</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>4096</value>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://master11:19888/jobhistory/logs</value>

</property>

<!-- 指定 mr 走 shuffle -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- 开启日志聚集功能 -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- 设置日志保留时间为 7 天 -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>86400</value>

</property>

<!-- 主备配置 -->

<!-- 启用resourcemanager ha -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>my-yarn-cluster</value>

</property>

<!-- 声明两台resourcemanager的地址 -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>slave12</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>slave13</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>slave12:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>slave13:8088</value>

</property>

<!-- 指定zookeeper集群的地址 -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>master11:2181,slave12:2181,slave13:2181</value>

</property>

<!-- 启用自动恢复 -->

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<!-- 指定resourcemanager的状态信息存储在zookeeper集群 -->

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.zkrmstatestore</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>2.1</value>

</property>

<property>

<name>mapred.child.java.opts</name>

<value>-xmx1024m</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm1</name>

<value>slave12:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>slave12:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm1</name>

<value>slave12:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm1</name>

<value>slave12:8033</value>

</property>

<property>

<name>yarn.nodemanager.address.rm1</name>

<value>slave12:8041</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm2</name>

<value>slave13:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>slave13:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm2</name>

<value>slave13:8031</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm2</name>

<value>slave13:8033</value>

</property>

<property>

<name>yarn.nodemanager.address.rm2</name>

<value>slave13:8041</value>

</property>

<property>

<name>yarn.nodemanager.localizer.address</name>

<value>0.0.0.0:8040</value>

</property>

<property>

<description>nm webapp address.</description>

<name>yarn.nodemanager.webapp.address</name>

<value>0.0.0.0:8042</value>

</property>

<property>

<name>yarn.nodemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8041</value>

</property>

<property>

<name>yarn.application.classpath</name>

<value>/data/hadoop/etc/hadoop:/data/hadoop/share/hadoop/common/lib/*:/data/hadoop/share/hadoop/common/*:/data/hadoop/share/hadoop/hdfs:/data/hadoop/share/hadoop/hdfs/lib/*:/data/hadoop/share/hadoop/hdfs/*:/data/hadoop/share/hadoop/mapreduce/lib/*:/data/hadoop/share/hadoop/mapreduce/*:/data/hadoop/share/hadoop/yarn:/data/hadoop/share/hadoop/yarn/lib/*

:/data/hadoop/share/hadoop/yarn/*</value> </property>

</configuration>

#修改workers

vi /data/hadoop/etc/hadoop/workers

master11

slave12

slave137 分发文件和配置

#master11

cd /data/

scp -r hadoop/ slave12:/data

scp -r hadoop/ slave13:/data

scp -r hbase/ slave13:/data

scp -r hbase/ slave12:/data

scp -r zookeeper/ slave12:/data

scp -r zookeeper/ slave13:/data

#3台服务器的/etc/profile 变量一致

export java_home=/usr/local/jdk

export path=$java_home/bin:$path

classpath=.:$java_home/lib/dt.jar:$java_home/lib/tools.jar

export classpath

export hadoop_home=/data/hadoop

export path=$path:$hadoop_home/bin:$path:$hadoop_home/sbin

export zookeeper_home=/data/zookeeper

export path=$path:$zookeeper_home/bin

#

export hbase_log_dir=/data/hbase/logs

export hbase_manages_zk=false

export hbase_home=/data/hbase

export path=$path:$hbase_home/bin

export hive_home=/data/hive

export path=$path:$hive_home/bin

export hdfs_namenode_user=root

export hdfs_datanode_user=root

export hdfs_secondarynamenode_user=root

export yarn_resourcemanager_user=root

export yarn_nodemanager_user=root

export hdfs_zkfc_user=root

export hdfs_datanode_secure_user=root

export hdfs_journalnode_user=root

8 集群启动

#ha模式第一次或删除在格式化版本

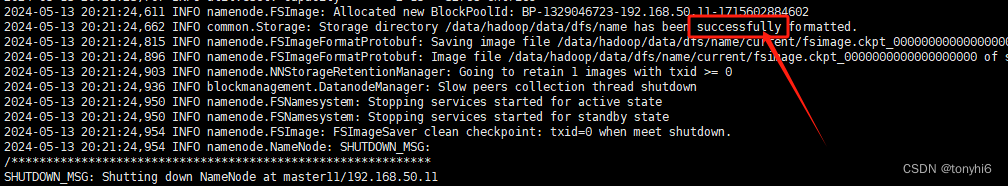

#第一次需要格式化,master11上面

start-dfs.sh

hdfs namenode -format

ll /data/hadoop/data/dfs/name/current/

total 16

-rw-r--r--. 1 root root 399 may 13 20:21 fsimage_0000000000000000000

-rw-r--r--. 1 root root 62 may 13 20:21 fsimage_0000000000000000000.md5

-rw-r--r--. 1 root root 2 may 13 20:21 seen_txid

-rw-r--r--. 1 root root 218 may 13 20:21 version

#同步数据到slave12节点(其余namenode节点)

scp -r /data/hadoop/data/dfs/name/* slave12:/data/hadoop/data/dfs/name/

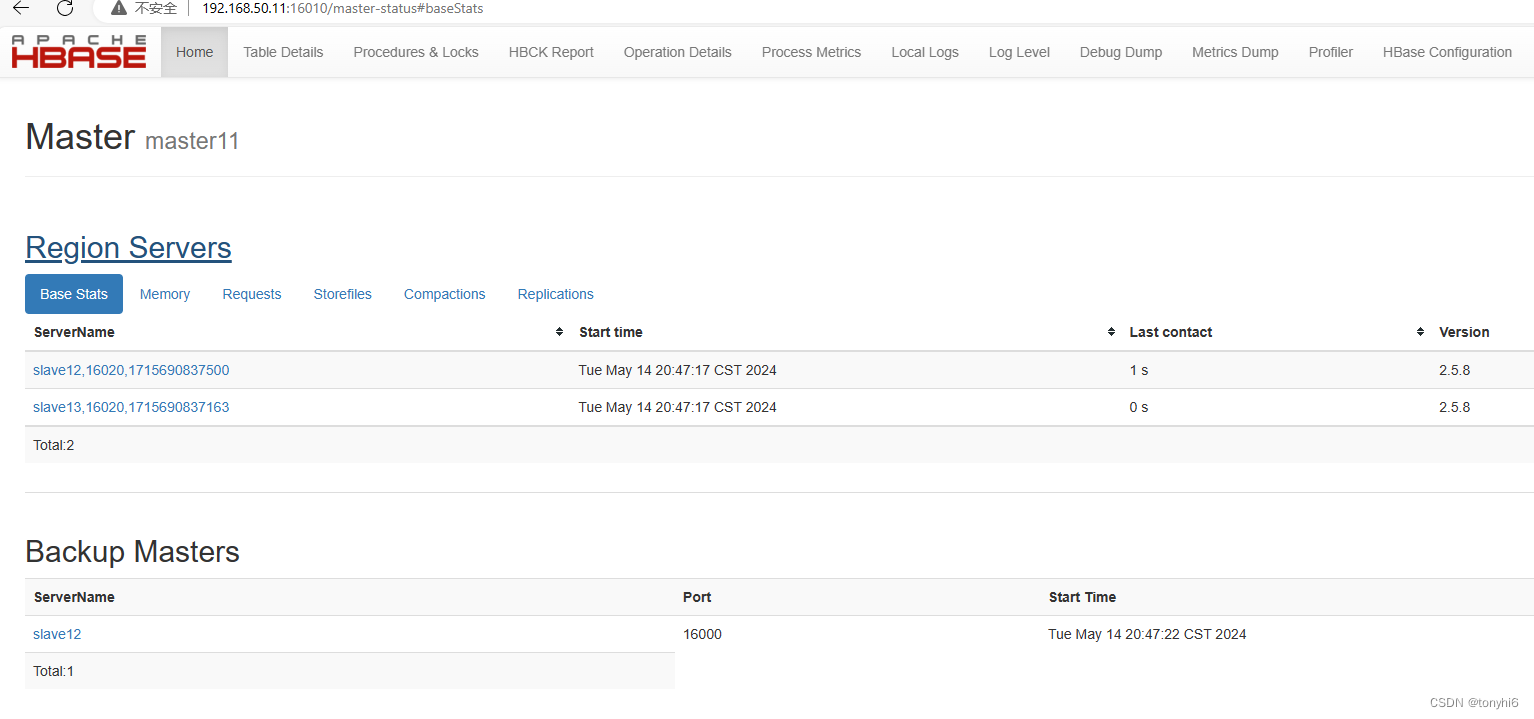

#成功如图

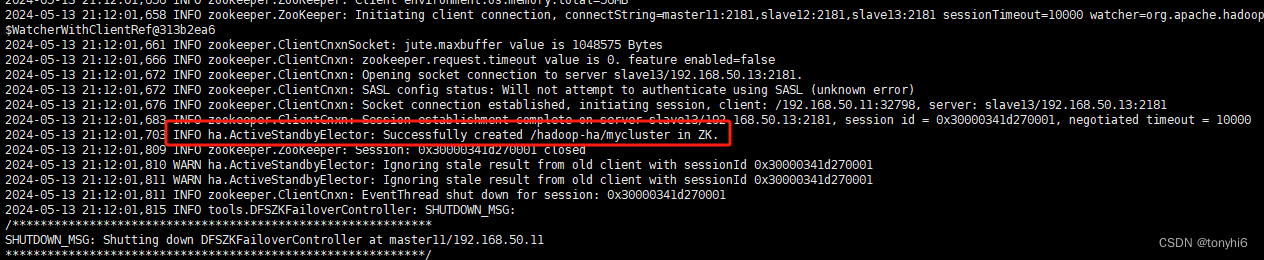

#在任意一台 namenode上初始化 zookeeper 中的 ha 状态

[root@master11 hadoop]# jps

2400 quorumpeermain

4897 jps

3620 journalnode

3383 datanode

#

hdfs zkfc -formatzk

#如下图

#集群正常启动顺序

#zookeeper,3台服务器都执行

zkserver.sh start

#查看

[root@master11 ~]# zkserver.sh status

zookeeper jmx enabled by default

using config: /data/zookeeper/bin/../conf/zoo.cfg

client port found: 2181. client address: localhost. client ssl: false.

mode: follower

[root@slave12 data]# zkserver.sh status

zookeeper jmx enabled by default

using config: /data/zookeeper/bin/../conf/zoo.cfg

client port found: 2181. client address: localhost. client ssl: false.

mode: leader

[root@slave13 ~]# zkserver.sh status

zookeeper jmx enabled by default

using config: /data/zookeeper/bin/../conf/zoo.cfg

client port found: 2181. client address: localhost. client ssl: false.

mode: follower

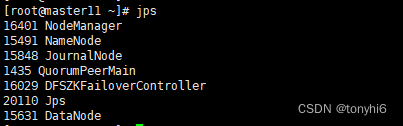

#master11 ,hadoop集群一键启动

start-all.sh start

#一键停止

stop-all.sh

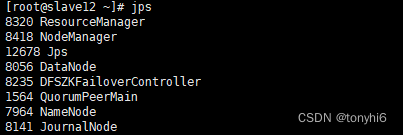

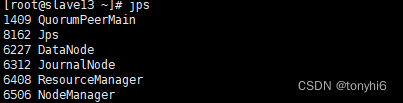

#jps 查看如图

#查看集群状态

#namenode

[root@master11 ~]# hdfs haadmin -getservicestate nn1

active

[root@master11 ~]# hdfs haadmin -getservicestate nn2

standby

[root@master11 ~]# hdfs haadmin -ns mycluster -getallservicestate

master11:8020 active

slave12:8020 standby

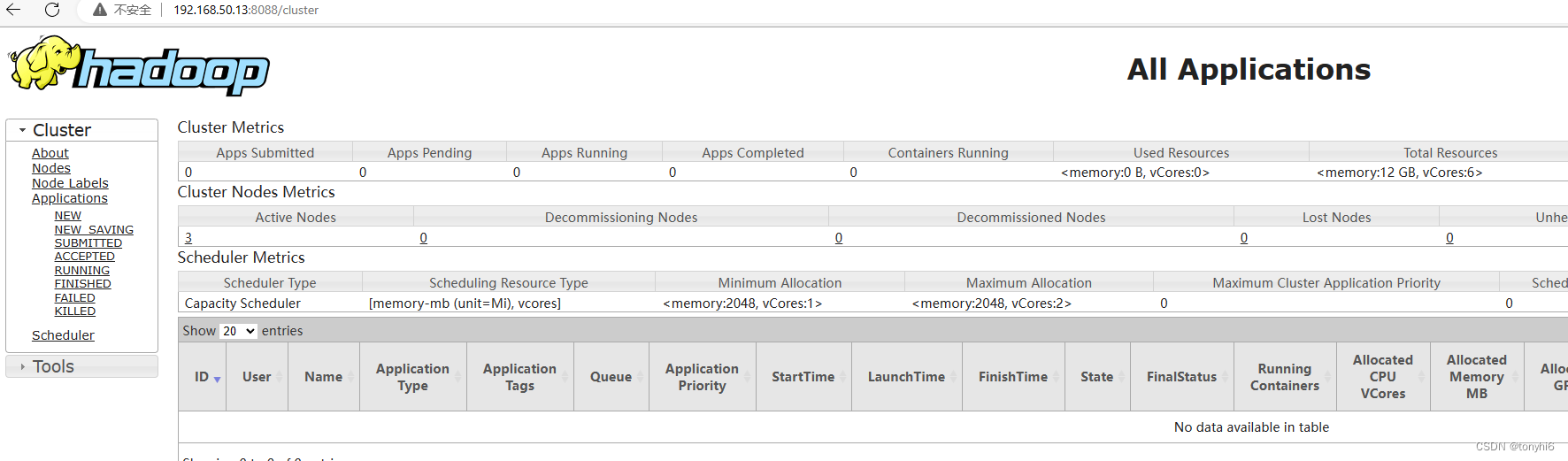

#yarn

[root@master11 ~]# yarn rmadmin -getservicestate rm1

standby

[root@master11 ~]# yarn rmadmin -getservicestate rm2

active

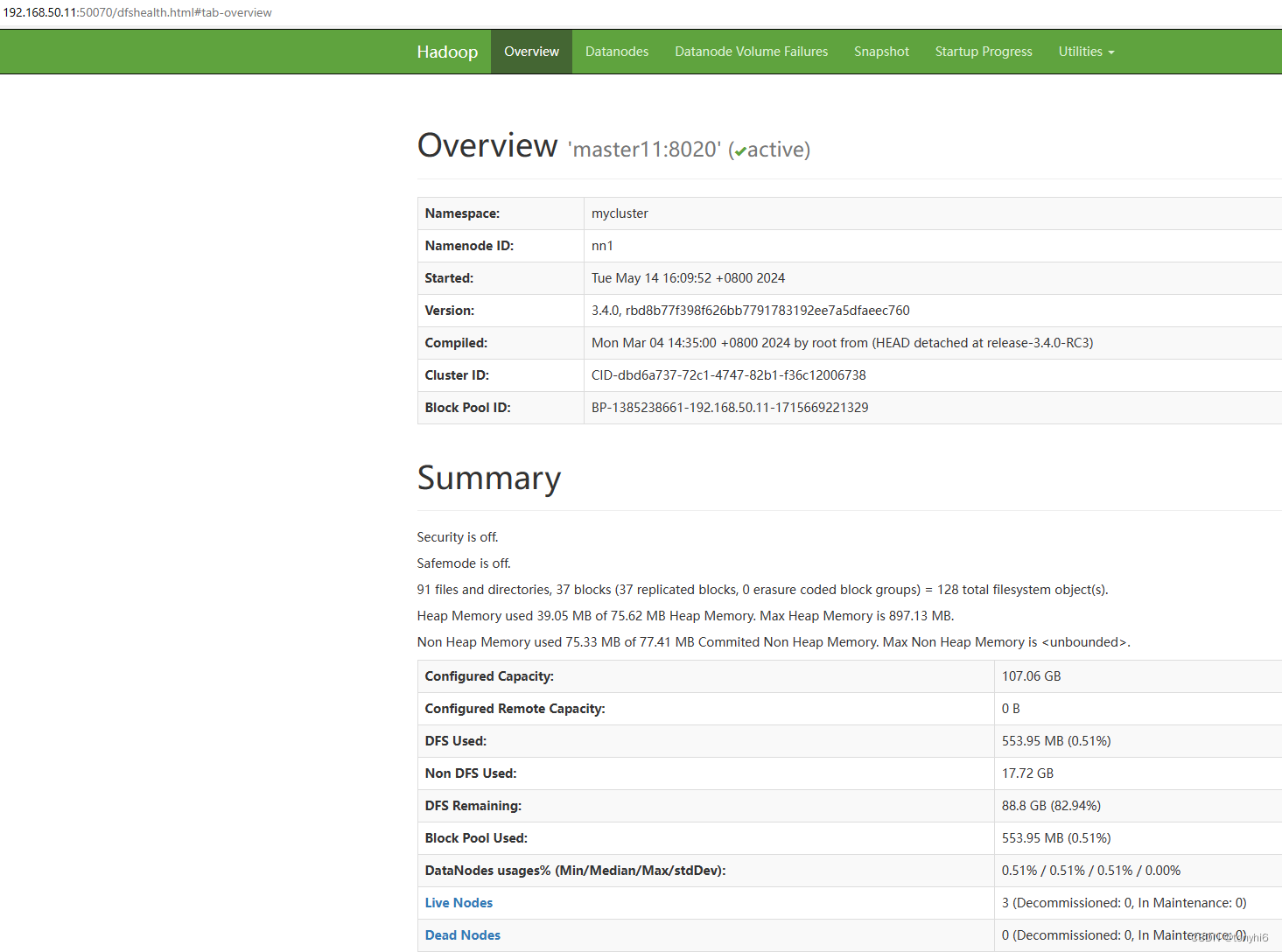

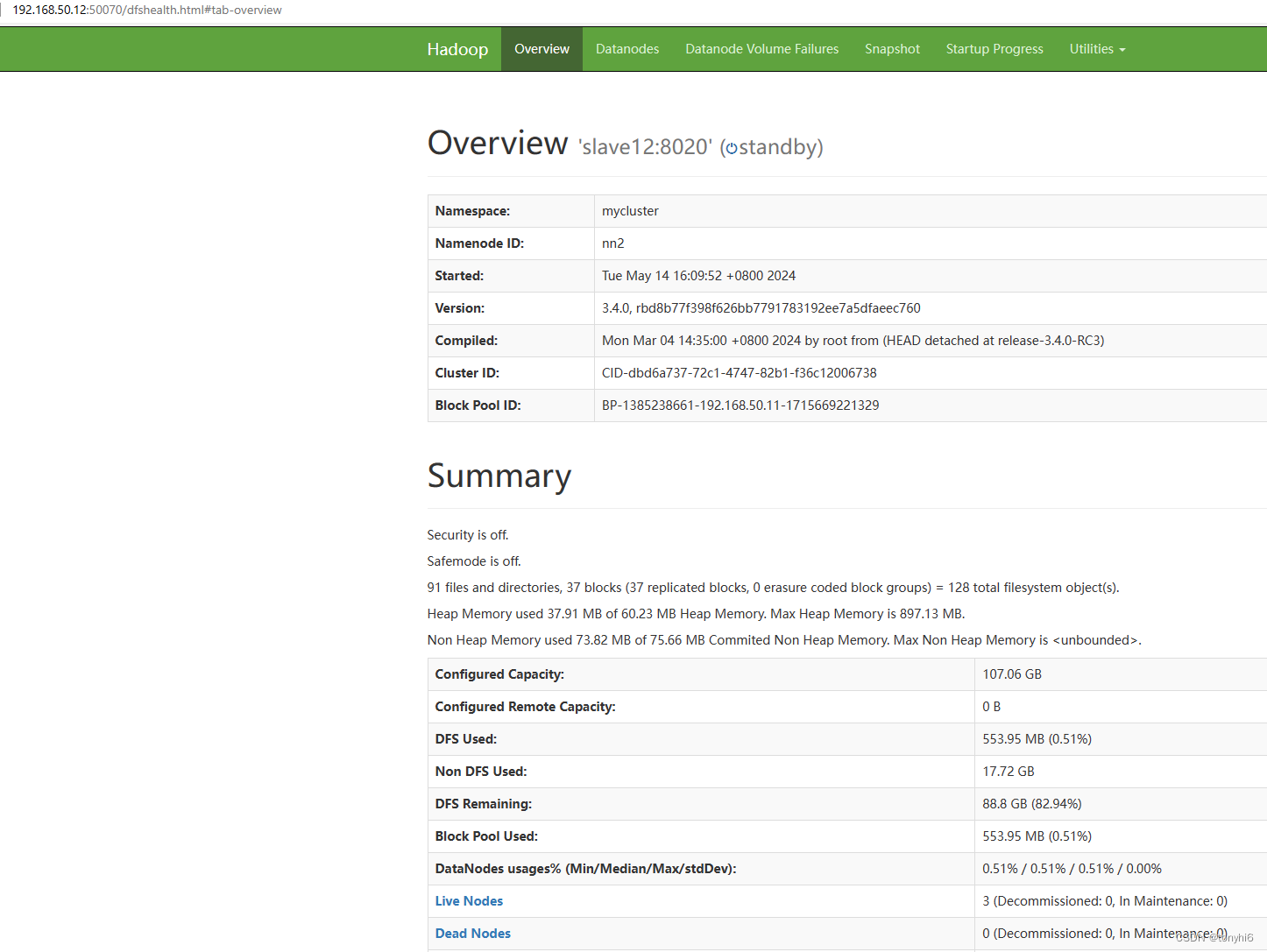

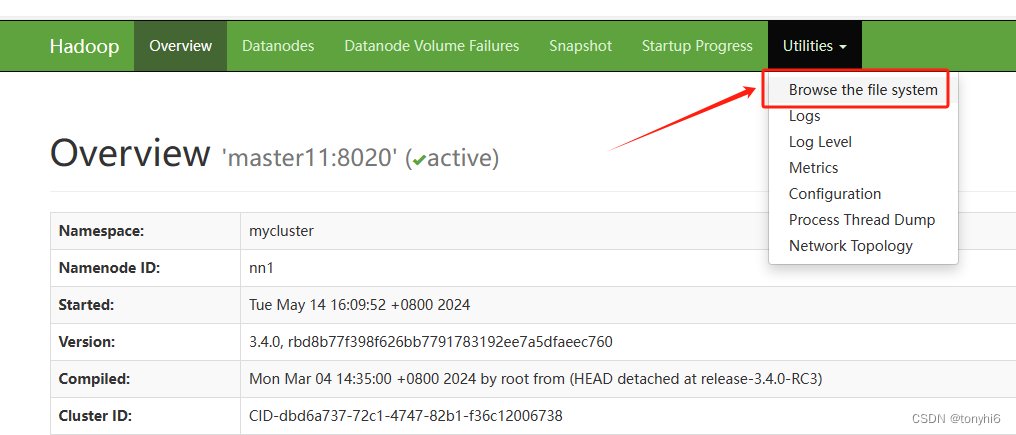

#查看hdfs web ui

#查看 yarn集群

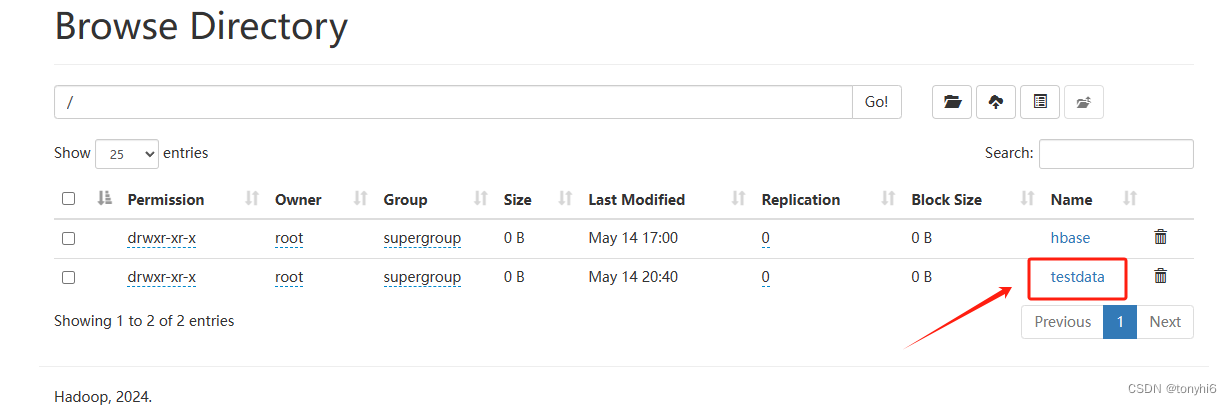

9 hadoop 测试使用

#创建目录

hdfs dfs -mkdir /testdata

#查看

[root@master11 ~]# hdfs dfs -ls /

found 2 items

drwxr-xr-x - root supergroup 0 2024-05-14 17:00 /hbase

drwxr-xr-x - root supergroup 0 2024-05-14 20:32 /testdata

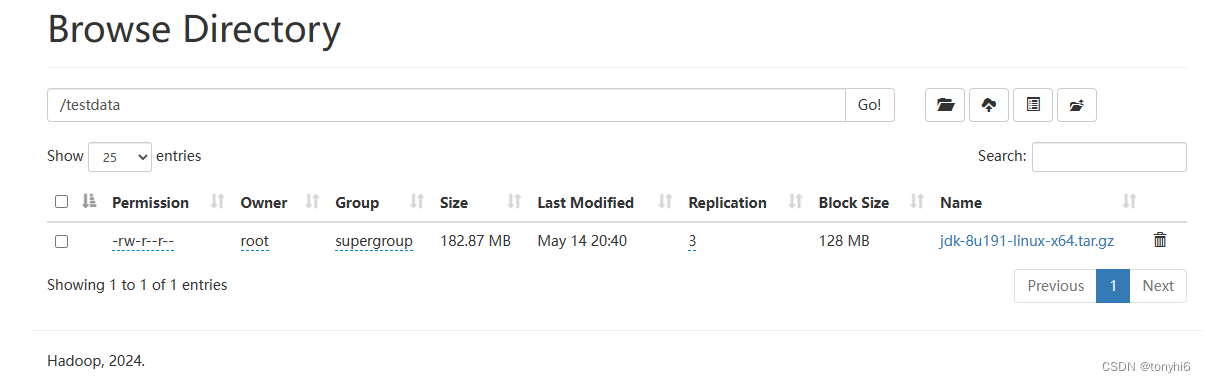

#上传文件

hdfs dfs -put jdk-8u191-linux-x64.tar.gz /testdata

#查看文件

[root@master11 soft]# hdfs dfs -ls /testdata/

found 1 items

-rw-r--r-- 3 root supergroup 191753373 2024-05-14 20:40 /testdata/jdk-8u191-linux-x64.tar.gz

10 启动hbase,hadoop的active节点

[root@master11 ~]# hdfs haadmin -getservicestate nn1

active

#启动

start-hbase.sh

#查看

[root@master11 ~]# jps

16401 nodemanager

15491 namenode

21543 hmaster

15848 journalnode

1435 quorumpeermain

16029 dfszkfailovercontroller

21902 jps

15631 datanode 11 安装hive

11 安装hive

#解压和配置环境变量

tar zxvf apache-hive-4.0.0-bin.tar.gz

mv apache-hive-4.0.0-bin/ /data/hive

#环境变量

vi /etc/profile

export hive_home=/data/hive

export path=$path:$hive_home/bin

source /etc/profile# 安装mysql ,可参考

#mysql驱动

mv mysql-connector-java-8.0.29.jar /data/hive/lib/schematool -dbtype mysql -initschema

#报错

slf4j: class path contains multiple slf4j bindings.

slf4j: found binding in [jar:file:/data/hive/lib/log4j-slf4j-impl-2.18.0.jar!/org/slf4j/impl/staticloggerbinder.class]

slf4j: found binding in [jar:file:/data/hadoop/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/staticloggerbinder.class]

slf4j: see http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

slf4j: actual binding is of type [org.apache.logging.slf4j.log4jloggerfactory]

exception in thread "main" [com.ctc.wstx.exc.wstxlazyexception] com.ctc.wstx.exc.wstxunexpectedcharexception: unexpected character '=' (code 61); expected a semi-colon after the reference for entity 'characterencoding'

at [row,col,system-id]: [5,86,"file:/data/hive/conf/hive-site.xml"]

at com.ctc.wstx.exc.wstxlazyexception.throwlazily(wstxlazyexception.java:40)

at com.ctc.wstx.sr.streamscanner.throwlazyerror(streamscanner.java:737)

at com.ctc.wstx.sr.basicstreamreader.safefinishtoken(basicstreamreader.java:3745)

at com.ctc.wstx.sr.basicstreamreader.gettextcharacters(basicstreamreader.java:914)

at org.apache.hadoop.conf.configuration$parser.parsenext(configuration.java:3434)

at org.apache.hadoop.conf.configuration$parser.parse(configuration.java:3213)

at org.apache.hadoop.conf.configuration.loadresource(configuration.java:3106)

at org.apache.hadoop.conf.configuration.loadresources(configuration.java:3072)

at org.apache.hadoop.conf.configuration.loadprops(configuration.java:2945)

at org.apache.hadoop.conf.configuration.getprops(configuration.java:2927)

at org.apache.hadoop.conf.configuration.set(configuration.java:1431)

at org.apache.hadoop.conf.configuration.set(configuration.java:1403)

at org.apache.hadoop.hive.metastore.conf.metastoreconf.newmetastoreconf(metastoreconf.java:2120)

at org.apache.hadoop.hive.metastore.conf.metastoreconf.newmetastoreconf(metastoreconf.java:2072)

at org.apache.hive.beeline.schematool.hiveschematool.main(hiveschematool.java:144)

at sun.reflect.nativemethodaccessorimpl.invoke0(native method)

at sun.reflect.nativemethodaccessorimpl.invoke(nativemethodaccessorimpl.java:62)

at sun.reflect.delegatingmethodaccessorimpl.invoke(delegatingmethodaccessorimpl.java:43)

at java.lang.reflect.method.invoke(method.java:498)

at org.apache.hadoop.util.runjar.run(runjar.java:330)

at org.apache.hadoop.util.runjar.main(runjar.java:245)

caused by: com.ctc.wstx.exc.wstxunexpectedcharexception: unexpected character '=' (code 61); expected a semi-colon after the reference for entity 'characterencoding'

at [row,col,system-id]: [5,86,"file:/data/hive/conf/hive-site.xml"]

at com.ctc.wstx.sr.streamscanner.throwunexpectedchar(streamscanner.java:666)

at com.ctc.wstx.sr.streamscanner.parseentityname(streamscanner.java:2080)

at com.ctc.wstx.sr.streamscanner.fullyresolveentity(streamscanner.java:1538)

at com.ctc.wstx.sr.basicstreamreader.readtextsecondary(basicstreamreader.java:4765)

at com.ctc.wstx.sr.basicstreamreader.finishtoken(basicstreamreader.java:3789)

at com.ctc.wstx.sr.basicstreamreader.safefinishtoken(basicstreamreader.java:3743)

... 18 more

#解决 vi /data/hive/conf/hive-site.xml

&字符 需要转义 改成 &

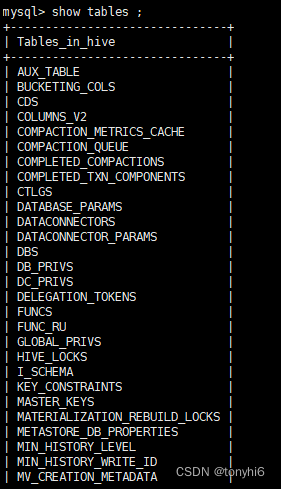

#成功提示 initialization script completed

数据库如下图

#启动,hive 在master11,mysql 安装在slave12

cd /data/hive/

nohup hive --service metastore & (启动hive元数据服务)

nohup ./bin/hiveserver2 & (启动jdbc连接服务)

#直接hive,提示“no current connection”

hive

[root@master11 hive]# hive

slf4j: class path contains multiple slf4j bindings.

slf4j: found binding in [jar:file:/data/hive/lib/log4j-slf4j-impl-2.18.0.jar!/org/slf4j/impl/staticloggerbinder.class]

slf4j: found binding in [jar:file:/data/hadoop/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/staticloggerbinder.class]

slf4j: see http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

slf4j: actual binding is of type [org.apache.logging.slf4j.log4jloggerfactory]

slf4j: class path contains multiple slf4j bindings.

slf4j: found binding in [jar:file:/data/hive/lib/log4j-slf4j-impl-2.18.0.jar!/org/slf4j/impl/staticloggerbinder.class]

slf4j: found binding in [jar:file:/data/hadoop/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/staticloggerbinder.class]

slf4j: see http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

slf4j: actual binding is of type [org.apache.logging.slf4j.log4jloggerfactory]

beeline version 4.0.0 by apache hive

beeline> show databases;

no current connection

beeline>

#在提示符 输入!connect jdbc:hive2://master11:10000,之后输入mysql用户和密码

beeline> !connect jdbc:hive2://master11:10000

connecting to jdbc:hive2://master11:10000

enter username for jdbc:hive2://master11:10000: root

enter password for jdbc:hive2://master11:10000: *********

connected to: apache hive (version 4.0.0)

driver: hive jdbc (version 4.0.0)

transaction isolation: transaction_repeatable_read

0: jdbc:hive2://master11:10000> show databases;

info : compiling command(queryid=root_20240514222349_ac19af6a-3c43-49fd-bcd0-25fc0e5b76c6): show databases

info : semantic analysis completed (retrial = false)

info : created hive schema: schema(fieldschemas:[fieldschema(name:database_name, type:string, comment:from deserializer)], properties:null)

info : completed compiling command(queryid=root_20240514222349_ac19af6a-3c43-49fd-bcd0-25fc0e5b76c6); time taken: 0.021 seconds

info : concurrency mode is disabled, not creating a lock manager

info : executing command(queryid=root_20240514222349_ac19af6a-3c43-49fd-bcd0-25fc0e5b76c6): show databases

info : starting task [stage-0:ddl] in serial mode

info : completed executing command(queryid=root_20240514222349_ac19af6a-3c43-49fd-bcd0-25fc0e5b76c6); time taken: 0.017 seconds

+----------------+

| database_name |

+----------------+

| default |

+----------------+

1 row selected (0.124 seconds)

0: jdbc:hive2://master11:10000>hadoop3.4.0+hbase2.5.8+zookeeper3.8.4+hive4.0+sqoop 分布式高可用集群部署安装,已完成。欢迎大家一起交流哦。下一篇,项目实战。

发表评论