Using RBD as storage in k8s

To use RBD as the backend storage, ceph-common must be installed first (on the Kubernetes nodes that will map the RBD images).

1. Create the RBD image on the Ceph cluster

First create a pool on the Ceph cluster, then create an image in it.

```shell
[root@ceph01 ~]# ceph osd pool create pool01
[root@ceph01 ~]# ceph osd pool application enable pool01 rbd
[root@ceph01 ~]# rbd pool init pool01
[root@ceph01 ~]# rbd create pool01/test --size 10G --image-format 2 --image-feature layering
[root@ceph01 ~]# rbd info pool01/test
```
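The image above is created with only the `layering` feature. A likely reason (an assumption, since the text does not say) is that older kernel RBD clients on the nodes cannot map images with features such as exclusive-lock or object-map enabled. The sketch below is a hypothetical helper, `unsupported_features`, that reads `rbd info` output and prints every enabled feature other than the allowed one (`layering` by default).

```shell
#!/bin/sh
# Hypothetical helper: print each enabled RBD image feature that differs from
# the allowed feature. Reads `rbd info` output on stdin.
# Assumption: the node kernels can only map images with "layering"; pass a
# different feature name as $1 to change that.
unsupported_features() {
    awk -v allowed="${1:-layering}" '
        $1 == "features:" {
            # Drop the "features:" label and keep the comma-separated list.
            sub(/^[ \t]*features:[ \t]*/, "")
            n = split($0, feats, /,[ \t]*/)
            for (i = 1; i <= n; i++)
                if (feats[i] != "" && feats[i] != allowed)
                    print feats[i]
            exit
        }'
}

# Usage on the Ceph node (prints nothing when only layering is enabled):
#   rbd info pool01/test | unsupported_features
```

The `op_features:` line is skipped because its first field is `op_features:`, not `features:`.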

2. Write the k8s YAML file

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: rbd
  name: rbd
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rbd
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: rbd
    spec:
      volumes:
      - name: test
        rbd:
          fsType: xfs
          keyring: /root/admin.keyring
          monitors:
          - 192.168.200.230:6789
          pool: pool01
          image: test
          user: admin
          readOnly: false
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: test
        name: nginx
        resources: {}
status: {}
```

```shell
[root@master ~]# kubectl get pods
NAME                  READY   STATUS    RESTARTS   AGE
rbd-888b8b747-n56wr   1/1     Running   0          26m
```

At this point, k8s is using RBD as its storage.

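If the pod stays in ContainerCreating, either ceph-common is not installed or the keyring or ceph.conf has not been copied to the worker nodes. Run kubectl describe pod on the pod to see the exact error.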
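To narrow down the causes listed above, a small pre-flight check can be run on each worker node. This is a hypothetical helper (`check_rbd_node_prereqs`); its default paths are assumptions taken from this article: the Deployment points at /root/admin.keyring, and ceph.conf is assumed to live in /etc/ceph.

```shell
#!/bin/sh
# Hypothetical pre-flight check for a worker node that must map RBD images.
# Prints one "missing: ..." line per problem and returns 1 if anything is missing.
check_rbd_node_prereqs() {
    conf=${1:-/etc/ceph/ceph.conf}
    keyring=${2:-/root/admin.keyring}
    status=0
    # ceph-common provides the rbd command used to map the image on the node.
    if ! command -v rbd >/dev/null 2>&1; then
        echo "missing: rbd command (install ceph-common)"
        status=1
    fi
    for f in "$conf" "$keyring"; do
        if [ ! -r "$f" ]; then
            echo "missing: $f"
            status=1
        fi
    done
    return "$status"
}

# Usage on each worker node:
#   check_rbd_node_prereqs && echo "node looks ready"
```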

2.1 Check the mount inside the container

```shell
[root@master euler]# kubectl exec -it rbd-5db4759c-nj2b4 -- bash
root@rbd-5db4759c-nj2b4:/# df -hT | grep /dev/rbd0
/dev/rbd0      xfs    10G  105M  9.9G   2% /usr/share/nginx/html
```

As shown, /dev/rbd0 has been formatted as XFS and mounted at /usr/share/nginx/html.

2.2 Modify the content inside the container

```shell
root@rbd-5db4759c-nj2b4:/usr/share/nginx# cd html/
root@rbd-5db4759c-nj2b4:/usr/share/nginx/html# ls
root@rbd-5db4759c-nj2b4:/usr/share/nginx/html# echo 123 > index.html
root@rbd-5db4759c-nj2b4:/usr/share/nginx/html# chmod 644 index.html
root@rbd-5db4759c-nj2b4:/usr/share/nginx/html# exit
[root@master euler]# kubectl get pods -o wide
NAME                 READY   STATUS    RESTARTS   AGE    IP                NODE    NOMINATED NODE   READINESS GATES
rbd-5db4759c-nj2b4   1/1     Running   0          8m5s   192.168.166.131   node1   <none>           <none>
```

Access the container to check the content:

```shell
[root@master euler]# curl 192.168.166.131
123
```

The content is served correctly. Now delete the pod, let the Deployment start a new one, and check whether the file still exists.

```shell
[root@master euler]# kubectl delete pods rbd-5db4759c-nj2b4
pod "rbd-5db4759c-nj2b4" deleted
[root@master euler]# kubectl get pods
NAME                 READY   STATUS              RESTARTS   AGE
rbd-5db4759c-v9cgm   0/1     ContainerCreating   0          2s
[root@master euler]# kubectl get pods -owide
NAME                 READY   STATUS    RESTARTS   AGE   IP                NODE    NOMINATED NODE   READINESS GATES
rbd-5db4759c-v9cgm   1/1     Running   0          40s   192.168.166.132   node1   <none>           <none>
[root@master euler]# curl 192.168.166.132
123
```

The file survived the pod being recreated, so k8s is using the RBD storage correctly.

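There is one problem, though: developers usually don't know how to write the volume section of the YAML, and every storage type uses a different syntax. Is there a way to hide those details and simply tell the cluster what kind of storage I want?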

There is: the PV (together with the PVC that claims it).

3. Using RBD through a PV

```shell
[root@master euler]# vim pvc.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: myclaim
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block
  resources:
    requests:
      storage: 8Gi
```

This PVC requests an 8 GiB raw block device. At this point there is no PV that can satisfy it.

I won't go into the details here; they are covered in the CKA material.

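Note that this is a PVC, not a PV. In a PVC, developers declare the type and size of storage they want; the administrator can then create a PV that matches the PVC, or create PVs in advance that the claim simply binds to.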

```shell
[root@master euler]# vim pv.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: rbdpv
spec:
  capacity:
    storage: 8Gi
  volumeMode: Block
  accessModes:
    - ReadWriteOnce
  # Recycle is only supported for NFS and hostPath volumes, so use Retain for RBD
  persistentVolumeReclaimPolicy: Retain
  rbd:
    fsType: xfs
    image: test
    keyring: /etc/ceph/ceph.client.admin.keyring
    monitors:
      - 172.16.1.33
    pool: rbd
    readOnly: false
    user: admin
```

3.1 Check the PVC status

```shell
[root@master euler]# kubectl get pvc
NAME      STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
myclaim   Bound    rbdpv    8Gi        RWO                           11s
```

The PV and the PVC are now bound to each other. Binding is one-to-one: a PV can be bound to only one PVC, and a PVC to only one PV.
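Why did myclaim bind to rbdpv? Roughly, a pre-created PV is a candidate for a PVC when its capacity is at least the requested size and its volumeMode, access modes and storage class match. The shell sketch below models only a simplified version of that rule (a single access mode, no storage class, no label selector); it illustrates the idea and is not the PV controller's actual code. `to_bytes` and `pv_matches` are hypothetical names.

```shell
#!/bin/sh
# Convert a Kubernetes binary quantity such as 8Gi into bytes
# (only the Ki/Mi/Gi/Ti suffixes are handled in this sketch).
to_bytes() {
    case $1 in
        *Ki) echo $(( ${1%Ki} * 1024 )) ;;
        *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
        *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
        *Ti) echo $(( ${1%Ti} * 1024 * 1024 * 1024 * 1024 )) ;;
        *)   echo "$1" ;;
    esac
}

# pv_matches PV_SIZE PV_MODE PV_ACCESS PVC_SIZE PVC_MODE PVC_ACCESS
# Simplified model: the PV must be at least as large as the request and
# use the same volumeMode and access mode.
pv_matches() {
    [ "$(to_bytes "$1")" -ge "$(to_bytes "$4")" ] &&
        [ "$2" = "$5" ] &&
        [ "$3" = "$6" ]
}

# rbdpv (8Gi, Block, RWO) satisfies myclaim (8Gi, Block, RWO):
#   pv_matches 8Gi Block ReadWriteOnce 8Gi Block ReadWriteOnce && echo bound
```

A Filesystem-mode PV of the same size would not match this Block-mode claim, which is why volumeMode has to agree on both sides.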

3.2 Use the PVC

```shell
[root@master euler]# vim pod-pvc.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pvc-pod
  name: pvc-pod
spec:
  volumes:
  - name: rbd
    persistentVolumeClaim:
      claimName: myclaim
      readOnly: false
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pvc-pod
    volumeDevices:   # the PVC is a raw block device, so volumeDevices is used instead of volumeMounts
    - devicePath: /dev/rbd0
      name: rbd
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}
```

```shell
[root@master euler]# kubectl get pods
NAME                 READY   STATUS    RESTARTS   AGE
pvc-pod              1/1     Running   0          2m5s
rbd-5db4759c-v9cgm   1/1     Running   0          39m
```

3.3 Check the block device inside the container

```shell
root@pvc-pod:/# ls /dev/rbd0
/dev/rbd0
```

As shown, rbd0 now exists inside the container.

With this approach, every PVC needs a matching PV created by hand. Dynamic provisioning removes that step.

4. Dynamic provisioning

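Dynamic provisioning uses a StorageClass. The Kubernetes version shipped with openEuler is rather old, however, so we need to download a ceph-csi fork adapted for openEuler.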

```shell
[root@master ~]# git clone https://gitee.com/yftyxa/ceph-csi.git
[root@master ~]# cd ceph-csi/deploy/
[root@master deploy]# ls
ceph-conf.yaml  csi-config-map-sample.yaml  rbd
cephcsi         Makefile                    scc.yaml
cephfs          nfs                         service-monitor.yaml
```

4.1 Dynamic provisioning for RBD

The files we need to modify are in /root/ceph-csi/deploy/rbd/kubernetes/, including csi-config-map.yaml.

```shell
# First create a csi namespace
[root@master ~]# kubectl create ns csi
```

Modify the file contents:

```shell
[root@master kubernetes]# vim csi-rbdplugin-provisioner.yaml
# change line 63 to false:
- "--extra-create-metadata=false"
```
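If you prefer not to edit line 63 by hand (line numbers may differ between versions of the fork), a sed substitution can flip the flag. This sketch assumes the file currently contains `--extra-create-metadata=true` and that GNU sed is available for `sed -i`; `disable_extra_metadata` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: switch the provisioner flag from true to false in place
# (GNU sed syntax for -i), then show the resulting line(s) with line numbers.
disable_extra_metadata() {
    file=${1:-csi-rbdplugin-provisioner.yaml}
    sed -i 's/--extra-create-metadata=true/--extra-create-metadata=false/' "$file"
    grep -n -- '--extra-create-metadata' "$file"
}

# Usage, from /root/ceph-csi/deploy/rbd/kubernetes:
#   disable_extra_metadata csi-rbdplugin-provisioner.yaml
```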

Modify the second file:

```shell
[root@master kubernetes]# vim csi-config-map.yaml
```

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: "ceph-csi-config"
data:
  config.json: |-
    [
      {
        "clusterID": "c1f213ae-2de3-11ef-ae15-00163e179ce3",
        "monitors": ["172.16.1.33","172.16.1.32","172.16.1.31"]
      }
    ]
```

- The clusterID can be found with ceph -s (the id field in the cluster section).
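The clusterID lookup and the config.json payload can be scripted. The sketch below uses two hypothetical helpers: `cluster_id` pulls the id from `ceph -s` output (on a Ceph node, `ceph fsid` prints the same value directly), and `csi_config_json` renders the JSON array used in csi-config-map.yaml. The monitor addresses in the usage line are the ones from this article.

```shell
#!/bin/sh
# Print the cluster id from `ceph -s` output read on stdin.
cluster_id() {
    awk '$1 == "id:" { print $2; exit }'
}

# Render the config.json payload for csi-config-map.yaml.
# Usage: csi_config_json CLUSTER_ID MON1 [MON2 ...]
csi_config_json() {
    id=$1
    shift
    mons=""
    for m in "$@"; do
        # Append "m" to the list, adding a comma only after the first entry.
        mons="${mons:+$mons,}\"$m\""
    done
    printf '[\n  {\n    "clusterID": "%s",\n    "monitors": [%s]\n  }\n]\n' "$id" "$mons"
}

# Usage on the Ceph node:
#   csi_config_json "$(ceph -s | cluster_id)" 172.16.1.33 172.16.1.32 172.16.1.31
```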

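Modify the third file and comment out the seLinuxMount line (most likely because older Kubernetes versions do not recognize that field):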

```shell
[root@master kubernetes]# vim csidriver.yaml
```

```yaml
---
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: "rbd.csi.ceph.com"
spec:
  attachRequired: true
  podInfoOnMount: false
  # seLinuxMount: true   # comment out this line
  fsGroupPolicy: File
```

Write the following file yourself:

```shell
[root@master kubernetes]# vim csi-kms-config-map.yaml
```

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ceph-csi-encryption-kms-config
data:
  config.json: |-
    {}
```

4.2 Get the admin key

```shell
[root@ceph001 ~]# cat /etc/ceph/ceph.client.admin.keyring
[client.admin]
    key = aqc4qnjmng4hihaa42s27yoflqobntewdgemkg==
    caps mds = "allow *"
    caps mgr = "allow *"
    caps mon = "allow *"
    caps osd = "allow *"
```

- Only this part is needed: aqc4qnjmng4hihaa42s27yoflqobntewdgemkg==
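Rather than copying the key by eye, it can be extracted from the keyring file. The helper below, `keyring_key`, is a hypothetical name; it prints the value of the `key = ...` line. On a Ceph node, `ceph auth get-key client.admin` prints the same key directly.

```shell
#!/bin/sh
# Hypothetical helper: print only the secret key from a Ceph keyring file,
# i.e. the third field of the "key = ..." line.
keyring_key() {
    awk '$1 == "key" && $2 == "=" { print $3; exit }' \
        "${1:-/etc/ceph/ceph.client.admin.keyring}"
}

# Usage:
#   KEY=$(keyring_key /etc/ceph/ceph.client.admin.keyring)
```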

Then write a csi-secret.yaml file yourself, apply it, and create the ceph-config ConfigMap from ceph-conf.yaml:

```shell
[root@master kubernetes]# vim csi-secret.yaml
```

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: csi-secret
stringData:
  userID: admin
  userKey: aqc4qnjmng4hihaa42s27yoflqobntewdgemkg==
  adminID: admin
  adminKey: aqc4qnjmng4hihaa42s27yoflqobntewdgemkg==
```

```shell
[root@master kubernetes]# kubectl apply -f csi-secret.yaml -n csi
secret/csi-secret created
[root@master kubernetes]# cd ../../
[root@master deploy]# kubectl apply -f ceph-conf.yaml -n csi
configmap/ceph-config created
[root@master deploy]# cd -
/root/ceph-csi/deploy/rbd/kubernetes
```
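The secret file can also be generated from a user name and key instead of being typed in vim. `write_csi_secret` is a hypothetical helper; it mirrors the file above, using the same user for the user* and admin* fields and stringData so the key does not have to be base64-encoded by hand.

```shell
#!/bin/sh
# Hypothetical helper: write csi-secret.yaml for a given Ceph user and key.
# Usage: write_csi_secret USER KEY [OUTPUT_FILE]
write_csi_secret() {
    user=$1
    key=$2
    out=${3:-csi-secret.yaml}
    cat > "$out" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: csi-secret
stringData:
  userID: $user
  userKey: $key
  adminID: $user
  adminKey: $key
EOF
}

# Usage, where KEY holds the admin key:
#   write_csi_secret admin "$KEY" csi-secret.yaml
#   kubectl apply -f csi-secret.yaml -n csi
```

An equivalent without a file is kubectl create secret generic csi-secret -n csi --from-literal=userID=admin --from-literal=userKey="$KEY" (plus the admin fields if your deployment needs them).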

4.3 Replace the namespace in all manifests

```shell
[root@master kubernetes]# sed -i "s/namespace: default/namespace: csi/g" *.yaml
```
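Before deploying, it is worth confirming that the replacement reached every file. The hypothetical helper `leftover_default_ns` lists the YAML files in a directory that still contain `namespace: default`; it prints nothing once the sed command above has covered everything.

```shell
#!/bin/sh
# Hypothetical check: list YAML files in DIR that still contain
# "namespace: default". `|| true` keeps the exit status 0 when grep finds nothing.
leftover_default_ns() {
    grep -l "namespace: default" "${1:-.}"/*.yaml || true
}

# Usage, from /root/ceph-csi/deploy/rbd/kubernetes:
#   leftover_default_ns .
```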

4.4 Deploy

```shell
[root@master kubernetes]# kubectl apply -f . -n csi
```

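Note: if you have fewer than 3 worker nodes, lower the replicas value in csi-rbdplugin-provisioner.yaml. The upstream manifest uses pod anti-affinity to place each provisioner replica on a different node, so surplus replicas would stay Pending.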
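The replica count can be changed with sed before applying the manifest. This sketch assumes the file contains a single `replicas: N` line (check with grep first) and GNU sed; `set_provisioner_replicas` is a hypothetical helper. If the Deployment is already running, kubectl scale deployment csi-rbdplugin-provisioner -n csi --replicas=2 achieves the same without editing the file.

```shell
#!/bin/sh
# Hypothetical helper: set the replica count in the provisioner manifest,
# e.g. to the number of worker nodes, then show the changed line.
set_provisioner_replicas() {
    replicas=$1
    file=${2:-csi-rbdplugin-provisioner.yaml}
    sed -i "s/^\([[:space:]]*replicas:\)[[:space:]]*[0-9][0-9]*/\1 $replicas/" "$file"
    grep -n 'replicas:' "$file"
}

# Usage (two worker nodes, matching the two provisioner pods listed below):
#   set_provisioner_replicas 2
```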

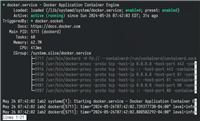

```shell
[root@master kubernetes]# kubectl get pods -n csi
NAME                                         READY   STATUS    RESTARTS        AGE
csi-rbdplugin-cv455                          3/3     Running   1 (2m14s ago)   2m46s
csi-rbdplugin-pf5ld                          3/3     Running   0               4m36s
csi-rbdplugin-provisioner-6846c4df5f-dvqqk   7/7     Running   0               4m36s
csi-rbdplugin-provisioner-6846c4df5f-nmcxf   7/7     Running   1 (2m11s ago)   4m36s
```

5. Using dynamic provisioning

5.1 Create the StorageClass

In /root/ceph-csi/examples/rbd, print the example storageclass.yaml without comments and blank lines:

```shell
[root@master rbd]# grep -Ev "\s*#|^$" storageclass.yaml
```

```yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: <cluster-id>
  pool: <rbd-pool-name>
  imageFeatures: "layering"
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - discard
```

Copy this content out and change it to the following:

```yaml
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: c1f213ae-2de3-11ef-ae15-00163e179ce3
  pool: rbd
  imageFeatures: "layering"
  csi.storage.k8s.io/provisioner-secret-name: csi-secret
  csi.storage.k8s.io/provisioner-secret-namespace: csi
  csi.storage.k8s.io/controller-expand-secret-name: csi-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: csi
  csi.storage.k8s.io/node-stage-secret-name: csi-secret
  csi.storage.k8s.io/node-stage-secret-namespace: csi
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Retain
allowVolumeExpansion: true
mountOptions:
  - discard
```

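Replace clusterID with your own cluster's ID, set the pool, and check your secret name (for example with kubectl get secret -n csi). Note that reclaimPolicy has also been changed from Delete to Retain.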
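The edits between the example and the customized StorageClass are simple substitutions, so they can be scripted. `customize_sc` is a hypothetical helper that applies them with sed; the values must not contain "/", since they are inserted into sed expressions, and csi-rbd-sc.yaml in the usage line is an arbitrary output file name.

```shell
#!/bin/sh
# Hypothetical helper: turn the example StorageClass (on stdin) into the
# customized one. Arguments: CLUSTER_ID POOL SECRET_NAME SECRET_NAMESPACE
customize_sc() {
    sed -e "s/<cluster-id>/$1/" \
        -e "s/<rbd-pool-name>/$2/" \
        -e "s/secret-name: csi-rbd-secret/secret-name: $3/" \
        -e "s/secret-namespace: default/secret-namespace: $4/" \
        -e "s/^reclaimPolicy: Delete/reclaimPolicy: Retain/"
}

# Usage, from /root/ceph-csi/examples/rbd:
#   grep -Ev "\s*#|^$" storageclass.yaml \
#     | customize_sc c1f213ae-2de3-11ef-ae15-00163e179ce3 rbd csi-secret csi > csi-rbd-sc.yaml
#   kubectl apply -f csi-rbd-sc.yaml
```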

5.2 Create the PVC

```shell
[root@master euler]# cp pvc.yaml sc-pvc.yaml
[root@master euler]# vim sc-pvc.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: sc-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block
  storageClassName: "csi-rbd-sc"
  resources:
    requests:
      storage: 15Gi
```

- The storageClassName value can be looked up with kubectl get sc.

Now we only need to create the PVC; the PV is created automatically.

```shell
[root@master euler]# kubectl apply -f sc-pvc.yaml
persistentvolumeclaim/sc-pvc created
[root@master euler]# kubectl get pvc
NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
myclaim   Bound    rbdpv                                      8Gi        RWO                           111m
sc-pvc    Bound    pvc-dfe3497f-9ed7-4961-9265-9e7242073c28   15Gi       RWO            csi-rbd-sc     2s
```

Back on the Ceph cluster, check the RBD images:

```shell
[root@ceph001 ~]# rbd ls
csi-vol-56e37046-b9d7-4ef1-a534-970a766744f3
test
[root@ceph001 ~]# rbd info csi-vol-56e37046-b9d7-4ef1-a534-970a766744f3
rbd image 'csi-vol-56e37046-b9d7-4ef1-a534-970a766744f3':
    size 15 GiB in 3840 objects
    order 22 (4 MiB objects)
    snapshot_count: 0
    id: 38019ee708da
    block_name_prefix: rbd_data.38019ee708da
    format: 2
    features: layering
    op_features:
    flags:
    create_timestamp: Wed Jun 19 04:55:35 2024
    access_timestamp: Wed Jun 19 04:55:35 2024
    modify_timestamp: Wed Jun 19 04:55:35 2024
```

5.3 Make the StorageClass the default

Unless the StorageClass is the default, every YAML file has to name it explicitly; making it the default removes that need.
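Besides kubectl edit, the default-class annotation can be set non-interactively with kubectl patch. `make_default_sc` is a hypothetical wrapper around that call; the patch sets exactly the annotation added by hand in the edit step.

```shell
#!/bin/sh
# Hypothetical helper: mark a StorageClass as the cluster default by patching
# the storageclass.kubernetes.io/is-default-class annotation.
make_default_sc() {
    kubectl patch storageclass "${1:-csi-rbd-sc}" \
        -p '{"metadata": {"annotations": {"storageclass.kubernetes.io/is-default-class": "true"}}}'
}

# Usage:
#   make_default_sc csi-rbd-sc
#   kubectl get sc    # the class should now be listed with "(default)"
```

If another StorageClass is already marked as default, set its annotation to "false" first so that only one class is the default.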

```shell
[root@master euler]# kubectl edit sc csi-rbd-sc
```

Under metadata, add this line to the annotations:

```yaml
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
```

5.4 Test a PVC with the default StorageClass

```shell
[root@master euler]# kubectl get sc
NAME                   PROVISIONER        RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
csi-rbd-sc (default)   rbd.csi.ceph.com   Retain          Immediate           true                   29m
```

Listing the StorageClasses again now shows (default) next to csi-rbd-sc.

```shell
[root@master euler]# cp sc-pvc.yaml sc-pvc1.yaml
[root@master euler]# cat sc-pvc1.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: sc-pvc1
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block
  resources:
    requests:
      storage: 20Gi
```

This file does not specify storageClassName.

```shell
[root@master euler]# kubectl apply -f sc-pvc1.yaml
persistentvolumeclaim/sc-pvc1 created
[root@master euler]# kubectl get pvc
NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
myclaim   Bound    rbdpv                                      8Gi        RWO                           138m
sc-pvc    Bound    pvc-dfe3497f-9ed7-4961-9265-9e7242073c28   15Gi       RWO            csi-rbd-sc     27m
sc-pvc1   Bound    pvc-167cf73b-4983-4c28-aa98-bb65bb966649   20Gi       RWO            csi-rbd-sc     6s
```

That's it: the PVC without a storageClassName was provisioned by the default class, csi-rbd-sc.

