1. Substitution with replace

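replace is the simplest form of string substitution. Whenever a string may contain sensitive words, we call the string's replace method for each word and swap it for asterisks.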

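Drawback:

This is fine when both the text and the word list are small, but it becomes inefficient as they grow. Every replace call scans the entire text, so the cost grows roughly with (number of sensitive words × text length).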

示例代码:

text = '我是一个来自星星的超人,具有超人本领!'

text = text.replace("超人", '*' * len("超人")).replace("星星", '*' * len("星星"))

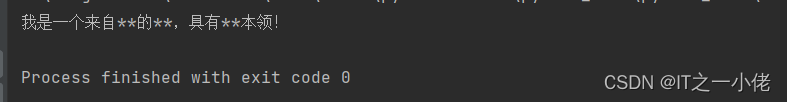

print(text) # 我是一个来自***的***,具有***本领!运行结果:

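If there are several sensitive words, put them in a list and replace them one at a time.

Sample code:

```python
text = '我是一个来自星星的超人,具有超人本领!'

words = ['超人', '星星']

# Mask each word in turn; every word costs one full pass over the text
for word in words:
    text = text.replace(word, '*' * len(word))

print(text)  # 我是一个来自**的**,具有**本领!
```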
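Chaining replace calls has a caveat: when two sensitive words overlap in the text, the result depends on the order of the list. Once one word has been masked, the other no longer appears in the text. The sketch below wraps the loop above in a helper (`mask_with_replace` is a hypothetical name, not from the original code) and runs it with both orders on a made-up string:

```python
def mask_with_replace(text, words):
    # Same loop as above: mask each word in list order
    for word in words:
        text = text.replace(word, '*' * len(word))
    return text


text = '超人本领'
print(mask_with_replace(text, ['超人', '人本领']))  # **本领
print(mask_with_replace(text, ['人本领', '超人']))  # 超***
```

Approaches that first collect match positions and only then mask them, such as the trie in section 4, mark both overlapping words regardless of list order.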

2. Regular expressions

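Regular expressions are a simple and effective way to match sensitive words quickly and filter them out. Here we mainly rely on "|", the alternation operator, which matches any one of several alternative strings.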

示例代码:

import re

def filter_words(text, words):

pattern = '|'.join(words)

return re.sub(pattern, '***', text)

if __name__ == '__main__':

text = '我是一个来自星星的超人,具有超人本领!'

words = ['超人', '星星']

res = filter_words(text, words)

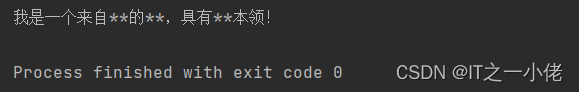

print(res) # 我是一个来自***的***,具有***本领!运行结果:
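Two details of the "|" approach are worth knowing. First, Python's re tries the alternatives from left to right and keeps the first one that matches. If a shorter word is a prefix of a longer one and comes first in the pattern, the longer word is never matched in full. Second, the fixed '***' replacement hides how long the masked word was.

The sketch below handles both. It assumes longest-first matching and per-character masking are what you want. `filter_words_longest_first` and its `mask` parameter are hypothetical names, not part of the original code.

```python
import re


def filter_words_longest_first(text, words, mask='*'):
    # Hypothetical helper: put longer words first so '|' prefers the longer match
    ordered = sorted(words, key=len, reverse=True)
    # re.escape makes regex metacharacters in the words match literally
    pattern = re.compile('|'.join(re.escape(word) for word in ordered))
    # A function replacement emits one mask character per matched character
    return pattern.sub(lambda m: mask * len(m.group()), text)


text = '具有超人本领!'
print(re.sub('超人|超人本领', '***', text))                 # 具有***本领!
print(filter_words_longest_first(text, ['超人', '超人本领']))  # 具有****!
```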

3. Using the third-party ahocorasick library

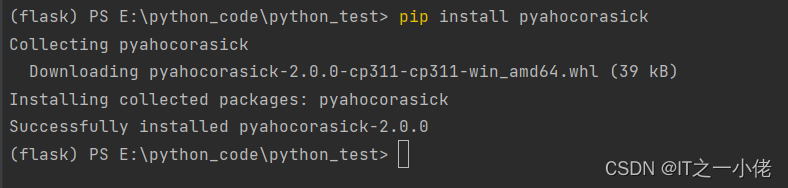

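The ahocorasick module, installed from the pyahocorasick package, implements the Aho-Corasick algorithm. It builds a trie of all sensitive words plus failure links, so every occurrence of every word is found in a single pass over the text.

Installing the library:

```shell
pip install pyahocorasick
```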

示例代码:

import ahocorasick

def filter_words(text, words):

a = ahocorasick.automaton()

for index, word in enumerate(words):

a.add_word(word, (index, word))

a.make_automaton()

result = []

for end_index, (insert_order, original_value) in a.iter(text):

start_index = end_index - len(original_value) + 1

result.append((start_index, end_index))

for start_index, end_index in result[::-1]:

text = text[:start_index] + '*' * (end_index - start_index + 1) + text[end_index + 1:]

return text

if __name__ == '__main__':

text = '我是一个来自星星的超人,具有超人本领!'

words = ['超人', '星星']

res = filter_words(text, words)

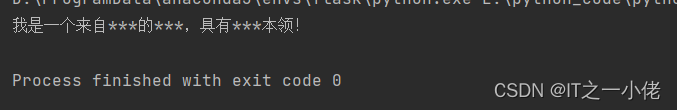

print(res) # 我是一个来自***的***,具有***本领!运行结果:

4. Trie

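A trie (prefix tree) is another efficient way to match and filter sensitive words. Words that share a prefix share the same path of nodes. From each starting position in the text, we therefore follow only one path and stop as soon as the next character has no child node.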

Sample code:

```python
class TreeNode:
    def __init__(self):
        self.children = {}   # character -> TreeNode
        self.is_end = False  # True if a sensitive word ends at this node


class Tree:
    def __init__(self):
        self.root = TreeNode()

    def insert(self, word):
        node = self.root
        for char in word:
            if char not in node.children:
                node.children[char] = TreeNode()
            node = node.children[char]
        node.is_end = True

    def search(self, word):
        # Exact lookup: True only if the whole word was inserted
        node = self.root
        for char in word:
            if char not in node.children:
                return False
            node = node.children[char]
        return node.is_end


def filter_words(text, words):
    tree = Tree()
    for word in words:
        tree.insert(word)
    result = []
    # Try every start position i and walk the trie as far as the text allows
    for i in range(len(text)):
        node = tree.root
        for j in range(i, len(text)):
            if text[j] not in node.children:
                break
            node = node.children[text[j]]
            if node.is_end:
                result.append((i, j))  # text[i:j + 1] is a sensitive word
    for start_index, end_index in result[::-1]:
        text = text[:start_index] + '*' * (end_index - start_index + 1) + text[end_index + 1:]
    return text


if __name__ == '__main__':
    text = '我是一个来自星星的超人,具有超人本领!'
    words = ['超人', '星星']
    res = filter_words(text, words)
    print(res)  # 我是一个来自**的**,具有**本领!
```
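In Python, the same trie is often written as nested dictionaries instead of node classes, which makes the shared prefixes easy to see. The sketch below assumes that representation. `build_trie`, `find_matches` and the `'#end'` marker key are hypothetical names, not taken from the code above. The marker is longer than one character, so it cannot collide with a single-character key from the text.

```python
END = '#end'  # hypothetical end-of-word marker; never equal to a single text character


def build_trie(words):
    root = {}
    for word in words:
        node = root
        for char in word:
            # Reuse the child if this prefix already exists, otherwise create it
            node = node.setdefault(char, {})
        node[END] = True
    return root


def find_matches(trie, text):
    # Same scan as filter_words above: walk the trie from every start position
    matches = []
    for i in range(len(text)):
        node = trie
        for j in range(i, len(text)):
            node = node.get(text[j])
            if node is None:
                break
            if END in node:
                matches.append((i, j))  # inclusive (start, end)
    return matches


trie = build_trie(['超人', '超能'])
print(trie)  # {'超': {'人': {'#end': True}, '能': {'#end': True}}}
print(find_matches(trie, '超人有超能力'))  # [(0, 1), (3, 4)]
```

The two words share a single '超' node, which is where a trie saves work compared with checking every word separately.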

5. DFA algorithm

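DFA stands for deterministic finite automaton, and the DFA algorithm is another efficient way to match and filter sensitive words. Its basic idea is to detect sensitive words through state transitions. The text only needs to be scanned once to check it against all sensitive words.

The implementation below is effectively an Aho-Corasick automaton. It stores the trie as a (state, character) → state transition table and adds failure links, which say which state to fall back to when the next character has no transition.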

Sample code:

```python
from collections import deque


class DFA:
    def __init__(self, words):
        self.words = words
        self.build()

    def build(self):
        self.transitions = {}  # (state, char) -> next state; state 0 is the root
        self.fails = {}        # state -> state to fall back to on a mismatch
        self.outputs = {}      # state -> list of sensitive words recognised there
        state = 0
        # Step 1: add every word to the transition table (a trie of states)
        for word in self.words:
            current_state = 0
            for char in word:
                next_state = self.transitions.get((current_state, char))
                if next_state is None:
                    state += 1
                    self.transitions[(current_state, char)] = state
                    next_state = state
                current_state = next_state
            self.outputs.setdefault(current_state, []).append(word)
        # Step 2: compute failure links breadth-first, starting from the root's children
        queue = deque()
        for (start_state, char), next_state in self.transitions.items():
            if start_state == 0:
                queue.append(next_state)
                self.fails[next_state] = 0
        while queue:
            r_state = queue.popleft()
            for (src_state, char), next_state in self.transitions.items():
                if src_state != r_state:
                    continue
                queue.append(next_state)
                fail_state = self.fails[src_state]
                while (fail_state, char) not in self.transitions and fail_state != 0:
                    fail_state = self.fails[fail_state]
                self.fails[next_state] = self.transitions.get((fail_state, char), 0)
                # A state also recognises every word recognised by its failure state
                inherited = self.outputs.get(self.fails[next_state], [])
                if inherited:
                    self.outputs.setdefault(next_state, []).extend(inherited)

    def search(self, text):
        state = 0
        result = []
        for i, char in enumerate(text):
            # Follow failure links until a transition on char exists (or we are at the root)
            while (state, char) not in self.transitions and state != 0:
                state = self.fails[state]
            state = self.transitions.get((state, char), 0)
            # Report every word ending at position i as an inclusive (start, end) pair
            for word in self.outputs.get(state, []):
                result.append((i - len(word) + 1, i))
        return result


def filter_words(text, words):
    dfa = DFA(words)
    # Masking keeps the text length unchanged, so the indices stay valid in any order
    for start_index, end_index in dfa.search(text):
        text = text[:start_index] + '*' * (end_index - start_index + 1) + text[end_index + 1:]
    return text


if __name__ == '__main__':
    text = '我是一个来自星星的超人,具有超人本领!'
    words = ['超人', '星星']
    res = filter_words(text, words)
    print(res)  # 我是一个来自**的**,具有**本领!
```
