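Hadoop 3 is an open-source distributed computing platform for storing and processing large data sets. Its core is HDFS (the Hadoop Distributed File System) and MapReduce, with YARN handling resource scheduling; together they provide efficient, reliable big-data processing. Hadoop 3 introduced many new features, including container support (for example Docker containers on YARN), GPU scheduling, and erasure coding in HDFS, which make it better suited to modern large-scale data processing. In addition, ecosystem tools such as Hive, Pig, and Spark run on top of Hadoop and make it easier to process and analyze big data.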

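To set up a distributed Hadoop cluster without Docker you have to prepare several virtual machines, which takes time and effort and becomes sluggish on a low-spec computer. Running the Hadoop master and worker services in Docker containers is a much better choice.

Here I use scripts to build the containers and configure a distributed HDFS cluster automatically. A Hadoop environment for learning purposes is ready within a few minutes.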

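Prerequisites

- CentOS 7 (in principle 8 and 9 should work too)

- Docker

The configuration files are available from the network-drive link at the end of this post. The files on the drive are the most recent version and take precedence over what is shown here.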

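Introduction

The default is 3 nodes (hadoop-master, hadoop-slave1, hadoop-slave2). To add nodes, edit start.sh and change 3 to another number, or pass the node count as the first argument to the script.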

# the default node number is 3

n=${1:-3}
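To make the `${1:-3}` default more concrete, here is a minimal sketch of the same idea with some input checking added. The function name `parse_node_count` is made up for this example and is not part of start.sh.

```shell
# parse_node_count is a hypothetical helper, not part of start.sh.
# It echoes the node count, defaulting to 3 when no argument is given,
# and rejects anything that is not a positive integer.
parse_node_count() {
    count=${1:-3}
    case "$count" in
        *[!0-9]*)
            echo "node count must be a positive integer" >&2
            return 1
            ;;
    esac
    if [ "$count" -lt 1 ]; then
        echo "node count must be at least 1" >&2
        return 1
    fi
    echo "$count"
}

parse_node_count        # prints 3
parse_node_count 5      # prints 5
```

A count of 1 would mean a master with no slaves, which matches how the loops in start.sh behave when `n` is 1.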

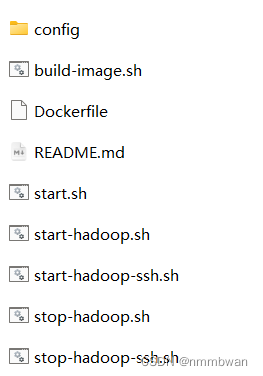

Directory structure

These are the script files; the config directory holds the Hadoop configuration files.

Building the image

Dockerfile

# use a base image
FROM centos:centos7.9.2009
MAINTAINER thly <nmmbwan@163.com>

# install openssh-server, openjdk and wget
RUN yum update -y && yum install -y openssh-server openssh-clients java-1.8.0-openjdk java-1.8.0-openjdk-devel wget net-tools sudo systemctl

# install hadoop 3.2.3
RUN wget https://mirrors.aliyun.com/apache/hadoop/core/hadoop-3.2.3/hadoop-3.2.3.tar.gz && \
    tar -xzvf hadoop-3.2.3.tar.gz && \
    mv hadoop-3.2.3 /usr/local/hadoop && \
    rm -f hadoop-3.2.3.tar.gz

# set environment variable
ENV JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk
ENV HADOOP_HOME=/usr/local/hadoop
ENV PATH=$PATH:/usr/local/hadoop/bin:/usr/local/hadoop/sbin

# create or overwrite the /etc/profile.d/my_env.sh file
RUN echo 'export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk' > /etc/profile.d/my_env.sh && \
    echo 'export HADOOP_HOME=/usr/local/hadoop' >> /etc/profile.d/my_env.sh && \
    echo 'export PATH=$PATH:/usr/local/hadoop/bin:/usr/local/hadoop/sbin' >> /etc/profile.d/my_env.sh

# make the script executable
RUN chmod +x /etc/profile.d/my_env.sh

# create user hadoop
RUN useradd hadoop

# set password for user hadoop to 'hadoop'
RUN echo 'hadoop:hadoop' | chpasswd

# set password for user root to 'qwer1234'
RUN echo 'root:qwer1234' | chpasswd

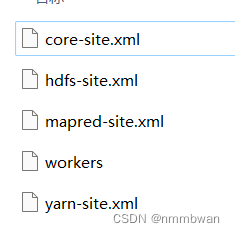

COPY config/* /tmp/

RUN mv /tmp/hadoop-env.sh /usr/local/hadoop/etc/hadoop/hadoop-env.sh && \
    mv /tmp/hdfs-site.xml $HADOOP_HOME/etc/hadoop/hdfs-site.xml && \
    mv /tmp/core-site.xml $HADOOP_HOME/etc/hadoop/core-site.xml && \
    mv /tmp/mapred-site.xml $HADOOP_HOME/etc/hadoop/mapred-site.xml && \
    mv /tmp/yarn-site.xml $HADOOP_HOME/etc/hadoop/yarn-site.xml && \
    mv /tmp/workers $HADOOP_HOME/etc/hadoop/workers && \
    # mv /tmp/start-hadoop.sh ~/start-hadoop.sh && \
    mv /tmp/run-wordcount.sh ~/run-wordcount.sh

#chmod +x ~/start-hadoop.sh && \
RUN chmod +x ~/run-wordcount.sh && \
    chmod +x $HADOOP_HOME/sbin/start-dfs.sh && \
    chmod +x $HADOOP_HOME/sbin/start-yarn.sh

# allow user hadoop to use sudo
RUN echo 'hadoop ALL=(ALL) ALL' > /etc/sudoers.d/hadoop && \
    chmod 440 /etc/sudoers.d/hadoop

WORKDIR /home/hadoop

# switch to user hadoop
USER hadoop

RUN mkdir -p ~/hdfs/namenode && \
    mkdir -p ~/hdfs/datanode && \
    mkdir $HADOOP_HOME/logs && \
    chmod -R 755 ~/hdfs && \
    chmod -R 755 $HADOOP_HOME/logs

#USER root
#CMD [ "sh", "-c", "systemctl start sshd;bash" ]
#CMD ["/sbin/init"]

# ssh without key
RUN ssh-keygen -t rsa -f ~/.ssh/id_rsa -P '' && \
    cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# format namenode
# RUN /usr/local/hadoop/bin/hdfs namenode -format

USER root

# note: only the last CMD in a Dockerfile takes effect, so the container runs /sbin/init
CMD [ "sh", "-c", "systemctl start sshd;bash" ]
CMD ["/sbin/init"]

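Generating the image

build-image.sh script

#!/bin/bash

echo -e "\nbuild docker hadoop image\n"

# thly/hadoop:3.0 can be changed to a name of your own. If you change it,
# remember to change the image name that start.sh launches as well.
# The success message is printed only if the build succeeds.
docker build -t thly/hadoop:3.0 . && \
    echo -e "\nImage built successfully; the image name is thly/hadoop:3.0"

Run sh build-image.sh to build the image.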

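Starting the containers

Run sh start.sh to start the containers. By default 2 DataNodes are started; edit the script or pass a larger node count to start more.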

#!/bin/bash

image_name="thly/hadoop:3.0"
network_name="bigdata"
subnet="172.10.1.0/24"
node_ip="172.10.1"
gateway="172.10.1.1"

# define an associative array to store ip addresses and hostnames
# (it must be declared with -A because the keys are ip strings, not integers)
declare -A ip_and_hostnames

# the default node number is 3
n=${1:-3}

# check whether the image exists
if docker image inspect $image_name &> /dev/null; then
    echo "Image $image_name exists."
else
    echo "Image $image_name does not exist. Building it."
    sh build-image.sh
fi

# check if the network already exists
if ! docker network inspect $network_name &> /dev/null; then
    # create the network with specified subnet and gateway
    docker network create --subnet=$subnet --gateway $gateway $network_name
    echo "created network $network_name with subnet $subnet and gateway $gateway"
else
    echo "network $network_name already exists"
fi

ip_and_hostnames["$node_ip.2"]="hadoop-master"
for ((j=1; j<$n; j++)); do
    ip_and_hostnames["$node_ip.$((j+2))"]="hadoop-slave$j"
done

# start hadoop master container
docker rm -f hadoop-master &> /dev/null
echo "start hadoop-master container..."
docker run -itd \
    --net=$network_name \
    --ip $node_ip.2 \
    -p 9870:9870 \
    -p 9864:9864 \
    -p 19888:19888 \
    --name hadoop-master \
    --hostname hadoop-master \
    --privileged=true \
    thly/hadoop:3.0

# start hadoop slave container
i=1
while [ $i -lt $n ]
do
    docker rm -f hadoop-slave$i &> /dev/null
    echo "start hadoop-slave$i container..."
    # only hadoop-slave1 publishes port 8088
    if [ $i -eq 1 ]; then
        port="-p 8088:8088"
    else
        port=""
    fi
    docker run -itd \
        --net=$network_name \
        --ip $node_ip.$((i+2)) \
        --name hadoop-slave$i \
        --hostname hadoop-slave$i \
        $port \
        -p $((9864+i)):9864 \
        --privileged=true \
        thly/hadoop:3.0
    i=$(( $i + 1 ))
done

# iterate over the associative array and add each ip and hostname
# to the /etc/hosts file inside every container
for key in "${!ip_and_hostnames[@]}"; do
    value="${ip_and_hostnames[$key]}"
    echo "key: $key, value: $value"
    # skip the container's own entry
    if [ "$value" != "hadoop-master" ]; then
        echo "configure hadoop-master container"
        docker exec -it hadoop-master bash -c "sudo echo '$key $value' >> /etc/hosts;sudo -u hadoop ssh-copy-id -o StrictHostKeyChecking=no $key"
    fi
    for ((i=1; i<$n; i++)); do
        if [[ "$value" != hadoop-slave$i ]]; then
            echo "configure hadoop-slave$i container"
            docker exec -it hadoop-slave$i bash -c "sudo echo '$key $value' >> /etc/hosts;sudo -u hadoop ssh-copy-id -o StrictHostKeyChecking=no $key"
        fi
    done
done

# format namenode
docker exec -it -u hadoop hadoop-master bash -c '$HADOOP_HOME/bin/hdfs namenode -format'
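The addressing scheme used by start.sh can be summarized in a few lines. The following sketch only prints the plan (IP address, hostname, and the host port mapped to container port 9864) and touches no containers. `print_plan` is a hypothetical name introduced here for illustration.

```shell
# print_plan is a hypothetical helper, not part of start.sh.
# For N nodes it prints "ip hostname host_port" per line, following the
# same scheme as start.sh: the master gets .2, slave i gets .(i+2),
# and container port 9864 is published on host port 9864+i.
print_plan() {
    prefix=${1:-172.10.1}
    n=${2:-3}
    echo "$prefix.2 hadoop-master 9864"
    i=1
    while [ "$i" -lt "$n" ]; do
        echo "$prefix.$((i + 2)) hadoop-slave$i $((9864 + i))"
        i=$((i + 1))
    done
}

print_plan 172.10.1 3
# 172.10.1.2 hadoop-master 9864
# 172.10.1.3 hadoop-slave1 9865
# 172.10.1.4 hadoop-slave2 9866
```

Printing the plan first is a cheap way to check for port or address clashes on the host before any container is created.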

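Starting Hadoop

There are two startup scripts: (1) start via docker exec, (2) start via ssh login.

1. Start with docker exec

Run sh start-hadoop.sh to start the Hadoop services. Only services such as the NameNode need to be started explicitly.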

#!/bin/bash

echo "starting hadoop-master dfs..."
docker exec -d -u hadoop hadoop-master bash -c '$HADOOP_HOME/sbin/start-dfs.sh'

# give HDFS time to come up before starting YARN
sleep 60
echo "hdfs started"

echo "starting hadoop-slave1 yarn..."
docker exec -d -u hadoop hadoop-slave1 bash -c '$HADOOP_HOME/sbin/start-yarn.sh'
echo "yarn started"

echo "starting hadoop-master historyserver ..."
docker exec -d -u hadoop hadoop-master bash -c '$HADOOP_HOME/bin/mapred --daemon start historyserver'
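The fixed 60-second sleep in the docker exec script is a simple way to wait for HDFS. As an alternative, here is a minimal sketch of a polling helper that retries a command until it succeeds or a limit is reached. `wait_until` is a hypothetical name, and the readiness check shown in the trailing comment (`hdfs dfsadmin -safemode get`) is just one possible choice; the original scripts do not use it.

```shell
# wait_until TRIES DELAY CMD...  (hypothetical helper, not in the original scripts)
# Runs CMD up to TRIES times, sleeping DELAY seconds between attempts.
# Returns 0 as soon as CMD succeeds, 1 if every attempt fails.
wait_until() {
    tries=$1
    delay=$2
    shift 2
    attempt=1
    while [ "$attempt" -le "$tries" ]; do
        if "$@" > /dev/null 2>&1; then
            return 0
        fi
        # sleep only when another attempt will follow
        if [ "$attempt" -lt "$tries" ]; then
            sleep "$delay"
        fi
        attempt=$((attempt + 1))
    done
    return 1
}

# Possible use in place of the fixed sleep (assumes the containers are running):
#   wait_until 30 2 docker exec -u hadoop hadoop-master \
#       bash -c '$HADOOP_HOME/bin/hdfs dfsadmin -safemode get'
```

Compared with a fixed sleep, polling returns as soon as the check passes and reports a failure when the service never comes up.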

2. Start with ssh

#!/bin/bash

# get hadoop-master ip address
hadoop_master_ip=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' hadoop-master)

# get hadoop-slave1 ip address
hadoop_slave1_ip=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' hadoop-slave1)

echo " =================== starting the hadoop cluster ==================="

echo " --------------- starting hdfs ---------------"
ssh hadoop@$hadoop_master_ip '$HADOOP_HOME/sbin/start-dfs.sh'

echo " --------------- starting yarn ---------------"
ssh hadoop@$hadoop_slave1_ip '$HADOOP_HOME/sbin/start-yarn.sh'

echo " --------------- starting historyserver ---------------"
ssh hadoop@$hadoop_master_ip '$HADOOP_HOME/bin/mapred --daemon start historyserver'

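Accessing Hadoop

Open the web UI at http://ip:9870, where ip is the IP address of the host machine.

To work inside the Hadoop environment, run docker exec -it -u hadoop hadoop-master bash to enter the container. Run all Hadoop commands as the hadoop user.

The hadoop account's credentials are hadoop:hadoop.

The root account's password is qwer1234.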
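For reference, the ports published by start.sh correspond to several web pages, not just the one on 9870. This sketch simply prints the URLs for a given host address. `print_ui_urls` is a hypothetical name, and the labels assume Hadoop 3's default web ports (9870 NameNode, 8088 YARN ResourceManager, 19888 JobHistory server, 9864 DataNode) are unchanged in the configuration files.

```shell
# print_ui_urls is a hypothetical helper; it only formats strings.
# HOST is the address of the machine running Docker (default 127.0.0.1).
print_ui_urls() {
    host=${1:-127.0.0.1}
    echo "NameNode:        http://$host:9870"
    echo "ResourceManager: http://$host:8088"
    echo "JobHistory:      http://$host:19888"
    echo "DataNode:        http://$host:9864"
}

print_ui_urls 192.168.1.10
```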

Stopping Hadoop

1. Stop with docker exec

#!/bin/bash

echo "stopping hadoop-master historyserver ..."
docker exec -it -u hadoop hadoop-master bash -c '$HADOOP_HOME/bin/mapred --daemon stop historyserver'

echo "stopping hadoop-slave1 yarn..."
docker exec -it -u hadoop hadoop-slave1 bash -c '$HADOOP_HOME/sbin/stop-yarn.sh'
echo "yarn stopped"

echo "stopping hadoop-master dfs..."
docker exec -it -u hadoop hadoop-master bash -c '$HADOOP_HOME/sbin/stop-dfs.sh'
echo "hdfs stopped"

2. Stop with ssh

#!/bin/bash

# get hadoop-master ip address
hadoop_master_ip=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' hadoop-master)

# get hadoop-slave1 ip address
hadoop_slave1_ip=$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' hadoop-slave1)

echo " =================== stopping the hadoop cluster ==================="

echo " --------------- stopping historyserver ---------------"
ssh hadoop@$hadoop_master_ip '$HADOOP_HOME/bin/mapred --daemon stop historyserver'

echo " --------------- stopping yarn ---------------"
ssh hadoop@$hadoop_slave1_ip '$HADOOP_HOME/sbin/stop-yarn.sh'

echo " --------------- stopping hdfs ---------------"
ssh hadoop@$hadoop_master_ip '$HADOOP_HOME/sbin/stop-dfs.sh'
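The stop scripts run the same three steps as the start scripts, in reverse order. A minimal sketch of that ordering is given below: a hypothetical `cluster_plan` function that only prints which container runs which command and executes nothing.

```shell
# cluster_plan ACTION  (hypothetical helper, prints only; ACTION is start or stop)
# Each output line is "container command". The single quotes keep
# $HADOOP_HOME literal so that it would be expanded inside the container.
cluster_plan() {
    case "$1" in
        start)
            printf '%s\n' \
                'hadoop-master $HADOOP_HOME/sbin/start-dfs.sh' \
                'hadoop-slave1 $HADOOP_HOME/sbin/start-yarn.sh' \
                'hadoop-master $HADOOP_HOME/bin/mapred --daemon start historyserver'
            ;;
        stop)
            printf '%s\n' \
                'hadoop-master $HADOOP_HOME/bin/mapred --daemon stop historyserver' \
                'hadoop-slave1 $HADOOP_HOME/sbin/stop-yarn.sh' \
                'hadoop-master $HADOOP_HOME/sbin/stop-dfs.sh'
            ;;
        *)
            echo "usage: cluster_plan start|stop" >&2
            return 1
            ;;
    esac
}

cluster_plan start
cluster_plan stop
```

Keeping both orders in one place makes it harder for the start and stop scripts to drift apart when a service is added.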

Removing the containers and the image

This removes the hadoop-master and hadoop-slave containers and the Hadoop image.

#!/bin/bash

prefixes=("hadoop-slave" "hadoop-master")

for prefix in "${prefixes[@]}"; do
    # get a list of container ids with the specified prefix
    container_ids=$(docker ps -aq --filter "name=${prefix}")

    # start remove master and slave container
    if [ -z "$container_ids" ]; then
        echo "no containers with the prefix '${prefix}' found."
    else
        for container_id in $container_ids; do
            # stop and remove each container
            docker stop "$container_id"
            docker rm "$container_id"
            echo "container ${container_id} removed."
        done
    fi
done

# remove the image
docker rmi thly/hadoop:3.0
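One detail worth knowing: Docker's name filter matches any part of a container name, so name=hadoop-master would also select a container called, say, my-hadoop-master-test. If that matters on your machine, a stricter prefix match can be done in the shell. The sketch below filters a list of names read from standard input. `filter_by_prefix` is a hypothetical helper, and the container names in the example are invented.

```shell
# filter_by_prefix PREFIX  (hypothetical helper)
# Reads names, one per line, from stdin and prints only those
# that start with PREFIX.
filter_by_prefix() {
    prefix=$1
    while IFS= read -r name; do
        case "$name" in
            "$prefix"*) echo "$name" ;;
        esac
    done
}

printf '%s\n' hadoop-master my-hadoop-master-test hadoop-slave1 | filter_by_prefix hadoop-
# prints hadoop-master and hadoop-slave1

# Possible use with Docker (assumes docker is installed):
#   docker ps -a --format '{{.Names}}' | filter_by_prefix hadoop-slave
```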

Configuration files: docker-hadoop

https://www.aliyundrive.com/s/ddgsifqeoml

Extraction code: 6n2u

Reference: https://zhuanlan.zhihu.com/p/56481503
