Preface

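Keepalived is a high-performance high-availability solution for clusters. It provides heartbeat detection between cluster nodes, health checking and failover, and it natively supports LVS load-balancing clusters. Besides the native LVS + Keepalived combination, Nginx + Keepalived is now also in common use. Below I walk through an Nginx + Keepalived deployment in detail.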

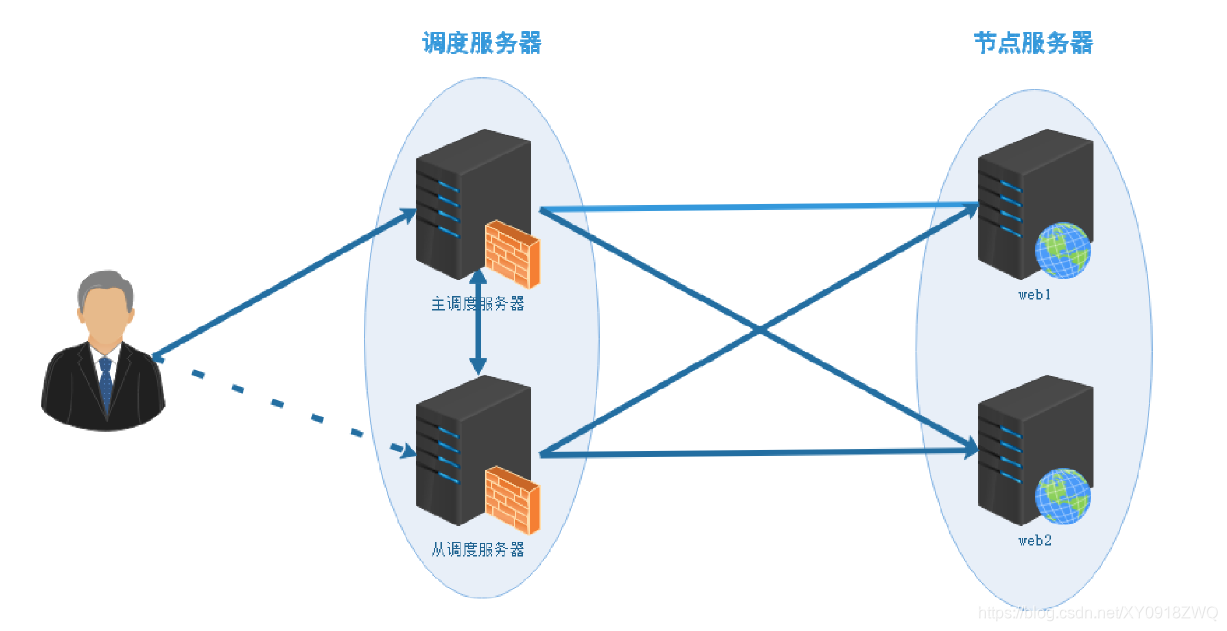

1. Architecture Design

Topology of the load-balancing system

2. Environment Preparation

| Role | Host | IP | Software installed | OS |
|---|---|---|---|---|
| Nginx proxy, Keepalived MASTER | node01 | 192.168.5.11 | nginx-1.10.0, keepalived | CentOS 7.8 |
| Nginx proxy, Keepalived BACKUP | node02 | 192.168.5.12 | nginx-1.10.0, keepalived | CentOS 7.8 |
| Nginx web server 1 | node03 | 192.168.5.13 | nginx-1.18.0 | CentOS 7.8 |
| Nginx web server 2 | node04 | 192.168.5.14 | nginx-1.18.0 | CentOS 7.8 |
| Client | node05 | 192.168.5.15 | - | CentOS 7.8 |

3. Deployment

Configure Keepalived on the front-end nodes

---node01

```
[root@node01 ~]# vim /etc/keepalived/keepalived.conf
```

```
! configuration file for keepalived

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from alexandre.cassen@firewall.loc
    smtp_server 192.168.5.10
    smtp_connect_timeout 30
    router_id lvs_devel1
}

# Run the nginx check script every 2 seconds
vrrp_script check_nginx_service {
    script "/etc/keepalived/check_web_server_keepalive.sh"
    #script "killall -0 nginx"
    interval 2
}

vrrp_instance vi_1 {
    # MASTER/BACKUP must be written in upper case
    state MASTER
    interface ens33
    # Must be identical on node01 and node02
    virtual_router_id 51
    # Higher than node02 (100), so node01 holds the VIP while healthy
    priority 200
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        check_nginx_service
    }
    virtual_ipaddress {
        192.168.5.100
    }
}
```

```
[root@node01 ~]# systemctl restart keepalived.service
```

---node02

```
[root@node02 ~]# vim /etc/keepalived/keepalived.conf
```

```
! configuration file for keepalived

global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from alexandre.cassen@firewall.loc
    smtp_server 192.168.5.10
    smtp_connect_timeout 30
    router_id lvs_devel2
}

# Run the nginx check script every 2 seconds
vrrp_script check_nginx_service {
    script "/etc/keepalived/check_web_server_keepalive.sh"
    #script "killall -0 nginx"
    interval 2
}

vrrp_instance vi_1 {
    state BACKUP
    interface ens33
    # Same virtual router ID as node01
    virtual_router_id 51
    # Lower than node01 (200), so node02 stays BACKUP while node01 is healthy
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    track_script {
        check_nginx_service
    }
    virtual_ipaddress {
        192.168.5.100
    }
}
```

```
[root@node02 ~]# systemctl restart keepalived.service
```
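The two files must agree on the VRRP group settings: the same `virtual_router_id`, `advert_int` and `auth_pass`. In addition, node01 needs the higher `priority` to be elected MASTER. Below is a minimal sketch that checks this for two copies of the file.

The helper names `conf_value` and `check_vrrp_pair` are hypothetical. The parser is deliberately naive: it takes the first line whose first word is the key, which is enough for single-instance files like the ones above.

```bash
#!/bin/bash
# conf_value FILE KEY: print the value on the first line whose first word is KEY
# (naive: assumes one vrrp_instance per file, as in this setup)
conf_value() {
    awk -v key="$2" '$1 == key { print $2; exit }' "$1"
}

# check_vrrp_pair MASTER_CONF BACKUP_CONF: return 0 if the pair looks consistent
check_vrrp_pair() {
    local m=$1 b=$2 key mp bp
    # Both nodes must agree on these to form one VRRP group
    for key in virtual_router_id advert_int auth_pass; do
        if [ "$(conf_value "$m" "$key")" != "$(conf_value "$b" "$key")" ]; then
            echo "mismatch: $key" >&2
            return 1
        fi
    done
    # The intended MASTER needs a strictly higher priority than the BACKUP
    mp=$(conf_value "$m" priority)
    bp=$(conf_value "$b" priority)
    if [ -z "$mp" ] || [ -z "$bp" ] || [ "$mp" -le "$bp" ]; then
        echo "MASTER priority must be higher than BACKUP priority" >&2
        return 1
    fi
    return 0
}
```

For example, after copying node02's file to node01 as `/tmp/node02-keepalived.conf` (a hypothetical path), run `check_vrrp_pair /etc/keepalived/keepalived.conf /tmp/node02-keepalived.conf && echo OK`.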

Configure Nginx load balancing on the front-end nodes

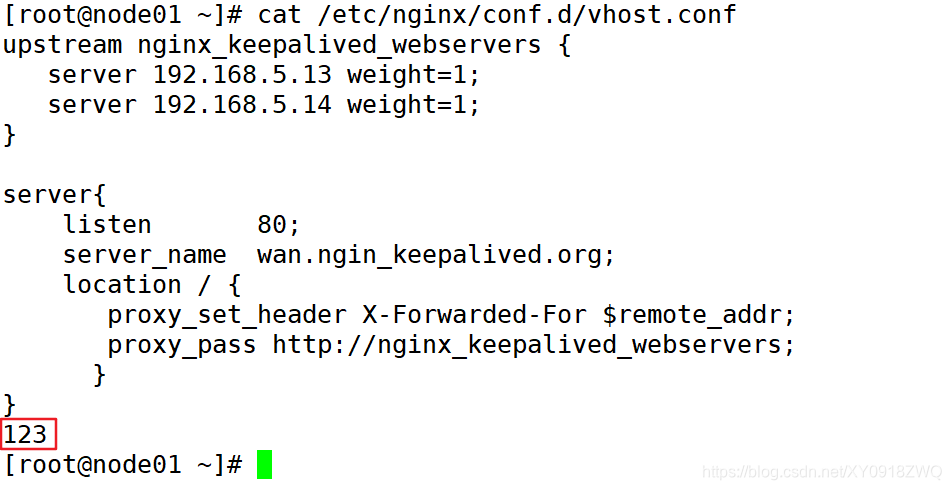

---node01

```
[root@node01 ~]# mv /etc/nginx/conf.d/default.conf{,.bak}
[root@node01 ~]# vim /etc/nginx/conf.d/vhost.conf
```

```
# Backend pool: equal weights, so requests are shared evenly between node03 and node04
upstream nginx_keepalived_webservers {
    server 192.168.5.13:80 weight=1;
    server 192.168.5.14:80 weight=1;
}

server {
    listen 80;
    server_name wan.ngin_keepalived.org;

    location / {
        # Pass the client address on to the backends
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_pass http://nginx_keepalived_webservers;
    }
}
```

```
[root@node01 ~]# systemctl restart nginx
```

---node02

```
[root@node02 ~]# mv /etc/nginx/conf.d/default.conf{,.bak}
[root@node02 ~]# vim /etc/nginx/conf.d/vhost.conf
```

```
# Same configuration as on node01
upstream nginx_keepalived_webservers {
    server 192.168.5.13:80 weight=1;
    server 192.168.5.14:80 weight=1;
}

server {
    listen 80;
    server_name wan.ngin_keepalived.org;

    location / {
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_pass http://nginx_keepalived_webservers;
    }
}
```

```
[root@node02 ~]# systemctl restart nginx
```
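Both `server` lines carry `weight=1`, so the two backends receive equal shares. As far as I know, nginx's round-robin balancer uses what is usually called smooth weighted round-robin. On every request:

1. Each server's current weight grows by its configured weight.
2. The server with the largest current weight is chosen.
3. The chosen server's current weight is reduced by the sum of all weights.

The sketch below models that rule in bash for illustration only. `smooth_wrr` is a hypothetical helper. Without a shared-memory `zone` directive, each nginx worker process keeps its own copy of this state, so the order a client observes can differ.

```bash
#!/bin/bash
# smooth_wrr ROUNDS SERVER:WEIGHT... : print the server chosen for each of
# ROUNDS requests under smooth weighted round-robin (illustrative model only)
smooth_wrr() {
    local rounds=$1; shift
    local -a names=() weights=() current=()
    local entry total=0 n r i best
    for entry in "$@"; do
        names+=("${entry%:*}")      # text before the last ':' (may contain ':80')
        weights+=("${entry##*:}")   # number after the last ':'
        current+=(0)
        total=$(( total + ${entry##*:} ))
    done
    n=${#names[@]}
    for (( r = 0; r < rounds; r++ )); do
        # Every server gains its configured weight...
        for (( i = 0; i < n; i++ )); do
            current[i]=$(( current[i] + weights[i] ))
        done
        # ...the largest current weight wins (the first one on ties)...
        best=0
        for (( i = 1; i < n; i++ )); do
            if (( current[i] > current[best] )); then best=$i; fi
        done
        # ...and the winner pays back the total, which spreads heavy servers out
        current[best]=$(( current[best] - total ))
        echo "${names[best]}"
    done
}
```

`smooth_wrr 4 192.168.5.13:80:1 192.168.5.14:80:1` alternates between the two backends. With weights 5, 1 and 1 (`smooth_wrr 7 a:5 b:1 c:1`), the heavy server's picks are interleaved as `a a b a c a a` rather than sent in one burst.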

Configure the Nginx monitoring script on the front-end nodes

---node01

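```
[root@node01 ~]# vim /etc/keepalived/check_web_server_keepalive.sh
```

```bash
#!/bin/bash
# Count running nginx processes (-C selects processes by command name)
http_status=$(ps -C nginx --no-header | wc -l)

if [ "$http_status" -eq 0 ]; then
    # nginx is down: try to start it again
    systemctl start nginx
    sleep 3
    # Still not running: stop keepalived so that the VIP moves to the other node
    if [ "$(ps -C nginx --no-header | wc -l)" -eq 0 ]; then
        systemctl stop keepalived
    fi
fi
```

```
[root@node01 ~]# chmod +x /etc/keepalived/check_web_server_keepalive.sh
```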

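---node02

```
[root@node02 ~]# vim /etc/keepalived/check_web_server_keepalive.sh
```

```bash
#!/bin/bash
# Count running nginx processes (-C selects processes by command name)
http_status=$(ps -C nginx --no-header | wc -l)

if [ "$http_status" -eq 0 ]; then
    # nginx is down: try to start it again
    systemctl start nginx
    sleep 3
    # Still not running: stop keepalived so that the VIP moves to the other node
    if [ "$(ps -C nginx --no-header | wc -l)" -eq 0 ]; then
        systemctl stop keepalived
    fi
fi
```

```
[root@node02 ~]# chmod +x /etc/keepalived/check_web_server_keepalive.sh
```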

Configure the back-end web servers

---node03

```
[root@node03 ~]# yum install nginx-1.18.0-1.el7.ngx.x86_64.rpm -y
[root@node03 ~]# echo "`hostname -i` web test page..." > /usr/share/nginx/html/index.html
[root@node03 ~]# systemctl enable --now nginx
```

---node04

```
[root@node04 ~]# yum install nginx-1.18.0-1.el7.ngx.x86_64.rpm -y
[root@node04 ~]# echo "`hostname -i` web test page..." > /usr/share/nginx/html/index.html
[root@node04 ~]# systemctl enable --now nginx
```

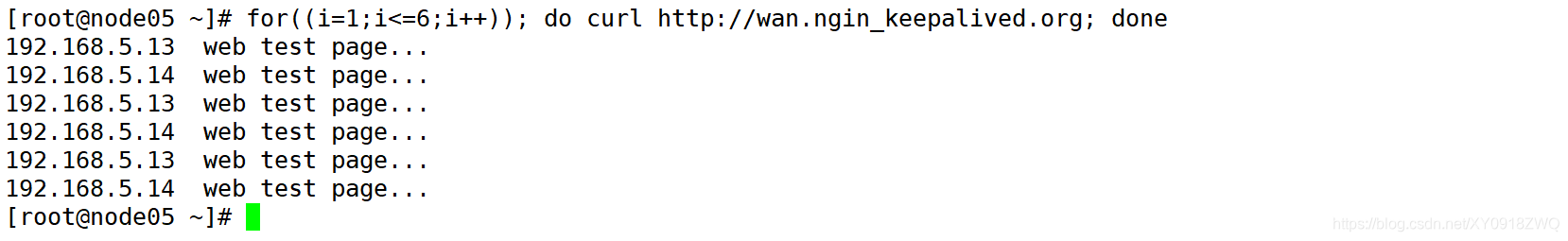

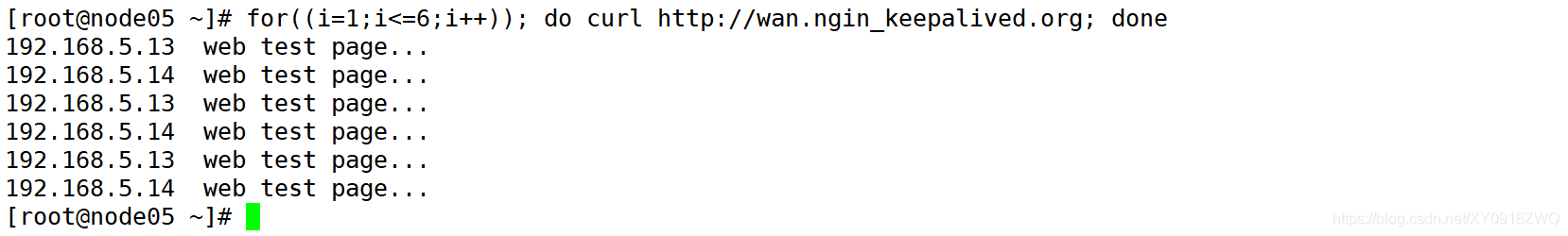

Access the VIP from the client

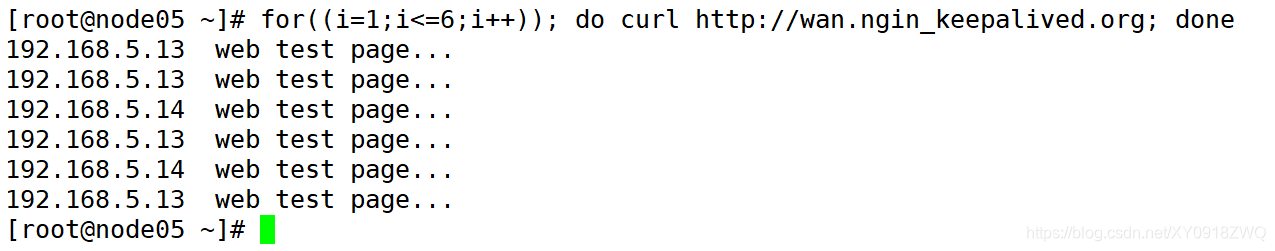

Load balancing of the web service works!
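To see the distribution rather than a single response, the client can fetch the page several times and count the answers. Each backend's test page starts with its own IP (written by `hostname -i`), so the first word identifies the server that answered. A minimal sketch; `tally_backends` is a hypothetical helper.

```bash
#!/bin/bash
# tally_backends: read one response body per line on stdin and print
# "BACKEND_IP COUNT" per backend, sorted by IP
tally_backends() {
    awk '{ count[$1]++ } END { for (ip in count) print ip, count[ip] }' | sort
}
```

For example, on node05: `for i in $(seq 10); do curl -s http://192.168.5.100/; done | tally_backends`. With equal weights the two counts should come out close to each other. They need not be exactly equal, since each nginx worker balances independently.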

4. Testing

On node05, add a hosts entry for the site name
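The hosts entry itself is not listed. It presumably maps the VIP 192.168.5.100 to the `server_name` configured on the proxies, `wan.ngin_keepalived.org`; that mapping is an assumption here. Below is a small idempotent sketch that appends the line only once. `add_hosts_entry` is a hypothetical helper.

```bash
#!/bin/bash
# add_hosts_entry FILE IP NAME: append "IP NAME" to FILE unless that mapping exists
add_hosts_entry() {
    local file=$1 ip=$2 name=$3
    # Succeeds if some line starts with IP and lists NAME among its host names
    if [ -f "$file" ] && awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage on node05: `add_hosts_entry /etc/hosts 192.168.5.100 wan.ngin_keepalived.org`.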

1. Back-end health checking

Access the web service through the VIP

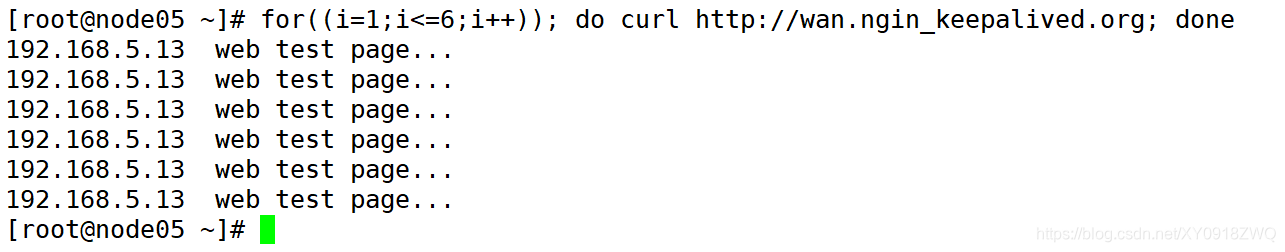

Simulate a back-end service failure

```
[root@node04 ~]# systemctl stop nginx
[root@node04 ~]# systemctl is-active nginx
inactive
```

Access the web service through the VIP

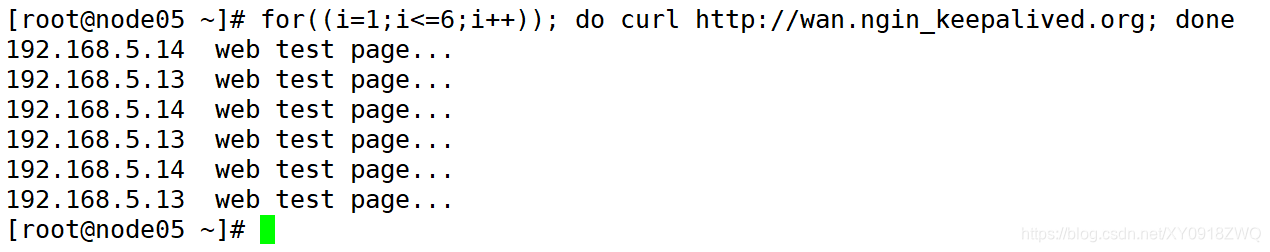

Simulate recovery of the back-end service

```
[root@node04 ~]# systemctl start nginx
[root@node04 ~]# systemctl is-active nginx
active
```

Access the web service through the VIP

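Note: the nginx upstream module health-checks backends passively out of the box. After `max_fails` failed attempts (default 1) within `fail_timeout` (default 10s), a server is considered unavailable for `fail_timeout`, and failed requests are retried on the next server. Active probing with the `health_check` directive is an NGINX Plus feature. HAProxy also has health checks built in.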

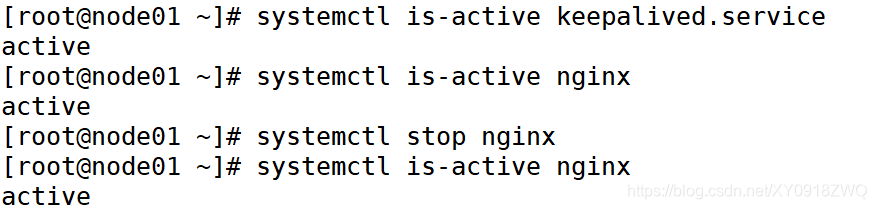
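The behaviour in this test follows from nginx's defaults. When node04's nginx is stopped, connections to it are refused, and nginx counts that as a failure. By default (`proxy_next_upstream error timeout`) nginx retries the request on node03, so the client does not see an error.

To make the passive health check explicit, the `server` lines can carry `max_fails` and `fail_timeout`. The sketch below writes the vhost.conf from section 3 with those parameters added. The values 3 and 30s are assumptions, not tuned recommendations, and `write_vhost` is a hypothetical helper.

```bash
#!/bin/bash
# write_vhost FILE: write the front-end vhost with explicit passive health-check
# settings; max_fails=3 and fail_timeout=30s are illustrative values
write_vhost() {
    # Quoted 'EOF' keeps $remote_addr literal for nginx
    cat > "$1" <<'EOF'
upstream nginx_keepalived_webservers {
    server 192.168.5.13:80 weight=1 max_fails=3 fail_timeout=30s;
    server 192.168.5.14:80 weight=1 max_fails=3 fail_timeout=30s;
}

server {
    listen 80;
    server_name wan.ngin_keepalived.org;

    location / {
        proxy_set_header X-Forwarded-For $remote_addr;
        proxy_pass http://nginx_keepalived_webservers;
        # nginx's default, stated explicitly: retry on connection errors and timeouts
        proxy_next_upstream error timeout;
    }
}
EOF
}
```

On each proxy: `write_vhost /etc/nginx/conf.d/vhost.conf && nginx -t && systemctl reload nginx`.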

2. Keepalived MASTER/BACKUP failover

Check which node holds the Keepalived VIP

node01

node02

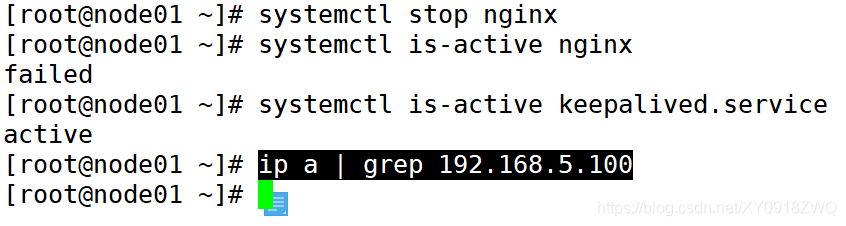

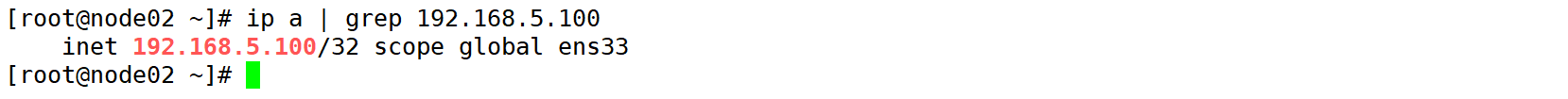
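Instead of reading the full `ip addr` output, one can test directly whether a node currently holds the VIP. keepalived adds 192.168.5.100 to ens33 as an extra address, so it shows up in `ip -o -4 addr show`. A minimal sketch; `vip_present` is a hypothetical helper, and it assumes the one-line-per-address format of `ip -o`.

```bash
#!/bin/bash
# vip_present VIP: read `ip -o -4 addr show` output on stdin and succeed if VIP
# is one of the listed addresses (field 4 looks like "192.168.5.100/32")
vip_present() {
    awk -v vip="$1" '$3 == "inet" && index($4, vip "/") == 1 { found = 1 } END { exit !found }'
}
```

On node01 or node02: `ip -o -4 addr show dev ens33 | vip_present 192.168.5.100 && echo "$(hostname) holds the VIP"`.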

Simulate a failure of the Keepalived MASTER

```
[root@node01 ~]# systemctl stop keepalived.service
```

node01

node02

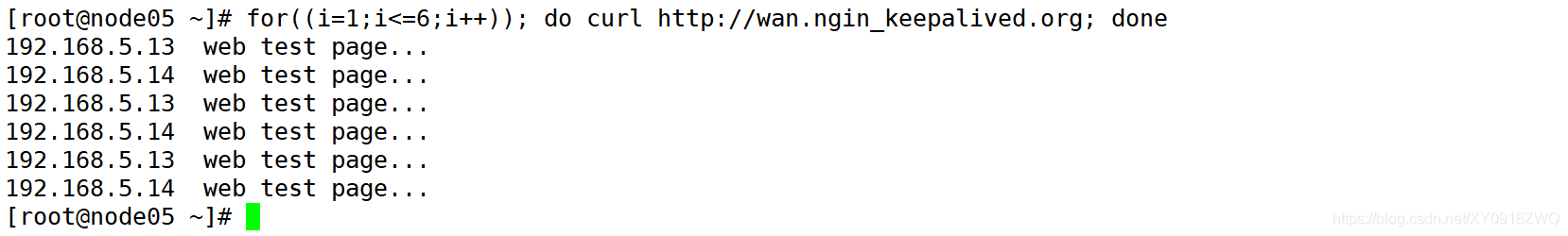

Access to the web service is unaffected.

Simulate recovery of the Keepalived MASTER

```
[root@node01 ~]# systemctl start keepalived.service
```

node01

node02

The Keepalived VIP floats between the nodes as expected!
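The VIP returns to node01 after its keepalived restarts for two reasons: node01 has the higher priority (200 versus 100), and keepalived preempts by default unless `nopreempt` is configured. The VRRP election rule itself is that the highest priority wins, and on a tie the node with the higher primary IP address wins.

Below is a simplified sketch of that rule only. It models no timers, no priority-255 address owner and no `nopreempt`. `vrrp_master` and its input format are hypothetical.

```bash
#!/bin/bash
# vrrp_master: read "NODE PRIORITY PRIMARY_IP" lines for the nodes whose
# keepalived is running and print the node that VRRP would elect as MASTER
vrrp_master() {
    awk '
    # Convert a dotted IPv4 address to a number so addresses compare numerically
    function ip2int(ip,    p) {
        split(ip, p, ".")
        return ((p[1] * 256 + p[2]) * 256 + p[3]) * 256 + p[4]
    }
    {
        prio = $2 + 0
        addr = ip2int($3)
        # Highest priority wins; on a tie, the higher primary IP wins
        if (best == "" || prio > bestprio || (prio == bestprio && addr > bestaddr)) {
            best = $1; bestprio = prio; bestaddr = addr
        }
    }
    END { if (best != "") print best }'
}
```

With both nodes running, `printf 'node01 200 192.168.5.11\nnode02 100 192.168.5.12\n' | vrrp_master` selects node01. With only node02's line (node01's keepalived stopped), it selects node02.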

Test the front-end Nginx load balancers

node01

After the nginx service fails, the monitoring script starts it again automatically!

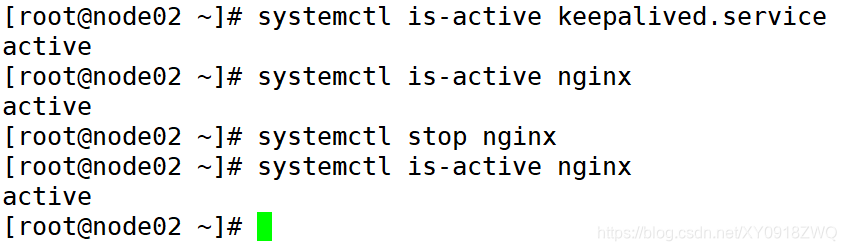

node02

Web access is unaffected.

Simulate nginx on node01 failing to start

node01

node02

Web access is unaffected.

This concludes the hands-on example of deploying a highly available, high-performance load-balanced web cluster with Nginx and Keepalived.
